Author: Biteye Core Contributor Viee

Editor: Biteye Core Contributor Crush

Community: @BiteyeCN

* Approximately 2500 words, estimated reading time 5 minutes

From the AI+DePIN pairing in the first half of the year to the outsized market caps now being created by AI+MEME, tokens such as $GOAT and $ACT have captured most of the market's attention, a sign that AI has become a core sector of the current bull market.

If you are bullish on AI, how should you position yourself? Beyond AI+MEME, which other AI sectors deserve attention?

01 AI+MEME Craze and Computing Infrastructure

Remain optimistic about the explosive power of AI+MEME

AI+MEME has recently swept both on-chain markets and centralized exchanges, and the trend is still going.

If you are an on-chain player, favor tokens with new narratives, strong communities, and small market capitalizations, because the price gap between the primary (on-chain) market and the secondary (exchange) market can create a sizable wealth effect. If you don't trade on-chain, you can consider news arbitrage when a token is announced for listing on an exchange such as Binance.

Explore high-quality targets in the AI infrastructure layer, especially those related to computing power

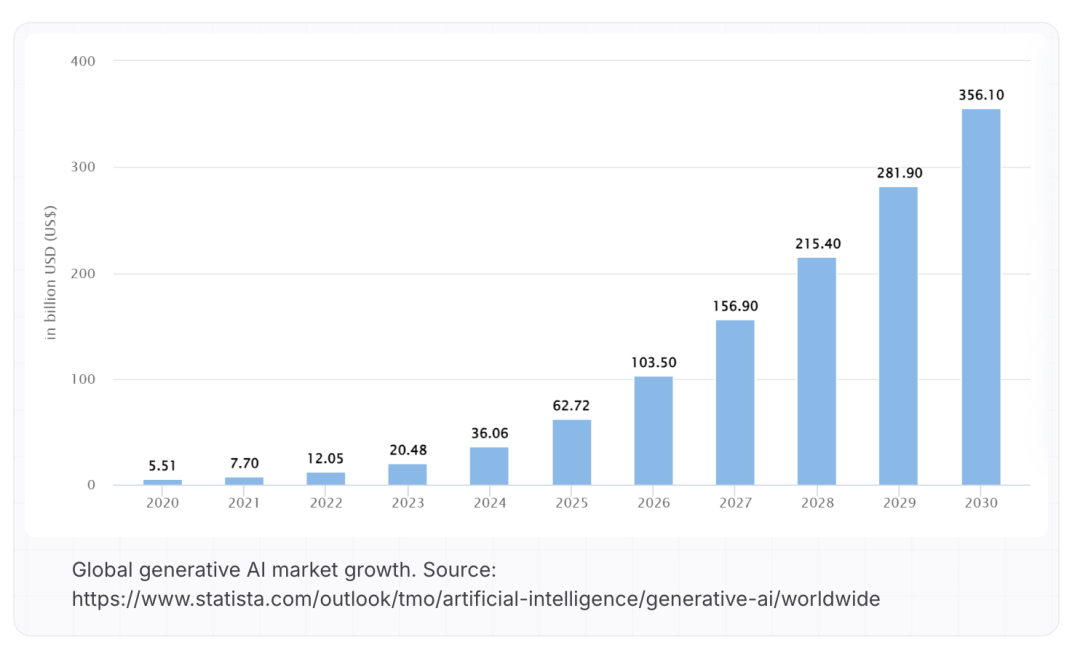

Computing power is a durable narrative in AI development, and cloud computing raises the valuation floor for projects in this space. With the explosive growth in demand for AI computing power, especially since the emergence of large language models (LLMs), demand for computing power and storage has grown almost exponentially.

The development of AI technology is inseparable from powerful computing capabilities, and this demand is not short-term, but a long-term and continuously growing trend. Therefore, projects that provide computing power infrastructure are essentially solving the fundamental problem of AI development and have very strong market appeal.

02 Why is decentralized computing so important?

On the one hand, the explosive growth of AI computing power faces high costs

Data from OpenAI shows that the computing power used to train the largest AI models has doubled roughly every 3.4 months since 2012, far outpacing Moore's Law, which implies a doubling about every two years.
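To make the gap concrete, here is a minimal sketch that compares the two growth rates. It takes a doubling time of 3.4 months for AI training compute, consistent with the figure above, and assumes the common two-year statement of Moore's Law; the function name is illustrative only.

```python
# Assumption: 3.4 months is the doubling time for AI training compute.
DOUBLING_MONTHS_AI = 3.4
# Assumption: Moore's Law stated as a doubling every two years.
DOUBLING_MONTHS_MOORE = 24.0


def growth_factor(months: float, doubling_months: float) -> float:
    """Multiplicative growth after `months`, given a fixed doubling time."""
    return 2.0 ** (months / doubling_months)


if __name__ == "__main__":
    for years in (1, 2, 5):
        months = 12 * years
        ai = growth_factor(months, DOUBLING_MONTHS_AI)
        moore = growth_factor(months, DOUBLING_MONTHS_MOORE)
        print(f"{years} yr: AI compute x{ai:,.0f}, Moore's Law x{moore:.1f}")
```

Under these assumptions, AI training compute grows more than 11x in a single year, versus about 1.4x under Moore's Law.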

With the soaring demand for high-end hardware such as GPUs, the imbalance between supply and demand has led to record-high computing power costs. For example, the initial investment in GPUs alone for training a model like GPT-3 is close to $80 million, and the daily inference cost can reach $700,000.
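A quick back-of-the-envelope calculation shows how these two figures relate. The sketch below uses only the numbers quoted above and assumes the daily inference cost stays flat, which is a simplification; the function names are illustrative.

```python
import math

GPU_UPFRONT_USD = 80_000_000     # initial GPU investment quoted above
INFERENCE_USD_PER_DAY = 700_000  # daily inference cost quoted above


def annual_inference_cost(per_day: int, days: int = 365) -> int:
    """Inference spend over a year, assuming a constant daily cost."""
    return per_day * days


def days_until_inference_exceeds(upfront: int, per_day: int) -> int:
    """First whole day on which cumulative inference spend exceeds `upfront`."""
    return math.floor(upfront / per_day) + 1


if __name__ == "__main__":
    print(annual_inference_cost(INFERENCE_USD_PER_DAY))
    print(days_until_inference_exceeds(GPU_UPFRONT_USD, INFERENCE_USD_PER_DAY))
```

At a flat $700,000 per day, cumulative inference spending passes the $80 million GPU outlay in under four months and reaches about $255 million over a year.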

On the other hand, traditional cloud computing cannot meet the current computing power demand

AI inference has become the core process in AI applications. It is estimated that 90% of the computing resources consumed in the life cycle of AI models are used for inference.

Traditional cloud computing platforms often rely on centralized computing infrastructure, and as computing demand grows rapidly, this model is clearly unable to meet the constantly changing market needs.

03 Project Analysis: Decentralized GPU Cloud Platform Heurist

Heurist (@heurist_ai) has emerged in this context, offering a decentralized, globally distributed GPU network to meet the computing needs of AI inference and other GPU-intensive tasks.

As a decentralized GPU cloud platform, Heurist is designed for compute-intensive workloads such as AI inference.

It is based on the DePIN (Decentralized Physical Infrastructure Network) model, allowing GPU owners to freely contribute their idle computing resources to the network, while users access these resources through simple APIs/SDKs to run AI models, ZK proofs, and other complex tasks.

Unlike traditional GPU cloud platforms, Heurist does away with complex virtual machine management and resource scheduling mechanisms and adopts a serverless computing architecture instead, which simplifies the use and management of computing resources.

04 AI Inference: Heurist's Core Advantages

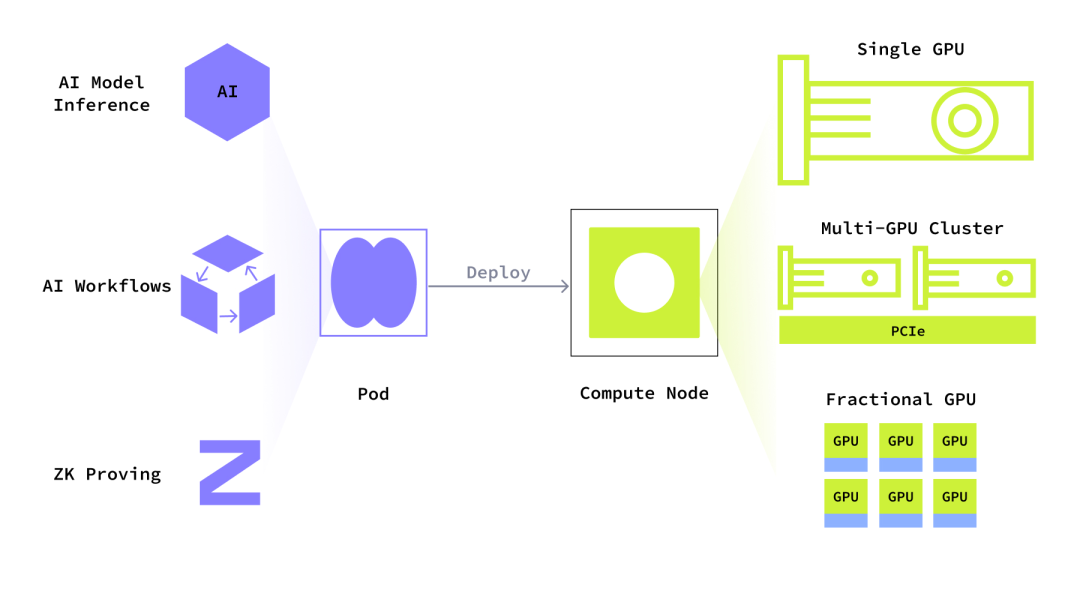

AI inference is the computational process of applying a trained model to real-world use cases. It requires fewer computing resources than AI training and can run efficiently on a single GPU or on one machine with multiple GPUs.

Building on this, Heurist has designed a globally distributed GPU network that schedules computing resources efficiently so that AI inference tasks complete quickly.

At the same time, the tasks supported by Heurist are not limited to AI inference, but also include the training of small language models and model fine-tuning, which are also compute-intensive workloads. Since these tasks do not require intensive inter-node communication, Heurist can allocate resources more flexibly and economically to ensure optimal utilization.
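Because such tasks are independent of one another, they can be placed on any available node without coordination between nodes. The sketch below illustrates this with a simple "longest task first, least-loaded node" rule. It is a toy model under assumed task costs, not Heurist's actual scheduler; the `Node` class, `schedule` function, and node names are hypothetical.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Node:
    """A GPU node, ordered by how many seconds of work it already holds."""
    busy_seconds: float
    name: str = field(compare=False)
    tasks: list = field(default_factory=list, compare=False)


def schedule(task_costs: dict, node_names: list) -> dict:
    """Assign each independent task to the currently least-loaded node.

    `task_costs` maps a task id to its estimated run time in seconds.
    Returns a mapping from node name to the list of task ids it received.
    """
    heap = [Node(0.0, name) for name in node_names]
    heapq.heapify(heap)
    # Place the longest tasks first so short ones can fill the gaps.
    by_cost = sorted(task_costs.items(), key=lambda kv: kv[1], reverse=True)
    for task_id, cost in by_cost:
        node = heapq.heappop(heap)   # least-loaded node so far
        node.tasks.append(task_id)
        node.busy_seconds += cost
        heapq.heappush(heap, node)
    return {node.name: node.tasks for node in heap}


if __name__ == "__main__":
    costs = {"img-1": 4.0, "llm-1": 3.0, "img-2": 2.0, "llm-2": 1.0}
    print(schedule(costs, ["gpu-a", "gpu-b"]))
```

No step in this loop requires one node to talk to another, which is why inference-style workloads suit a loosely connected network of GPUs better than large-scale training does.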

Heurist's technical architecture follows a Platform-as-a-Service (PaaS) model. Users and developers do not have to manage complex infrastructure, so they can focus on deploying and optimizing AI models without worrying about managing or scaling the underlying resources.

05 Heurist Core Functions

Heurist provides a series of powerful functions to meet the needs of different users:

Serverless AI API: Users can run more than 20 fine-tuned image generation models and large language models (LLMs) with just a few lines of code, greatly reducing the technical threshold.

Elastic Scaling: The platform dynamically adjusts computing resources based on user needs to ensure stable service even during peak periods.

Permissionless Mining: GPU owners can join or withdraw from mining activities at any time, and this flexibility attracts a large number of high-performance GPU users to participate.

Free AI Applications: Heurist provides various free applications, including image generation, chatbots, and search engines, allowing ordinary users to directly experience AI technology.
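Two of the functions above, elastic scaling and permissionless mining, interact: the platform can only scale up to the number of GPUs that have currently joined. The sketch below models that relationship in a few lines. It is an illustrative toy, not Heurist's implementation; `MinerPool`, `workers_needed`, `active_workers`, and the capacity numbers are all hypothetical.

```python
import math


class MinerPool:
    """Toy registry in which any GPU owner may join or leave at any time."""

    def __init__(self) -> None:
        self._miners = set()

    def join(self, miner_id: str) -> None:
        self._miners.add(miner_id)

    def leave(self, miner_id: str) -> None:
        # discard() does not raise if the miner has already left.
        self._miners.discard(miner_id)

    def __len__(self) -> int:
        return len(self._miners)


def workers_needed(queued_requests: int, per_worker_capacity: int,
                   min_workers: int = 1) -> int:
    """Workers required to serve the queue, never fewer than `min_workers`."""
    if per_worker_capacity <= 0:
        raise ValueError("per_worker_capacity must be positive")
    return max(min_workers, math.ceil(queued_requests / per_worker_capacity))


def active_workers(pool: MinerPool, queued_requests: int,
                   per_worker_capacity: int) -> int:
    """Scale to demand, capped by how many miners are currently available."""
    return min(len(pool), workers_needed(queued_requests, per_worker_capacity))


if __name__ == "__main__":
    pool = MinerPool()
    for miner in ("miner-a", "miner-b", "miner-c"):
        pool.join(miner)
    print(active_workers(pool, queued_requests=10, per_worker_capacity=4))
    pool.leave("miner-b")
    print(active_workers(pool, queued_requests=10, per_worker_capacity=4))
```

The cap in `active_workers` is the design point: in a permissionless network, supply can shrink at any moment, so peak-time stability depends on keeping enough miners incentivized to stay online.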

06 Heurist's Free AI Applications

The Heurist team has already released several free AI applications suitable for ordinary users' daily use, including the following:

Heurist Imagine: A powerful AI image generator that allows users to easily create artistic works without any design background. 🔗 https://imagine.heurist.ai/

Pondera: An intelligent chatbot that provides a natural and smooth conversational experience, helping users easily obtain information or solve problems. 🔗 https://pondera.heurist.ai/

Heurist Search: An efficient AI search engine that helps users quickly find the information they need, improving work efficiency. 🔗 https://search.heurist.ai/

07 Heurist's Latest Progress

Heurist recently completed a $2 million funding round, with investors including well-known institutions like Amber Group, providing a solid foundation for its future development.

In addition, Heurist is about to hold its token generation event (TGE) and plans to collaborate with OKX Wallet on a campaign for minting AIGC NFTs (non-fungible tokens). The campaign will distribute 100,000 ZK tokens in rewards among 1,000 participants, creating more opportunities for community engagement.

Activity link: https://app.galxe.com/quest/OKXWEB3/GC1JjtVfaM

Computing power is the core driver of AI development, and decentralized computing projects built around it occupy a commanding position in the industry. They address the growing demand for AI computing power and, given the stability and market potential of their infrastructure, have become sought-after targets for capital.

As Heurist continues to expand its ecosystem and launch new features, we have reason to believe that the decentralized AI computing platform will occupy a pivotal position in the global AI industry.