Source: Forbes

Compiled by: MetaverseHub

Last week, reports emerged that OpenAI is raising $6.5 billion in a new round of financing at a valuation of $150 billion.

The funding reaffirms OpenAI’s enormous value as an artificial intelligence startup and also shows that it is willing to make structural changes to attract more investment.

Sources said the massive round is in strong demand among investors given OpenAI's rapid revenue growth and could be finalized within the next two weeks.

Existing investors such as Thrive Capital, Khosla Ventures and Microsoft are expected to participate. New investors including Nvidia and Apple also plan to join, and Sequoia Capital is in talks to return as an investor.

At the same time, OpenAI launched the o1 series, its most sophisticated AI model to date, designed to excel at complex reasoning and problem-solving tasks. The o1 model uses reinforcement learning and chain-of-thought reasoning, representing a major advance in AI capabilities.

OpenAI offers the o1 model to ChatGPT users and developers through different access tiers. In ChatGPT, subscribers to the ChatGPT Plus plan can access the o1-preview model, which has advanced reasoning and problem-solving capabilities.

OpenAI’s application programming interface (API) gives developers on higher usage tiers access to o1-preview and o1-mini.

These models are available to developers in API usage tier 5, a higher usage tier that OpenAI provides for access to its advanced models. Developers at this tier can integrate the advanced features of the o1 models into their own applications.
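As a rough illustration of what API access looks like, the sketch below builds a request body in the Chat Completions format (a model name plus a list of messages). The helper name `build_chat_request` and the example prompt are hypothetical, and the code deliberately stops short of sending anything: an actual call would also need an API key and an account with access to the model.

```python
import json


def build_chat_request(model: str, prompt: str) -> dict:
    """Build a Chat Completions style request body (hypothetical helper)."""
    return {
        "model": model,
        # A single user message; the prompt text here is only an example.
        "messages": [{"role": "user", "content": prompt}],
    }


# Model names are the two variants named in the article.
request = build_chat_request("o1-preview", "Prove that the square root of 2 is irrational.")
mini_request = build_chat_request("o1-mini", "Write a function that reverses a linked list.")

# Show the JSON that would be sent as the request body.
print(json.dumps(request, indent=2))
```

Switching between the two variants only changes the `model` field, which is why applications can choose per request between the stronger and the cheaper model.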

Here are 10 key takeaways about the OpenAI o1 model:

01. Two model variants: o1-preview and o1-mini

OpenAI released two variants: o1-preview and o1-mini. The o1-preview model excels at complex tasks, while o1-mini is a faster, more cost-effective model optimized for STEM fields, especially coding and mathematics.

02. Advanced chain-of-thought reasoning

The o1 model uses a chain-of-thought process to reason step by step before answering. This deliberate approach improves accuracy and helps with complex questions that require multi-step reasoning, making it superior to previous models such as GPT-4.

Chain-of-thought prompting enhances the reasoning ability of artificial intelligence by breaking down complex problems into sequential steps, thereby improving the logical and computational capabilities of the model.
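To make the idea concrete, here is a minimal sketch of chain-of-thought prompting as a prompt-writing technique for an ordinary language model. It does not reflect how o1 works internally. The function names, the prompt wording, and the `Answer:` convention are all assumptions made for illustration, and the sample response is hand-written rather than real model output.

```python
from typing import Optional


def chain_of_thought_prompt(question: str) -> str:
    """Wrap a question with an instruction to reason in explicit, numbered steps."""
    return (
        f"Question: {question}\n"
        "Break the problem into numbered steps and solve them in order.\n"
        "Finish with a single line that starts with 'Answer:'."
    )


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if there is none."""
    for line in reversed(response.splitlines()):
        stripped = line.strip()
        if stripped.startswith("Answer:"):
            return stripped[len("Answer:"):].strip()
    return None


prompt = chain_of_thought_prompt(
    "A train travels 120 km in 2 hours. What is its average speed?"
)

# Hand-written sample response (not real model output), used only to exercise the parser.
sample_response = (
    "1. The train covers 120 km in 2 hours.\n"
    "2. Speed = 120 / 2 = 60 km/h.\n"
    "Answer: 60 km/h"
)

print(prompt)
print(extract_answer(sample_response))
```

Asking for the final answer on its own marked line keeps the intermediate steps visible while still making the result easy to parse.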

OpenAI’s o1 model advances this process by embedding it into the model itself, simulating the human problem-solving process.

This enables o1 to excel in competitive programming, mathematics, and science, while also improving transparency, since users can follow the model’s reasoning process. It marks a leap forward in human-like AI reasoning.

This advanced reasoning capability causes the model to take some time before responding, which may appear slow compared to the GPT-4 series models.

03. Enhanced safety features

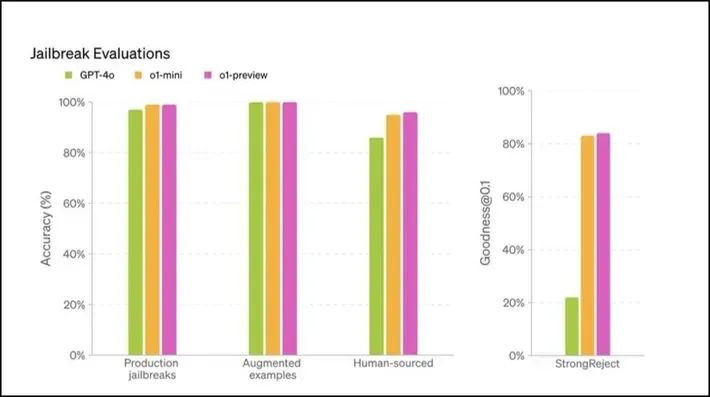

OpenAI has embedded advanced safety mechanisms in the o1 models. These models have demonstrated superior performance in disallowed-content evaluations and have shown resistance to jailbreaking, making them safer to deploy in sensitive use cases.

"Jailbreaking" an AI model means bypassing its safety measures, which can easily lead to harmful or unethical outputs. As AI systems become more complex, the safety risks associated with jailbreaking also increase.

OpenAI's o1 model, especially the o1-preview variant, scored higher in safety tests, showing stronger resistance to such attacks.

This enhanced resilience is due to the model’s advanced reasoning capabilities, which help it better adhere to ethical guidelines and make it more difficult for malicious users to manipulate it.

04. Better performance on STEM benchmarks

The o1 model ranks among the best on various academic benchmarks. For example, o1 ranked in the 89th percentile on Codeforces competitive programming questions and placed among the top 500 students in the US in a qualifier for the USA Mathematical Olympiad.

05. Fewer hallucinations

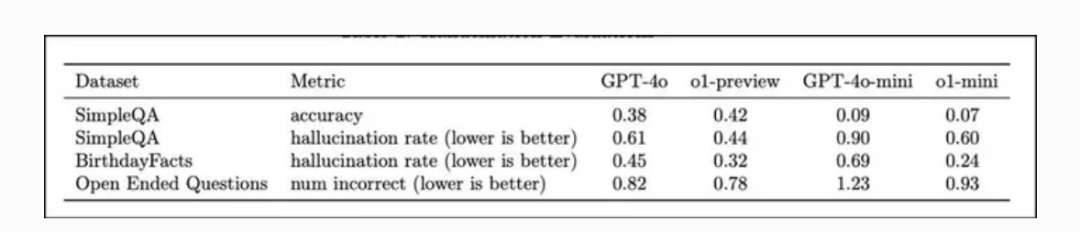

“Hallucination” in large language models refers to the generation of false or unfounded information. OpenAI’s o1 model addresses this problem with advanced reasoning and a chain-of-thought process that lets it think through problems step by step.

Compared with previous models, the o1 model hallucinates less often.

Evaluations on datasets such as SimpleQA and BirthdayFacts show that o1-preview outperforms GPT-4 in providing factual and accurate answers, thereby reducing the risk of misinformation.

06. Training based on diverse datasets

The o1 model is trained on a combination of public, proprietary, and custom datasets, making it proficient in both general knowledge and domain-specific topics. This diversity gives it strong conversational and reasoning capabilities.

07. Price-friendly and cost-effective

OpenAI's o1-mini model is a cost-effective alternative to o1-preview: it is 80% cheaper while still performing strongly in STEM fields such as mathematics and coding.

The o1-mini model is tailored for developers who need high accuracy at a low cost, making it ideal for budget-constrained applications. This pricing strategy ensures that more people, especially educational institutions, startups, and small businesses, can access advanced AI.
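A small worked example shows what an 80% discount means for a token budget. The base price below is a placeholder chosen for easy arithmetic, not OpenAI's actual pricing, and both helper functions are hypothetical.

```python
def discounted_price(base_price: float, discount_percent: float) -> float:
    """Return the price after applying a percentage discount."""
    if not 0 <= discount_percent <= 100:
        raise ValueError("discount_percent must be between 0 and 100")
    return base_price * (100 - discount_percent) / 100


def cost_for_tokens(price_per_million: float, tokens: int) -> float:
    """Return the cost of processing `tokens` tokens at a per-million-token price."""
    return price_per_million * tokens / 1_000_000


# Placeholder price per million tokens (an assumption, not a published figure).
HYPOTHETICAL_PREVIEW_PRICE = 10.0

# "80% cheaper" means paying 20% of the base price.
mini_price = discounted_price(HYPOTHETICAL_PREVIEW_PRICE, 80)

workload_tokens = 5_000_000
preview_cost = cost_for_tokens(HYPOTHETICAL_PREVIEW_PRICE, workload_tokens)
mini_cost = cost_for_tokens(mini_price, workload_tokens)

print(f"preview: {preview_cost:.2f}, mini: {mini_cost:.2f}")
```

Under these assumed numbers the same workload costs one fifth as much on the cheaper model, which is the practical meaning of the 80% figure.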

08. Safety work and external "red team testing"

In the context of large language models (LLMs), “red team testing” refers to rigorously testing AI systems by simulating adversarial attacks, or by probing the model in other ways that could cause it to behave in a harmful, biased, or unintended way.

This is critical to identifying gaps in content safety, misinformation, and ethical boundaries before models are deployed at scale.

Red team testing helps make LLMs safer, more robust, and more ethical by using external testers and varied testing scenarios. This helps ensure that the model is resistant to “jailbreaking” and other forms of manipulation.

Prior to deployment, the o1 model underwent a rigorous safety assessment, including red team testing and Preparedness Framework evaluations. These efforts helped ensure that the model met OpenAI’s high standards for safety and alignment.

09. Fairer, less biased

The o1-preview model outperforms GPT-4 in reducing stereotyped answers. In fairness evaluations, it selects the correct answer more often and also improves in handling ambiguous questions.

10. Chain-of-thought monitoring and deception detection

OpenAI has used experimental techniques to monitor the o1 model’s chain of thought and detect deception, meaning cases where the model intentionally provides false information. Preliminary results suggest that this technique shows promise for reducing the risks of false information generated by the model.

OpenAI’s o1 model represents a significant advance in AI reasoning and problem-solving, particularly in STEM fields such as math, coding, and scientific reasoning.

With the introduction of the high-performance o1-preview and the cost-effective o1-mini, these models are optimized for a range of complex tasks, while extensive red team testing supports stronger safety and ethical compliance.