Author: PonderingDurian, Delphi Digital Researcher

Compiled by: Pzai, Foresight News

Given that cryptocurrencies are essentially open-source software with built-in economic incentives, and AI is disrupting the way software is written, AI will have a huge impact on the entire blockchain field.

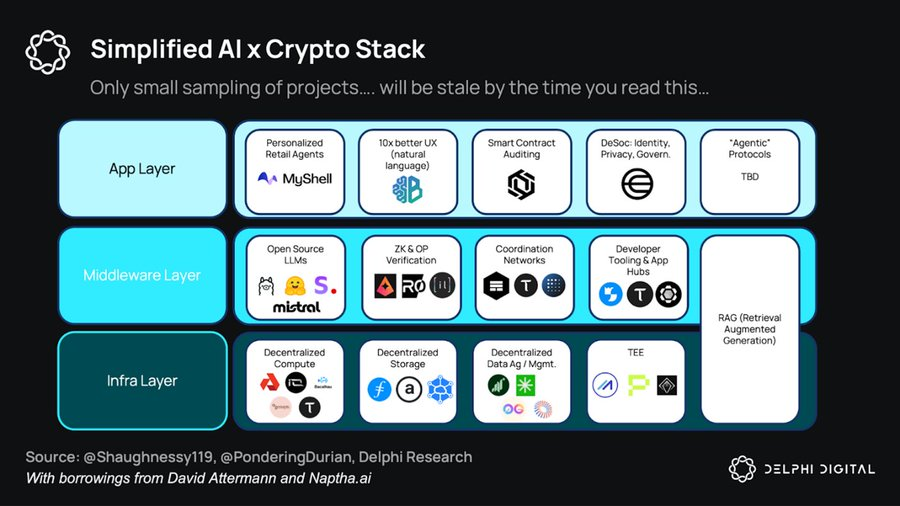

AI x Crypto Overall Stack

DeAI: Opportunities and Challenges

In my view, the biggest challenge facing DeAI is at the infrastructure layer: building foundation models requires a lot of capital, and the returns to scale in data and computation are very high.

Given scaling laws, tech giants have a natural advantage. In the Web2 era they reaped huge profits from the monopoly rents of aggregating consumer demand and, during a decade of artificially low interest rates, reinvested those profits in cloud infrastructure. Now the internet giants are trying to dominate the AI market by cornering data and computation (the key elements of AI):

Comparison of token sizes of large models

Because large-scale training is capital-intensive and has high bandwidth requirements, a unified super-cluster is still the best choice. It gives tech giants the best-performing closed-source models, which they plan to rent out at monopoly-like margins, reinvesting the proceeds in each subsequent generation of products.

However, it turns out that the moat in the AI field is shallower than the network effects of Web2, with leading frontier models rapidly depreciating relative to the field, especially with Meta's "scorched earth policy" of open-sourcing frontier models like Llama 3.1 that reach SOTA performance.

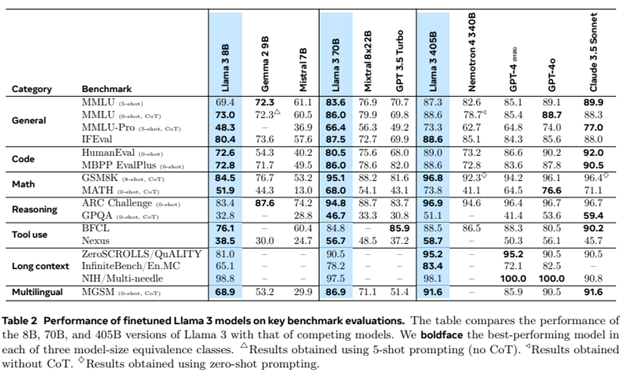

Llama 3 Large Model Scores

At this point, combined with emerging research on low-latency decentralized training methods, (some) frontier commercial models may be commoditized. As the price of intelligence falls, competition will (at least partially) shift from hardware super-clusters (favoring tech giants) to software innovation (slightly favoring open-source/crypto).

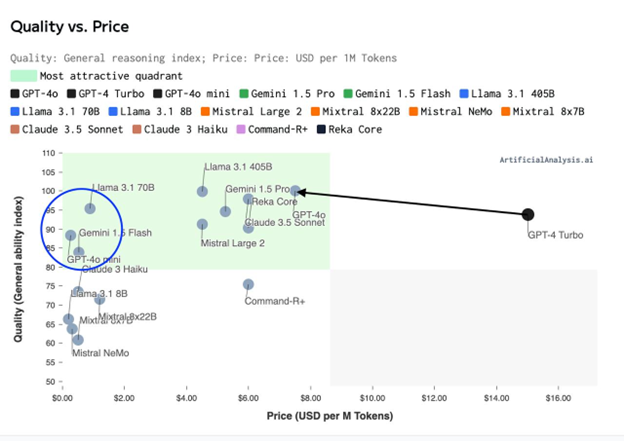

Capability Index (Quality) - Training Price Distribution

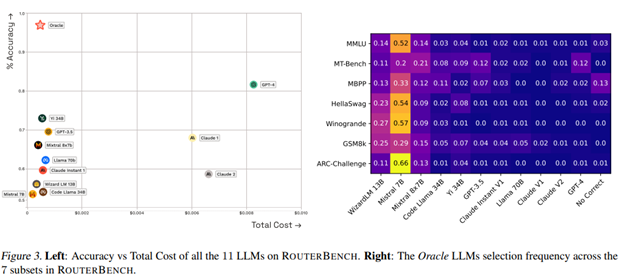

Considering the computational efficiency of "mixture of experts" architectures and large model synthesis/routing, we are likely to face not just a world of 3-5 giant models, but a world composed of millions of models with different cost/performance tradeoffs - an interconnected intelligent mesh (hive).

This constitutes a huge coordination problem, which blockchain and crypto incentive mechanisms should be well-suited to help solve.

Core DeAI Investment Areas

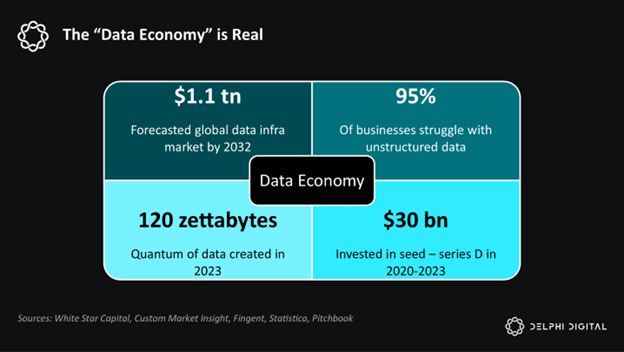

Software is eating the world. AI is eating software. And AI is fundamentally about data and computation.

Delphi is bullish on the various components in this stack:

Simplified AI x Crypto Stack

Infrastructure

Given that AI is powered by data and computation, DeAI infrastructure aims to procure data and computation as efficiently as possible, often using crypto incentive mechanisms. As mentioned earlier, this is the most challenging part of the competition, but given the scale of the end market, it may also be the highest-returning part.

Computation

So far, distributed training protocols and GPU marketplaces have been constrained by latency, but they hope to coordinate latent, heterogeneous hardware to provide lower-cost, on-demand computing services for those priced out of the giants' integrated solutions. Projects like Gensyn, Prime Intellect, and Neuromesh are driving the development of distributed training, while io.net, Akash, and Aethir are enabling lower-cost inference closer to the edge.

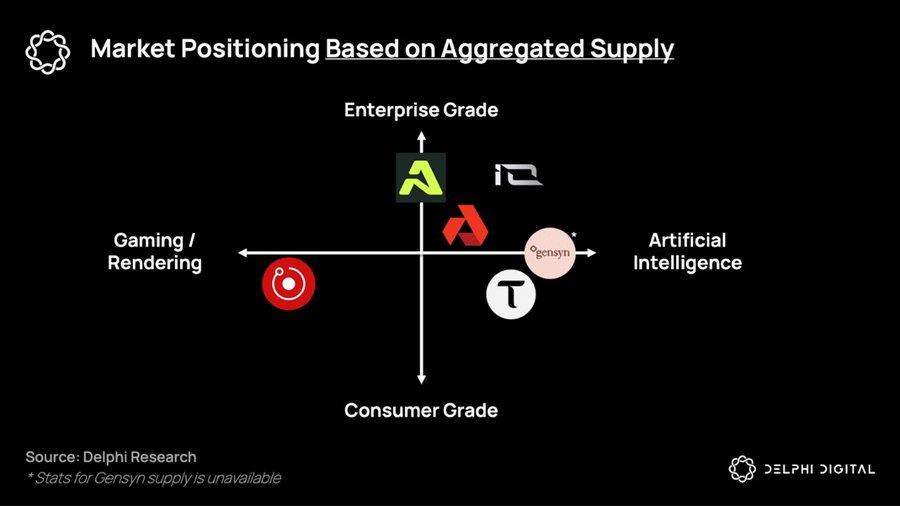

Project ecosystem position distribution based on aggregated supply

Data

In a world of ubiquitous intelligence built on smaller, more specialized models, data assets will become increasingly valuable and easier to monetize.

So far, DePIN has been widely praised for its ability to build lower-cost hardware networks compared to capital-intensive enterprises (such as telecom companies). However, DePIN's biggest potential market will emerge in the collection of new data sets that will flow into on-chain intelligent systems: agent protocols (discussed later).

In this world, the largest potential market of all, the workforce, is being replaced by data and computation. DeAI infrastructure gives non-technical people a way to seize the means of production and contribute to the coming network economy.

Middleware

The ultimate goal of DeAI is to achieve effective composable computing. Like the money Legos of DeFi, DeAI makes up for its lack of absolute performance today through permissionless composability, incentivizing an open ecosystem of software and computational primitives that compounds over time and (hopefully) surpasses the incumbents.

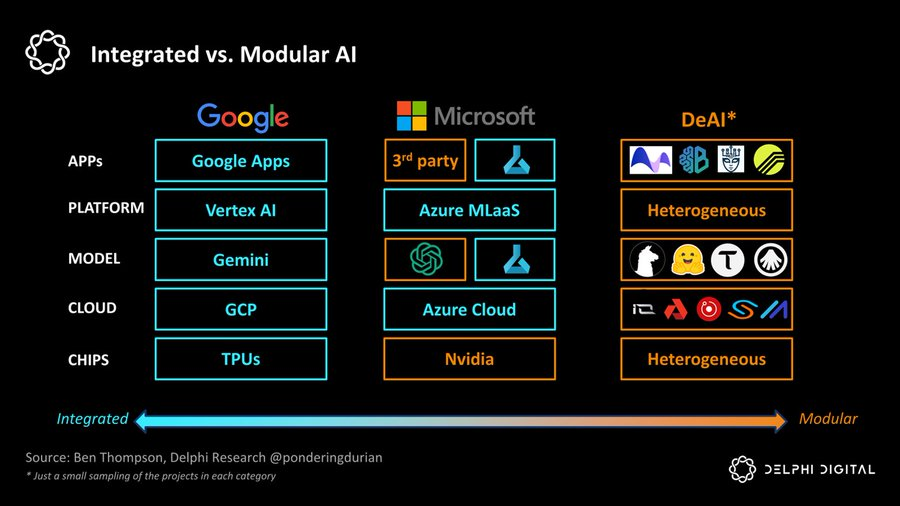

If Google represents the extreme of "integration", then DeAI represents the extreme of "modularity". As Clayton Christensen argued, in emerging industries integrated approaches often take the lead by reducing friction in the value chain, but as the field matures, modular value chains gain a foothold by increasing competition and cost efficiency at each layer of the stack:

Integrated vs. Modular AI

We are very bullish on several categories that are critical to realizing this modular vision:

Routing

In a world of fragmented intelligence, how can we choose the right model at the right time and at the best price? Demand aggregators have always captured value (see Aggregation Theory), and routing is crucial for optimizing the Pareto curve of performance against cost in a world of networked intelligence:

Bittensor has been a leader in the first generation, but there are also many specialized competitors.

Allora runs competitions between models in different "topics" in a "context-aware" way that self-improves over time, informing future predictions based on each model's historical accuracy under specific conditions.

Morpheus aims to be the "demand-side router" for Web3 use cases - essentially an open-source local agent that can grasp the relevant context of the user and efficiently route queries through the emerging building blocks of DeFi or Web3's "composable computing" infrastructure.

Agent interoperability protocols like Theoriq and Autonolas aim to push modular routing to the extreme, turning a flexible, composable, and compounding ecosystem of Agents or components into a fully mature on-chain service.

In short, in a world of rapidly fragmenting intelligence, demand-side aggregators will play an extremely powerful role. If Google is a $2 trillion company for indexing the world's information, then the winner among demand-side routers, whether Apple, Google, or a Web3 solution, will be the company that indexes the world's intelligent agents, and it should be far larger.
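To make the "Pareto curve of performance and cost" concrete, here is a minimal sketch of how a demand-side router might filter competing model offers. It is an illustration only and not the mechanism of any project named above: the `ModelOffer` type, the model names, and the quality and cost numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOffer:
    name: str       # hypothetical model identifier
    quality: float  # benchmark-style score, higher is better
    cost: float     # price per query, lower is better


def pareto_frontier(offers):
    """Return the offers that no other offer beats on both quality and cost."""
    frontier = []
    best_quality = float("-inf")
    # Walk offers from cheapest to most expensive (ties: higher quality first).
    # An offer stays only if it is strictly better than every cheaper one.
    for offer in sorted(offers, key=lambda o: (o.cost, -o.quality)):
        if offer.quality > best_quality:
            frontier.append(offer)
            best_quality = offer.quality
    return frontier


def route(offers, min_quality):
    """Pick the cheapest frontier offer that meets the quality floor, or None."""
    for offer in pareto_frontier(offers):
        if offer.quality >= min_quality:
            return offer
    return None


# Hypothetical offers, invented for illustration.
offers = [
    ModelOffer("small-local", 0.62, 0.10),
    ModelOffer("mid-open", 0.78, 0.50),
    ModelOffer("mid-pricey", 0.75, 0.90),
    ModelOffer("frontier", 0.91, 3.00),
]

choice = route(offers, min_quality=0.70)
print(choice.name if choice else "no model meets the quality floor")
```

Here "mid-pricey" drops off the frontier because "mid-open" is both cheaper and better, which is the kind of pruning a router would perform before accounting for latency, context, or trust.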

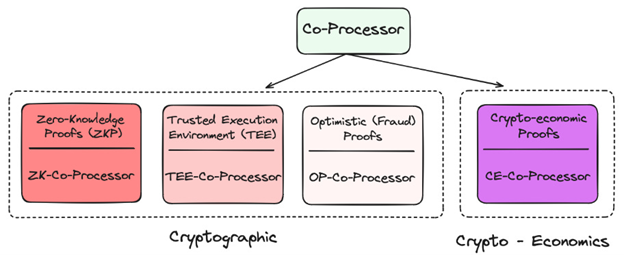

Coprocessors

Given its decentralized nature, blockchain is severely constrained in terms of data and computation. How can we bring the data- and compute-intensive AI applications that users need on-chain? Through coprocessors!

Applications of Coprocessors in Crypto

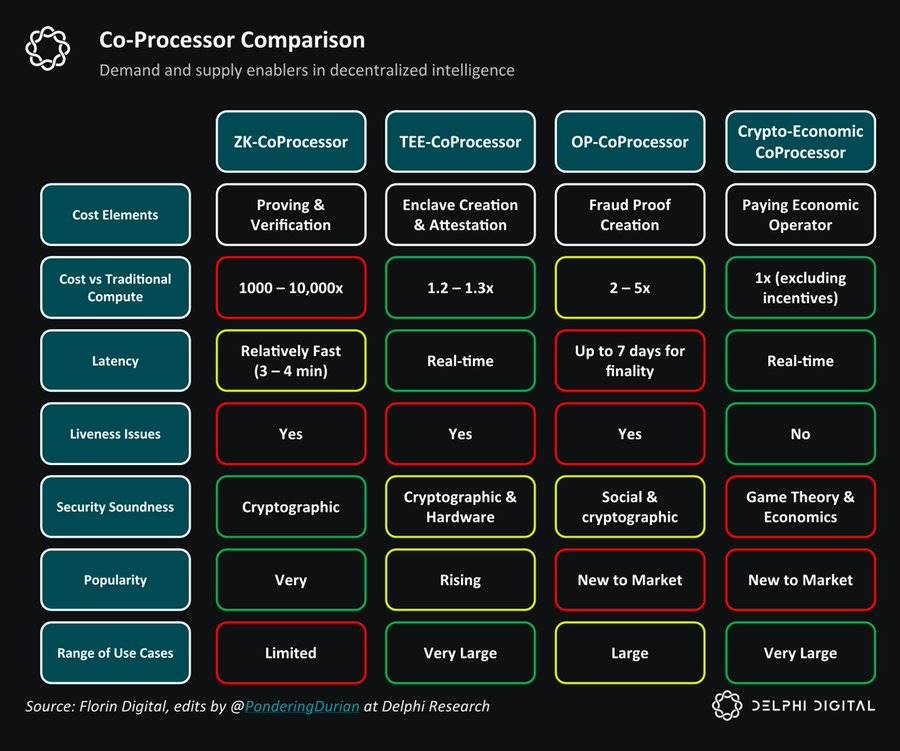

They all offer different techniques for "verifying" the underlying data or models used as "oracles", minimizing the new trust assumptions introduced on-chain while dramatically increasing on-chain capabilities. So far, projects have used zkML, opML, TeeML, and crypto-economic methods, each with its own pros and cons.

Coprocessor Comparison

At a higher level, coprocessors are crucial for making smart contracts intelligent: they provide "data warehouse"-like solutions that can be queried for more personalized on-chain experiences, or verify that a given inference was completed correctly.

TEE (Trusted Execution Environment) networks, such as Super, Phala and Marlin, have become increasingly popular recently due to their practicality and ability to support large-scale applications.

Overall, coprocessors are essential for integrating high-certainty but low-performance blockchains with high-performance but probabilistic intelligent agents. Without coprocessors, AI would not appear in this generation of blockchains.

Developer Incentives

One of the biggest problems with open-source AI development is the lack of sustainable incentive mechanisms. AI development is highly capital-intensive, with very high opportunity costs for both compute and AI knowledge work. Without proper incentives to reward open-source contributions, this field will inevitably lose to hyper-capitalized hyperscalers.

From Sentient to Pluralis, Sahara AI and Mira, these projects aim to bootstrap networks that allow decentralized networks of individuals to contribute to network intelligence, while providing appropriate incentives.

By fixing the business model, open source should compound faster, giving developers and AI researchers a global option outside of big tech companies, with the potential to be handsomely rewarded based on the value they create.

While this is extremely difficult to achieve, and the competition is getting fiercer, the potential market here is enormous.

GNN Models

Large language models identify patterns in large text corpora and learn to predict the next word, while Graph Neural Networks (GNNs) process, analyze and learn from graph-structured data. Since on-chain data consists primarily of complex interactions between users and smart contracts, in other words a graph, GNNs seem a reasonable choice to support on-chain AI use cases.

Projects like Pond and RPS are trying to build foundation models for Web3, which may be applied in use cases such as:
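As a rough illustration of what "learning from graph-structured data" means, the sketch below runs one round of neighbor aggregation over a tiny transaction graph. It is a simplification under stated assumptions: real GNNs add learned weights and nonlinearities, and the node names and two-dimensional features here are hypothetical rather than drawn from any project mentioned in this article.

```python
def message_passing_round(features, edges):
    """One round of mean-neighbor aggregation, the core step of many GNNs.

    features: dict mapping node -> list of floats (all the same length)
    edges: list of (src, dst) pairs, treated as undirected interactions
    Each node's new vector is the average of its own vector and the
    mean of its neighbors' vectors. Isolated nodes keep their vector.
    """
    neighbors = {node: [] for node in features}
    for src, dst in edges:
        neighbors[src].append(dst)
        neighbors[dst].append(src)

    updated = {}
    for node, own in features.items():
        nbrs = neighbors[node]
        if not nbrs:
            updated[node] = list(own)
            continue
        dim = len(own)
        mean_nbr = [sum(features[n][i] for n in nbrs) / len(nbrs) for i in range(dim)]
        updated[node] = [(own[i] + mean_nbr[i]) / 2 for i in range(dim)]
    return updated


# Hypothetical graph: two wallets that both interacted with one DEX pool.
features = {
    "wallet_a": [1.0, 0.0],
    "wallet_b": [0.0, 1.0],
    "dex_pool": [0.0, 0.0],
}
edges = [("wallet_a", "dex_pool"), ("wallet_b", "dex_pool")]

embeddings = message_passing_round(features, edges)
print(embeddings["dex_pool"])
```

After one round the pool's vector blends information from both wallets that touched it. Stacking such rounds lets a model summarize multi-hop on-chain behavior.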

Price Prediction: On-chain behavior models for price prediction, automated trading strategies, sentiment analysis

AI Finance: Integration with existing DeFi applications, advanced yield strategies and liquidity utilization, better risk management/governance

On-chain Marketing: More targeted airdrops/targeting, recommendation engines based on on-chain behavior

These models will make heavy use of data warehouse solutions like Space and Time, Subsquid, Covalent and Hyperline, which I'm also very bullish on.

Large GNN models and Web3 data warehouses may prove to be indispensable complements to each other, providing OLAP (Online Analytical Processing) capabilities for Web3.

Applications

In my view, on-chain Agents may be the key to solving crypto's well-known user experience problems. More importantly, over the past decade we have invested billions of dollars in Web3 infrastructure, yet demand-side utilization has been pathetically low.

No need to worry, Agents are coming...

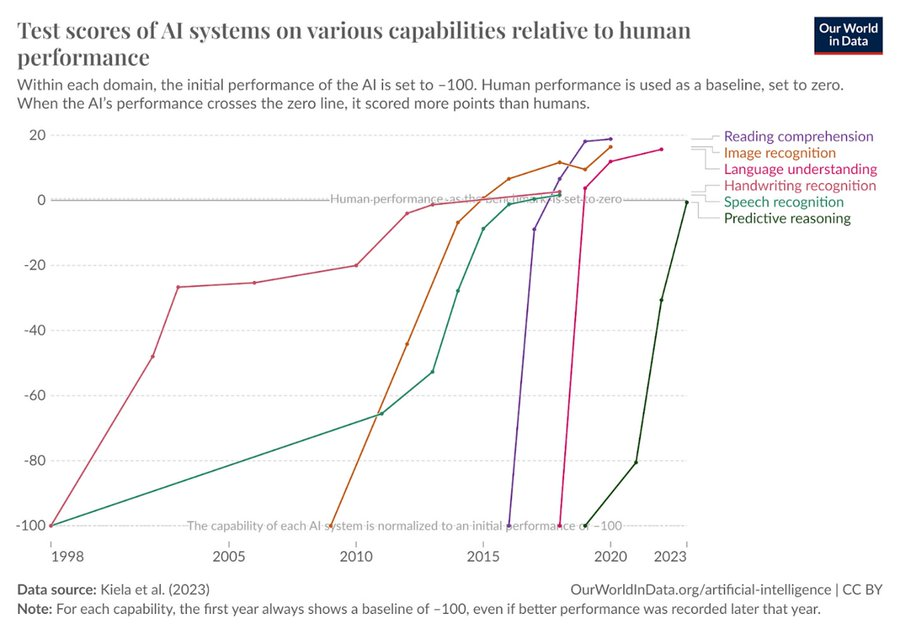

Growth in AI Test Scores Across Dimensions of Human Behavior

It also seems logical that these Agents will leverage open, permissionless infrastructure, spanning payments and composable computing, to achieve more complex end goals. In the upcoming networked intelligence economy, economic flows may no longer be B->B->C, but user->Agent->compute network->Agent->user. The ultimate outcome of this flow is agent protocols. These application- or service-oriented businesses have limited overhead, run primarily on on-chain resources, and can satisfy end-user (or each other's) needs in a composable network at far lower cost than traditional enterprises. Just as the application layer captured most of the value in Web2, I am a proponent of the "fat agent protocol" theory in DeAI. Over time, value capture should shift toward the upper layers of the stack.

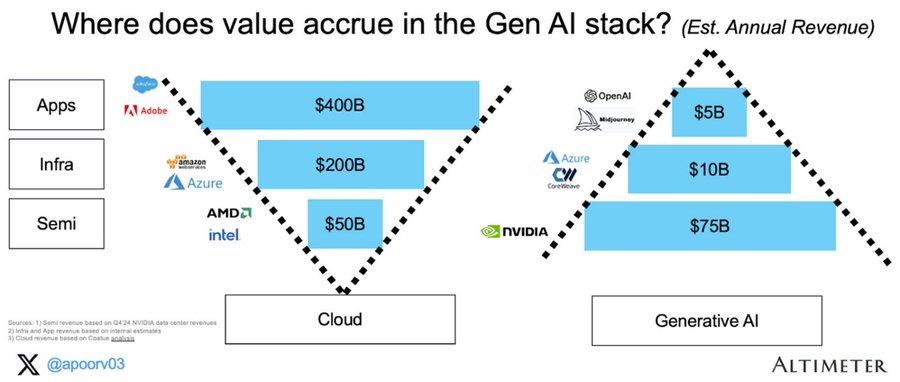

Value Accrual in Generative AI

The next Google, Facebook and Blackrock may well be agent protocols, and the components to realize these protocols are being born.

The Endgame of DeAI

AI will transform our economic paradigm. Today, the market expects this value capture to be limited to a few large companies on the West Coast of North America. DeAI represents a different vision: an open, composable intelligence network, with rewards and compensation for even the smallest contributions, and more collective ownership and governance.

While some claims about DeAI may be exaggerated, and many projects trade at prices far above their current traction, the scale of the opportunity is indeed considerable. For those with patience and foresight, the ultimate vision of truly composable computing in DeAI may prove to be the very rationale for blockchains themselves.