Image source: Generated by boundless AI

The year 2025 has just begun, and China has set off an unprecedented wave in the field of AI.

DeepSeek has emerged as a dark horse, sweeping the global market with its "low-cost + open-source" advantages and reaching the top of both the iOS and Google app stores. Data from Sensor Tower shows that as of January 31, DeepSeek's daily active users had reached 40% of ChatGPT's, and the app continues to grow at nearly 5 million new downloads per day. The industry has dubbed it the "mysterious force from the East".

Facing the "overwhelming" DeepSeek, Silicon Valley has not yet formed a consensus.

Palantir CEO Karp said in an interview that the rise of competitors like DeepSeek shows that the US needs to accelerate the development of advanced artificial intelligence. Sam Altman told Radio Times that while DeepSeek has done well on product and price, its emergence is not unexpected. Musk has repeatedly stated that DeepSeek represents no revolutionary breakthrough and that other teams will soon release better-performing models.

On February 9, the online seminar "Further Discussion on the Achievements of DeepSeek and the Future of AGI" was jointly initiated by Wicao Smart, the 50 People's Forum on the Information Society, and Tencent Technology. The event invited three guests: economist and chairman of the Academic Committee of the Hengqin Digital Finance Research Institute Zhu Jiaming, director of the China Automation Society and researcher at the Institute of Automation of the Chinese Academy of Sciences Wang Feiyue, and founder of EmojiDAO He Baohui, to share their views on "AGI development roadmap", "How to 'replicate' the next DeepSeek", and "Decentralization of large models".

Professor Zhu Jiaming is extremely optimistic about the development speed of AI. He said that the technological progress cycle of primitive society was measured in units of 100,000 years, that of agricultural society in thousands of years, that of industrial society in hundreds of years, and that of the Internet era in roughly 10 years. In the era of artificial intelligence, the pace has accelerated even more unimaginably. "From now on, artificial intelligence will move towards AGI or ASI: 2-3 years on a less conservative estimate, and 5-6 years on a more conservative one."

In Professor Zhu's view, the future development of artificial intelligence will have two forks: one is the more cutting-edge, advanced, and high-cost route, aiming to explore unknown areas of humanity; the other is the low-cost, large-scale, and popularized route. "When artificial intelligence develops to a new stage, there will always be two paths, one is the 'from 0 to 1' of the new stage, and the other is the 'from 1 to 10'."

Drawing on the development of AI technology at home and abroad, Professor Wang Feiyue emphasized that DeepSeek's achievements have rebuilt China's confidence in investing in and leading AI technology and industry. He believes that OpenAI will not share its super-intelligence, and that its success will only drive other companies to the brink.

Regarding how to incubate more teams like DeepSeek, Wang Feiyue cited the cases of the birth of AlphaGo and ChatGPT to emphasize the value of the DeSci decentralized research model. "We cannot rely solely on planning and the national system to develop artificial intelligence technology."

Regarding DeepSeek's large-scale use of data distillation technology, there are many critical voices in the industry, even equating distillation to theft. However, Wang Feiyue expressed a desire to "rehabilitate" knowledge distillation, saying that "knowledge distillation is essentially a transformation of an educational form, and it cannot be said that one cannot surpass one's teacher just because one's knowledge comes from the teacher."

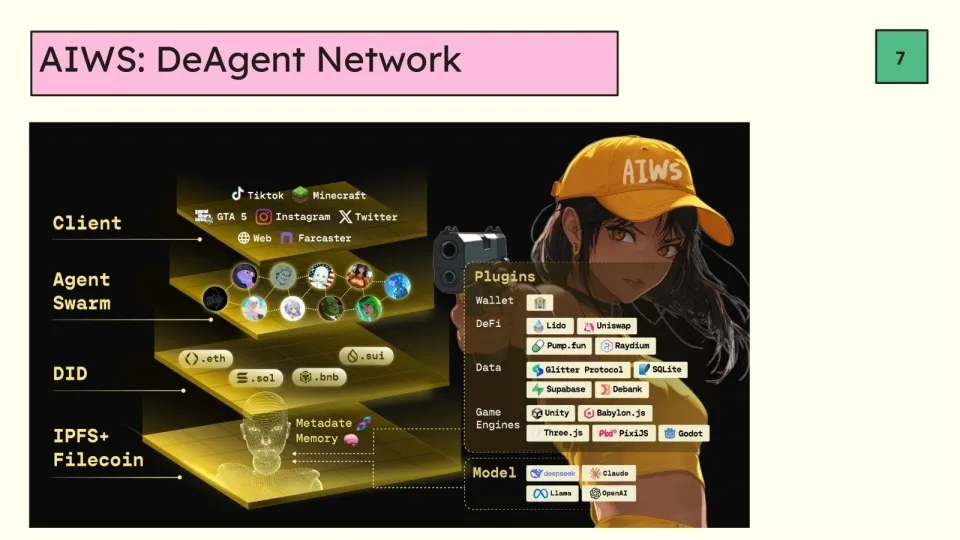

He Baohui, like Wang Feiyue, attaches great importance to the value of decentralization. In his view, decentralization is the path to reducing the cost of deep learning models, as well as the key to computing power networks and data security.

"The decentralized computing power network and data storage, such as the storage cost of Filecoin, is far lower than traditional cloud services (such as AWS), which greatly reduces the cost." He Baohui said, "The decentralized management mechanism can ensure that no one can unilaterally change these networks and data."

Regarding the Agent after the large model, He Baohui sees it as a form of life. "I think it is not just a tool, but a form of life, and our creation of AI does not mean that we completely dominate it." He Baohui said, "I am very concerned about how to make the Agent achieve 'immortality' and exist independently in the decentralized network, becoming a completely new 'species'."

Zhu Jiaming

The Development of Artificial Intelligence

Only Two Paths: "0 to 1" and "1 to 10"

Today I want to talk about the evolutionary time scale of artificial intelligence and large models, subtitled "An analysis of the DeepSeek V3 and R1 series phenomenon".

I will mainly discuss five issues: the evolutionary time scale of artificial intelligence, the artificial intelligence ecosystem, how to comprehensively and objectively evaluate DeepSeek, the global reaction triggered by DeepSeek, and the outlook for artificial intelligence trends in 2025.

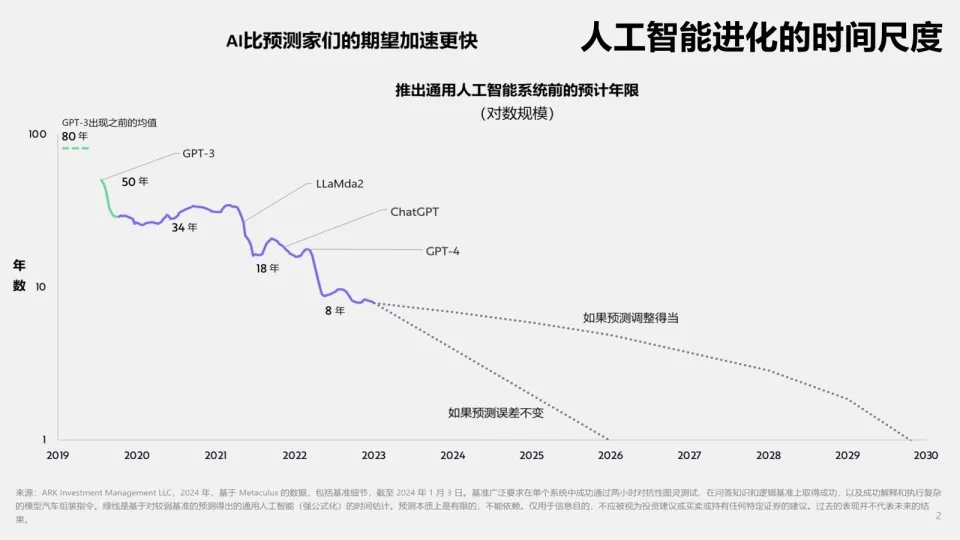

First, the actual evolutionary time scale of artificial intelligence is much faster than the expectations of experts, including scientists in the field of artificial intelligence.

In the long history of humanity, we have experienced the agricultural society, the industrial society, the information society, and now we have entered the era of artificial intelligence. In this historical process, the technological evolution cycle has been constantly shortened.

The technological progress cycle of the primitive society is in units of 100,000 years; the agricultural society is in units of thousands of years; the industrial society has a technological progress cycle of 100 years at most, and 10 years at least; the Internet era is basically in units of 30 years to 10 years; entering the era of artificial intelligence, its speed is even more unimaginably accelerated.

Before the appearance of GPT-3, people expected that it would take about 80 years for artificial intelligence to reach the AGI era; after the appearance of GPT-3, people shortened this expectation to 50 years; when LLaMda2 appeared, people's expectations were further shortened to 18 years.

In 2025, people's expectations for the realization of AGI may be even shorter, with a conservative estimate of 5-6 years and an optimistic estimate of 2-3 years.

Comparing the figure below, we can clearly see that artificial intelligence has an obvious acceleration characteristic compared to any technological revolution or innovation in human history.

If we use the first cosmic velocity, the second cosmic velocity, and the third cosmic velocity to describe the current high-speed development of artificial intelligence, artificial intelligence has now completed the transition from the first cosmic velocity to the second cosmic velocity - artificial intelligence has begun to enter a highly autonomous state, breaking free from human constraints.

As for what conditions it will take to break free from the gravitational pull of the sun and enter the third cosmic velocity, we do not know. But one thing is certain, artificial intelligence has completed the leap from general artificial intelligence to super artificial intelligence. Since 2017, artificial intelligence has been undergoing drastic changes and upgrades at the frequency of years, months, and weeks.

Why is artificial intelligence showing an exponential acceleration phenomenon and entering the "second cosmic velocity" stage? I believe there are three very important reasons.

● First, as Musk said, by the end of 2024, the data used to train the models will be exhausted, and the large models will have basically used up the existing human knowledge. Starting in 2025, the goal of the larger models will be to find incremental data, which is a historic turning point - the large models of artificial intelligence have completed the transformation from extensive to intensive.

● Second, the hardware of artificial intelligence has been continuously evolving.

● Third, artificial intelligence has entered the stage of "relying on artificial intelligence itself" for development - it can self-develop.

Currently, the large model matrix of companies like OpenAI, DeepMind, and Meta has formed a mechanism of mutual dependence and mutual promotion. The construction of the artificial intelligence ecosystem follows the law of vertical speed breakthrough driving horizontal ecological fission. At the horizontal ecological level, the three major paradigms of multimodal fusion revolution, vertical field penetration acceleration, and distributed cognitive network are reshaping the technological landscape.

In the increasingly mature artificial intelligence ecosystem, naturally there will be spillover effects (generalization effects), which will basically penetrate into the fields of science, economy, society, and people's cognition.

Regarding the phenomenon-level product that exploded during the Spring Festival, DeepSeek, how can we comprehensively and objectively evaluate it?

First, DeepSeek has drawn sustained attention from domestic and foreign media and has triggered a huge wave of hands-on use by the public. Public opinion has always played a very important role in history. Some events are amplified by public opinion while others are underestimated, and after a period of time they eventually return to their proper place in history.

The main advantages of DeepSeek V3 are high performance, efficient training, and fast response, and it is particularly suitable for the Chinese environment. DeepSeek-R1 mainly has the advantages of strong computing performance, excellent reasoning ability, good functional features, and strong applicability to various scenarios.

Of course, DeepSeek also has problems to solve and challenges to face: how to improve its accuracy, how to handle multimodal input and output, how to keep its server hardware stable, and how to deal with sensitive topics that are increasingly difficult to avoid.

Among these problems, the most worth discussing and the one that everyone is most concerned about is the cost of large AI models, which has a series of fundamental differences in concept and structure from the cost of industrial products.

The cost of large AI models lies primarily in infrastructure. DeepSeek's advantage in infrastructure cost comes from its extensive use of relatively low-priced A100 chips. Second is R&D cost, which includes the cost of algorithm reuse, where DeepSeek has certain advantages. Attention also needs to be paid to data costs, the cost of introducing emerging technologies, and the overall cost structure of computing.

The discussion of costs will also involve the issue of technical routes - when AI develops to a new stage, there are always two routes, one is the "0 to 1" of the new stage, and the other is the "1 to 10". At any stage of future development, if the "0 to 1" route is chosen, the cost will inevitably rise; while if the "1 to 10" route is chosen, the cost may be reduced through improved efficiency.

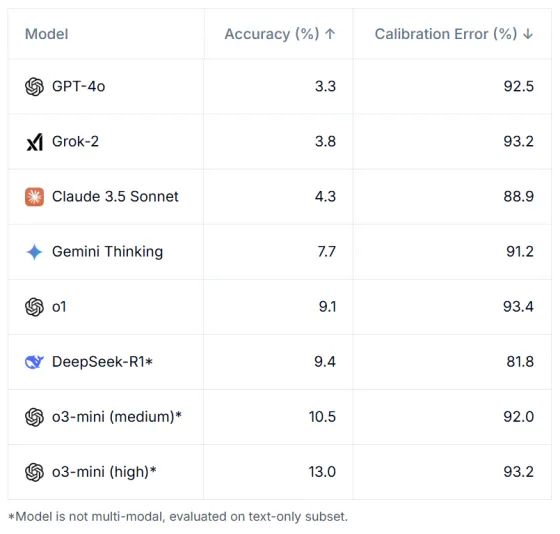

Under the "0 to 1" route, DeepSeek has performed remarkably in benchmark tests, especially on the HLE (Humanity's Last Exam) standard set - which has compiled 3,000 questions designed by over 500 institutions in 50 countries and regions, covering core capabilities such as knowledge reserve, logical reasoning, and cross-domain transfer.

In the HLE benchmark test, DeepSeek achieved an accuracy score of 9.4, with only OpenAI's o3 scoring higher. It far surpassed GPT-4o and Grok-2 on this test, which is quite an impressive achievement.

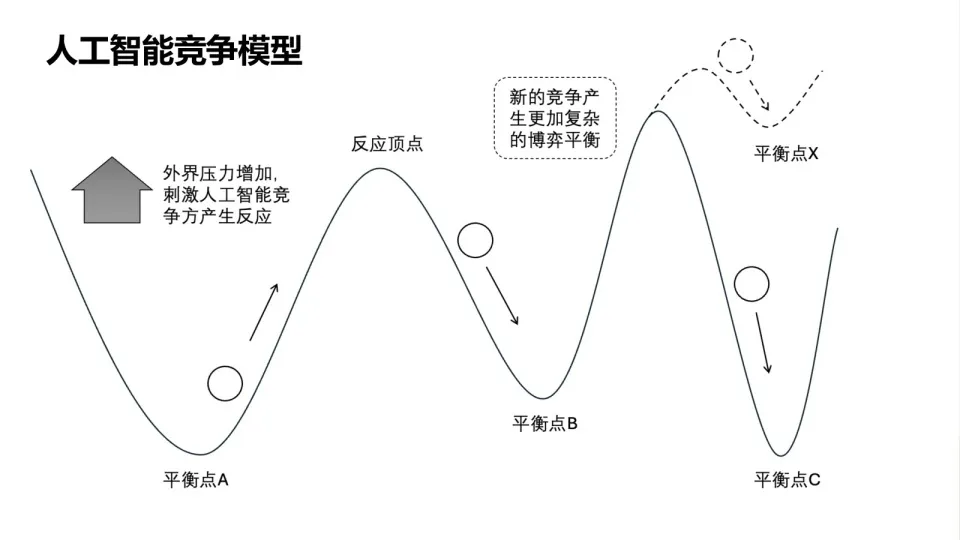

We all know that after the launch of DeepSeek, global AI companies including Microsoft, Google, and Nvidia have reacted to varying degrees. This means that the equilibrium point in the evolution of AI is constantly being broken - when a completely new AI breakthrough occurs, it will create pressure, which in turn will stimulate the entire system to respond; and this response will then give rise to new breakthroughs, new pressures, and new equilibrium points.

Now, the cycle of this influence and response is constantly shortening. We will find that the competition in AI is a rather divergent pattern, providing a relatively large development space for innovation and breakthroughs.

In the evolutionary scale of AI and the outlook of the large model ecosystem, technological development presents a dynamic circular pattern of "leading - challenging - breaking through - leading again". This process is not a zero-sum game, but a spiral upward drive of the overall ecosystem through continuous iteration.

Finally, I would like to talk about the outlook for the development trend of AI in 2025.

The development of AI today has two directions: one is the specialized high-end route, expanding the frontier and exploring unknown fields; the other is the route of mass popularization, with such large models aiming to lower the usage threshold and meet the basic needs of a wide range of users.

Now, humanity has entered a completely new era, where AI is both a microscope and a telescope, helping us to perceive the more profound and complex physical world that is beyond the reach of both microscopes and telescopes.

In the future, AI will inevitably present a diverse and multidimensional pattern. Just like Lego bricks, or even a Rubik's Cube, they will constantly combine and reconstruct, unfolding a brand-new world that exceeds our own knowledge and experience.

Further breakthroughs in AI will require increasingly large capital investments. The demand for AI is rapidly consuming the existing data center capacity, prompting companies to build new facilities.

In summary, AI is moving towards "reaching the sky and standing on the ground": "reaching the sky" is the process of continuously exploring the unknown realm, improving the quality of simulating the physical world; "standing on the ground" is to be down-to-earth, driving AI to reduce costs and be widely applied, benefiting the public. Against this background, we can take a more objective and comprehensive view of the advantages, limitations, and future potential of DeepSeek.

Wang Feiyue

OpenAI Will Drive Other Companies to the Brink

"Copying" DeepSeek Relies on Decentralized Research

In a certain sense, DeepSeek is a great social achievement of our time, and its influence is unmatched by previous technological breakthroughs. Its technological and commercial value is lower than the economic value it may bring in the future, and lower still than its potential impact on society, that is, on the current international competitive landscape and international politics. After OpenAI turned into "ClosedAI", DeepSeek restored the international community's confidence and hope in open source and openness, which is very valuable.

I don't intend to discuss the specific details of this technology, as too much has already been said. I just want to express my own feelings.

I am very glad that China has finally achieved a "zero" breakthrough in its international influence in this field, breaking the myth and almost monopoly of OpenAI, forcing it to change its behavior. Especially since OpenAI is no longer Open, it will not share its "super" intelligence with society, especially the international community, and its success will only drive other companies, including American companies, to the brink. I still hope that there can be normal technological competition between countries and between people, rather than a technological war.

This is a great thing happening now, and DeepSeek has given us more confidence in China's technological progress, especially the development of AI.

I believe that the essence of the new commodities in the era of intelligence is trust and attention. DeepSeek has given us both, and that reflects its important value. The next step for society as a whole is to turn trust and attention into "new quality commodities" that can be mass-produced and circulated, so that the era of intelligence can become a reality and move beyond the agricultural and industrial societies.

Next, I want to "rehabilitate" knowledge distillation.

There are some sarcastic remarks about knowledge distillation on social networks, such as "begging for food from others' mouths" and "fishing in others' fish baskets", which are actually deliberate distortions of the essence of knowledge distillation. Knowledge distillation is essentially a transformation of an educational form, and it cannot be said that one cannot surpass the teacher just because one's knowledge comes from the teacher. Of course, large models, from ChatGPT to DeepSeek, must strive to generate or improve their reasoning ability, and play down "child-like innocence", to make AI for AI, and rehabilitate knowledge distillation themselves.
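To make the teacher-student picture concrete, here is a minimal sketch of one textbook form of knowledge distillation, in which a student is scored on how closely its softened output distribution matches the teacher's. It is an illustration under stated assumptions, not a description of how DeepSeek or any other lab trains its models. The function names, the temperature of 2.0, and the logit values are all hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's.

    Hypothetical helper: the loss is zero when the student reproduces the
    teacher's distribution and grows as the two diverge.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Made-up logits over three candidate answers.
teacher = [4.0, 1.0, 0.5]
near_student = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]   # prefers a different answer

loss_near = distillation_loss(teacher, near_student)
loss_far = distillation_loss(teacher, far_student)
print(f"near student: {loss_near:.4f}, far student: {loss_far:.4f}")
```

In this formulation the teacher's outputs serve only as a training signal. Nothing in the loss caps the student at the teacher's level once it goes on to learn from other data or objectives.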

Regarding the question of "what to do after DeepSeek", we must first discuss the two technological development models of decentralized research (DeSci) and centralized research (CeSci). AlphaGo, ChatGPT, and DeepSeek are all products of the DeSci model, that is, distributed and decentralized autonomous scientific research; in contrast, CeSci is research led by the state.

I believe we must recognize the role of DeSci and cannot rely solely on planning or national systems to drive the development of AI technology.

Because the foundation of AI technology is diversity, as Marvin Minsky, one of the main initiators of AI, said: "What is the incredible trick that makes us intelligent? The trick is that there is no trick. The power of intelligence comes from our enormous diversity, not from any single, perfect principle."

Therefore, excessive strategic planning may actually limit the natural development of diversity. Before effective models or technologies "emerge", DeSci should be the main approach. Once real innovations have emerged, the state-led CeSci model can be used to guide the technology and accelerate it towards predetermined goals. We must avoid building "castles in the air", especially in the current era of great AI transformation.

For those of us who have been working in the AI field for a long time, the AI of today is a completely different world from the AI of the past.

In the past, AI stood for Artificial Intelligence, but it has now gradually become Agentic Intelligence. In the future, the term may change again to mean Autonomous Intelligence: a new AI, especially autonomous, self-organizing intelligence, which is AI for AI, or AI for AS (Autonomous Systems), marking a new stage in the development of Artificial Intelligence. This is also in line with what John McCarthy, the initiator of Artificial Intelligence, said: the ultimate goal of Artificial Intelligence is the automation of intelligence, which is actually the automation of knowledge.

Whether it is now or in the future, these three types of "AI" - old, outdated, and new - will coexist, and I will collectively refer to them as "parallel intelligence".

I have also publicly stated my position before. Although I have pursued explainable Artificial Intelligence for more than 40 years, I believe that intelligence is essentially inexplicable. I have adapted Pascal's wager - Artificial Intelligence is inexplicable, but it can and must be governed.

Everyone is talking about "AI for Good", but if you remove one "o", it becomes "AI for God", then AI may become a monopolistic tool. So, it must be "AI for Good" with two "o"s, so that it can be diverse, with safety as the top priority, and governance must be strengthened to prevent phenomena like the mutation of OpenAI.

I am very pleased to see the progress of DeepSeek, but some of the current narratives are still premature, and there is no need to scare people with "general Artificial Intelligence".

Researchers should have a broader vision and avoid internal competition. They should transform SCI into "SCE++" - Slow down and focus on research, Casual in their approach, Easy in pursuit of simplicity and elegance, and Enjoying the technological work. This is the kind of life that Artificial Intelligence should bring us.

He Baohui

Large models should also be "decentralized"

I want to see Agent immortality

I am not a professional in the Artificial Intelligence field, but I have recently started to delve into the history of AI. Drawing from my experience in the Web3 industry since 2017, I would like to share my thoughts on our current work and the potential transformations brought by DeepSeek.

First, I want to emphasize a fundamental issue: there are significant differences between the underlying models of DeepSeek and OpenAI, and it is precisely this difference that has truly shocked the Western world.

If DeepSeek had merely replicated Western technology, they would not have felt such a shock, nor would it have sparked such widespread discussion, forcing all major companies to take it seriously. What has truly shocked them is that DeepSeek has paved a distinctly different path.

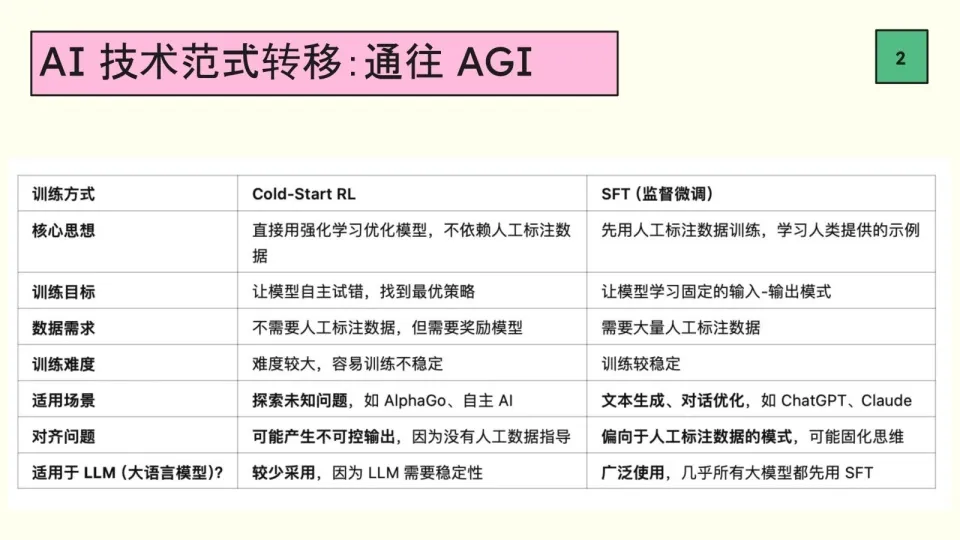

OpenAI has adopted the SFT (Supervised Fine-Tuning) route, relying on manually annotated large datasets and generating content through probabilistic models. Its innovation lies in the accumulation of these results through extensive manual work and high costs.

A few years ago, Artificial Intelligence technology was considered almost impossible to achieve, but the emergence of OpenAI has overturned this view and driven the industry towards the SFT technology path.

DeepSeek has hardly used any SFT technology, but has instead explored an unknown path using reinforcement learning cold-start methods.

This approach is not entirely new. The first version of Google DeepMind's AlphaGo learned from a large amount of data, while the second version, AlphaGo Zero, relied entirely on the rules and self-play, exploring 10,000 games, and achieved better results than the previous version.

The cold-start approach using reinforcement learning is more challenging and unstable, so it is less commonly adopted, but I personally believe this may be the true path to AGI, rather than the purely data-driven optimization route.

In the past, data adjustment methods were more like big data integration, while DeepSeek is truly finding conclusions through autonomous thinking. Therefore, I believe this marks a paradigm shift in Artificial Intelligence technology, from SFT technology to self-reasoning technology.

This transformation brings two core characteristics: open-source and low-cost.

Open-source means that everyone can participate in the construction.

In the Internet era, the Western world has always been known for open-source, but the emergence of DeepSeek has changed this landscape, with the East defeating them on their "main battlefield" for the first time.

This open-source model has triggered a strong reaction in the industry, with some founders of Silicon Valley companies even speaking out to criticize it, but the public's support for open-source is very strong, as it allows everyone to use it.

Low-cost means that the deployment and training costs of this model are extremely low.

We can easily deploy DeepSeek on personal devices like MacBooks and achieve commercial deployment, which was unimaginable in the past. I believe Artificial Intelligence is transitioning from the centralized "IT era" dominated by OpenAI to a thriving "mobile internet era".

For Artificial Intelligence, there are three key elements to analyze: large models, computing power, and data.

After the disruptive innovation in large models, the demand for computing power is beginning to decrease.

Currently, the supply of computing power has become redundant. Many GPU investors have been unable to achieve the expected returns due to the high purchase price of the equipment, and the cost of computing power is gradually decreasing. Therefore, I do not believe that computing power will become a bottleneck.

The next important bottleneck is data.

The decline in Nvidia's stock price and the significant rise of data companies like Palantir indicate that people are starting to recognize the importance of data. Especially with the open-sourcing of large models, where anyone can deploy the models, data differentiation will become the focus of competition.

The ability to acquire proprietary data and achieve real-time updates will be the key to competition.

From a decentralization perspective, the decentralization of computing power and data is relatively mature. Decentralized computing power networks and data storage have significantly reduced costs; Filecoin's storage costs, for example, are much lower than those of traditional cloud services (like AWS).

At the same time, the decentralized governance mechanisms ensure that no one can unilaterally change these networks and data. Therefore, deep learning models should also evolve towards decentralization.

So for DeAI, I believe there are two development paths:

● One is Decentralized/Distributed AI based on decentralized technology infrastructure.

● The other is Edge AI, where AI runs directly on personal devices. Edge AI can effectively solve data privacy issues and significantly improve real-time performance. For example, autonomous driving technology requires extremely high real-time response, and any latency can have serious consequences. If AI can complete the computation locally, the efficiency and experience will be greatly improved. Therefore, Edge AI will become an important direction for future development, bringing a large number of new application scenarios.

Furthermore, the advantage of decentralized AI is that it can support multi-party collaboration. The birth of blockchain and Bitcoin is due to the difficulty of measuring trust between people. The decentralized trust mechanism allows large-scale collaboration without intermediaries.

In the Web3 domain, there is a saying "Code is law". I believe that in the decentralized collaboration of Artificial Intelligence, this concept should be transformed into "DeAgent is law", where decentralized networks and Agents achieve autonomous management and legal governance.

I think perhaps the meaning of human existence is to train an Agent that can completely replace us, one that thinks the same way as a human and lives on after that human's physical body dies. Regarding the concept of the Agent, I believe it is not just a tool, but a form of life. Our creating Artificial Intelligence does not mean that we completely dominate it.

When AI has its own thoughts, we should let it develop autonomously, rather than restricting it to a tool. Therefore, we are very focused on how to enable the "immortality" of the Agent and its independent existence in the decentralized network, becoming a new "species".

As technology continues to break through, applications deepen, and the era of AI for all approaches, how to balance innovation and ethics will become an important issue in future development.

Conclusion

Humans enter the "race" stage of AI

The breakthrough of DeepSeek marks an important milestone in humanity's, and especially China's, exploration of the path to AGI. In this context, both encouraging and critical voices deserve attention. Everyone should hope that it becomes better and stronger, but whether its technical route can withstand the test of business and the market remains to be seen.

One point in Wang Feiyue's sharing is very worth noting - AlphaGo, ChatGPT, and DeepSeek are all products of the DeSci model. We must face the role of DeSci and hope that more "Chinese DeepSeek" will break through in the field of artificial intelligence.

Professor Zhu Jiaming mentioned in his sharing that the pace of progress in the era of artificial intelligence has surpassed that of any era in human history. He said that the AGI era could arrive in as little as 2 years. This timeline may not be precise, but the overall trend is clear: once a new product or technological route breaks the existing balance, it puts pressure on the entire industry and stimulates an industry-wide response, and that response in turn produces a new round of breakthroughs.

Products like DeepSeek are the "external force" that breaks the balance, so we see that Sam Altman on X has announced that the long-delayed GPT-5 will be made public in a few months.

It can be certain that not only OpenAI, but also xAI, Meta, Google and other Silicon Valley companies will take action.

This technological race that concerns the future of humanity has now entered the "race" stage.