Written by: Frank Fu @IOSG Ventures

MCP is quickly becoming a core part of the Web3 AI Agent ecosystem. Through a plugin-like architecture built around MCP Servers, it gives AI Agents new tools and capabilities.

Like other emerging narratives in Web3 AI (such as vibe coding), MCP, short for Model Context Protocol, originated in Web2 AI and is now being reimagined for Web3.

What is MCP?

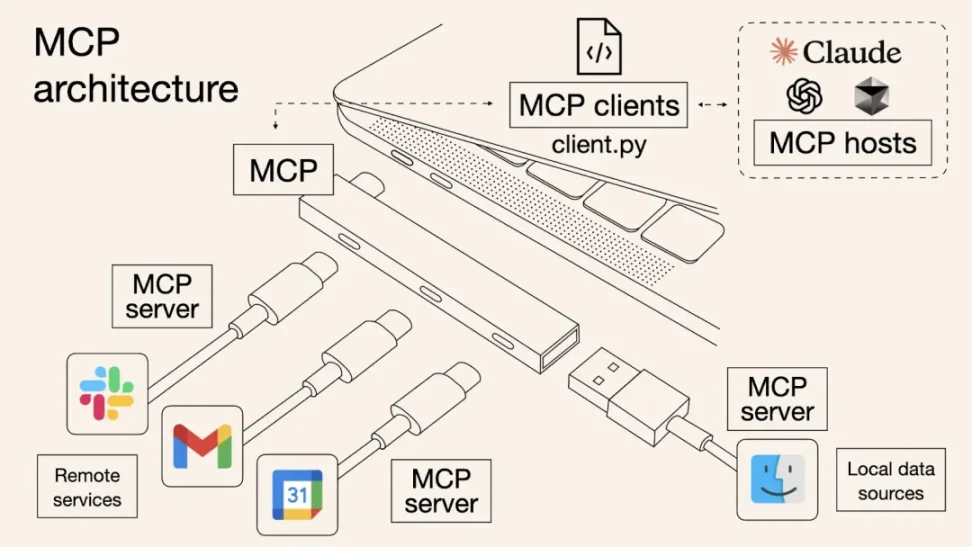

MCP is an open protocol introduced by Anthropic that standardizes how applications pass context to large language models (LLMs). This lets tools, data, and AI Agents work together more seamlessly.

Why is it important?

The core limitations of current large language models include:

Unable to browse the internet in real-time

Unable to directly access local or private files

Unable to autonomously interact with external software

MCP bridges these capability gaps by serving as a universal interface layer, enabling AI Agents to use various tools.

You can think of MCP as the USB-C of the AI application domain - a unified interface standard that makes it easier for AI to connect to various data sources and functional modules.

Imagine each LLM as a different phone - Claude uses USB-A, ChatGPT uses USB-C, and Gemini uses a Lightning interface. If you're a hardware manufacturer, you'd have to develop a set of accessories for each interface, with extremely high maintenance costs.

This is exactly the problem AI tool developers face: building custom plugins for each LLM platform greatly increases complexity and limits scalability. MCP is designed to solve this by establishing a unified standard, much like having every LLM and tool provider use the same USB-C interface.

This standardization benefits both sides:

For AI Agents (clients): can safely access external tools and real-time data sources

For tool developers (servers): one-time integration, cross-platform availability

The ultimate result is a more open, interoperable, and low-friction AI ecosystem.
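The "integrate once, use everywhere" idea can be illustrated with a toy sketch. This is not the MCP SDK or the wire protocol: `ToolServer`, `AgentClient`, and the `add` tool are hypothetical names invented for illustration, and everything runs in one process. It only shows that a tool registered once behind a uniform interface can be called by any client that speaks that interface.

```python
from typing import Callable


class ToolServer:
    """Hypothetical in-process stand-in for a tool server (not the real MCP SDK)."""

    def __init__(self) -> None:
        self._tools: dict[str, Callable[..., object]] = {}

    def tool(self, fn: Callable[..., object]) -> Callable[..., object]:
        # Register the function once, keyed by its name.
        self._tools[fn.__name__] = fn
        return fn

    def list_tools(self) -> list[str]:
        return sorted(self._tools)

    def call(self, name: str, arguments: dict) -> object:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name](**arguments)


class AgentClient:
    """Hypothetical agent; different agents share the same calling convention."""

    def __init__(self, label: str, server: ToolServer) -> None:
        self.label = label
        self.server = server

    def use(self, name: str, **arguments: object) -> object:
        return self.server.call(name, arguments)


server = ToolServer()


@server.tool
def add(a: int, b: int) -> int:
    return a + b


# Two different agents reuse the same tool without any per-agent plugin code.
agent_a = AgentClient("agent-a", server)
agent_b = AgentClient("agent-b", server)
print(agent_a.use("add", a=2, b=3), agent_b.use("add", a=10, b=5))
```

The tool author writes `add` once; neither agent needs its own adapter, which is the property the USB-C analogy is pointing at.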

How is MCP different from traditional APIs?

Traditional APIs are designed for human developers, not for AI. Each API has its own structure and documentation, so developers must read the docs and specify parameters by hand. AI Agents cannot read documentation on their own and must be hard-coded for each API style (REST, GraphQL, RPC, and so on).

MCP standardizes the function call format within APIs, abstracting away these unstructured parts and providing a unified calling method for Agents. You can think of MCP as an API adaptation layer encapsulated for Autonomous Agents.
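As a rough illustration of what a unified calling method looks like, MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method with a tool `name` and an `arguments` object. The sketch below builds such a request with the Python standard library only. The `get_balance` tool, its schema, and the helper names are hypothetical examples, not part of any real server.

```python
import json
from itertools import count

_ids = count(1)  # simple request-id generator


def make_tool_call(name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request in the shape MCP uses for tool calls."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


# Hypothetical tool descriptor, in the style a server advertises via tools/list.
get_balance_tool = {
    "name": "get_balance",
    "description": "Return the native token balance of an address.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "address": {"type": "string"},
            "chain": {"type": "string"},
        },
        "required": ["address"],
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """List required arguments (per the tool's schema) that were not supplied."""
    required = tool["inputSchema"].get("required", [])
    return [key for key in required if key not in arguments]


request = make_tool_call("get_balance", {"address": "0xabc", "chain": "ethereum"})
print(json.dumps(request, indent=2))
```

Because every tool is described by a name, a description, and a JSON Schema for its inputs, an Agent can discover what a tool needs from the descriptor itself instead of from free-form documentation.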

When Anthropic first introduced MCP in November 2024, developers had to run MCP servers locally. In May this year, however, Cloudflare announced at its Developer Week that developers can deploy remote MCP servers directly on the Cloudflare Workers platform with minimal configuration. This greatly simplifies deploying and managing MCP servers, including authentication and data transmission, and essentially makes it a "one-click deployment".

MCP may look unremarkable on its own, but it is far from insignificant. As pure infrastructure, it is not something consumers use directly. Its value only emerges when upper-layer AI agents call MCP tools and deliver real results.

Web3 AI x MCP Ecosystem Landscape

AI in Web3 also faces the problems of "lack of context data" and "data silos", meaning AI cannot access real-time on-chain data or natively execute smart contract logic.

In the past, projects like ai16z, ARC, Swarms, and MyShell attempted to build multi-Agent collaborative networks, but they ended up reinventing the wheel because they relied on centralized APIs and custom integrations.

Every new data source required rewriting the adaptation layer, which drove development costs up sharply. To remove this bottleneck, next-generation AI Agents need a more modular, Lego-like architecture that allows seamless integration of third-party plugins and tools.

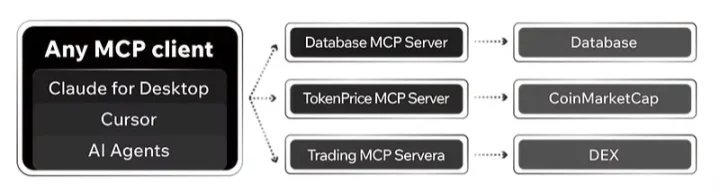

As a result, a new generation of AI Agent infrastructure and applications based on MCP and A2A protocols are emerging, designed specifically for Web3 scenarios, enabling Agents to access multi-chain data and natively interact with DeFi protocols.

▲ Source: IOSG Ventures

(This diagram does not completely cover all MCP-related Web3 projects)

As infrastructure matures, the competitive advantage of "developer-first" companies will shift from API design to who can provide richer, more diverse, and more easily composable toolsets.

In the future, every application might become an MCP client, and every API might be an MCP server.

This could give rise to a new pricing mechanism: Agents dynamically select tools based on execution speed, cost efficiency, relevance, and similar factors, forming a more efficient Agent service economy with crypto and blockchain as its medium.
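One way to picture this kind of dynamic selection is a simple weighted score over competing tool offers. The sketch below is purely illustrative: the `ToolOffer` fields, the weights, and the normalization caps are all assumptions, not part of MCP or any existing marketplace.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolOffer:
    """Hypothetical advertisement of a tool by a provider."""
    name: str
    latency_ms: float   # expected execution time
    cost_usd: float     # price per call
    relevance: float    # 0.0 to 1.0, how well the tool fits the task


def score(
    offer: ToolOffer,
    w_speed: float = 0.3,
    w_cost: float = 0.3,
    w_relevance: float = 0.4,
    max_latency_ms: float = 2000.0,  # assumed cap used for normalization
    max_cost_usd: float = 0.01,      # assumed cap used for normalization
) -> float:
    """Weighted score in [0, 1]; higher is better."""
    speed = max(0.0, 1.0 - offer.latency_ms / max_latency_ms)
    cheapness = max(0.0, 1.0 - offer.cost_usd / max_cost_usd)
    return w_speed * speed + w_cost * cheapness + w_relevance * offer.relevance


def pick_tool(offers: list[ToolOffer]) -> ToolOffer:
    """Return the offer with the highest score."""
    return max(offers, key=score)


offers = [
    ToolOffer("fast_but_pricey", latency_ms=100, cost_usd=0.009, relevance=0.8),
    ToolOffer("slow_but_cheap", latency_ms=1500, cost_usd=0.001, relevance=0.8),
    ToolOffer("balanced", latency_ms=400, cost_usd=0.003, relevance=0.9),
]
print(pick_tool(offers).name)
```

In a real Agent economy the inputs would come from provider metadata and on-chain pricing rather than hard-coded values, and the weights would depend on the task at hand.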

Of course, MCP is a base protocol layer and is not directly user-facing. Its true value and potential only become visible when AI Agents integrate it and turn it into practical applications.

Ultimately, Agents are the carriers and amplifiers of MCP capabilities, while blockchain and cryptographic mechanisms build a trusted, efficient, and composable economic system for this intelligent network.