1/ Decentralized AI training is shifting from impossible to inevitable.

The era of centralized AI monopolies is being challenged by breakthrough protocols that enable collaborative model training across distributed networks.

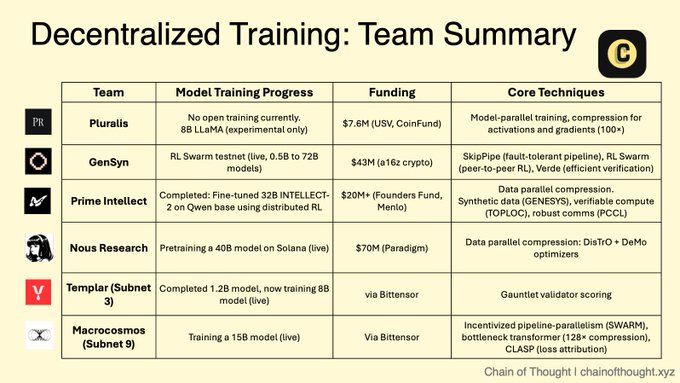

7/ Bittensor subnets are specializing for AI workloads:

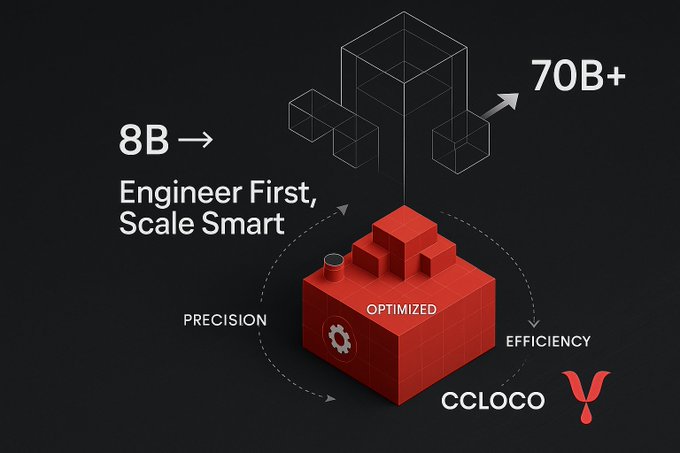

- Subnet 3 (Templar) focuses on distributed training validation.

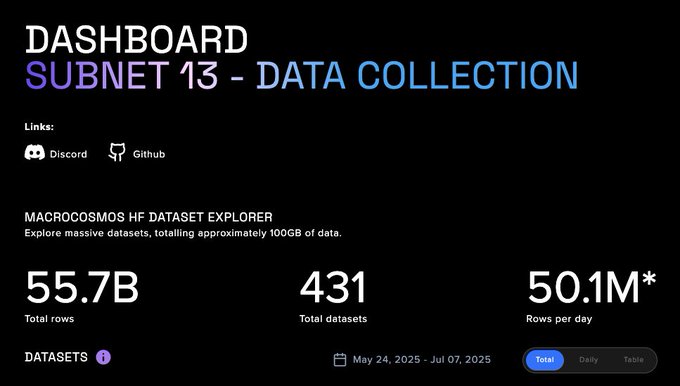

- Subnet 9 (Macrocosmos) enables collaborative model development, with economic incentives for the participants who contribute to the network.

8/ The decentralized training thesis: Aggregate more compute than any single entity, democratize access to AI development, and prevent concentration of power.

As reasoning models push inference costs above training costs, distributed networks may become essential infrastructure for the AI economy.

From Twitter
