Author: The Smart Ape

Original title: I built a Polymarket bot and tested multiple parameter setups, here are the results.

Compiled and edited by: BitpushNews

A few weeks ago, I decided to build my own Polymarket bot. The full version took me several weeks to complete.

I was willing to put in this effort because Polymarket genuinely has inefficiencies. Some bots are already profiting from them, but not nearly enough: there are still far more opportunities in this market than bots chasing them.

How the bot works

The bot's logic is based on a strategy I used to execute manually; I automated it to make it more efficient. It trades the "BTC 15-minute UP/DOWN" market.

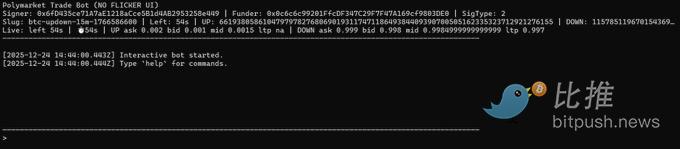

The bot runs a real-time monitor that automatically switches to the current BTC 15-minute round, streams the best bid/ask over a WebSocket, renders a fixed terminal UI, and can be fully controlled with text commands.

In manual mode, you place orders directly:

buy up <usd> / buy down <usd>: spend a given dollar amount on the UP or DOWN side.

buyshares up <shares> / buyshares down <shares>: buy an exact number of shares using a user-friendly LIMIT + GTC (good-til-cancelled) order placed at the current best ask price.
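A minimal Python sketch of how these two manual commands could be parsed and turned into an order description. It is not the author's JavaScript. The class names, `parse_manual`, `to_limit_order` and the order dictionary's field names are all hypothetical, and no Polymarket client call is made.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class BuyUsd:
    """`buy up|down <usd>`: spend a dollar amount on one side (hypothetical type)."""
    side: str
    usd: float


@dataclass
class BuyShares:
    """`buyshares up|down <shares>`: buy an exact share count (hypothetical type)."""
    side: str
    shares: float


def parse_manual(line: str) -> Union[BuyUsd, BuyShares]:
    """Parse one manual-mode command; raises ValueError on bad input."""
    parts = line.strip().lower().split()
    if len(parts) != 3:
        raise ValueError(f"expected '<verb> <side> <amount>', got {line!r}")
    verb, side, amount_text = parts
    if side not in ("up", "down"):
        raise ValueError(f"side must be 'up' or 'down', got {side!r}")
    amount = float(amount_text)  # raises ValueError for non-numeric input
    if amount <= 0:
        raise ValueError("amount must be positive")
    if verb == "buy":
        return BuyUsd(side, amount)
    if verb == "buyshares":
        return BuyShares(side, amount)
    raise ValueError(f"unknown command {verb!r}")


def to_limit_order(cmd: BuyShares, best_ask: float) -> dict:
    """Describe a LIMIT + GTC order at the current best ask (field names are illustrative)."""
    return {
        "side": cmd.side,
        "price": best_ask,
        "size": cmd.shares,
        "order_type": "LIMIT",
        "time_in_force": "GTC",
    }
```

Keeping parsing separate from order submission means the command grammar can be tested without touching the exchange.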

Automatic mode runs a repeating two-leg loop.

First, it watches prices only during the opening window of each round (the first windowMin minutes). If either side drops fast enough (by at least movePct within about 3 seconds), it triggers "Leg 1" and buys the side that just crashed.
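A sketch of the Leg 1 trigger in Python. It assumes the drop is measured from the highest best ask seen in the last ~3 seconds, and that a round lasts 900 seconds. The article does not say exactly how the drop is computed, so `CrashDetector`, `lookback_s` and `in_leg1_window` are hypothetical.

```python
from collections import deque
from typing import Deque, Tuple


class CrashDetector:
    """Flags a sharp drop in one side's best ask (hypothetical helper).

    Assumption: the drop is measured from the highest ask seen in the last
    `lookback_s` seconds to the current ask, as a fraction of that high.
    """

    def __init__(self, move_pct: float, lookback_s: float = 3.0) -> None:
        self.move_pct = move_pct
        self.lookback_s = lookback_s
        self._history: Deque[Tuple[float, float]] = deque()  # (timestamp_s, ask)

    def update(self, ts: float, ask: float) -> bool:
        """Record a new best ask and return True if it qualifies as a crash."""
        self._history.append((ts, ask))
        # Keep only samples inside the lookback window.
        while ts - self._history[0][0] > self.lookback_s:
            self._history.popleft()
        peak = max(a for _, a in self._history)
        return peak > 0 and (peak - ask) / peak >= self.move_pct


def in_leg1_window(seconds_remaining: float, window_min: float, round_len_s: float = 900.0) -> bool:
    """True while the round is still inside its first `window_min` minutes."""
    elapsed = round_len_s - seconds_remaining
    return 0 <= elapsed <= window_min * 60
```

With move_pct=0.15, a best ask going 0.50, 0.48, 0.42 over two seconds triggers. A slow slide that spreads the same drop over more than three seconds does not.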

After Leg 1 fills, the bot never buys that same side again. It waits for "Leg 2" (the hedge), which triggers only when: leg1EntryPrice + oppositeAsk <= sumTarget.

When this condition holds, it buys the opposite side. Once Leg 2 fills, the cycle ends and the bot goes back to watching for the next crash signal with the same parameters.

If the round changes while a cycle is open, the bot abandons that cycle and starts over in the next round with the same settings.

Automatic mode is started with: auto on <shares> [sum=0.95] [move=0.15] [windowMin=2]
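The cycle described above can be sketched as a small state machine: Leg 1, then a hedge gated by leg1EntryPrice + oppositeAsk <= sumTarget, with the cycle abandoned on a round change. This is a hypothetical Python illustration, not the author's JavaScript. Crash detection is assumed to happen elsewhere and arrive as `crashed_side`, and no orders are actually sent.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Action = Tuple[str, str, float]  # (leg, side, price)


@dataclass
class OpenCycle:
    round_slug: str
    leg1_side: str
    leg1_price: float


class TwoLegLoop:
    """Hypothetical sketch of the auto-mode cycle: Leg 1 -> hedge (Leg 2) -> observe again."""

    def __init__(self, sum_target: float) -> None:
        self.sum_target = sum_target
        self.open: Optional[OpenCycle] = None
        self.completed = 0
        self.abandoned = 0

    def on_tick(self, round_slug: str, ask_up: float, ask_down: float,
                crashed_side: Optional[str] = None) -> List[Action]:
        """Process one price update; `crashed_side` comes from a separate crash detector."""
        # Round changed with a cycle still open: abandon it and start fresh.
        if self.open is not None and self.open.round_slug != round_slug:
            self.open = None
            self.abandoned += 1

        asks = {"up": ask_up, "down": ask_down}
        if self.open is None:
            # Observing: a crash signal triggers Leg 1 on the side that dropped.
            if crashed_side is not None:
                self.open = OpenCycle(round_slug, crashed_side, asks[crashed_side])
                return [("leg1", crashed_side, asks[crashed_side])]
            return []

        # Leg 1 is open: never re-buy that side; hedge only when the sum is cheap enough.
        opposite = "down" if self.open.leg1_side == "up" else "up"
        if self.open.leg1_price + asks[opposite] <= self.sum_target:
            self.open = None
            self.completed += 1
            return [("leg2", opposite, asks[opposite])]
        return []
```

While a cycle is open, crash signals are ignored. This mirrors the rule that the Leg 1 side is never bought twice.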

shares: the position size (number of shares) used for both legs.

sum (sumTarget): the maximum combined price at which hedging is allowed.

move (movePct): the drop threshold (e.g., 0.15 = 15%).

windowMin: how long (in minutes) after the start of each round Leg 1 is allowed to trigger.
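A minimal Python sketch of parsing the auto on command with the defaults shown above. `AutoParams`, `parse_auto_on` and the validation bounds are assumptions for illustration, not taken from the article.

```python
from dataclasses import dataclass


@dataclass
class AutoParams:
    shares: float
    sum_target: float = 0.95  # sum=
    move_pct: float = 0.15    # move=
    window_min: float = 2.0   # windowMin=


# Option keys accepted on the command line, matched case-insensitively.
_OPTION_FIELDS = {"sum": "sum_target", "move": "move_pct", "windowmin": "window_min"}
_USAGE = "usage: auto on <shares> [sum=0.95] [move=0.15] [windowMin=2]"


def parse_auto_on(line: str) -> AutoParams:
    tokens = line.split()
    if len(tokens) < 3 or [t.lower() for t in tokens[:2]] != ["auto", "on"]:
        raise ValueError(_USAGE)
    params = AutoParams(shares=float(tokens[2]))
    for option in tokens[3:]:
        key, sep, value = option.partition("=")
        field_name = _OPTION_FIELDS.get(key.lower())
        if not sep or field_name is None:
            raise ValueError(f"unrecognized option {option!r}; {_USAGE}")
        setattr(params, field_name, float(value))
    # Sanity checks (assumed bounds, not taken from the article).
    if params.shares <= 0:
        raise ValueError("shares must be positive")
    if not 0 < params.sum_target < 1:
        raise ValueError("sum must be between 0 and 1, otherwise a hedge cannot lock in a profit")
    if not 0 < params.move_pct < 1:
        raise ValueError("move is a fraction, e.g. 0.15 for 15%")
    if not 0 < params.window_min <= 15:
        raise ValueError("windowMin must fit inside a 15-minute round")
    return params
```

For example, parse_auto_on("auto on 20 sum=0.6 move=0.01 windowMin=15") yields the aggressive configuration tested in the backtest.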

Backtesting

The bot's logic is simple: wait for a violent sell-off, buy the side that just dropped, then wait for prices to settle and hedge by buying the opposite side, while ensuring that priceUP + priceDOWN < 1. Because exactly one side pays out $1 per share at settlement, a combined cost below 1 locks in a profit whatever the outcome.

However, this logic needs to be tested. Does it really work over the long run? More importantly, the bot has many parameters (number of shares, sum target, drop percentage, window duration, etc.). Which set of parameters is optimal and maximizes profit?
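The arithmetic behind that condition can be worked out as follows. This assumes both legs buy the same number of shares q (the shares parameter) and ignores fees and spread. The prices 0.40 and 0.54 are illustrative, not taken from the article's data.

$$
\begin{aligned}
\text{PnL} &= q \cdot 1 - q\,(p_{\mathrm{UP}} + p_{\mathrm{DOWN}}) = q\,\bigl(1 - (p_{\mathrm{UP}} + p_{\mathrm{DOWN}})\bigr) \\
p_{\mathrm{UP}} + p_{\mathrm{DOWN}} \le 0.95,\ q = 20 \;&\Rightarrow\; \text{PnL} \ge 20\,(1 - 0.95) = \$1.00 \\
p_{\mathrm{UP}} = 0.40,\ p_{\mathrm{DOWN}} = 0.54 \;&\Rightarrow\; \text{PnL} = 20\,(1 - 0.94) = \$1.20
\end{aligned}
$$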

My first idea was to run the bot live for a week and observe the results. The problem is that this takes too long and only tests one set of parameters, whereas I needed to test many.

My second idea was to backtest on historical data from the Polymarket CLOB API. Unfortunately, for the BTC 15-minute UP/DOWN market, the historical data endpoint consistently returned an empty dataset. Without historical price ticks, the backtest could not detect a "crash within about 3 seconds," so Leg 1 never triggered, giving 0 cycles and 0% ROI regardless of the parameters.

Digging further, I found that other users had hit the same issue when retrieving historical data for certain markets. Other markets I tested did return historical data, so I concluded that this particular market's history is simply not retained.

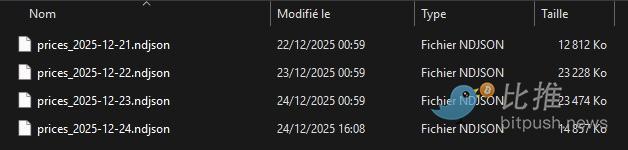

Given this limitation, the only reliable way to backtest the strategy was to build my own historical dataset by recording the best ask in real time while the bot runs.

The logger writes a snapshot to disk once per second, containing:

Timestamp

Round slug

Remaining seconds

UP/DOWN Token ID

UP/DOWN best ask price

The "recorded backtest" process then replays these snapshots and deterministically applies the same automated logic, so the high-frequency data needed to detect crashes and hedge conditions is available.
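A minimal Python sketch of such a logger. It assumes one JSON object per line (JSON Lines), which the article does not specify. The field names, `Snapshot`, `write_snapshot` and `read_snapshots` are hypothetical.

```python
import io
import json
from dataclasses import asdict, dataclass
from typing import IO, Iterator


@dataclass
class Snapshot:
    ts: float                 # Unix timestamp (seconds)
    slug: str                 # round slug
    seconds_remaining: float  # seconds left in the 15-minute round
    up_token_id: str
    down_token_id: str
    up_best_ask: float
    down_best_ask: float


def write_snapshot(fh: IO[str], snap: Snapshot) -> None:
    """Append one snapshot as a compact JSON line."""
    fh.write(json.dumps(asdict(snap), separators=(",", ":")) + "\n")


def read_snapshots(fh: IO[str]) -> Iterator[Snapshot]:
    """Yield snapshots back in file order, skipping blank lines."""
    for line in fh:
        line = line.strip()
        if line:
            yield Snapshot(**json.loads(line))


if __name__ == "__main__":
    # Round-trip through an in-memory buffer; a real logger would append to a file.
    buf = io.StringIO()
    write_snapshot(buf, Snapshot(1700000000.0, "example-round", 842.0, "tok-up", "tok-down", 0.51, 0.50))
    buf.seek(0)
    print(list(read_snapshots(buf)))
```

One line per snapshot keeps writes append-only and lets a replay stream the file without loading gigabytes into memory.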
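A condensed Python sketch of such a deterministic replay, under these assumptions:

- The drop is measured from the highest ask in the last `lookback_s` seconds.
- Rounds last 900 s.
- Fees and spread are ignored.
- A hedged pair is credited $1 per share immediately, since one side must pay out.
- An unhedged Leg 1 is never repaid, which matches the pessimistic rule the article describes among the backtest's limitations.

`Tick`, `Params` and `replay` are hypothetical names, not the author's code.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, Iterable, Optional, Tuple


@dataclass
class Tick:
    ts: float                 # seconds
    slug: str                 # round identifier
    seconds_remaining: float
    up_ask: float
    down_ask: float


@dataclass
class Params:
    shares: float = 20
    sum_target: float = 0.95
    move_pct: float = 0.15
    window_min: float = 2.0
    lookback_s: float = 3.0     # assumption: "about 3 seconds"
    round_len_s: float = 900.0  # 15-minute rounds


def replay(ticks: Iterable[Tick], p: Params, balance: float) -> float:
    """Replay recorded ticks in order and return the final balance (no fees/spread)."""
    history: Dict[str, Deque[Tuple[float, float]]] = {"up": deque(), "down": deque()}
    slug: Optional[str] = None
    leg1: Optional[Tuple[str, float]] = None  # (side, entry price) while a cycle is open

    for t in ticks:
        if t.slug != slug:
            # New round: drop any open cycle. Its cost was already paid and is never
            # repaid, i.e. an unhedged Leg 1 counts as a total loss.
            slug, leg1 = t.slug, None
            for h in history.values():
                h.clear()

        asks = {"up": t.up_ask, "down": t.down_ask}
        crashed: Optional[str] = None
        for side, ask in asks.items():
            h = history[side]
            h.append((t.ts, ask))
            while t.ts - h[0][0] > p.lookback_s:
                h.popleft()
            peak = max(a for _, a in h)
            if crashed is None and peak > 0 and (peak - ask) / peak >= p.move_pct:
                crashed = side

        elapsed = p.round_len_s - t.seconds_remaining
        if leg1 is None:
            # Leg 1: only within the first window_min minutes of the round.
            if crashed is not None and elapsed <= p.window_min * 60:
                leg1 = (crashed, asks[crashed])
                balance -= p.shares * asks[crashed]
        else:
            # Leg 2: hedge once leg1 entry + opposite ask <= sum_target.
            side, entry = leg1
            opposite = "down" if side == "up" else "up"
            if entry + asks[opposite] <= p.sum_target:
                balance -= p.shares * asks[opposite]
                balance += p.shares * 1.0  # the hedged pair redeems $1 per share
                leg1 = None
    return balance
```

Because the replay reads only recorded data and has no randomness, running it twice with the same Params gives the same result. That is what makes sweeping many parameter sets over the same 4 days meaningful.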

I collected a total of 6 GB of data over 4 days. I could have logged more, but I thought that was enough to test different sets of parameters.

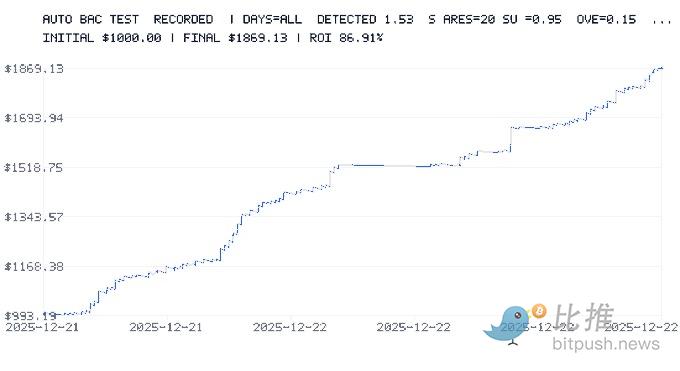

I started with this set of parameters:

Initial balance: $1,000

20 shares per transaction

sumTarget = 0.95

movePct = 0.15 (crash threshold: a 15% drop)

windowMin = 2 minutes

I also applied a constant 0.5% fee rate and a 2% spread to keep the scenario conservative.

Backtesting showed an ROI of 86%, and $1,000 turned into $1,869 in just a few days.
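The article does not say exactly how the 0.5% fee and 2% spread were applied. This hypothetical Python sketch uses one plausible reading. The spread raises each fill price proportionally, capped at $1. The fee is charged on the traded notional. `fill_cost`, `hedged_pnl` and `breakeven_sum` are illustrative names.

```python
def fill_cost(shares: float, ask: float, fee_rate: float = 0.005, spread: float = 0.02) -> float:
    """Cash paid to buy `shares` at `ask` under the assumed cost model."""
    # Assumption: the spread worsens the fill price proportionally; a share never costs more than $1.
    fill_price = min(ask * (1 + spread), 1.0)
    # Assumption: the fee is a flat percentage of the traded notional.
    return shares * fill_price * (1 + fee_rate)


def hedged_pnl(shares: float, leg1_ask: float, leg2_ask: float, **costs: float) -> float:
    """Net result of a completed cycle: one side redeems at $1 per share."""
    return shares - fill_cost(shares, leg1_ask, **costs) - fill_cost(shares, leg2_ask, **costs)


def breakeven_sum(fee_rate: float = 0.005, spread: float = 0.02) -> float:
    """Largest leg1 + leg2 ask sum that still breaks even (ignoring the $1 cap)."""
    return 1.0 / ((1 + spread) * (1 + fee_rate))
```

Under these assumptions, a hedged pair only breaks even if the two asks sum to less than about 0.9755 (1 / (1.02 × 1.005)). A sum of 0.95 therefore still leaves a positive margin: about 2.6 cents per share at exactly 0.95, versus 5 cents without costs.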

Then I tested a more aggressive set of parameters:

Initial balance: $1,000

20 shares per transaction

sumTarget = 0.6

movePct = 0.01 (crash threshold: a 1% drop)

windowMin = 15 minutes

Result: The return on investment was -50% after 2 days.

This shows how critical parameter selection is: the same strategy can make a lot of money or lose a lot.

Limitations of backtesting

Even with fees and spread included, the backtest has clear limitations.

First, it uses only a few days of data, which may not capture the full range of market conditions.

It relies on recorded best-ask snapshots; in reality, orders may be partially filled or filled at different prices. Order book depth and available volume are not modeled either.

Sub-second moves are not captured, because data is sampled once per second, and a lot can happen within a single second.

Slippage is modeled as a constant, and there is no simulation of variable latency (e.g., 200–1500 ms) or network spikes.

Every trade is assumed to execute instantly (no order queues, no resting orders).

Fees are applied uniformly, but in reality they can depend on the market/token, on whether you are the maker or the taker, on your fee tier, and on other conditions.

To stay pessimistic, I applied one rule: if Leg 2 has not executed by the time the market closes, Leg 1 is counted as a total loss.

This is deliberately conservative, but it doesn't always reflect reality:

Sometimes Leg 1 can be closed early.

Sometimes it ends up in-the-money (ITM) and wins.

Sometimes the loss can be partial rather than complete.

Losses may therefore be overestimated, but this gives a useful worst-case scenario.
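The pessimistic settlement rule can be written as a small Python function, alongside the outcome if an unhedged Leg 1 is instead held to resolution. `cycle_pnl` and its arguments are hypothetical. Fees are ignored, and closing Leg 1 early is not modeled.

```python
from typing import Optional


def cycle_pnl(
    shares: float,
    leg1_price: float,
    leg2_price: Optional[float] = None,
    leg1_won: Optional[bool] = None,
) -> float:
    """PnL of one cycle, ignoring fees.

    leg2_price: fill price of the hedge, or None if Leg 2 never executed.
    leg1_won:   outcome of an unhedged Leg 1; None applies the pessimistic rule.
    """
    leg1_cost = shares * leg1_price
    if leg2_price is not None:
        # Hedged: exactly one of the two sides redeems at $1 per share.
        return shares - leg1_cost - shares * leg2_price
    if leg1_won is None:
        # Pessimistic backtest rule: an unhedged Leg 1 is a total loss.
        return -leg1_cost
    # Realistic outcome if held to resolution: the winning side pays $1 per share.
    return (shares if leg1_won else 0.0) - leg1_cost
```

For 20 shares bought at 0.41, the rule books -$8.20. If that side had actually won, the real result would be +$11.80. This is the overestimation described above.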

Most importantly, the backtest cannot simulate the market impact of your own large orders, or other traders reacting to them and targeting you. In reality, your orders can:

Move the order book.

Attract or repel other traders.

Cause nonlinear slippage.

The backtest assumes you are a pure price taker whose orders have no market impact.

Finally, it does not simulate rate limits, API errors, order rejections, pauses, timeouts, reconnections, or cases where the bot is busy and misses a signal.

Backtesting is extremely valuable for identifying a good range of parameters, but it is no guarantee, because some real-world effects cannot be modeled.

Infrastructure

I plan to run the bot on a Raspberry Pi so it doesn't consume my main machine's resources and can run 24/7.

However, there is still significant room for improvement:

Rewriting it in Rust instead of JavaScript should give much better performance and lower processing times.

Running a dedicated Polygon RPC node would further reduce latency.

Hosting on a VPS close to Polymarket's servers would also cut latency significantly.

There are surely other optimizations I haven't discovered yet. In the meantime, I'm learning Rust, since it is becoming an essential language in Web3 development.
