This article was written in collaboration with the Ingonyama team. Special thanks to @jeremyfelder for making this possible.

Hardware accelerated ZKML

We recently integrated the open source Icicle GPU acceleration library, built by the Ingonyama team, into EZKL. This enables developers to take advantage of hardware acceleration through simple environment configuration.

This integration is a strategic enhancement to the EZKL engine and addresses a major computational bottleneck in current ZK proof systems. It is particularly useful for large circuits, such as those generated for machine learning models.

We observe that the MSM time of the aggregated circuit is reduced by 98% compared to the baseline CPU run, and the total aggregated proof time is reduced by 35% compared to the baseline CPU proof time.

This is the first step towards full hardware integration. Together with the Ingonyama team, we continue to work on full support for GPU operations. In addition, we are working on integrating with other hardware vendors, with the aim of producing tangible benchmarks for the wider field.

We provide context and technical specifications below. You can also go directly to the library here.

Zero knowledge bottleneck

To quote Hardware Review: GPUs, FPGAs and Zero-Knowledge Proofs, there are two components to a zero-knowledge application:

1. DSLs and low-level libraries: They are essential for expressing computations in a ZK-friendly way. Examples include Circom, Halo2, Noir, and low-level libraries like Arkworks. These tools convert programs into constraint systems (or read more here), where operations like addition and multiplication are represented as individual constraints. Bit operations are trickier and require more constraints.

2. Proof systems: They play a vital role in generating and verifying proofs. A proof system takes inputs such as circuits, witnesses, and parameters. General-purpose systems include Groth16 and PLONK, while specialized systems like EZKL cater to specific inputs such as machine learning models.

In the most widely deployed ZK systems, the main bottlenecks are:

• Multi-scalar multiplication (MSM): Multiplies a large vector of elliptic curve points by a vector of scalars and sums the results, which consumes a lot of time and memory even when parallelized.

• Fast Fourier Transform (FFT): An algorithm that requires frequent shuffling of data, making it difficult to speed up, especially on distributed infrastructure.
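
To make the MSM bottleneck concrete, here is a minimal Python sketch of the bucket (Pippenger-style) method that many MSM implementations build on. As a stand-in for elliptic curve points it uses integers under addition modulo a prime, an assumption made purely to keep the example short. The function names, modulus, and window size are illustrative and are not taken from EZKL or Icicle.

```python
# Toy multi-scalar multiplication (MSM): computes sum(k_i * P_i).
# Assumption: "points" are integers added modulo Q, standing in for
# elliptic curve points so the example stays short and runnable.
Q = 2**61 - 1  # toy group modulus (illustrative only)

def group_add(a, b):
    return (a + b) % Q

def naive_msm(scalars, points):
    """Reference: one double-and-add scalar multiplication per term."""
    acc = 0
    for k, p in zip(scalars, points):
        term, base = 0, p
        while k:
            if k & 1:
                term = group_add(term, base)
            base = group_add(base, base)
            k >>= 1
        acc = group_add(acc, term)
    return acc

def bucket_msm(scalars, points, window_bits=4):
    """Bucket method: process the scalars one window of bits at a time."""
    max_bits = max(k.bit_length() for k in scalars) if scalars else 0
    num_windows = max(1, -(-max_bits // window_bits))  # ceiling division
    result = 0
    for w in reversed(range(num_windows)):
        # Shift the running result left by one window (repeated doubling).
        for _ in range(window_bits):
            result = group_add(result, result)
        # Sort points into buckets keyed by the current window's digit.
        buckets = [0] * (1 << window_bits)
        for k, p in zip(scalars, points):
            digit = (k >> (w * window_bits)) & ((1 << window_bits) - 1)
            if digit:
                buckets[digit] = group_add(buckets[digit], p)
        # Compute sum(d * buckets[d]) with additions only (running sum).
        running, window_sum = 0, 0
        for d in range(len(buckets) - 1, 0, -1):
            running = group_add(running, buckets[d])
            window_sum = group_add(window_sum, running)
        result = group_add(result, window_sum)
    return result
```

Filling the buckets is independent for each window and needs only group additions, which is one reason MSM maps well onto massively parallel hardware.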

The role of hardware

Hardware accelerators such as GPUs and FPGAs provide significant advantages over software optimization alone by enhancing parallelism and optimizing memory access:

• GPU: Provides rapid development and massive parallelism, but consumes a lot of power.

• FPGA: Offers lower latency, especially for large data streams, and is more energy efficient, but has a complex development cycle.

For a more extensive discussion of optimal hardware design and performance, see here. The field is evolving rapidly and many methods remain competitive.

GPU acceleration for Halo2

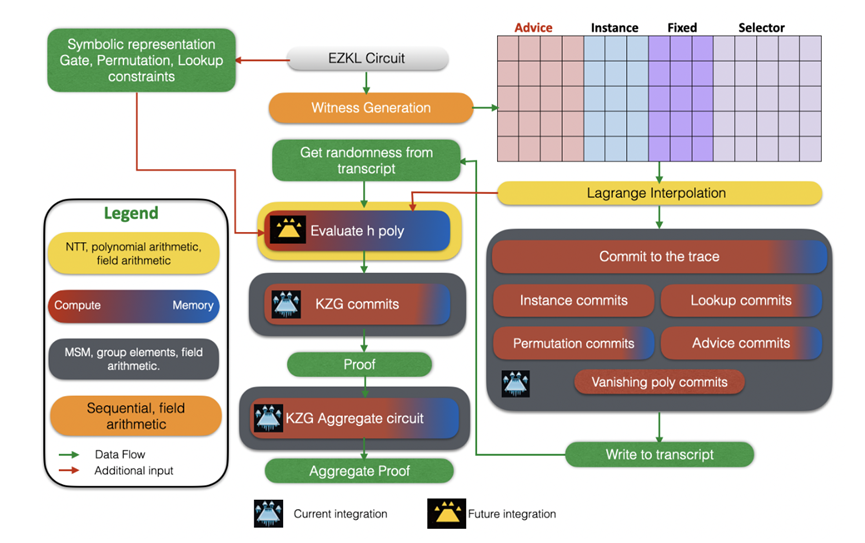

In Halo2 proof systems, bottlenecks vary depending on the specific circuit being proven. These bottlenecks mainly fall into two categories:

1. Commitment bottlenecks (MSM): These are primarily computational bottlenecks and are usually parallelizable. In circuits where the MSM is the bottleneck, we observe a certain degree of generality. This means that applying GPU-accelerated solutions can effectively address these bottlenecks with minimal modifications to the existing code base.

2. Constraint evaluation bottlenecks (especially in h-poly): These bottlenecks are more complex because they can be computationally or memory-intensive. They depend heavily on the details of the circuit. Addressing these issues requires a tailored redesign of the evaluation algorithm. The focus here is on optimizing the trade-off between memory usage and computation, and deciding whether to store intermediate results or recompute them.

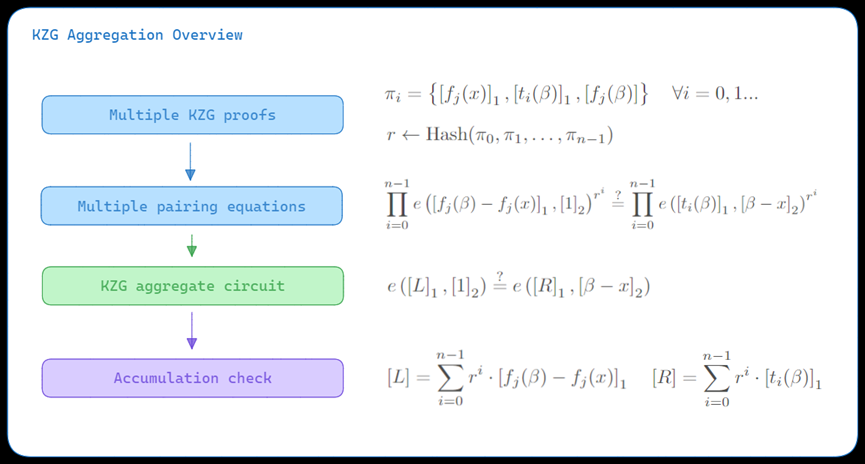

A typical example is the KZG aggregation circuit. In such circuits, the main challenge lies in the accumulation of elliptic curve group elements. The constraints in these cases are relatively uniform and low-degree (e.g., the incomplete addition formula with degree-4 constraints, according to the Halo2 documentation).

Therefore, most of the complexity comes from commitment (MSM), a computational problem that can be efficiently solved with GPU acceleration.

For the scope of this integration, we chose to focus on commitment bottlenecks. This is low-hanging fruit and an optimization of a core component of the engine (KZG aggregation). It is only the first step, and there is still much work to be done.

Icicle: CUDA-enabled GPU

The Ingonyama team developed Icicle as an open source library for accelerating ZK workloads on CUDA-enabled GPUs. CUDA (Compute Unified Device Architecture) is a parallel computing platform and API model created by NVIDIA. It allows software to use NVIDIA GPUs for general-purpose processing. The main goal of Icicle is to offload significant parts of the prover code to the GPU and take advantage of its parallel processing capabilities.

Icicle exposes APIs in Rust and Golang, which simplifies integration. The design is also customizable, with the following layers:

• High-level API: for common tasks such as committing to, evaluating, and interpolating polynomials.

• Low-level API: for specific operations, such as multi-scalar multiplication (MSM), the number theoretic transform (NTT), and the inverse number theoretic transform (INTT).

• GPU kernels: for optimized execution of specific tasks on the GPU.

Icicle also supports basic building blocks such as:

• Vectorized field/group arithmetic: Efficient handling of mathematical operations on fields and groups.

• Polynomial arithmetic: key to many ZK algorithms.

• Hash functions: critical for cryptographic applications.

• Complex structures: such as the (inverse) elliptic curve number theoretic transform (I/ECNTT), batched MSM, and Merkle trees.

Integration with EZKL

The Icicle library has been seamlessly integrated with the EZKL engine, providing GPU acceleration for users with direct access to NVIDIA GPUs or simply access to Colab. This integration enhances the performance of the EZKL engine by leveraging the parallel processing capabilities of the GPU. Here's how to enable and manage this feature:

• Enable GPU acceleration: To enable GPU acceleration, build EZKL with the Icicle feature and set the environment variable as follows:

export ENABLE_ICICLE_GPU=true

• Reverting to CPU: To switch back to CPU processing, simply unset the ENABLE_ICICLE_GPU environment variable instead of setting it to false:

unset ENABLE_ICICLE_GPU

• Customizing the threshold for small circuits: To change the threshold below which a circuit counts as small, set the ICICLE_SMALL_K environment variable to the desired value. This gives greater control over when GPU acceleration is used.
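
The way these two variables interact can be sketched as follows. This is a hypothetical Python model, not EZKL's actual code. The function name `should_use_gpu` is ours, as is the assumption that the toggle checks only whether ENABLE_ICICLE_GPU is set (which would explain why it must be unset rather than set to false). The default threshold of 8 follows the k > 8 default described in this article.

```python
import os

DEFAULT_SMALL_K = 8  # default from this article: GPU only for k > 8

def should_use_gpu(k, env=None):
    """Hypothetical model of the CPU/GPU decision for a circuit of size k."""
    env = os.environ if env is None else env
    # Assumption: only the presence of the variable matters, not its value.
    if "ENABLE_ICICLE_GPU" not in env:
        return False
    # Assumption: circuits with k at or below the threshold stay on the CPU.
    small_k = int(env.get("ICICLE_SMALL_K", DEFAULT_SMALL_K))
    return k > small_k
```

Under this model, `should_use_gpu(20, {"ENABLE_ICICLE_GPU": "false"})` still returns `True`, which illustrates why unsetting the variable is the safe way to return to the CPU.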

Overview of current ICICLE integration. This integration targets computational bottlenecks due to MSM, with significant effects seen in KZG aggregation circuits.

Key functions

This integration provides several capabilities.

Most importantly, the integration supports plug-and-play MSM operations on the GPU using the Icicle library. As a target and test environment, we focused on replacing the CPU-based KZG commitments in the EZKL aggregate command, which combines multiple proofs into one. More specifically, the KZG commitment functions commit and commit_lagrange, previously computed on the CPU, now use MSM operations on the BN254 elliptic curve computed on the GPU.

We also added environment variables and crate features that let developers switch between CPU and GPU for different circuits within the same EZKL binary/build. To avoid paying GPU overhead on circuits too small to benefit, GPU acceleration is enabled by default only for large-k circuits (k > 8).

Benchmark results

Our benchmark results show that integrating the Icicle library into the EZKL engine leads to substantial performance improvements:

• Significant reduction in MSM time: We observed an approximately 98% reduction in multiscalar multiplication (MSM) time compared to the baseline CPU run of the aggregated circuit. This demonstrates efficient offloading of computing tasks to the GPU.

• Significantly faster MSM operations: Icicle performs MSM operations about 50 times faster on average than the baseline CPU configuration. This speedup is consistent across most MSMs in the aggregate command.

• Overall reduction in proof time: The overall time required to generate aggregate proofs is reduced by approximately 35% compared to baseline CPU proof times. This reflects a significant overall performance enhancement during proof generation.
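
These figures are consistent with each other, as a quick back-of-the-envelope check shows. The sketch below assumes that all of the end-to-end savings come from faster MSMs and that the GPU adds no other overhead, which is a simplification. The variable names are illustrative.

```python
# Reported figures from the benchmarks above.
msm_time_reduction = 0.98    # MSM time reduced by ~98%
total_time_reduction = 0.35  # aggregate proof time reduced by ~35%

# A 98% reduction leaves 2% of the original time, i.e. a ~50x speedup.
msm_speedup = 1 / (1 - msm_time_reduction)

# If MSMs took a fraction f of the CPU baseline, the saving is
# f * 0.98 = 0.35, so f is roughly 0.36 (Amdahl's law rearranged).
msm_fraction_of_baseline = total_time_reduction / msm_time_reduction

# Upper bound on the end-to-end speedup from MSM acceleration alone,
# reached only if MSM time dropped all the way to zero.
max_total_speedup = 1 / (1 - msm_fraction_of_baseline)

print(f"MSM speedup: {msm_speedup:.0f}x")
print(f"Implied MSM share of baseline: {msm_fraction_of_baseline:.1%}")
print(f"Speedup ceiling from MSM alone: {max_total_speedup:.2f}x")
```

Under these assumptions, MSMs account for roughly a third of the baseline aggregation time, so the remaining CPU work bounds any further gains until it is offloaded as well.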

These results highlight the effectiveness of GPU acceleration in optimizing ZK proof systems, especially on computational aspects such as MSM operations. To verify, you can check out our continuous integration tests here.

Future directions

Going forward, we plan to further optimize and expand EZKL's integration with Icicle:

• Scaling GPU operations: One area of focus is moving more CPU operations to the GPU. This includes number theoretic transforms (NTT), which currently run on the CPU. By offloading these operations to the GPU, we expect to achieve greater efficiency and speed.

• Introduction of batch operations: Another important development is the addition of batch operations. This enhancement is specifically designed to enable efficient use of the GPU even in smaller and wider circuits. By doing so, we aim to extend the benefits of GPU acceleration to a wider range of circuit types and sizes, ensuring optimal performance in all scenarios.

More broadly, we want to integrate with other hardware systems. This would provide practical benchmarks and developer flexibility for the wider field.

Through these future developments, we aim to continue to push the performance boundaries of ZK proof systems, making them more efficient and suitable for a wider range of applications.

Appendix

Future integration considerations

For contributors and developers: we tested this integration using the aggregate command tutorial on a custom instance with four proofs. Some notes for future integrations:

• Benchmark environment: The baseline CPU tests ran on an AWS c6a.8xlarge instance with an AMD EPYC 7R13 CPU.

• Aggregate command tutorial: We validated the performance of the integration using the aggregate command tutorial, with a test instance of four proofs for the baseline comparison.

• Initial testing on a single MSM instance: Testing initially focused on a single MSM instance in the EZKL/halo2 crate to verify functionality.

• Issues with GPU context in complete proofs: When extending to complete proofs, we found that the GPU context was lost after a single operation. The fix was to create a static reference that keeps the GPU context alive throughout the prove command.

• Focus on aggregation circuits/commands: This integration mainly focuses on aggregation circuits/commands, which are characterized by a large K (the base-2 logarithm of the number of rows) and a small number of advice columns.

• Impact on the prove command: The code changes also affect the prove command. It is necessary to ensure that the performance of individual proofs is maintained or improved with these changes.

• Performance changes based on circuit size and width: For large, narrow circuits, GPU acceleration produced positive results. However, for smaller (K≤8) and wider circuits, GPU acceleration resulted in performance degradation.

• Future improvements in general optimization: We plan to enhance GPU integration for all circuit types, with a particular focus on batch operations to achieve good performance across a variety of circuit sizes.
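
The GPU context fix noted above follows a common pattern: create the expensive handle once and keep a long-lived reference to it. The article describes a static reference. The sketch below shows the same idea in Python with hypothetical names (`GpuContext`, `get_gpu_context`, `run_msm`) that do not correspond to any real Icicle or EZKL API.

```python
import functools

class GpuContext:
    """Hypothetical stand-in for an expensive-to-create GPU handle."""
    instances_created = 0

    def __init__(self):
        GpuContext.instances_created += 1

@functools.lru_cache(maxsize=None)
def get_gpu_context():
    # Created on first use, then cached for the life of the process,
    # so every MSM in a proof reuses the same context.
    return GpuContext()

def run_msm(scalars, points):
    ctx = get_gpu_context()  # same object on every call
    # Placeholder computation on the CPU; a real version would use ctx.
    return sum(k * p for k, p in zip(scalars, points)), ctx
```

Calling `run_msm` repeatedly creates only one `GpuContext`, which mirrors keeping the context alive across every operation in a prove command.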