Over the past year, I have spent a lot of money on AI. It is not easy being a trendsetter these days.

To learn how to make illustrations, I paid Midjourney US$10 a month. Later I also subscribed to ChatGPT Plus at US$20 a month, so I could chat freely without waiting during peak hours. After that came Perplexity, which cost even more.

Well, the boss’s expectations... | Giphy

My boss praised me: "You're well equipped with AI, but could your work efficiency go up a bit? Can't you write a manuscript, make data charts, and write the weekly email all at the same time?"

Trust the boss to ask the question that leaves me stumped.

Fortunately, Google has just launched a new plan: for $19.99 a month, users can subscribe to Google One AI Premium and use Gemini inside Gmail, Docs, Sheets, Slides, and Meet. In other words, you get Gemini's AI capabilities across Google's whole suite of office apps.

A $20 package made just for office workers... | Google

Once again, the out-of-pocket cost of doing my job has gone up. I wonder what the boss will say this time.

Both are $20. Which one is worth more?

The price is the same, US$20, but the services differ.

With ChatGPT Plus, you can use GPT-4 and DALL-E 3. The most exciting part, of course, is the App Store-like ecosystem OpenAI has built (the GPT Store): you can use "tutor bots" made by others to teach your kids math, then build your own "reading guide" (and suddenly the house is a lot quieter). You can also use Zapier to connect other apps from within ChatGPT, for example linking your calendar to text messages so colleagues are notified of schedules.

However, if you are more used to working in traditional office software, you are probably tired of switching back and forth between the ChatGPT web page and your documents.

So how about making AI more "practical", bringing it into the work and lives of ordinary people like you and me with as little learning as possible?

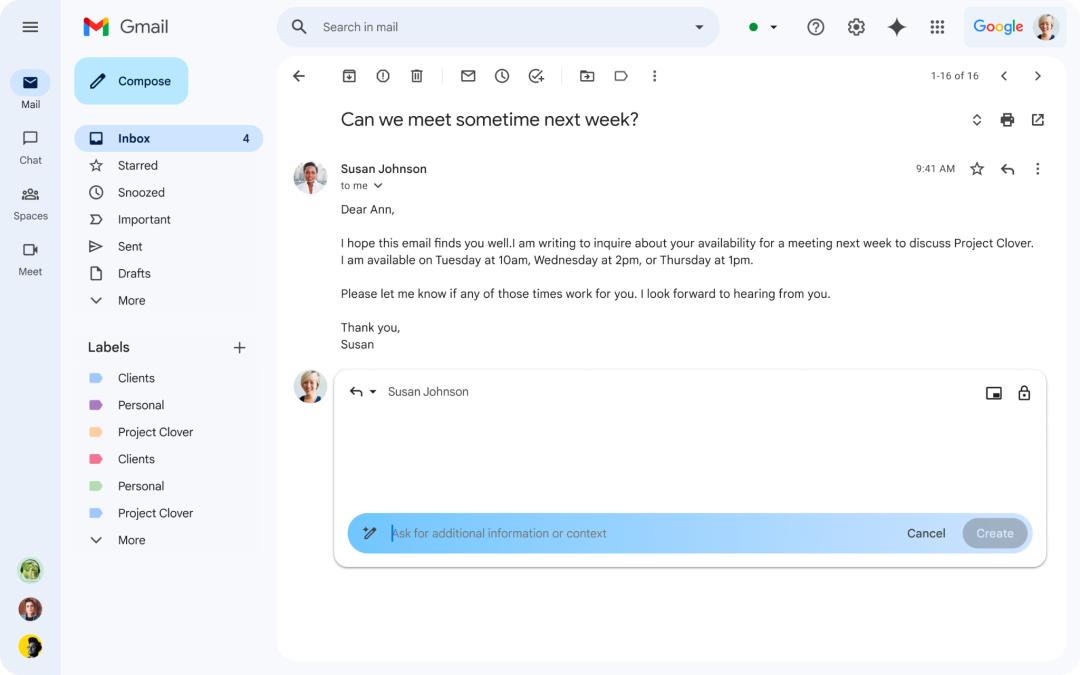

Google's answer is the newly released Gemini Advanced, which can generate text and summarize documents in Docs, analyze data and generate charts in Sheets, help you phrase things you are unsure how to say in Gmail, and more.

Owning the whole office suite gives Google a unique edge in everyday use | Google

To use Gemini Advanced, you need to subscribe to Google One AI Premium. Google One is Google's paid cloud storage service, with storage shared across its apps such as Gmail, Drive, and Photos, so the roughly $20 AI Premium price also includes 2 TB of storage.

$20 each isn't expensive, but it all adds up... | Google

Gemini Advanced is powered by Gemini Ultra 1.0, Google's largest and most capable model yet, designed for highly complex tasks. The other two model sizes are Gemini Pro, the best model for scaling across a wide range of tasks, and Gemini Nano, the most efficient model for on-device tasks.

Besides Google and OpenAI, Microsoft offers another "$20 package". Not long ago it launched Copilot Pro for individual users: for the same US$20 a month, you get Copilot's AI capabilities in Word, Excel, PowerPoint, and other apps.

If you can’t arm yourself, at least arm your phone first

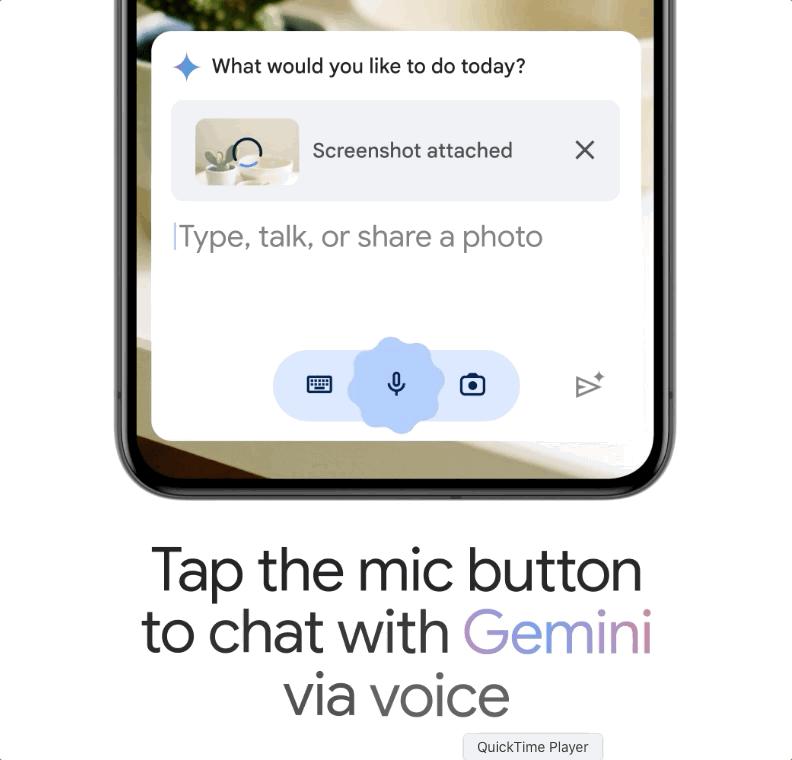

Two months ago, Google released Gemini, a multimodal AI model. "Multimodal" means the model can take in, understand, and produce multiple forms of information, such as text, speech, and images, at the same time, which is closer to how humans naturally understand and interact with the world.

Google's earlier ChatGPT-like product was called Bard; it has now been renamed Gemini, so the product and the model share the same name, and the domain has moved from bard.google.com to gemini.google.com. Besides the web version, there will be a Gemini app for Android, while iOS users can try it in the Google app. The web version of Bard (soon to be called Gemini) currently runs on the Gemini Pro model, supports conversation in more than 40 languages including Chinese, and can generate images from text (English prompts only).

From now on, everything is called Gemini | Giphy

In Google's demo, you take a photo with your phone and ask Gemini what is in it, and it replies with something like: "The prickly cactus adds a touch of desert flavor; simple shapes and natural textures create a calm harmony." (If only this had existed when I was in elementary school, I wouldn't have kept flunking those "look at the picture and write an essay" assignments.)

AI on mobile phones is what more people are looking forward to | Google

Although the demo showed nothing more, we can expect apps like this to make it much easier to observe and understand our surroundings. You could ask whether a flower by the roadside belongs to the cruciferous family or the cactus family; it could summarize a "long speech" you just heard; or, using a distance sensor and the camera, it could tell a blind person more than "there is an obstacle ahead", for example "it is still five steps away."

Both the web and mobile versions will add more modalities in the future and gradually expand to new languages and regions.

Having a model isn't enough; everyone has to be able to use it

When Google published Gemini's benchmark results earlier, Gemini Ultra whetted everyone's appetite:

Gemini Ultra exceeds current state-of-the-art (SOTA) results on 30 of the 32 academic benchmarks widely used in large language model research and development.

Gemini Ultra scored 90.0% on MMLU (Massive Multitask Language Understanding), making it the first model to outperform human experts on this benchmark. MMLU draws on 57 subjects, including mathematics, physics, history, law, medicine, and ethics, to test world knowledge and problem-solving ability.

On image benchmarks, Gemini Ultra outperforms the best previous models without the help of optical character recognition (OCR).

Building on these results, "Gemini Advanced will provide a new experience that performs better in reasoning, following instructions, coding, and creative collaboration." At the model level, strengthening reasoning and multimodal capabilities has become a basic industry consensus.

Gemini Ultra is finally here; GPT-5 doesn't have much time left | Google

As mentioned above, multimodal AI lets different modes of perception complement one another, building as complete a description of the real world as possible. It could be more than a proofreading tool that fixes typos: it could act as an "editor" tailored to your own style, or draft outlines and develop strategies from the complex, unstructured data you provide.

There is plenty of room for imagination. Google says it has already begun training the next version of Gemini, and that this goes beyond "creating user interfaces": from consumer products to developer APIs, Gemini is growing into an ecosystem.

This article originally appeared on the WeChat official account "Guokr42" (ID: Guokr42), written by Shen Zhihan, and is republished by 36Kr with permission.