Translator's Preface: MUD v2 has recently released a stable version, bringing Ethereum one step closer to its vision of a "world computer". The original article was written in October 2015. The author reviews how computers and the Internet have profoundly affected large-scale collaboration in human society for more than half a century, analyzes the problems in this collaboration model, and proposes blockchain (Ethereum) as the best solution, pointing to its huge potential as a world computer. The article involves hardly any technical details and is very friendly to readers without a technical background.

Look, Skynet!

Ethereum provokes strong emotions. Some have compared it to Skynet, the distributed artificial intelligence in the "Terminator" movies. Others once thought the whole thing was just a pipe dream. The network has now been operating for several months and shows no signs of hostile self-awareness or complete collapse.

But if you're not very technical, or technical in a different field, it's easy to stare at all this stuff and think, "I'll figure this out later," or decide to ignore it.

In fact, it is not difficult to understand Ethereum, blockchains, Bitcoin and all the rest, at least in terms of their impact on people's daily work and life. Even programmers who want a clear picture can fairly easily get a good enough model of how it all fits together. Blockchain explainers often focus on very clever low-level details like mining, but these don't actually help people (other than miners) understand what's going on. So let’s look at the more general story of how blockchains fit into computing and affect society.

Often, to understand the present, we have to start from the past: blockchain is the third act of the drama, and we are at the beginning of that third act. So we have to review the first two.

SQL: Yesterday’s best ideas

The real blockchain story begins in the 1970s, when databases as we know them were created: relational models, SQL, big racks of spinning tape drives, all that stuff. If you picture big white rooms with expensive beige monoliths guarded by men in ties, then you're in the right corner of history. In the "Big Iron Age" (Translator's Note: the Big Iron Age refers to the early stage of computer technology, specifically the era of mainframes and supercomputers. These large computers are often called "big iron" because they are very large and bulky and require a lot of physical space and energy to run), large organizations paid IBM and others big bucks for large databases and put all of their most valuable data assets into these systems: their institutional memory and customer relationships.

The SQL language that powers most of the content management systems running the Web was originally a command language for tape drives. Fixed field lengths, a bit like the 140-character limit for tweets, were originally designed to let impatient programs fast-forward a tape a known distance at super high speed in order to place the tape head exactly where the next record would begin. This all happened around the time I was born. It's history, but it's not ancient history yet.

At a higher, more semantic level, there was a subtle shift in the way we perceive reality: things that are difficult to represent in databases became devalued and mystified. Over the years, efforts have been made to bring the real world into databases using knowledge management, the semantic web, and many other abstractions. Not everything fits in a database, but in fact we all rely on these tools to manage society. Things that don't fit the database are marginalized and life goes on. Occasionally there is a technological countercurrent that tries to push back against the "tyranny" of the database, but the general trend remains the same: if it doesn't fit in the database, it doesn't exist.

You may think you don't understand the database world, but you do live in it. Whenever you see a paper form with boxes (one box per letter), you are interacting with a database. Every time you use a website, there's a database (or more likely a mess of databases) lurking beneath the surface. Amazon, Facebook, all these Internet products are backed by databases. Every time customer service shrugs and says "the computer says no," or an organization behaves in a crazy, inflexible way, chances are that behind the scenes there's a database with a limited, rigid view of reality, and the cost of fixing the software to make the organization smarter is too high. We live in these boxes, as ubiquitous as oxygen and as rigid as punch cards.

Documentation and the World Wide Web

The second act begins with the arrival of Tim Berners-Lee and the emergence of the Web. In fact, it started a little before his arrival. In the late 1980s and early 1990s, we started taking computer networks seriously. Protocols like Telnet, Gopher, Usenet, and email each provided a user interface to the spanning arc of the early Internet, but it wasn't until the 1990s that we saw mass adoption of networked computers, gradually leading to me typing this in Google Docs and you reading it in your web browser. This process of connecting the dots ("The network is the computer," as Sun Microsystems used to say) was fast.

In the early 1990s, there were plenty of machines out there, but they were mostly standalone devices, or connected to a few hundred machines on a college campus without much of a window to the outside world. Ubiquitous network software and hardware, the network of networks, the Internet: it took a long time to create and then spread like wildfire. These small pieces became loosely connected and then tightly coupled into the network we know today. We're still riding that technology wave as the network gets smarter, smaller, and cheaper, and starts showing up in things like our lightbulbs under names like the "Internet of Things."

Bureaucracy and Machinery

But databases and networks never really learned to get along. The "big iron" in the computer room and the countless tiny PCs scattered across the Internet like dewdrops on a spider web cannot find a common world model that allows them to interoperate smoothly. Interacting with a single database is very simple: the various forms and web applications you use every day do exactly that. But here's the hard part: getting databases to work together invisibly for our benefit, or making databases interact smoothly with programs running on our own laptops.

These technical issues are often obscured by bureaucracy, but we feel their impact every day. Getting two large organizations to work together for your benefit is a pain in the ass, and at root it is a software problem. Maybe you want your car insurance company to obtain the police report showing that your car was broken into. Chances are you'll need to get the data out of one database, print it on paper, and mail it to the insurance company yourself: there's no real connection between the systems.

Sitting at your computer, you can't drive this process except by clumsily filling out forms. There is no sense of using a real computer to do things, just of treating the computer as an expensive paper simulator. While in theory information can flow from one database to another as long as you give your consent, in practice the technical cost of connecting databases is huge, and your computer doesn't hold your data so that it can do all of this work for you. Instead, it's just a tool for you to fill out forms. Why are we wasting all this potential so badly?

Data philosophy

The answer, as always, lies within our own minds. Organizational assumptions embedded in computer systems are nearly impossible to translate. The human factors behind software, the mindsets that shape it, are mutually incompatible. Every business builds its computer systems according to its own wishes, those wishes differ about what is important and what is not, and truth does not flow easily between them. When we need to translate from one world model to another, we involve humans, and as a result we are back to a process that mirrors filling out paper forms rather than true digital collaboration. The result is a world where all our institutions seem to be pulling in separate directions and can never fully agree, where the things we need in our daily lives always seem to fall between the cracks, and where every process requires filling in the same damn name and address data, twenty times a day, and more often if you move. How often do you shop from Amazon instead of some more specialized store just because they know where you live?

There are many other factors that contribute to the huge gap between the theoretical potential of our computers and our daily use of them: technological acceleration, constant change, the huge overhead of writing software, and so on. But at the end of the day, it all comes down to mindset. Although it may look like just some binary data, software "architects" hold budgets big enough to build a skyscraper, and changes late in a project cost about as much as demolishing a half-finished building. Rows and rows of expensive engineers throw away months (even years) of work: the software is frozen in place while the world keeps changing. Everything ends up subtly wrong.

Time and time again, we go back to paper and metaphors from the paper age because we can't get the software right. The heart of the problem is that we managed to network computers in the 1990s, but we never really figured out how to network databases and have them all work together.

There are three classic models for people trying to make networks and databases work together smoothly.

The first paradigm: the diversified peer model

The first method is to connect the machines directly together and solve problems as they come up. You take machine A, connect it to machine B over the network, and then send transactions over the network. In theory, machine B catches them, writes them to its own database, and everything works smoothly. In practice, there are some problems here.

The epistemological problem is quite serious. Databases as commonly deployed in our organizations store facts. If the database says the inventory is 31, that is the truth for the entire organization, unless perhaps someone goes to the shelf and counts, finds that the true number is 29, and enters that into the database as a correction: the database is the institutional reality.

But when data flows from one database to another, it crosses an organizational boundary. For Organization A, the contents of Database A are operational reality, true until evidence disproves them. But for Organization B, the incoming message is a statement of opinion. Take an order: an order is a request, but it does not become a confirmed fact until the payment has cleared past the point where it can be charged back. One company may believe an order has occurred, but this is just speculation about someone else's intentions until cold hard cash (or Bitcoin) removes all doubt. Until then, a "bad order" signal can reset the entire process. An order exists as a hypothesis until a cash payment clears it from the speculative buffer in which it sits and places it firmly in the fixed past as part of the record of fact: this order existed, was shipped, and was accepted, and we have been paid for it.

But until then, the order is just speculation.

A simple request, such as an order for new paintbrushes flowing from one organization to another, gradually changes from a statement of intention into a statement of fact. This change in meaning is not usually something we think carefully about. But when we start to think about how much of the world, how many of the systems we live in, operate like this (food supply chains, power grids, taxation, education, healthcare), it is a wonder that these systems so rarely come to our notice.

In fact, we only notice problems when they arise.

The second problem with peer connections is the instability of each connection. Making small changes to the software on one end or the other can introduce bugs. These subtle errors may not become visible until the transferred data is deep inside Organization B’s internal records. A typical example: orders are always placed in multiples of 12 and processed as boxes. But for some reason, one day an order for 13 is placed, and somewhere within Organization B, an inventory processing spreadsheet crashes. Unable to ship 1.083 boxes, the entire system stops functioning.
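The failure mode can be sketched in a few lines of Python. The function names and the box-size constant are assumptions made for illustration. The point is that the "always a multiple of 12" rule lives only in one side's head until a check at the boundary makes it explicit.

```python
BOX_SIZE = 12  # units per box, taken from the example in the text


def boxes_naive(units: int) -> float:
    """Silently assumes that orders always arrive in multiples of BOX_SIZE."""
    return units / BOX_SIZE


def boxes_checked(units: int) -> int:
    """Makes the assumption explicit and rejects orders that break it."""
    boxes, leftover = divmod(units, BOX_SIZE)
    if leftover:
        raise ValueError(f"order of {units} units is not a whole number of boxes")
    return boxes


print(round(boxes_naive(13), 3))  # 1.083, a quantity nobody can ship
print(boxes_checked(24))          # 2
```

Rejecting the odd order at the point where it crosses the organizational boundary is one possible design choice; it at least makes the error visible before it reaches Organization B's internal records.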

This instability is compounded by another factor: the need to translate one organization's philosophical assumptions, in effect its corporate epistemology, into the private internal language of another. For example, consider booking a hotel and renting a car as a single action: the hotel wants to treat the customer as a credit card number, but the car rental office wants to treat the customer as a driver's license. A small mistake leads to the customer being misidentified. The hilarious result is that the customer is asked for a driver's license number to confirm the hotel room reservation, but all anyone sees is the error message "computer says no" while the customer reads out their credit card details, not realizing that the computer now wants something else.

If you think this example is ridiculous, NASA lost the Mars Climate Orbiter in 1999 because one team was using imperial units and the other was using metric units. These things happen all the time.

But in communications between two business organizations, one cannot simply look at the other party's source code to find errors. Whenever two organizations meet and want to automate their backend connections, all these issues have to be solved manually. This is difficult, expensive, and error-prone, so much so that in practice, companies often prefer to use fax machines. It's ridiculous, but that's really how the world works today.

Of course, there are attempts to deal with this mess: introducing standards and code reusability to help simplify these operations and make business interoperability a reality. You can choose to use EDI, XMI-EDI, JSON, SOAP, XML-RPC, JSON-RPC, WSDL, and many other standards to aid your integration process.

Needless to say, the reason there are so many standards is because none of them work properly.

Finally, there is the issue of scale. Let’s say the two of us have paid the upfront costs of working together and achieved seamless technical integration, and now a third partner joins our alliance. Then a fourth and a fifth: by the fifth partner, we have 10 connections to debug. Then the sixth, the seventh... and by the tenth, the number is 45. As each new partner joins our network, the cost of collaboration increases, and the result is small collaborative groups that cannot scale.

Remember, this isn’t just an abstract problem: this is banking, finance, medicine, the power grid, the food supply, and government.

Our computers are a mess.
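The arithmetic behind those numbers is simple: if every partner needs its own connection to every other partner, n partners need n(n-1)/2 connections. A minimal sketch in Python (the function name is made up for illustration):

```python
def pairwise_links(partners: int) -> int:
    """Number of direct connections when every partner links to every other."""
    return partners * (partners - 1) // 2


for n in (2, 3, 5, 10, 100):
    print(f"{n} partners -> {pairwise_links(n)} connections")
# 5 partners -> 10 connections
# 10 partners -> 45 connections
```

The count grows with the square of the number of partners, which is why each newcomer costs more to integrate than the one before.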

Hub and Spoke: Meet the New Boss

A common solution to this dilemma is to cut out the exponential (or, strictly, quadratic) complexity of writing software to connect every pair of peers directly, and simply put someone in charge. There are basically two ways to do this.

The first way is that we choose an organization, say VISA, and both agree that we will use their standard interface to connect to VISA. Each organization just needs to make sure the connector is correct, and VISA takes a 1% cut and makes sure everything settles smoothly.

The problems with this approach can be summarized by the term "natural monopoly". The business of being a hub or platform for others is effectively a license to print money for whoever achieves incumbent status in that position. Political power can be exerted by setting terms of service and negotiating with regulators. In short, an arrangement that may have started out as an effort to create a neutral backbone can quickly turn its participants into clients of an all-powerful behemoth, without which they simply cannot do business.

This model appears again and again in different industries at varying levels of complexity and scale, from rail, fiber optics, airport runway allocation to liquidity management for financial institutions.

In the context of databases, there is a subtler variant of this issue: platform economics. If the "hub and spoke" model means that everyone runs Oracle or Windows Server or something similar, and then relies on those boxes to connect to each other seamlessly because, after all, they are identical peas in a pod, then we have the same basic economic proposition as before: to become a member of the network, you rely on an intermediary who can charge you whatever membership fee it likes, disguised as a technology cost.

VISA derives 1% or more from this game on a significant percentage of transactions worldwide. If you've ever thought about how much economic upside a blockchain business might have, just think about how big that number is.

Protocols, if you can find them

Protocols are the ultimate “unicorn.” Not a company that is worth a billion dollars two years after it was founded, but an idea so good that it gets people to stop arguing about how to do something and just get on with it.

The Internet runs on a handful of such things: Tim Berners-Lee's HTTP and HTML standards worked like magic, though of course he just lit the fire, and countless technologists gave us the weird and wonderful mix we now know and love. SMTP, POP and IMAP drive our email. BGP solved our big router problem. There are also dozens of increasingly obscure technologies and protocols that drive most of our open systems.

A common complaint is that tools like Gchat or Slack perform tasks for which perfectly valid solutions already exist as open protocols (such as IRC or XMPP), but they don't actually speak those protocols. As a result, there's no way to interoperate between Slack and IRC or Skype or anything else, except by piecing it all together through gateways that may behave erratically. The technology ecosystem degenerates into a series of walled gardens owned by different companies and at the mercy of the market.

Just imagine how bad Wikipedia would be now if it were a startup deliberately driven to monetize its user base and allow investors to recoup their money.

But when the protocol strategy succeeds, what is created is a huge amount of real wealth: not money, but actual wealth, because the world is improved when things can happily work together. Of course, SOAP and JSON-RPC and all the others aspire to support the formation of protocols, or even to become protocols themselves, but the defining semantics of each domain tend to create inherent complexity that leads back to hub and spoke or other models.

Blockchain, the fourth way?

You hear people talking about Bitcoin. Preachers in the pub are absolutely certain that something fundamental has changed, spouting “internet central bank” talk and discussing the end of the state. The well-dressed ladies on the podcast talk about the amazing future potential. But what’s underneath all this? If you separate technology from politics and future potential, what is it actually about?

Behind it is an alternative way of synchronizing databases, instead of printing a stack of paper and passing it around. Let's think about cash: I take a stack of paper out of one bank, and the value is transferred from one bank account (a computer system) to another bank account. Once again, the computer is acting as a paper simulator. Bitcoin simply takes a paper-based process, the basic representation of cash, and replaces it with a digital system: digital cash. In this sense, you could think of Bitcoin as just another paper simulator, but it's not.

Bitcoin replaces the paper in this system with a stable agreement ("consensus") among all the computers in the Bitcoin network about the current value of all the accounts involved in a transaction. It does this with a true protocol-based solution: no middleman taking commissions, and no exponential system complexity from a variety of different connectors. Blockchain architecture is essentially a protocol that gets things done as effectively as hub and spoke, but without a centralized trusted third party that might choose to extract commissions, which is a really good thing! The system has some magical properties that go beyond paper and databases: the data at all nodes is eventually consistent. We call it “distributed consensus,” but that’s just a fancy way of saying that ultimately everyone agrees on what the bank balances, contracts, and so on should be.
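The end state, everyone holding the same balances, can be illustrated without any of the real machinery. The toy sketch below is not Bitcoin's actual protocol: there is no mining, networking, or cryptography, and every name in it is hypothetical. It assumes the hard part (agreeing on one ordered list of transactions) has already happened, and shows only that nodes applying the same rules to the same list independently arrive at the same ledger.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    sender: str
    receiver: str
    amount: int


def replay(log: list[Transfer], opening: dict[str, int]) -> dict[str, int]:
    """Apply an agreed, ordered transaction log to a copy of the opening balances."""
    balances = dict(opening)
    for tx in log:
        # Every node applies the same rule: skip transfers the sender cannot cover.
        if balances.get(tx.sender, 0) < tx.amount:
            continue
        balances[tx.sender] -= tx.amount
        balances[tx.receiver] = balances.get(tx.receiver, 0) + tx.amount
    return balances


opening = {"alice": 10, "bob": 0}
log = [
    Transfer("alice", "bob", 4),
    Transfer("bob", "carol", 3),
    Transfer("carol", "alice", 5),  # rejected everywhere: carol only has 3
]

# Three independent "nodes" replay the same log and reach the same answer.
ledgers = [replay(log, opening) for _ in range(3)]
print(ledgers[0])
print(all(ledger == ledgers[0] for ledger in ledgers))
```

In a real blockchain, the difficult and interesting work lies in getting mutually distrustful machines to agree on the log itself; the replay step is the easy part.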

This is a big deal.

In fact, it breaks with 40 years of experience in connecting computers together to do things. As a foundational technology, blockchain is genuinely new. In this branch of technology, truly innovative ideas that can create billions of dollars in value and set the direction of an industry for decades are rare.

Bitcoin allows you to transfer value from one account to another without having to move cash or go through the complicated wire transfer process banks use to adjust balances, because the underlying database technology is new, modern, and better: better technology providing better service. Like cash, it is anonymous and decentralized, and Bitcoin has some monetary policy built into it and issues the "cash" itself: a kind of "decentralized bank." This is the central bank of the internet, if you will.

Once you think of cash as a special kind of form, and of cash transactions as pieces of paper moving data between databases, it's easy to see Bitcoin clearly.

It is no exaggeration to say that Bitcoin has helped us escape the 40-year abyss caused by the limitations of database technology. Whether it will make a real difference on a fiscal level remains to be seen.

Okay, so what about Ethereum?

Ethereum takes this “beyond the paper metaphor” approach to making databases work together even further than Bitcoin does. Rather than just replacing cash, Ethereum proposes a new model, a fourth way. You push data to Ethereum and it is permanently recorded in public storage (the “blockchain”). All the organizations that need access to this information, from your cousin to your government, can see it. Ethereum is trying to replace all the other places where you have to fill out forms to get computers to work together. This may seem a bit strange at first, since you wouldn’t want your health records to sit in such a system. Right, you wouldn't. If you are storing health records online, you need to protect them with an additional layer of encryption so that they cannot be read, which is something we should be doing anyway. Proper encryption of private data is still uncommon, which is why you keep hearing big news stories about hacks and leaks.

So what kind of things do you want as public data? Let’s start with something obvious: your domain name. Your business owns its own domain name, and people need to know that your business and not someone else owns the domain name. This unique naming system is how we surf the entire Internet: it's a clear example of what we want in a permanent public database. We also hope that governments don't keep redacting these public records and taking domains offline based on their local laws: if the internet is a global public good, it's annoying that governments keep breaking it by censoring things they don't like.

Crowdfunding as a testbed

Another good example is crowdfunding projects, what sites like Kickstarter, IndieGoGo and others do. In these systems, someone posts a project online and crowdfunds it, and there is a public record showing the amount of money flowing in. If it exceeds a certain amount, the project kicks in: we want them to record what they did with the funds. This is a very important step: we want them to be accountable for the money they get, and if they don't raise enough money, we want the money invested to be returned. We have a global public good, the ability for people to work together to organize and fund projects. Transparency does help in this scenario, so this is a natural application scenario for blockchain.

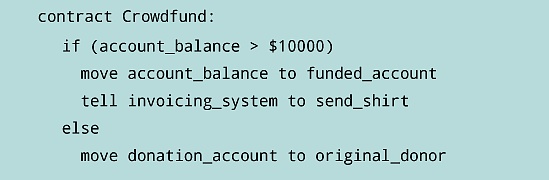

Let’s consider the crowdfunding example in more detail. In one sense, providing funds to a crowdfunding project is a simple contract:

If the account balance is greater than $10,000, fund the project, and if my donation is over $50, please send me a t-shirt. Otherwise, all money is returned.

Expressed as pseudocode, it might be:
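A minimal sketch of that logic, written as Python rather than pseudocode so that it can be run. The function name and the action strings are illustrative assumptions, not part of the original agreement:

```python
def settle(total_raised: int, my_donation: int) -> list[str]:
    """Return the actions the agreement calls for (amounts in dollars)."""
    if total_raised > 10_000:
        actions = ["fund the project"]
        if my_donation > 50:
            actions.append("send me a t-shirt")
        return actions
    return ["return all money"]


print(settle(total_raised=12_000, my_donation=60))
print(settle(total_raised=8_000, my_donation=60))
```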

If you represented this simple agreement as actual detailed code, you would get something like this. This is a simple example of a smart contract, one of the most powerful aspects of the Ethereum system.
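A slightly more detailed sketch of the same agreement follows. It is ordinary Python, not a contract language that actually runs on Ethereum, and the class, method, and field names are all hypothetical. It only illustrates the shape such a contract takes: collect donations, then either pay out or refund everyone when the campaign closes.

```python
class Crowdfund:
    """Toy model of the crowdfunding agreement; amounts are whole dollars."""

    GOAL = 10_000          # the project is funded only above this balance
    TSHIRT_THRESHOLD = 50  # donations above this amount earn a t-shirt

    def __init__(self) -> None:
        self.donations: dict[str, int] = {}
        self.closed = False

    @property
    def balance(self) -> int:
        return sum(self.donations.values())

    def donate(self, backer: str, amount: int) -> None:
        if self.closed:
            raise RuntimeError("campaign is closed")
        if amount <= 0:
            raise ValueError("donation must be positive")
        self.donations[backer] = self.donations.get(backer, 0) + amount

    def close(self) -> dict:
        """Settle the campaign: pay out and list t-shirts, or refund everyone."""
        self.closed = True
        if self.balance > self.GOAL:
            tshirts = sorted(
                backer
                for backer, amount in self.donations.items()
                if amount > self.TSHIRT_THRESHOLD
            )
            return {"funded": True, "payout": self.balance,
                    "tshirts": tshirts, "refunds": {}}
        return {"funded": False, "payout": 0,
                "tshirts": [], "refunds": dict(self.donations)}


campaign = Crowdfund()
campaign.donate("ann", 9_960)
campaign.donate("bo", 40)
campaign.donate("cy", 60)
print(campaign.close())
```

On a real smart contract platform the contract would also hold the money itself, so the payout and refunds would be carried out by the code rather than merely reported by it.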

Crowdfunding has the potential to allow us to access venture capital backed by deep technical intelligence and invest in creating real political change. If, say, Elon Musk had access to the capital reserves of everyone who believed in what he was doing, and effortlessly sold shares in (say) a future Martian city, would that be good or bad for the future of humanity?

Building the mechanisms to achieve this kind of large-scale collective action may be critical to our future.

Smart contracts

The implementation layer for all these strange dreams is very simple: a smart contract is a simple written agreement represented as software. It's not something you can easily imagine doing for painting a house. "Is the house painted just right?" is not something a computer can judge yet. But for contracts that are primarily about digital things (like cell phone contracts or airline tickets or similar contracts that rely on a computer to provide a service or send you an electronic ticket), software already represents those contracts pretty well in almost all cases. Occasionally a problem will arise, all the English legalese will be activated, and a human judge will become involved, but this is a very rare exception indeed. Most often we deal with websites and show the people in the system who help us (such as airline gate agents) proof that we have completed the transaction with the computer, such as our boarding pass. We go about our business by filling out a few forms and the computer sorts it all out for us; no humans are needed unless something goes wrong.

To make this possible, companies that provide such services maintain their own technical infrastructure: the revenue of Internet companies pays for teams of engineers, server farms, and the physical security surrounding these assets. You can buy off-the-shelf services and have people build e-commerce websites for you or handle other simple cases, but basically this complexity is the domain of large companies, because before you can let a computer system handle money and provide services, you need all this overhead and technical skill.

This is difficult and expensive. If you're starting a bank or a new airline, software is a very important part of your budget, and hiring a technology team is a major part of your staffing challenge.

Smart Contracts and the World Computer

So what Ethereum offers is a "smart contract platform" that takes a lot of expensive, difficult stuff and automates it. It’s still early days, so we can’t do everything yet, but even on this first version of the world’s first universal smart contract platform, we’re seeing amazing capabilities.

So how does a smart contract platform work? Just like Bitcoin, a lot of people run the software and earn some tokens (ether). These computers in the network work together and share a common database called the blockchain. Bitcoin’s blockchain stores financial transactions. Ethereum’s blockchain stores smart contracts. You don't need to rent data center space and hire a team of system administrators. Instead, you use a shared global resource, the "world computer," and what you pay into the system goes to the people who make up this global resource, so the system is fair and just.

Ethereum is open source software, and the Ethereum team maintains it (with help from many independent contributors and, increasingly, from other companies). Most of the web runs on open source software made and maintained by similar teams: we know open source software is a great way to produce and maintain the world's infrastructure. This ensures that no centralized institution can use its market power to do things like drive up transaction fees to make huge profits. Open source software (and, to a slightly more puritanical degree, free software) helps keep these global public goods free and fair to everyone.

The smart contracts that run on the Ethereum platform are written in a simple language that is not difficult for a working programmer to learn. There's a learning curve, but it's not that different from what professionals go through every few years anyway. Smart contracts are usually very short: 500 lines is long. But because they harness the immense power of cryptography and blockchains, and because they run across organizations and individuals, even relatively short programs have immense power.

So what does "world computer" mean? In essence, Ethereum simulates a perfect machine: one that could never exist in nature because of the laws of physics, but that can be simulated with a large enough computer network. The network is not scaled to produce the fastest possible computer (although that may come later as blockchains scale), but to produce a general-purpose computer that anyone can access from anywhere and that (crucially!) always gives everyone the same results. It is a global resource that stores answers that cannot be subverted, denied or censored (see the video on YouTube, From Cyberpunk to Blockchain).

We think this is a big deal.

Smart contracts can store records of who owns what. They can store promises to pay and promises to deliver without the need for middlemen and without exposing people to the risk of fraud. They can automatically move funds based on instructions given long ago, as with a will or a futures contract. With purely digital assets, there is no "counterparty risk," because the value to be transferred can be locked into the contract at creation and automatically released when the terms and conditions are met: if the contract is clear, there is no possibility of fraud, because the program actually has real control over the assets involved, without the need for a trusted intermediary like an ATM or a car rental agent.

This system operates across the globe, with tens or even hundreds of thousands of computers sharing the workload and, more importantly, backing up the cultural memory of who promised what to whom. Yes, fraud will still be possible at the edges of the digital world, but many types of outright banditry may disappear. For example, you can check the blockchain and find out whether a house has been sold twice. Who really owns the Brooklyn Bridge? What happens if this loan defaults? Everything is on a shared global blockchain, clear as crystal. Anyway, that's the plan.
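The "locked at creation, released when the conditions are met" idea can be sketched as a toy escrow. This is plain Python with hypothetical names, and the shared `accounts` dictionary merely stands in for balances that a real platform would track on the blockchain. What it shows is that once the contract is created, the funds are outside either party's control and only the code can move them.

```python
from typing import Callable


class Escrow:
    """Toy escrow: value is locked at creation and released by code, not by a person."""

    def __init__(self, accounts: dict[str, int], payer: str, payee: str,
                 amount: int, condition: Callable[[], bool]) -> None:
        if accounts.get(payer, 0) < amount:
            raise ValueError("payer cannot fund the contract")
        accounts[payer] -= amount  # the funds leave the payer's control immediately
        self.accounts = accounts
        self.payee = payee
        self.locked = amount
        self.condition = condition

    def settle(self) -> bool:
        """Release the locked value to the payee once the condition holds."""
        if self.locked and self.condition():
            self.accounts[self.payee] = self.accounts.get(self.payee, 0) + self.locked
            self.locked = 0
            return True
        return False


accounts = {"buyer": 100}
delivery = {"done": False}
deal = Escrow(accounts, "buyer", "seller", 70, lambda: delivery["done"])

print(deal.settle(), accounts)  # nothing moves before delivery
delivery["done"] = True
print(deal.settle(), accounts)  # the locked value is released to the seller
```

Where the `condition` comes from is the hard part in practice: for purely digital assets the contract can check it directly, which is why the text limits the "no counterparty risk" claim to that case.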

Democratic access to state-of-the-art technology

All of this makes it possible to take the power of modern technology and put it into the hands of programmers working in an environment not much more complex than website coding. These simple programs run on an extremely powerful shared global infrastructure that can transfer value and represent ownership of property. This makes possible marketplaces, registries such as domain names, and many other things we do not yet understand because they have not been built yet. When the web was invented to make it easy to publish documents for others to read, no one could have imagined that it would revolutionize every industry it touched and transform people's personal lives through social networks, dating sites, and online education. No one would have imagined that Amazon would one day be bigger than Walmart. It's hard to say where smart contracts will go, but it's hard not to look at the web and dream about the future.

It took a lot of esoteric computer science to create a programming environment in which relatively mundane web skills are enough to move property around inside a secure global ecosystem, but that work is done. Programming on Ethereum is not yet a piece of cake, but that is largely a matter of documentation, training, and the maturing of the technical ecosystem. The languages are written and they are good; the debuggers will take more time. But the outrageous complexity of writing the smart contract infrastructure is gone: smart contracts themselves are simpler than JavaScript and nothing for a web programmer to be scared of. As a result, we expect these tools to become ubiquitous soon, as people begin to demand new services and teams form to deliver them.

The future?

I'm excited precisely because we don't know what we've created and, more importantly, what you and your friends will create with it. I believe terms like "Bitcoin 2.0" and "Web 3.0" are not enough. This will be a new thing: new ideas and a new culture embedded in a new software platform. Every new medium changes the message: blogging brought back long-form writing, and then Twitter created an environment in which brevity is not only the soul of wit but, by necessity, its body too. Now we can express simple agreements as free speech, as the publication of an idea, and who knows where this will lead.

Ethereum Frontier is the first step: it's a platform for programmers to build services that can be accessed through a web browser or mobile app. Later we will release Ethereum "Metropolis," a web browser-like program, currently called Mist, that takes all the security and cryptography inherent in Ethereum and packages it neatly with a user interface that anyone can use. The recently released version of Mist showcases a secure wallet, and that's just the beginning. The security Mist provides is far stronger than that of current e-commerce systems and mobile applications. In the medium term, the contract production system will be self-contained, so almost anyone will be able to download the Distributed Application Builder, load it up with their content and ideas, and upload the result. For simple things, no code will be required, yet the full underlying power of the network will be available. Think of an installation wizard, but instead of setting up a printer, you're configuring the terms of a loan smart contract: how much, how long, and what the repayment rate is. Click OK to approve!
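As a rough picture of what such a wizard would collect, the three loan terms mentioned above can be written as a small record in plain Python. This is only a sketch under stated assumptions: LoanTerms and its fields are hypothetical names, the rate is expressed in basis points, and simple (non-compounding) interest is assumed because the article does not say how repayment would be calculated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoanTerms:
    """Hypothetical terms a no-code wizard might collect before approval."""
    principal: int        # how much, in the smallest currency unit
    months: int           # how long
    annual_rate_bps: int  # repayment rate in basis points (500 = 5% per year)

    def __post_init__(self):
        # Reject nonsensical terms before anything is "approved".
        if self.principal <= 0 or self.months <= 0 or self.annual_rate_bps < 0:
            raise ValueError("invalid loan terms")

    def total_due(self) -> int:
        # Assumed simple interest, integer arithmetic, rounded down.
        interest = self.principal * self.annual_rate_bps * self.months // (12 * 10_000)
        return self.principal + interest
```

For instance, LoanTerms(principal=1200, months=12, annual_rate_bps=500).total_due() gives 1260 under these assumptions. Once terms like these are fixed, they cannot be quietly changed afterwards, which is what you would want from a contract.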

If this sounds impossible, welcome to our challenge: technology has evolved far beyond our ability to explain or communicate about it!

The world's supercomputer?

Our innovation is not over yet. Soon, and we're talking a year or two from now, Ethereum Serenity will take the network to a whole new level. Currently, adding more computers to the Ethereum network makes it more secure, but not faster. We manage the network's limited speed using Ether, a token that gives priority on the network. In the Serenity system, adding more computers to the network will make it faster, which will eventually allow us to build true Internet-scale systems: hundreds of millions of computers working together to do what we collectively need to do. Today we might speculate that this wonderful software could be used for research in areas such as protein folding, genomics, or artificial intelligence, but who can say what it will end up being used for?

Original link: https://consensys.io/blog/programmable-blockchains-in-context-ethereum-smart-contracts-and-the-world-computer