Is Apple falling behind in the era of large models? Almost everyone hoped WWDC24 would provide the answer. After the all-important keynote, however, the controversy kept coming: the first half of the event was a collection of small features jokingly described as "catching up with Android", and the headline AI features in the second half looked, on the surface, much like the AI capabilities Android phones had already shipped, so many people called it "no surprise". A joke began spreading online: "The biggest surprise of WWDC24 is that Apple brought a new calculator to the iPad."

Although true AI-native hardware has not yet appeared, the industry broadly agrees that large models may become the "core" of future edge devices, playing a role comparable to the operating system. Does Apple have large-model capabilities of this importance? In the AI era, does Apple really have to rely on third-party model companies like OpenAI?

On the day of the keynote, Apple's stock fell 1.9%, which seemed to match the outside world's "bland" verdict on the event. Then came a dramatic turn: the next day, the stock soared 7% to a record high. Stock prices reflect the collective judgment of investors, and for a company with a market value of about $3.18 trillion, such a sharp swing in sentiment deserves attention.

Following these points of controversy, we focused on two documents. One is a technical document on Apple's large models that Apple quietly published on its official website, which contains many details worth digging into. The other is a note sent back by a Tencent Technology colleague on site, recording a low-profile closed-door conversation held after the keynote. The two participants were Craig Federighi, Apple's senior vice president of software engineering, and John Giannandrea, Apple's senior vice president of machine learning and AI strategy, both of whom report directly to CEO Tim Cook. The conversation covered why Apple partnered with OpenAI and how it protects privacy.

This article focuses on these two pieces of information and attempts to unravel the mystery of Apple's "large model competitiveness".

There are two key points:

● Apple is very capable of developing large models on its own;

● OpenAI does not power Apple Intelligence; the two are completely independent. Apple Intelligence runs entirely on Apple's own models.

Digging into the model technical documentation released by Apple:

Two new pieces of information:

This may be the most important point of this release.

First, two pieces of information that were not explained at the keynote only became clear after the event.

1. Apple quietly released its self-developed models: not only a small on-device model, but also a large model in the cloud

Throughout the keynote, Apple talked about the remarkable experiences AI-enabled devices can bring users. But who owns these models? Which were developed by Apple itself, and which in cooperation with OpenAI? Privacy and security were promised, but how are they guaranteed? Elon Musk even tweeted that if Apple integrated OpenAI at the OS level, he would ban Apple devices at his companies. Yet the keynote never gave a clear answer.

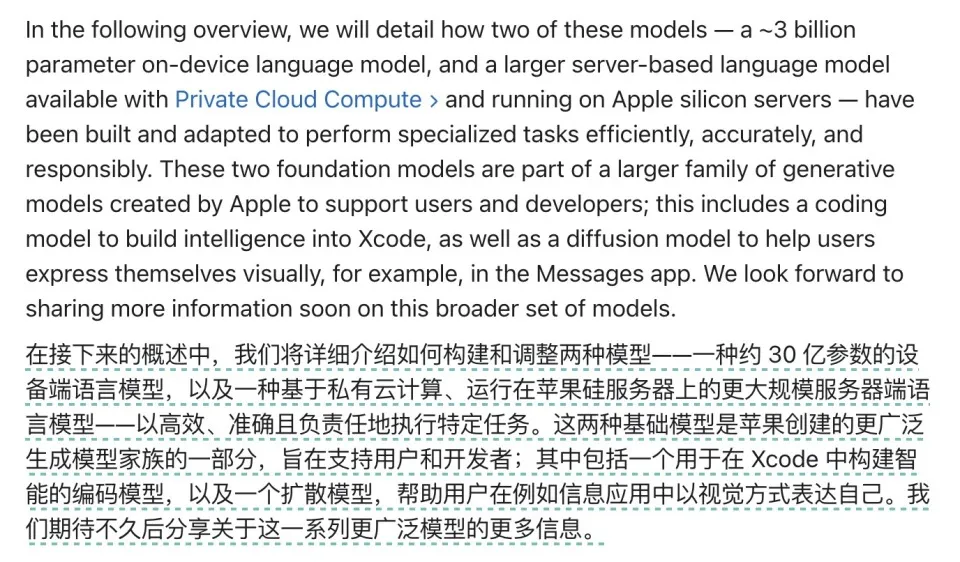

Only after the keynote did Apple publish a technical blog post and present, at the Platforms State of the Union, the details of the models it will use on Apple devices: both the on-device and the cloud models were developed by Apple itself.

Self-developed large model catches up with GPT-4

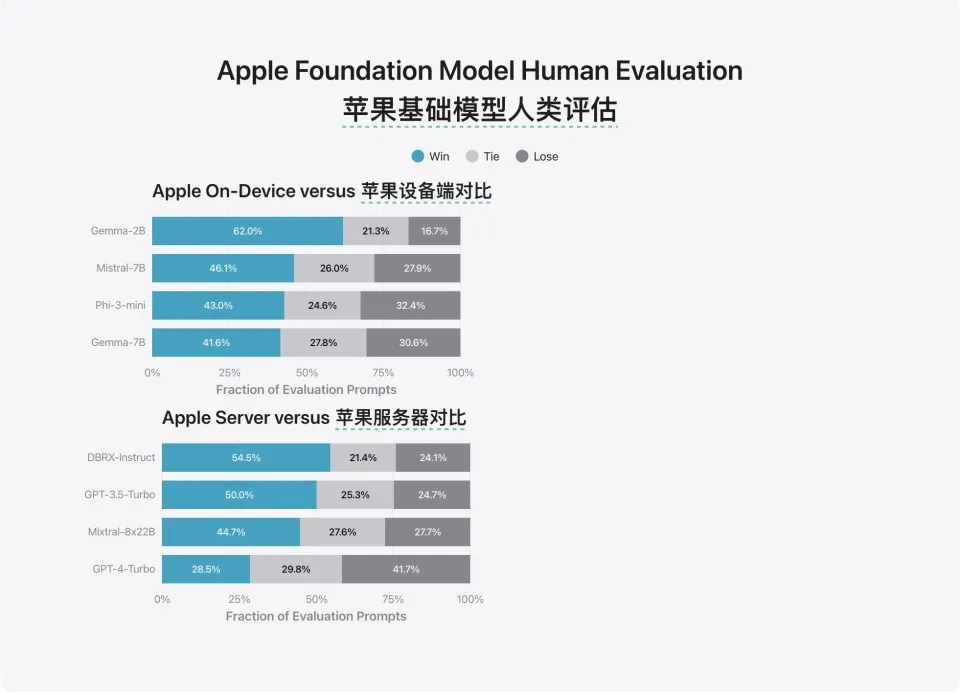

Specifically, the on-device model is a small model with about 3 billion parameters (3B); Apple has not disclosed the parameter count of the cloud model. Both models perform quite well.

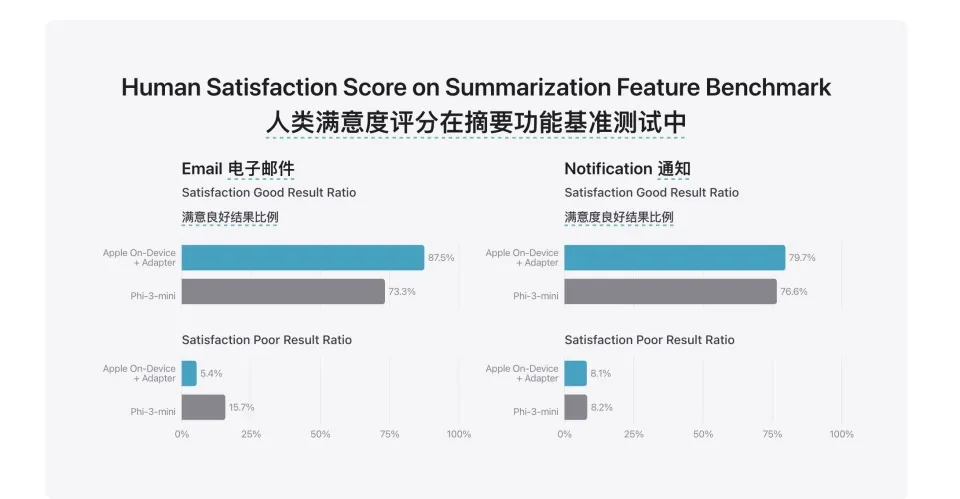

Apple's 3B small model basically outperforms several mainstream 7B models (win + tie rate above 50%), and its cloud model reaches GPT-4 Turbo level (win + tie rate of 58.3%).

This may be the biggest bombshell of Apple's entire wave of updates: Apple has developed its own GPT-4-level large model, mature enough to be wired directly into Apple's software and hardware as soon as it launches.

This suggests the earlier doubts about Apple's model capabilities were unfounded. Apple can now build its own closed-loop AI system without relying on outside model companies, something no phone maker other than Google can currently do.

This is why Apple can confidently treat OpenAI as just one option: an external model that is called on demand.

Strong optimization of small models on the client side

Now for the on-device model. At the keynote, Apple emphasized that most Apple Intelligence operations would run on the on-device model. Afterward, however, almost everyone questioned whether a 3B model could really deliver the features shown on stage.

First of all, deploying a 3B model on-device is genuinely difficult.

Look at Apple's competitors: Google first deployed its on-device model Gemini Nano on its flagship Pixel 8 Pro in December last year. With only 1.8B parameters, its capabilities are very limited. The Samsung S24 also uses Gemini Nano on-device. Note that the Pixel 8 Pro has 12GB of memory; only in May this year did Google manage to run this 1.8B model on the Pixel 8 and 8a with 8GB of memory, and it will take another month before the update actually ships. The on-device models deployed by other phone makers are generally around 1B parameters.

Apple, by contrast, runs a 3B-parameter model on the iPhone 15 Pro with 8GB of memory, an engineering feat well ahead of its competitors.

Apple had laid the groundwork for this well in advance. In "LLM in a flash: Efficient Large Language Model Inference with Limited Memory", a paper that made waves last December, Apple proposed a way to run large models with limited memory by keeping parameters in flash storage and loading them into DRAM on demand, using two key techniques, windowing and row-column bundling, to minimize data transfer and maximize flash throughput.
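The windowing idea can be illustrated with a toy cache. This is a minimal sketch, not Apple's implementation: it assumes some predictor has already decided which feed-forward neurons each token will activate (the sets passed to `step`), and the class name `WindowedNeuronCache` is hypothetical. Neurons used by any of the last `window` tokens stay resident in DRAM, so each new token only has to read from flash the neurons that are not already cached. Row-column bundling and the actual flash I/O are not modeled.

```python
from collections import Counter, deque


class WindowedNeuronCache:
    """Keep FFN neurons active in the last `window` tokens resident in DRAM (toy model)."""

    def __init__(self, window: int):
        self.window = window
        self.history = deque()     # active-neuron sets for the most recent tokens
        self.refcount = Counter()  # how many tokens in the window use each neuron

    @property
    def resident(self) -> set:
        """Neurons currently held in DRAM."""
        return set(self.refcount)

    def step(self, active: set) -> set:
        """Process one token; return the neurons that must be loaded from flash."""
        to_load = active - self.resident
        self.history.append(active)
        self.refcount.update(active)
        if len(self.history) > self.window:
            expired = self.history.popleft()
            self.refcount.subtract(expired)
            # Evict neurons that no token inside the window still needs.
            for n in expired:
                if self.refcount[n] <= 0:
                    del self.refcount[n]
        return to_load


cache = WindowedNeuronCache(window=2)
print(cache.step({1, 2, 3}))  # {1, 2, 3}: cold start, everything comes from flash
print(cache.step({2, 3, 4}))  # {4}: only the new neuron is read
```

Because consecutive tokens tend to activate overlapping neurons, the incremental load per token stays small, which is the effect the paper relies on to cut flash traffic.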

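In this technical document, Apple also says its models use grouped-query attention (GQA) and a LoRA adapter framework. Both reduce memory use and inference cost: GQA lets groups of query heads share a single key/value head, which shrinks the key/value cache kept during generation, while LoRA adapters add small low-rank weight updates to a shared base model, so task-specific behavior costs only a few extra parameters instead of a separate full model.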
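The memory saving from grouped-query attention can be worked out directly. The sketch below is a hedged illustration: the layer count, head counts, head dimension, and context length are hypothetical round numbers, not Apple's published configuration. It shows how query heads map onto shared key/value heads and how much smaller the KV cache becomes when 32 query heads share 8 key/value heads.

```python
def kv_head_for(q_head: int, n_heads: int, n_kv_heads: int) -> int:
    """Map a query head to the key/value head it shares under GQA."""
    if n_heads % n_kv_heads != 0:
        raise ValueError("n_heads must be a multiple of n_kv_heads")
    group_size = n_heads // n_kv_heads
    return q_head // group_size


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_elem: int = 2) -> int:
    """Bytes for cached keys and values (hence the factor 2) for one sequence."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_elem


# Hypothetical configuration with 16-bit cache entries.
layers, heads, head_dim, seq_len = 32, 32, 128, 4096
mha = kv_cache_bytes(layers, heads, head_dim, seq_len)  # one K/V head per query head
gqa = kv_cache_bytes(layers, 8, head_dim, seq_len)      # 8 K/V heads shared by 32 query heads
print(f"MHA: {mha / 2**30:.2f} GiB, GQA: {gqa / 2**30:.2f} GiB")
# MHA: 2.00 GiB, GQA: 0.50 GiB
print([kv_head_for(h, heads, 8) for h in range(8)])
# [0, 0, 0, 0, 1, 1, 1, 1]
```

The cache shrinks by exactly the group size (here 4x), which matters on a phone where the cache competes with the model weights for the same DRAM.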

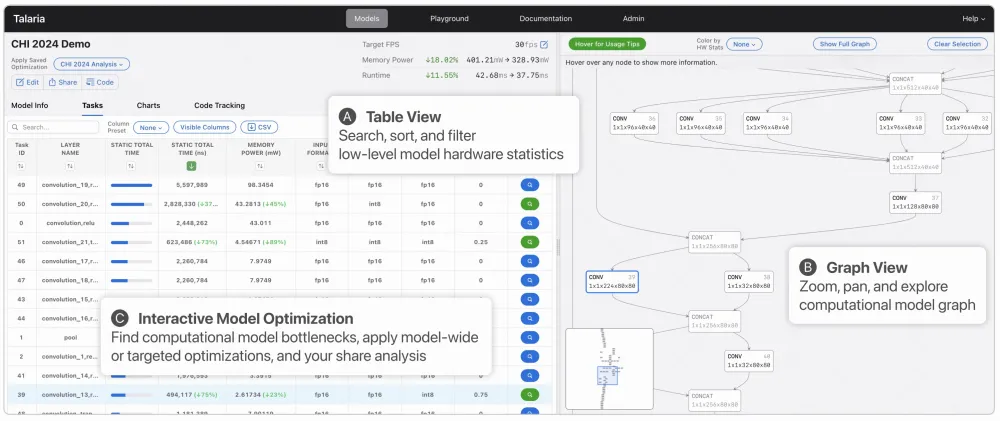

In addition, to make sure running the AI model does not drain the phone's battery, Apple also uses Talaria, a power analysis tool, to optimize power consumption in a timely manner.

With this series of operations, the end-side deployment of the 3B model becomes possible.

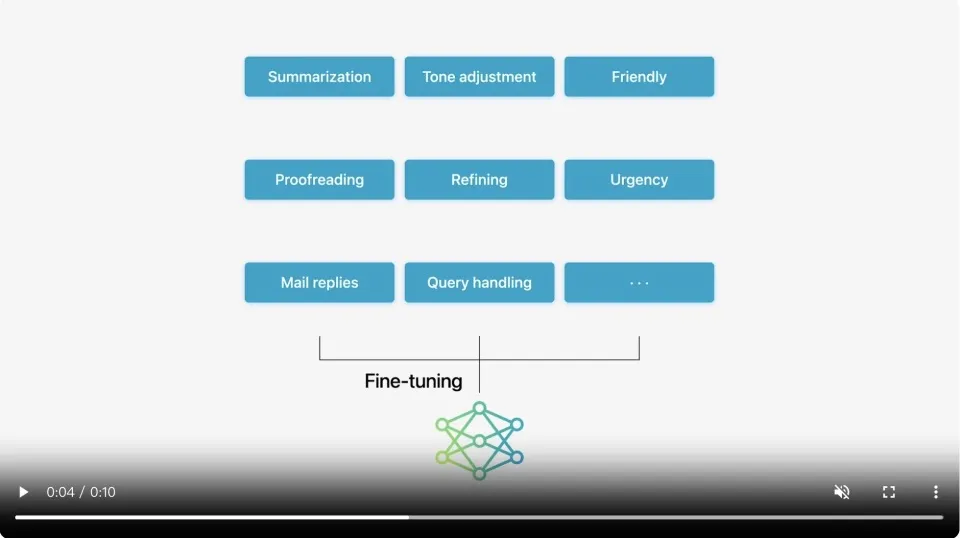

Second, the technical blog also shows how Apple makes a small model deliver: not by making it omnipotent, but by strengthening it for specific tasks.

Specifically, Apple adds a number of fine-tuned adapters to the base model. Adapters are small sets of model weights layered on top of a general base model; they can be loaded and swapped dynamically, letting the base model specialize on the fly for the task at hand. Apple Intelligence includes a series of adapters fine-tuned for many of the features shown at the keynote, such as urgency assessment, summarization, and email replies.
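The adapter mechanism can be sketched with plain Python lists. This is a minimal sketch assuming LoRA-style adapters (the technical document names a LoRA adapter framework); the class names, task names, and tiny 2×2 matrices are hypothetical. The base weight is never modified: selecting a task just adds that task's low-rank update `(alpha / r) * B @ A` on top of it, which is why adapters are cheap to store and swap.

```python
from dataclasses import dataclass

Matrix = list[list[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain nested-list matrix product (x is m×k, y is k×n)."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


@dataclass
class LoRAAdapter:
    a: Matrix           # r × d_in  (down-projection)
    b: Matrix           # d_out × r (up-projection)
    alpha: float = 1.0

    def delta(self) -> Matrix:
        """Low-rank weight update (alpha / r) * B @ A, where r = number of rows of A."""
        scale = self.alpha / len(self.a)
        return [[scale * v for v in row] for row in matmul(self.b, self.a)]


class AdapterSwitcher:
    """Keeps one frozen base weight and applies a task's adapter on request."""

    def __init__(self, base: Matrix):
        self.base = base
        self.adapters: dict[str, LoRAAdapter] = {}

    def register(self, task: str, adapter: LoRAAdapter) -> None:
        self.adapters[task] = adapter

    def weights_for(self, task: str) -> Matrix:
        adapter = self.adapters.get(task)
        if adapter is None:
            return self.base  # no adapter for this task: use the base model as-is
        d = adapter.delta()
        return [[w + dw for w, dw in zip(wr, dr)] for wr, dr in zip(self.base, d)]


def lora_param_count(d_in: int, d_out: int, r: int) -> int:
    """Parameters in one rank-r adapter: A (r×d_in) plus B (d_out×r)."""
    return r * (d_in + d_out)


switcher = AdapterSwitcher(base=[[1.0, 0.0], [0.0, 1.0]])
switcher.register("summarize", LoRAAdapter(a=[[1.0, 1.0]], b=[[1.0], [0.0]]))
print(switcher.weights_for("summarize"))          # [[2.0, 1.0], [0.0, 1.0]]
print(switcher.weights_for("unregistered_task"))  # [[1.0, 0.0], [0.0, 1.0]]
print(lora_param_count(4096, 4096, 16), 4096 * 4096)  # 131072 16777216
```

For a 4096×4096 layer, a rank-16 adapter needs 1/128 of the parameters of the full matrix, which is what makes shipping many task-specific adapters alongside one base model practical.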

As a result, the on-device model can at least handle the most common tasks well, such as basic summarization and email writing.

Overall, the model capabilities Apple demonstrated at this event far exceeded expectations: both its large and small models are now in the top tier.

AI that can only be used on new flagships may trigger a new wave of phone replacement

The other important piece of news: although iOS 18 runs on iPhone XS/XR and later, Apple Intelligence is available only on the iPhone 15 Pro and above, and iPads and Macs need an M1 chip or later. This means many users may have to replace their devices to use the AI features.

This is not Apple deliberately shutting out owners of older devices. Running large models on-device is bottlenecked by compute on the one hand and memory on the other. Compute is probably the lesser problem for Apple: Apple Intelligence supports devices starting from the M1, and the M1's Neural Engine, which handles AI inference, is actually weaker than the A16's, yet still sufficient for Apple's on-device model. So why can't the iPhone 14 Pro, or even the iPhone 15, run it? The reason is insufficient memory.

During inference the model occupies a large amount of working memory (DRAM), and too little memory severely slows inference or makes it impossible. The 8GB on the iPhone 15 Pro and above may therefore be the minimum the 3B on-device model needs, even after a series of optimizations.
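A back-of-the-envelope calculation shows why memory is the binding constraint. The sketch below counts only the bytes needed to hold 3B weights at a few bit widths; the bit widths are illustrative assumptions rather than Apple's disclosed configuration, and the KV cache, activations, the OS, and other apps all need DRAM on top of this.

```python
def weight_footprint_gb(n_params: float, bits_per_weight: float) -> float:
    """Memory needed just to store the weights, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# Illustrative bit widths; the actual quantization scheme is an assumption here.
for bits in (16, 8, 4):
    print(f"3B params @ {bits:>2}-bit: {weight_footprint_gb(3e9, bits):.1f} GB")
# 3B params @ 16-bit: 6.0 GB
# 3B params @  8-bit: 3.0 GB
# 3B params @  4-bit: 1.5 GB
```

At 16 bits the weights alone would take most of an 8GB phone's memory, which is why aggressive low-bit compression is a prerequisite for fitting a 3B model alongside everything else the device is running.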

There is still room for optimization, though. PowerInfer-2, a mobile inference framework released by Shanghai Jiao Tong University yesterday, proposes ways to further reduce memory usage, and bringing Gemini Nano to the Pixel 8 shows that Google is making the same effort.

Yet even if memory demands shrink, 7B and 14B models are already waiting in line for the high end. In the long run, users will still have to replace their devices: only larger on-device models can deliver the kind of magical experiences users are willing to pay for.

Roundtable discussion reveals relationship with OpenAI

If Apple's in-house large models are this strong, why partner with OpenAI? Craig Federighi and John Giannandrea addressed this in a closed-door conversation after the keynote, according to the record sent back by Tencent Technology colleagues at WWDC: "Existing large language models built on vast public information, such as ChatGPT, also have their uses. These very large frontier models have some interesting capabilities that users really like, and integrating them into our experience can make the user experience richer."

With this in mind, Apple announced at WWDC that it would work with OpenAI to offer more powerful AI services on its platforms. Note, however, that OpenAI's ChatGPT does not power Apple Intelligence; the two are completely independent, and Apple Intelligence runs entirely on Apple's own models.

In other words, although Apple officially announced the partnership at WWDC, it is not woven into Apple's system the way outsiders had speculated. It looks more like a template for working with third-party model companies. Federighi explained that Apple chose OpenAI because GPT-4o is currently the best LLM, but Apple may work with other LLM providers in the future and let users choose among external providers. Sam Altman's reaction was also subtle: usually high-profile, he posted only a single tweet after this "so important" partnership was announced.

Note: Sam Altman reportedly visited Apple Park but did not get a chance to appear on stage.

According to Federighi, Apple Intelligence is designed to be highly personalized intelligence that needs to utilize data on personal devices, such as photos, contacts, messages, and emails, to perform tasks. OpenAI's ChatGPT can come into play when users have more complex AI requests. For example, someone can use their Mac or iPhone to send a query to ChatGPT if they want ChatGPT to write a movie script for them.

Apple also took a privacy-first approach to its ChatGPT integration: no user data is sent to OpenAI without the user's permission, and the user must explicitly approve each request before it goes to ChatGPT.

Apple also envisions bringing in other specialized models: "For example, if I'm a doctor, I may want to bring in a medical model in the future; if I'm a lawyer, I may want a model refined specifically for legal work on my personal device." Apple believes such models will ultimately be a good complement to what users do with personal intelligence.
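The opt-in rule for ChatGPT can be expressed as a small routing function. This is a hypothetical sketch, not Apple's code: the `Request` fields, the `needs_external_model` flag, and the `ask_user` callback are assumptions standing in for whatever logic Apple actually uses, and Apple's split between on-device and private-cloud processing is collapsed into a single "apple-model" route. What it illustrates is that an external provider is reached only after an explicit yes, and that the provider name is a parameter, in line with Federighi's remark that other LLM providers may be offered later.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Request:
    text: str
    needs_external_model: bool  # hypothetical flag: Apple's own models can't handle this


def route(request: Request,
          ask_user: Callable[[str], bool],
          provider: str = "ChatGPT") -> Optional[str]:
    """Return where the request goes, or None if nothing may leave Apple's stack."""
    if not request.needs_external_model:
        return "apple-model"  # personal-context tasks stay with Apple's own models
    # External call: the user must explicitly approve this specific request first.
    if ask_user(f"Send this request to {provider}?"):
        return provider
    return None  # declined: no data is sent to the third party


print(route(Request("Summarize my unread email", False), ask_user=lambda _: True))
# apple-model
print(route(Request("Write a movie script", True), ask_user=lambda _: False))
# None
```

Note that the consent prompt is never shown for requests Apple's models handle themselves; the user is only asked when data would actually leave for a third party.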

Starting with OpenAI, will Apple go on to bring a variety of models onto its devices, much as it built today's app ecosystem, to give users a highly personalized, intelligent experience? That remains unknown; we will have to wait and see.

Building the on-device AI of the future: Apple's inherent ecosystem advantage

Although the use cases Apple showed at WWDC don't seem magical, they are genuinely practical scenarios that users will actually use. The note-taking and learning features on iPadOS, the calculator's full-screen recognition, emoji generation on iOS, and so on are both fun and useful. Other vendors offer similar applications, but Apple's versions are clearly more polished and more likely to make a deep impression on users.

After the keynote, in a live discussion hosted by Tencent Technology, Li Nan, founder of Nu Miao Technology, said: "Apple's on-device model has system-level permissions and data access. If you ask what Apple has really achieved in AI, it is the first in the industry to truly connect the phone's local APIs seamlessly with an on-device model, and the first to truly let the user's personal data be used to fine-tune an on-device model. Other on-device models want to do this too, but they can't get access to this data. Apple's core on-device AI capabilities will only get stronger."

This advantage comes from Apple's unique ecosystem, which Android aspires to but struggles to replicate. Apple may be the only company that can connect the AI experience and data flow across all of its hardware devices in a short time.

This is undoubtedly a key moat that has kept Apple at the top of the tech industry for years. Its ecosystem barrier is extremely solid, spanning not only products and systems but also the underlying chips and development tools, all of which Apple unifies completely.

This lets Apple, even as a latecomer to edge AI, offer an AI ecosystem its predecessors could not, and potentially more AI application scenarios. At WWDC24, for example, Apple demonstrated eye-catching features such as AI-generated emoji and AI-powered Math Notes, clearly more compelling than the many manufacturers still promoting AI cutout (background removal) as a headline feature.

"We hope that artificial intelligence will not replace our users, but enhance their capabilities." Craig Federighi talked about Apple's view on AI during the roundtable discussion, "This is different from any artificial intelligence we have seen before."

But problems also arise.

Apple may have to prove one thing over and over

Once the OpenAI partnership was confirmed, the obvious next question was how Apple can hand existing user data to a third party for AI-generated content while keeping that data secure.

This is extremely important to Apple.

Apple Intelligence was the most important announcement of this year's WWDC, and when unveiling it Craig Federighi spent a lot of time explaining how secure its "on-device + private cloud" combination is. Apple secures it through system-level encryption backed by its security chips and a completely closed transmission path...

Apple emphasized this part of the process in the keynote: it added a system-level switch so that anything sent to GPT-4o must be actively initiated and confirmed by the user.

Craig also stated at the roundtable that Apple processes user requests with its own encryption scheme. When data has to go to the cloud, it is desensitized and destroyed immediately after processing, and Apple itself cannot access the data during this process.

Apple hopes this approach will convince users that the system is safe enough. It has even opened its Apple Intelligence servers to independent security researchers, inviting third parties to help verify that user data will not leak.

Still, this has not dispelled outside concerns about leaks of private data, especially now that Siri is connected to GPT-4o. Questions such as how user data is desensitized and how to keep the Apple-OpenAI-Apple transmission path from going wrong have all been put to Apple.

Han Xu, chief researcher at Mianbi Intelligence, voiced similar concerns in a live broadcast with Tencent Technology. He believes that once data moves from Apple to a third party, whether OpenAI's GPT or Google's Gemini, Apple may lose control over its security, which does pose new challenges to Apple's privacy protections.

But the good news is that this isn't the first time Apple has faced such a problem.

Apple went through a similar debate when choosing a search provider. When it made Google the default search engine on the iPhone (outside China), it faced questions about how users' private data would be protected. In an exclusive interview with foreign media, Cook's explanation was strikingly similar to Apple's current reasoning for choosing OpenAI.

Apple chose Google, and Cook said Google is the best in terms of search engines.

Apple chose OpenAI, and Craig also said that OpenAI is currently the best choice for Apple.

On search, Cook said Apple had also built in many controls, such as Private Browsing in Safari and Intelligent Tracking Prevention, to protect users on all fronts.

On AI, Craig focused mainly on Apple's encryption strategy for on-device + private cloud, but clearly did not reveal much about OpenAI.

It is foreseeable that for a long time to come, Apple will have to prove again and again that its AI strategy, covering both its own models and third-party ones, is safe. For Apple as a company, this is a red line.