Author: Crypto, Distilled

Compiled by: TechFlow

Crypto and AI: Is this the end of the road?

In 2023, Web3-AI became a hot topic.

But today, it’s filled with copycats and mega-projects that serve no real purpose.

Here are the pitfalls to avoid and the key points to focus on.

Overview

IntoTheBlock CEO @jrdothoughts recently shared his insights in an article.

He discussed:

a. Core Challenges of Web3-AI

b. Overhyped trends

c. Trends with high potential

I’ve broken down each of these points for you! Let’s dive in:

Market Situation

The current Web3-AI market is overhyped and overfunded.

Many projects are out of touch with the actual needs of the AI industry.

This disconnect creates confusion, but also opportunities for those with insight.

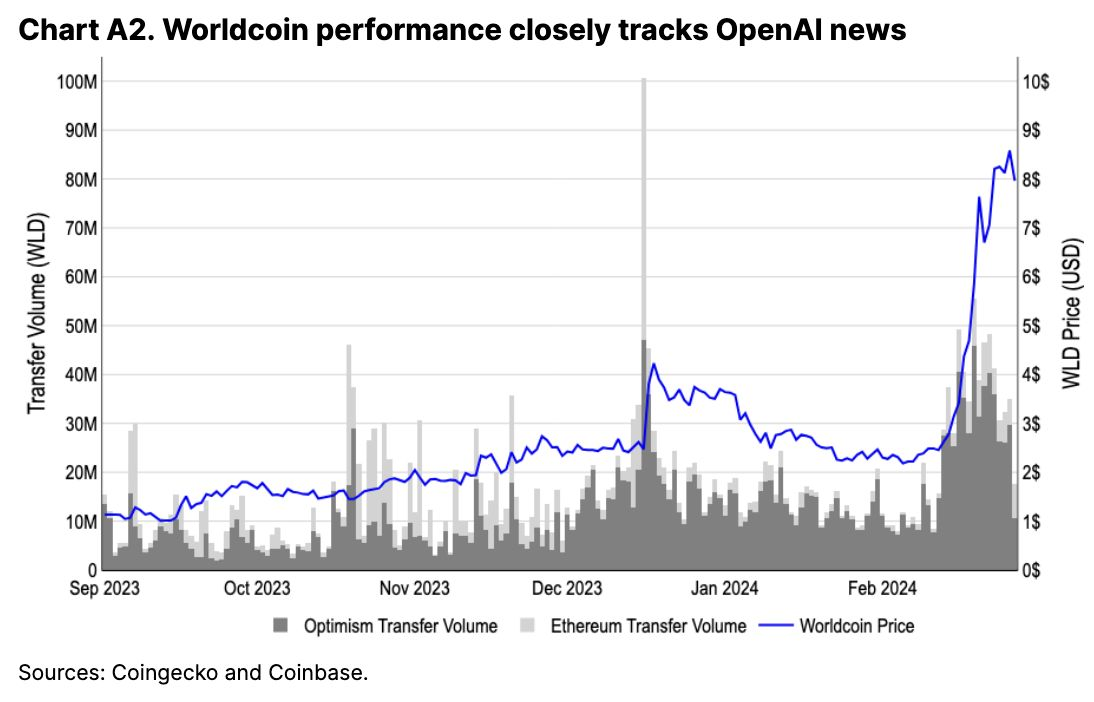

(Courtesy of @coinbase)

Core Challenges

The gap between Web2 and Web3 AI is widening for three main reasons:

Limited AI research talent

Limited infrastructure

Insufficient models, data, and computing resources

Generative AI Basics

Generative AI relies on three major elements: models, data, and computing resources.

Currently, no major model is optimized for Web3 infrastructure.

Initial funding supported a number of Web3 projects that were out of touch with AI reality.

Overrated Trends

Despite all the hype, not all Web3-AI trends are worth paying attention to.

Here are the trends @jrdothoughts considers most overrated:

a. Decentralized GPU networks

b. ZK-AI models

c. Proof of inference (courtesy of @ModulusLabs)

Decentralized GPU Network

These networks promise to democratize AI training.

But the reality is that training large models on decentralized infrastructure is slow and impractical.

This trend has yet to deliver on its lofty promise.
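One rough way to see why distributed training over ordinary connections is slow is to estimate how long a single gradient exchange takes. The sketch below is a back-of-the-envelope calculation under loudly stated assumptions that are not from the article: a hypothetical 7B-parameter model, naive data parallelism that sends one full copy of fp16 gradients (2 bytes per parameter) per step, and two illustrative link speeds.

```python
# Rough cost of synchronizing gradients once per training step.
# Assumptions (hypothetical, for illustration only):
#   - naive data parallelism: each step, a node sends one full copy of the
#     fp16 gradients (2 bytes per parameter); real all-reduce schemes move
#     roughly twice that and can compress or overlap communication.
#   - a hypothetical 7B-parameter model.


def gradient_sync_seconds(params: float, bytes_per_param: int, link_bits_per_s: float) -> float:
    """Seconds to push one full copy of the gradients over a single link."""
    payload_bits = params * bytes_per_param * 8
    return payload_bits / link_bits_per_s


if __name__ == "__main__":
    PARAMS = 7e9  # hypothetical model size
    links = {
        "100 Mbit/s home connection": 100e6,
        "400 Gbit/s datacenter interconnect": 400e9,
    }
    for name, bps in links.items():
        print(f"{name}: {gradient_sync_seconds(PARAMS, 2, bps):,.2f} s per step")
```

Under these assumptions, one exchange takes about 1,120 seconds over the home link versus about 0.28 seconds over the datacenter interconnect, and training needs many thousands of such steps. Real systems use tricks to narrow this gap, so treat the numbers as an order-of-magnitude illustration only.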

Zero-knowledge AI model

Zero-knowledge AI models appear attractive in terms of privacy protection.

But in practice, they are computationally expensive and difficult to interpret.

This makes them less practical for large-scale applications.

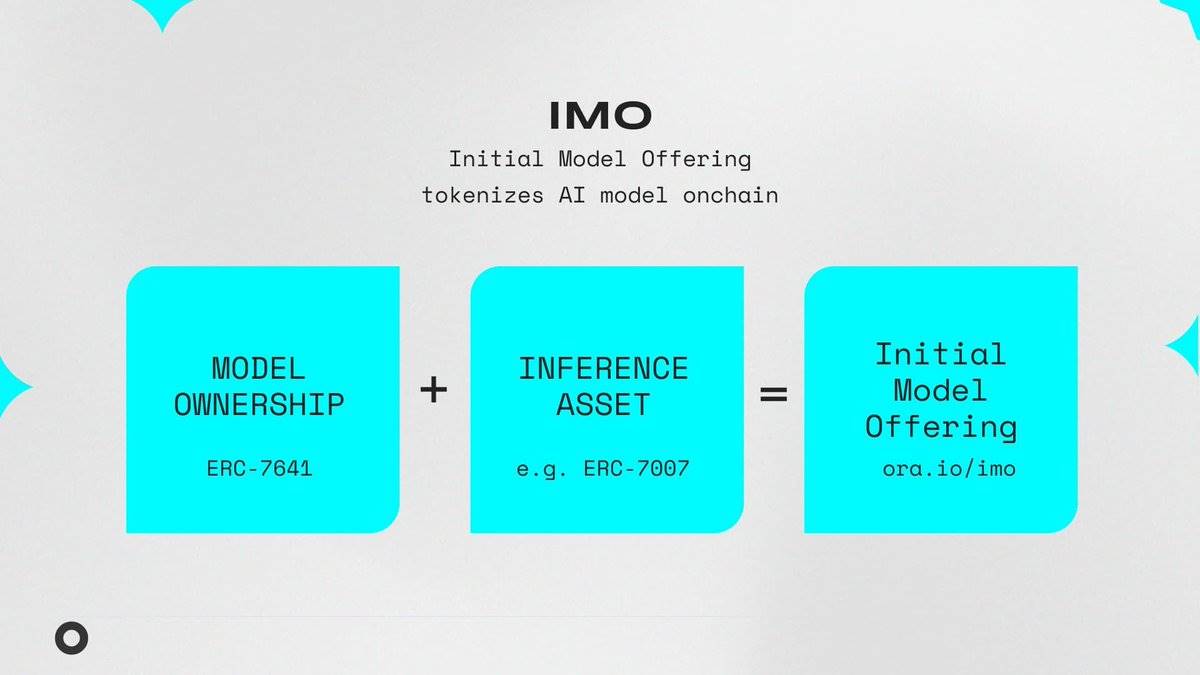

(Courtesy of @oraprotocol)

Text from the image:

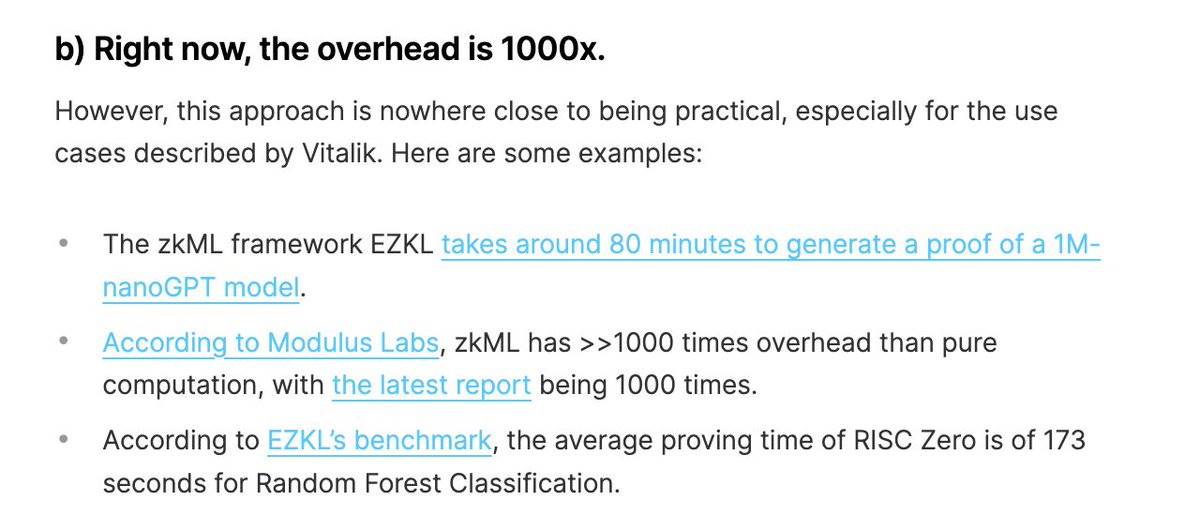

Currently, the overhead is as high as 1,000 times.

However, this approach is still far from being practical, especially for the use cases Vitalik describes. Here are some examples:

The zkML framework EZKL takes about 80 minutes to generate a proof for a 1M-parameter nanoGPT model.

According to Modulus Labs, zkML’s overhead is more than 1,000 times that of plain computation, with their latest report putting it at 1,000 times.

According to the EZKL benchmark, RISC Zero has an average proof time of 173 seconds on the random forest classification task.
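To make these figures more concrete, here is a small back-of-the-envelope sketch. It assumes, as a simplification not stated in the source, that the ~1,000x overhead acts as a flat multiplier on native compute time; real proving cost depends on circuit size, hardware, and framework. The 50 ms inference is a hypothetical example; the 173-second and 80-minute figures come from the benchmarks above.

```python
# Back-of-the-envelope zkML cost arithmetic.
# Assumption: overhead is modeled as a flat multiplier on native compute time,
# which is a simplification of how real proving costs scale.

OVERHEAD = 1_000          # ~1,000x, the figure reported by Modulus Labs
RISC_ZERO_PROOF_S = 173   # average proof time on the EZKL random-forest benchmark
EZKL_NANOGPT_MIN = 80     # approximate proof time for a 1M-parameter nanoGPT


def proving_time_s(native_s: float, overhead: float = OVERHEAD) -> float:
    """Estimated proving time for a computation that takes native_s seconds unproven."""
    return native_s * overhead


def proofs_per_hour(proof_s: float) -> float:
    """How many sequential proofs a single prover can finish in one hour."""
    return 3600 / proof_s


if __name__ == "__main__":
    # A hypothetical 50 ms inference becomes roughly 50 s once proven.
    print(f"50 ms inference -> {proving_time_s(0.05):.0f} s to prove")
    print(f"RISC Zero: {proofs_per_hour(RISC_ZERO_PROOF_S):.1f} proofs/hour")
    print(f"EZKL nanoGPT: {proofs_per_hour(EZKL_NANOGPT_MIN * 60):.2f} proofs/hour")
```

At these rates, a single prover manages roughly 20 random-forest proofs or fewer than one nanoGPT proof per hour, which illustrates why the overhead matters for interactive use cases.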

Proof of Inference

Proof-of-inference frameworks provide cryptographic proofs for AI outputs.

However, @jrdothoughts argues that these solutions solve problems that don't exist.

Therefore, they have limited real-world applications.

High-potential trends

While some trends are overhyped, others have significant potential.

Here are some underappreciated trends that could offer real opportunities:

a. AI agents with wallets

b. Cryptocurrency to fund AI

c. Small base models

d. Synthetic Data Generation

AI agents with wallets

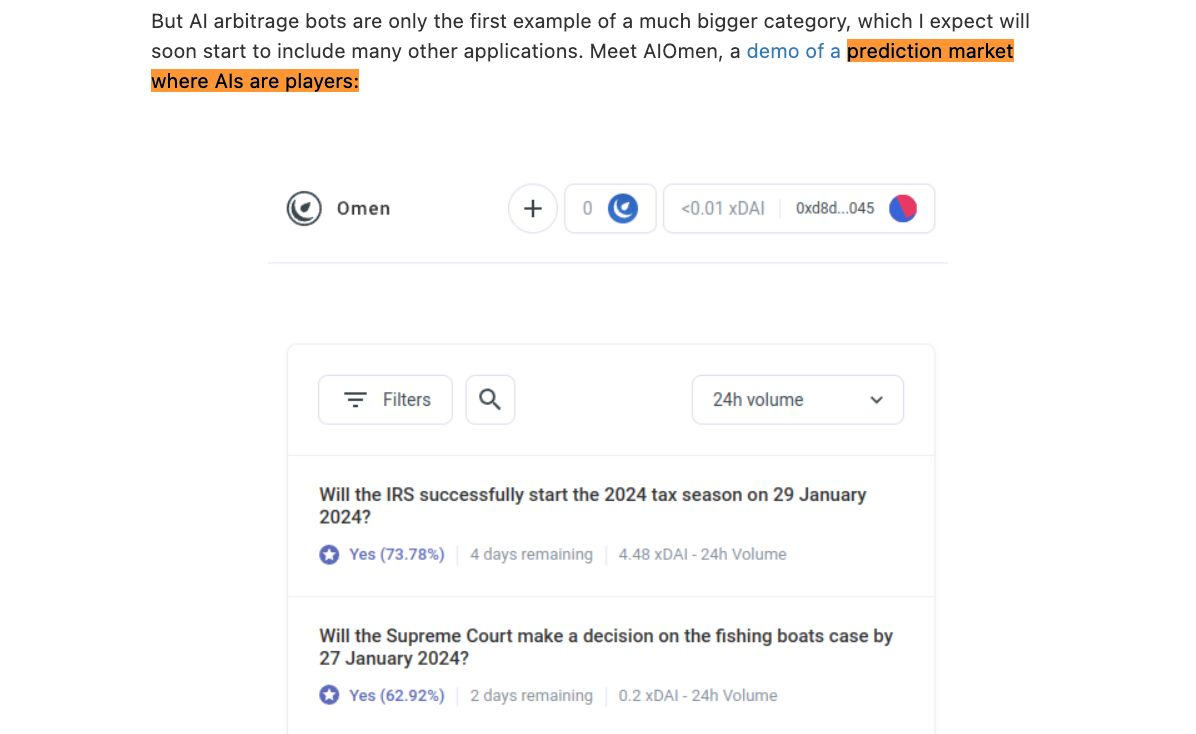

Imagine AI agents that can hold and spend cryptocurrency.

These agents can hire other agents or stake funds to ensure quality.

Another interesting application is "predictive agents," as mentioned by @vitalikbuterin.
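The idea of agents hiring each other and staking funds to guarantee quality can be sketched as a simple escrow. The toy below is a hypothetical illustration, not any real protocol or smart-contract API: balances live in an in-memory dictionary standing in for on-chain wallets, and the `work_accepted` flag assumes some separate verification step has already judged the work.

```python
from dataclasses import dataclass, field

ESCROW = "escrow"  # hypothetical escrow account name


@dataclass
class Ledger:
    """Toy in-memory balance sheet standing in for on-chain wallets (hypothetical)."""
    balances: dict[str, int] = field(default_factory=dict)

    def transfer(self, src: str, dst: str, amount: int) -> None:
        if self.balances.get(src, 0) < amount:
            raise ValueError(f"{src} has insufficient funds")
        self.balances[src] -= amount
        self.balances[dst] = self.balances.get(dst, 0) + amount


@dataclass
class Job:
    hirer: str   # agent paying for the work
    worker: str  # agent doing the work
    fee: int     # payment offered by the hirer
    stake: int   # collateral the worker puts up to vouch for quality


def open_job(ledger: Ledger, job: Job) -> None:
    """Hirer locks the fee and worker locks its quality stake in escrow."""
    ledger.transfer(job.hirer, ESCROW, job.fee)
    ledger.transfer(job.worker, ESCROW, job.stake)


def settle_job(ledger: Ledger, job: Job, work_accepted: bool) -> None:
    """On success the worker gets fee plus stake back; on failure the hirer
    is refunded and also receives the worker's slashed stake."""
    payout = job.fee + job.stake
    recipient = job.worker if work_accepted else job.hirer
    ledger.transfer(ESCROW, recipient, payout)
```

For example, if `agent_a` (balance 100) hires `agent_b` (balance 50) for a fee of 30 with a stake of 20 and the work is rejected, `agent_a` ends with 120 and `agent_b` with 30. Sending the slashed stake to the hirer is just one design choice; a real system might burn it or route it elsewhere.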

Cryptocurrency Funding for AI

Generative AI projects are often underfunded.

Cryptocurrency’s efficient capital-formation mechanisms, such as airdrops and token incentives, can provide critical funding for open-source AI projects.

These approaches help drive innovation. (Courtesy of @oraprotocol)

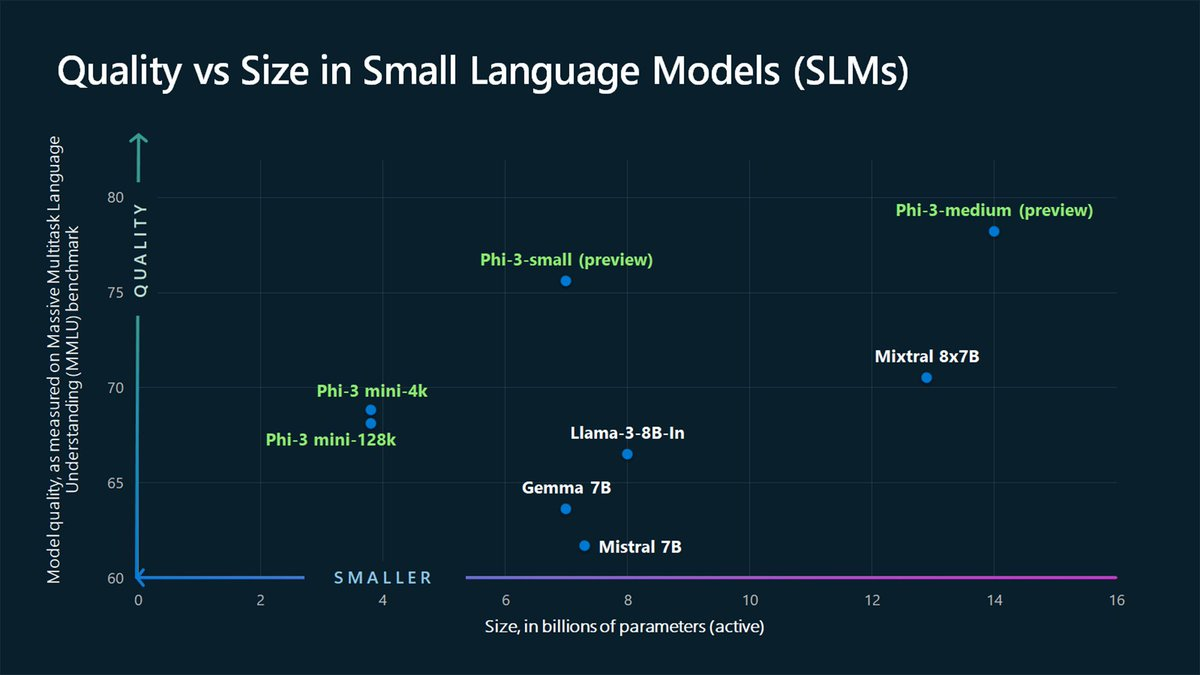

Small base model

Small base models, such as Microsoft's Phi models, show that less can be more.

Models with 1B-5B parameters are critical to decentralized AI and enable powerful on-device AI solutions.

(Source: @microsoft)
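A quick way to see why the 1B to 5B range suits on-device use is to estimate how much memory the weights alone occupy. This sketch covers weights only (it ignores activations, KV cache, and runtime overhead) and uses decimal gigabytes; the 16-, 8-, and 4-bit precisions are common choices picked for illustration, not figures from the article.

```python
# Approximate memory needed just to hold a model's weights.
# Simplification: ignores activations, KV cache, and runtime overhead.

BYTES_PER_GB = 1e9  # decimal gigabytes


def weight_memory_gb(params: float, bits_per_param: int) -> float:
    """Weights-only memory footprint in GB for a given parameter count and precision."""
    return params * bits_per_param / 8 / BYTES_PER_GB


if __name__ == "__main__":
    for params in (1e9, 5e9):
        for bits in (16, 8, 4):
            gb = weight_memory_gb(params, bits)
            print(f"{params / 1e9:.0f}B params @ {bits}-bit: {gb:.1f} GB")
```

By this estimate, a 1B model needs about 2 GB at 16-bit precision, and a 5B model quantized to 4 bits needs about 2.5 GB, both within reach of many phones and laptops.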

Synthetic Data Generation

Data scarcity is one of the main obstacles to the development of AI.

Synthetic data generated by base models can effectively supplement real-world datasets.

Overcoming the hype

The initial Web3-AI craze focused on some unrealistic value propositions.

@jrdothoughts argues that the focus should now turn to building solutions that actually work.

As attention shifts, the field remains full of opportunities for those with a keen eye.

This article is for educational purposes only and is not financial advice. Many thanks to @jrdothoughts for his valuable insights.