Possible futures of the Ethereum protocol, part 1: The Merge

Author: Vitalik, Founder of Ethereum

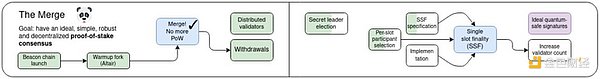

Originally, "The Merge" referred to the most important event in the Ethereum protocol's history since its launch: the long-awaited and hard-won transition from Proof of Work to Proof of Stake. Today, Ethereum has been running stably as a Proof of Stake system for nearly two years, and PoS has performed very well in terms of stability, performance, and avoiding centralization risks. However, there are still some important areas where PoS needs to improve.

My 2023 roadmap splits this into several buckets: improvements to technical features such as stability, performance, and accessibility to smaller validators, and economic changes to address centralization risks. The former took over the “The Merge” heading, while the latter became part of “The Scourge”.

This post will focus on “The Merge”: how can the technical design of Proof of Stake (PoS) be improved, and what are the ways to achieve these improvements?

This is not an exhaustive list of things that could be done with PoS; rather, it is a list of ideas that are being actively considered.

Single Slot Finality (SSF) and Democratizing Staking

What problem are we solving?

Currently, Ethereum takes 2-3 epochs (about 15 minutes) to finalize a block, and requires 32 ETH to become a staker.

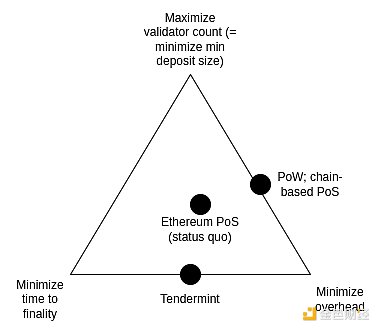

This was originally a compromise to strike a balance between three goals:

Maximize the number of validators participating in staking (equivalently, minimize the minimum amount of ETH required to stake)

Minimize finalization time

Minimize the overhead of running a node

These three goals are in conflict with each other. To achieve "economic finality" (i.e., an attacker would need to destroy a large amount of ETH to revert a finalized block), every validator needs to sign two messages each time a block is finalized. So if there are many validators, either it takes a long time to process all of their signatures, or you need very powerful nodes to process them all at once.
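Here is a rough back-of-the-envelope sketch of this tension. The validator count is a hypothetical round number, not a mainnet figure. The two messages per validator come from the paragraph above. The two time windows are the roughly 15-minute status quo and a single 12-second slot.

```python
def signatures_per_second(num_validators: int, window_seconds: float,
                          messages_per_validator: int = 2) -> float:
    """Signature throughput nodes must handle if every validator signs
    `messages_per_validator` messages within `window_seconds`."""
    return num_validators * messages_per_validator / window_seconds


# Hypothetical validator count, e.g. if the minimum stake were lowered a lot.
VALIDATORS = 1_000_000

slow = signatures_per_second(VALIDATORS, 15 * 60)  # ~15-minute finality
fast = signatures_per_second(VALIDATORS, 12)       # finality within one 12 s slot

print(f"15-minute window: {slow:,.0f} signatures/s")
print(f"12-second window: {fast:,.0f} signatures/s")
print(f"load increase:    {fast / slow:.0f}x")
```

The increase is simply the ratio of the two windows (900 s / 12 s = 75). At high validator counts, single-slot finality therefore needs either fewer signers per slot or much better aggregation.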

Note that all of this depends on a key goal of Ethereum: ensuring that even a successful attack is very costly to the attacker. This is what the term “economic finality” means. If we did not have this goal, we could solve the problem by randomly selecting a committee to finalize each slot (as Algorand does). The problem with this approach is that an attacker who controls 51% of the validators can attack the chain at a very low cost, whether by reverting finalized blocks, censoring, or delaying finalization. Only the portion of the attacker's nodes that happens to sit on the committee can be detected as participating in the attack and punished, through either slashing or a minority soft fork. This means an attacker could attack the chain over and over again. Therefore, if we want economic finality, the simple committee-based approach does not work, and at first glance we do need full participation from validators.
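The following toy model shows why committees give up economic finality. It assumes 2/3 quorums. Under that rule, two conflicting finalized blocks must share signers holding at least 1/3 of the stake that actually votes. The total stake and the 1% committee share are hypothetical numbers chosen only for illustration.

```python
def min_slashable_eth(total_stake_eth: float, participating_fraction: float) -> float:
    """Lower bound on stake slashed when two conflicting blocks are finalized.

    With 2/3 quorums, two conflicting finalized blocks need overlapping
    signers making up at least 1/3 of the stake that actually votes. If only
    a committee votes, only the committee's stake is exposed.
    """
    return total_stake_eth * participating_fraction / 3


TOTAL_STAKE_ETH = 30_000_000  # hypothetical round number, not a live figure

full = min_slashable_eth(TOTAL_STAKE_ETH, 1.0)        # every validator votes
committee = min_slashable_eth(TOTAL_STAKE_ETH, 0.01)  # hypothetical 1% committee

print(f"full participation: at least {full:,.0f} ETH slashed per attack")
print(f"1% committee:       at least {committee:,.0f} ETH slashed per attack")
```

Each attack burns only the committee's share. An attacker holding 51% of all stake can therefore absorb that loss many times over, which is the repeated-attack problem described above.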

Ideally, we would like to preserve economic finality while improving upon the status quo in two areas:

1. Finalize blocks in one slot (ideally keeping or even reducing the current 12-second slot length) instead of 15 minutes

2. Allow validators to stake with 1 ETH (down from 32 ETH)

The first goal is justified by two objectives, both of which can be viewed as “aligning the properties of Ethereum with those of (more centralized) performance-focused L1 chains.”

First, it ensures that all Ethereum users benefit from the stronger security guarantees provided by the finalization mechanism. Today, most users do not get this guarantee because they are unwilling to wait 15 minutes. With single-slot finality, users would see their transactions finalized almost as soon as they are confirmed. Second, if users and applications do not have to worry about the possibility of chain rollbacks (barring the relatively rare case of an inactivity leak), the protocol and the infrastructure around it become simpler.

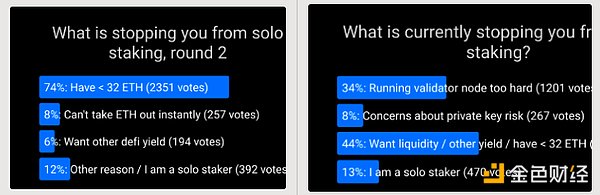

The second goal is motivated by a desire to support solo stakers. Poll after poll has repeatedly shown that the main factor preventing more people from solo staking is the 32 ETH minimum. Lowering the minimum to 1 ETH would solve this problem to the point where other issues become the main factor limiting solo staking.

There is a challenge: both faster finality and more democratized staking conflict with the goal of minimizing overhead. In fact, this is the entire reason we did not adopt single-slot finality in the first place. However, recent research suggests some possible solutions to this problem.

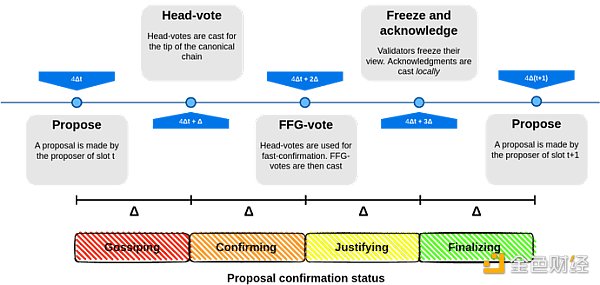

What is SSF and how does it work?

Single-slot finality involves using a consensus algorithm that finalizes blocks within one slot. This is not an unattainable goal in itself: many algorithms (such as Tendermint consensus) already achieve it with optimal properties. One desirable property unique to Ethereum that Tendermint does not support is the "inactivity leak". It allows the chain to keep running and eventually recover even if more than 1/3 of validators are offline. Fortunately, this has already been addressed: there are proposals to modify Tendermint-style consensus to accommodate an inactivity leak.
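The idea behind the inactivity leak can be shown with a toy model. The stake of offline validators is drained until the validators still online hold a 2/3 quorum again, at which point finality can resume. This sketch is not the consensus-spec formula, which tracks per-validator inactivity scores. The leak rate and the 60/40 split are arbitrary illustrative values.

```python
def epochs_until_finality_resumes(online_eth: float, offline_eth: float,
                                  leak_rate: float = 1e-4) -> int:
    """Toy inactivity leak (NOT the consensus-spec formula).

    Each epoch, offline stake loses a fraction that grows linearly with the
    number of epochs it has been offline, so its cumulative loss grows roughly
    quadratically. Returns the first epoch at which online stake reaches the
    2/3 quorum again (0 if it already has it).
    """
    if online_eth <= 0:
        raise ValueError("some stake must remain online for the leak to help")
    epoch = 0
    # Online share below 2/3  <=>  3 * online < 2 * (online + offline)
    while 3 * online_eth < 2 * (online_eth + offline_eth):
        epoch += 1
        offline_eth *= 1 - min(1.0, leak_rate * epoch)
    return epoch


# Hypothetical split: 60% of stake online, 40% offline (e.g. after a client bug).
print(epochs_until_finality_resumes(60, 40))
```

The more stake that is offline, the longer the leak runs before a quorum is restored. Classic Tendermint would simply stall in the same situation.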

Leading Single-Slot Finality Proposals

The hardest part of the problem is figuring out how to make single-slot finality work at very high validator counts without incurring extremely high node operator overhead. To this end, there are several leading solutions:

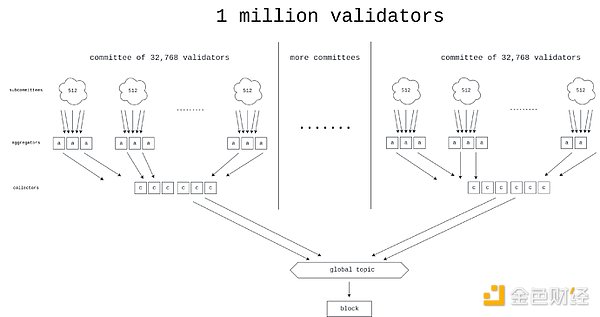

Option 1: Brute Force - Work towards better signature aggregation protocols, perhaps using ZK-SNARKs, that would essentially allow us to process signatures from millions of validators in each slot.

Horn, one of the proposed designs for a better aggregation protocol.

Option 2: Orbit Committees - A new mechanism that makes a randomly selected, medium-sized committee responsible for finalizing the chain, in a way that preserves the attack-cost properties we seek.

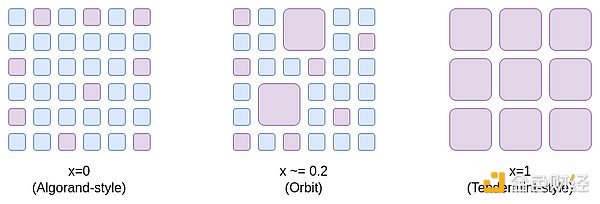

One way to think about Orbit SSF is that it opens up a space of compromise options between x=0 (Algorand-style committees with no economic finality) and x=1 (the status quo in Ethereum). The middle points leave Ethereum with enough economic finality to be extremely secure. At the same time, only a moderately sized random sample of validators needs to participate in each slot, which brings an efficiency advantage.

Orbit exploits pre-existing heterogeneity in validator deposit sizes to obtain as much economic finality as possible while still giving solo validators appropriate roles. Additionally, Orbit uses slow committee rotation to ensure a high overlap between adjacent quorums, ensuring that its economic finality still applies across committee rotation boundaries.
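The two ideas above can be sketched as follows: balance-dependent committee selection and slow rotation. This is a hypothetical illustration, not Orbit's actual specification. The cap, the sampling rule (`min(1, balance / cap)`), the rotation rate and the validator set are all assumptions. Large validators always get a seat. Solo validators are sampled. Only a small fraction of seats are re-drawn per step, so adjacent committees overlap heavily.

```python
from __future__ import annotations

import random


def inclusion_probability(balance_eth: float, cap_eth: float) -> float:
    """Hypothetical rule: validators at or above `cap_eth` always sit on the
    committee; smaller ones are sampled with probability balance / cap."""
    return min(1.0, balance_eth / cap_eth)


def sample_committee(balances: dict[str, float], cap_eth: float,
                     rng: random.Random) -> set[str]:
    return {v for v, b in balances.items()
            if rng.random() < inclusion_probability(b, cap_eth)}


def rotate(committee: set[str], balances: dict[str, float], cap_eth: float,
           fraction: float, rng: random.Random) -> set[str]:
    """Slow rotation: each validator's seat is re-drawn with probability
    `fraction`; everyone else keeps their current status."""
    new_committee = set()
    for v, b in balances.items():
        if rng.random() < fraction:
            if rng.random() < inclusion_probability(b, cap_eth):
                new_committee.add(v)
        elif v in committee:
            new_committee.add(v)
    return new_committee


rng = random.Random(7)
# Hypothetical validator set: 10 consolidated 2048 ETH validators, 1,000 solo 32 ETH ones.
balances = {f"big{i}": 2048.0 for i in range(10)}
balances.update({f"solo{i}": 32.0 for i in range(1000)})

c1 = sample_committee(balances, cap_eth=1024, rng=rng)
c2 = rotate(c1, balances, cap_eth=1024, fraction=0.05, rng=rng)
overlap = len(c1 & c2) / len(c1)
print(len(c1), len(c2), f"overlap={overlap:.0%}")
```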

Option 3: Two-Tier Staking - A mechanism where stakers are divided into two classes, one with a higher deposit requirement and one with a lower deposit requirement. Only the tier with the higher deposit requirement would directly participate in providing economic finality. There are various proposals (see the Rainbow staking article, for example) to specify what rights and responsibilities the tier with the lower deposit requirement would have. Common ideas include:

Delegation of staking rights to higher-tier stakers

Random selection of lower-tier stakers to attest to and finalize each block

The right to generate inclusion lists

What are the connections with existing research?

Path to single-slot finality (2022): https://notes.ethereum.org/@vbuterin/single_slot_finality

Specific proposal for Ethereum single-slot finality protocol (2023): https://eprint.iacr.org/2023/280

Orbit SSF: https://ethresear.ch/t/orbit-ssf-solo-staking-friendly-validator-set-management-for-ssf/19928

Further analysis of Orbit style mechanism: https://notes.ethereum.org/@anderselowsson/Vorbit_SSF

Horn, Signature Aggregation Protocol (2022): https://ethresear.ch/t/horn-collecting-signatures-for-faster-finality/14219

Signature merging for large-scale consensus (2023): https://ethresear.ch/t/signature-merging-for-large-scale-consensus/17386?u=asn

Signature aggregation protocol proposed by Khovratovich et al.: https://hackmd.io/@7dpNYqjKQGeYC7wMlPxHtQ/BykM3ggu0#/

STARK-based signature aggregation (2022): https://hackmd.io/@vbuterin/stark_aggregation

Rainbow Staking: https://ethresear.ch/t/unbundling-staking-towards-rainbow-staking/18683

What is left to do? What are the trade-offs?

There are four main possible paths to choose from (we can also take hybrid paths):

1. Maintain the status quo

2. Orbit SSF

3. Brute Force SSF

4. SSF with a two-tier staking mechanism

Option 1 means doing nothing and leaving things as they are. However, this would make Ethereum’s security experience and staking centralization properties worse than they could otherwise be.

Option 2 avoids “high tech” and solves the problem by cleverly rethinking protocol assumptions. We relax the “economic finality” requirement: attacks must still be expensive, but the cost of an attack may be 10 times lower than it is today (e.g., $2.5 billion instead of $25 billion). It is widely believed that Ethereum today has far more economic finality than it needs and that its main security risks lie elsewhere, so this is arguably an acceptable sacrifice.

The main work is to verify that the Orbit mechanism is secure and has the properties we want, and then to fully formalize and implement it. In addition, EIP-7251 (increase the maximum effective balance) allows validators to voluntarily consolidate their balances. This immediately reduces chain verification overhead and serves as an effective initial stage of the Orbit rollout.
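The following sketch shows why consolidation under EIP-7251 reduces verification overhead. The number of validator entries falls when large stakers can pack their ETH into fewer validators. The 32 ETH and 2048 ETH caps are the pre- and post-EIP-7251 maximum effective balances. The operator mix is hypothetical, and the sketch assumes every operator fully consolidates, whereas in practice consolidation is voluntary.

```python
from __future__ import annotations

import math


def validator_count(operator_stakes_eth: list[int], max_effective_balance_eth: int) -> int:
    """Fewest validator entries needed if every operator packs its stake
    into validators of at most `max_effective_balance_eth` each."""
    return sum(math.ceil(stake / max_effective_balance_eth)
               for stake in operator_stakes_eth)


# Hypothetical operator mix: one large pool, four mid-sized operators, 10,000 solo stakers.
stakes = [1_000_000] + [50_000] * 4 + [32] * 10_000

before = validator_count(stakes, 32)   # pre-EIP-7251 cap of 32 ETH
after = validator_count(stakes, 2048)  # EIP-7251 cap of 2048 ETH, if everyone consolidates

print(f"validators before: {before:,}, after: {after:,}")
```

All of the reduction comes from the large operators. Solo stakers still occupy one entry each, which is the heterogeneity that Orbit is designed to exploit.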

Option 3 avoids clever rethinking and instead uses high tech to brute-force the problem. Doing so requires collecting a very large number of signatures (more than 1 million) in a very short time (5-10 seconds).
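Hierarchical aggregation helps with this workload. The sketch below uses a generic two-level tree: validators are split into groups, one aggregator per group combines the raw signatures, and a top-level node combines the group aggregates. It is loosely in the spirit of designs like Horn, not their actual protocol. The group size is an illustrative choice, while the signature count and time window come from the text.

```python
from __future__ import annotations

import math


def two_level_load(num_signatures: int, group_size: int) -> tuple[int, int]:
    """Messages received per node in a generic two-level aggregation tree:
    (raw signatures per first-layer aggregator, at most; aggregates at the top)."""
    first_layer = group_size
    top_layer = math.ceil(num_signatures / group_size)  # one aggregate per group
    return first_layer, top_layer


N = 1_000_000  # "more than 1 million" signatures, from the text
WINDOW_S = 5   # lower end of the 5-10 second window, from the text

print(f"one node verifying everything: {N / WINDOW_S:,.0f} signatures/s")

group = math.isqrt(N)  # balances the two layers when N is a perfect square
print("per-node load with two layers:", two_level_load(N, group))
```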

Option 4 avoids both clever rethinking and high tech, but it creates a two-tiered staking system that still carries centralization risks. The size of the risk depends largely on the specific rights the lower staking tier receives. For example:

If lower-tier stakers need to delegate their attestation power to higher-tier stakers, delegation may become centralized and we will end up with two highly concentrated staking tiers.

If a random sample of lower-tier stakers is required to approve each block, then an attacker can prevent finality by spending only a tiny amount of ETH.

If lower-tier stakers can only produce inclusion lists, then the attestation layer may remain centralized. In that case, a 51% attack on the attestation layer can censor the inclusion lists themselves.

It is possible to combine multiple strategies, for example:

1 + 2: Add Orbit, but do not enforce single-slot finality

1 + 3: Use brute-force techniques to reduce the minimum deposit size without single-slot finality. The amount of aggregation required is 64 times less than in the pure (3) case, because each validator signs once per 32-slot epoch instead of twice per slot. This makes the problem easier.

2 + 3: Run Orbit SSF with conservative parameters (e.g., a 128k-validator committee instead of 8k or 32k), and use brute-force techniques to make it super efficient.

1 + 4: Add Rainbow staking, but without single-slot finality

How does SSF interact with the rest of the roadmap?

Among other benefits, single-slot finality reduces the risk of certain types of multi-block MEV attacks. In a single-slot-finality world, attester-proposer separation designs and other in-protocol block production pipelines would also need to be designed differently.

The weakness of brute-force strategies is that they make it harder to reduce slot times.

Single secret leader election (SSLE)

What problem are we solving?

Today, it is known in advance which validator will propose the next block. This creates a security vulnerability: an attacker can monitor the network, determine which validators correspond to which IP addresses, and launch a DoS attack on the validator when it is about to propose a block.

What is SSLE and how does it work?

The best way to solve the DoS problem is to hide which validator will produce the next block, at least until the block is actually produced. This is easy if we drop the "single" requirement. One solution is to let anyone create the next block, but require their RANDAO reveal to be less than 2^256 / N. On average, one validator will meet this requirement, but sometimes two or more will, and sometimes none will. Combining the "secret" requirement with the "single" requirement has long been a difficult problem.
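The following toy simulation shows why the threshold rule is not "single". A SHA-256 hash of (validator index, slot) stands in for each validator's RANDAO reveal. This substitution is an assumption: real reveals are BLS signatures that only the validator can compute in advance, so this toy is not secret at all. It only illustrates how the number of eligible proposers per slot varies. The validator count and number of slots are arbitrary.

```python
from __future__ import annotations

import hashlib


def reveal_value(validator_index: int, slot: int) -> int:
    """Toy stand-in for a RANDAO reveal: a 256-bit hash of (validator, slot)."""
    data = validator_index.to_bytes(8, "little") + slot.to_bytes(8, "little")
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def eligible_proposers(num_validators: int, slot: int) -> list[int]:
    """Everyone whose reveal falls below 2^256 / N may propose in this slot."""
    threshold = 2**256 // num_validators
    return [v for v in range(num_validators) if reveal_value(v, slot) < threshold]


N = 1_000
counts = [len(eligible_proposers(N, slot)) for slot in range(200)]
print("slots with no proposer:  ", counts.count(0))
print("slots with one proposer: ", counts.count(1))
print("slots with 2+ proposers: ", sum(c >= 2 for c in counts))
print("average proposers/slot:  ", sum(counts) / len(counts))
```

The average stays close to one, but many slots end up with zero or several eligible proposers. That is the behavior the "single" requirement rules out.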

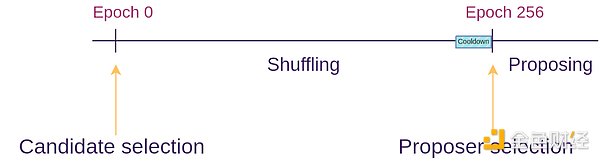

The single secret leader election protocol solves this by using cryptography to create a "blinded" validator ID for each validator. Many proposers are then given the opportunity to shuffle and re-blind the pool of blinded IDs, similar to how a mixnet works. During each slot, a random blinded ID is chosen. Only the owner of that blinded ID can generate a valid proof to propose a block, but no one else knows which validator that blinded ID corresponds to.

Whisk SSLE protocol
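The following toy sketch shows the blind / shuffle / re-blind flow described above. A validator with secret `k` gets a blinded ID `(A, B)` with `B = A^k`. Re-blinding raises both parts to a fresh random exponent. This preserves the relation, so only the holder of `k` can recognize its ID afterwards. The group (integers modulo 2^127 - 1), the generator and all helper names are illustrative assumptions. Real designs use elliptic curves and zero-knowledge proofs that each shuffle was honest, both of which are omitted here, and these parameters are not secure.

```python
from __future__ import annotations

import random
import secrets

# Toy group: integers modulo the Mersenne prime 2^127 - 1. Illustrative only.
P = 2**127 - 1
G = 3


def rand_exponent() -> int:
    return secrets.randbelow(P - 2) + 1


def new_tracker(k: int) -> tuple[int, int]:
    """Blinded ID for the holder of secret k: (A, B) = (G^r, G^(r*k))."""
    a = pow(G, rand_exponent(), P)
    return a, pow(a, k, P)


def reblind(tracker: tuple[int, int]) -> tuple[int, int]:
    """Raise both parts to a fresh secret s; the relation B = A^k survives."""
    a, b = tracker
    s = rand_exponent()
    return pow(a, s, P), pow(b, s, P)


def owns(tracker: tuple[int, int], k: int) -> bool:
    a, b = tracker
    return pow(a, k, P) == b


secret_keys = [rand_exponent() for _ in range(8)]
pool = [new_tracker(k) for k in secret_keys]

# Several proposers in turn shuffle the pool and re-blind every entry.
shuffler = random.SystemRandom()
for _ in range(5):
    shuffler.shuffle(pool)
    pool = [reblind(t) for t in pool]

chosen = shuffler.choice(pool)  # the blinded ID selected for this slot
owners = [i for i, k in enumerate(secret_keys) if owns(chosen, k)]
print("validators who recognise the chosen ID:", owners)
```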

What are the connections with existing research?

Dan Boneh’s paper (2020): https://eprint.iacr.org/2020/025.pdf

Whisk (Ethereum specific proposal, 2022): https://ethresear.ch/t/whisk-a-practical-shuffle-based-ssle-protocol-for-ethereum/11763

Single Secret Leader Election tag on ethresear.ch: https://ethresear.ch/tag/single-secret-leader-election

Simplified SSLE using ring signatures: https://ethresear.ch/t/simplified-ssle/12315

What is left to do? What are the trade-offs?

Really, all that’s left is to find and implement a protocol that’s simple enough that we can easily implement it on mainnet. We place a lot of importance on Ethereum being a fairly simple protocol, and we don’t want the complexity to grow further. The SSLE implementations we’ve seen add hundreds of lines of code to the specification and introduce new assumptions in complex cryptography. Finding a sufficiently efficient quantum-resistant SSLE implementation is also an open problem.

The marginal additional complexity of SSLE may turn out to drop low enough only once we take the plunge and add general-purpose zero-knowledge proof machinery to the Ethereum protocol at L1 for other reasons (e.g., state tries, ZK-EVM).

Another option is to not bother with SSLE at all, and instead use extra-protocol mitigations (e.g., at the p2p layer) to address the DoS problem.

How does it interact with the rest of the roadmap?

If we add an attester-proposer separation (APS) mechanism such as execution tickets, then execution blocks (i.e., blocks containing Ethereum transactions) will not need SSLE, because we can rely on dedicated block builders. However, consensus blocks would still benefit from SSLE. These are blocks containing protocol messages such as attestations, possibly parts of inclusion lists, etc.

Faster transaction confirmation

What problem are we solving?

It would be valuable to further reduce Ethereum's transaction confirmation time from 12 seconds to 4 seconds. Doing so would significantly improve the user experience of both L1 and rollups, while making DeFi protocols more efficient. It would also make L2s more decentralized by allowing a large class of L2 applications to work as based rollups. This would reduce the need for L2s to build their own committee-based decentralized sequencing.

What is Faster Transaction Confirmation and How Does It Work?

There are roughly two techniques here:

1. Reduce the slot time, for example to 8 seconds or 4 seconds. This does not necessarily mean 4-second finality, because finality itself requires three rounds of communication. Instead, we can make each round of communication a separate block, which would receive at least a preliminary confirmation after 4 seconds.

2. Allow proposers to publish preconfirmations during the slot. In the extreme case, a proposer could add transactions to its block in real time as it sees them, immediately publishing a preconfirmation message for each one ("My first transaction is 0x1234...", "My second transaction is 0x5678..."). If a proposer publishes two conflicting confirmations, this can be handled in two ways: (i) by punishing the proposer, or (ii) by having attesters vote on which one came first.
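Option (i) above depends on being able to spot a conflicting pair of preconfirmations. The sketch below shows one way to do that, using a hypothetical message format of (proposer, slot, position, transaction hash). It keeps the first promise for each position and records evidence whenever the same proposer later promises a different transaction for that position. Signatures, message propagation and the attester vote in option (ii) are not modelled.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Preconf:
    """Hypothetical preconfirmation: 'my n-th transaction in this slot is X'.
    A real message would carry the proposer's signature; omitted here."""
    proposer: str
    slot: int
    position: int
    tx_hash: str


class PreconfLog:
    """Records preconfirmations and keeps evidence whenever a proposer
    promises two different transactions for the same slot and position."""

    def __init__(self) -> None:
        self.seen: dict[tuple[str, int, int], Preconf] = {}
        self.evidence: list[tuple[Preconf, Preconf]] = []

    def observe(self, msg: Preconf) -> bool:
        """Return False if `msg` conflicts with an earlier preconfirmation."""
        key = (msg.proposer, msg.slot, msg.position)
        earlier = self.seen.get(key)
        if earlier is None:
            self.seen[key] = msg
            return True
        if earlier.tx_hash != msg.tx_hash:
            self.evidence.append((earlier, msg))  # input for a penalty, option (i)
            return False
        return True  # identical duplicate, nothing wrong


log = PreconfLog()
log.observe(Preconf("proposer-a", 100, 0, "0x1234"))
log.observe(Preconf("proposer-a", 100, 1, "0x5678"))
ok = log.observe(Preconf("proposer-a", 100, 1, "0x9abc"))  # equivocation
print(ok, len(log.evidence))
```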

What are the connections with existing research?

Based preconfirmations: https://ethresear.ch/t/based-preconfirmations/17353

Protocol Enforced Proposer Commitments (PEPC): https://ethresear.ch/t/unbundling-pbs-towards-protocol-enforced-proposer-commitments-pepc/13879

Staggered periods on parachains (2018 idea for low latency): https://ethresear.ch/t/staggered-periods/1793

What is left to do? What are the trade-offs?

It is unclear how feasible it is to reduce slot times. Even today, stakers in many parts of the world have a hard time getting their attestations included fast enough. Because of latency, 4-second slot times risk centralizing the validator set and making it impractical to run a validator outside a few privileged regions.

The weakness of the proposer preconfirmation approach is that it greatly improves the average-case inclusion time, but not the worst case. If the current proposer is performing well, your transaction will be preconfirmed in 0.5 seconds instead of being included in (on average) 6 seconds. But if the current proposer is offline or performing poorly, you will still have to wait up to a full 12 seconds for the next slot to start and a new proposer to take over.
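The average-versus-worst-case gap can be made concrete with a toy latency model. The 0.5-second preconfirmation delay and the 12-second slot come from the text. The proposer reliability values are hypothetical. The model assumes that transactions arrive uniformly within a slot and that the next proposer is always online.

```python
def expected_latency(p_online: float, slot_s: float = 12.0,
                     preconf_s: float = 0.5) -> float:
    """Expected time to a first (pre)confirmation in a toy model.

    Assumptions: the transaction arrives at a uniformly random time in the
    slot; an online proposer preconfirms it after `preconf_s`; an offline
    proposer makes it wait out the rest of the slot (slot_s / 2 on average),
    after which the next proposer, assumed online, preconfirms it.
    """
    return p_online * preconf_s + (1 - p_online) * (slot_s / 2 + preconf_s)


def worst_case_latency(slot_s: float = 12.0, preconf_s: float = 0.5) -> float:
    """Offline proposer and a transaction arriving right as the slot begins."""
    return slot_s + preconf_s


for p in (1.0, 0.99, 0.9):  # hypothetical proposer reliability
    print(f"p_online={p}: average {expected_latency(p):.2f} s, "
          f"worst case {worst_case_latency():.1f} s")
```

The average improves sharply as proposers become more reliable, while the worst case stays pinned to the slot length.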

There is also an open question of how to incentivize preconfirmations. Proposers have an incentive to keep their options open for as long as possible. If attesters sign off on the timeliness of preconfirmations, transaction senders could make part of their fees conditional on an immediate preconfirmation. However, this would place an additional burden on attesters and might make it harder for them to continue acting as neutral "dumb pipes."

On the other hand, if we don’t try to do this, and keep finality times at 12 seconds (or longer), the ecosystem will place more emphasis on pre-confirmation mechanisms on layer 2, and interactions across layer 2 will take longer.

How does it interact with the rest of the roadmap?

Proposer-based preconfirmation effectively relies on an attester-proposer separation (APS) mechanism such as execution tickets. Otherwise, the pressure to provide real-time preconfirmations may be too centralizing for regular validators.

Other research areas

51% attack recovery

It is often assumed that if a 51% attack occurs (including attacks that cannot be cryptographically proven, such as censorship), the community will come together to implement a minority soft fork, ensuring that the good guys win and the bad guys are inactivity-leaked or slashed. However, this level of over-reliance on the social layer is arguably unhealthy. We can try to reduce reliance on the social layer by making the recovery process as automated as possible.

Full automation is impossible, since that would be equivalent to a consensus algorithm with >50% fault tolerance, and we already know the (very strict) mathematically provable limits of such algorithms. But we can achieve partial automation. For example, a client could automatically refuse to accept a chain as finalized, or even as the head of the fork choice, if it censors transactions the client has seen for a long time. A key goal is to ensure that an attacker at least cannot achieve a quick, clean victory.
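The partial-automation idea can be sketched as a hypothetical client-side heuristic. The client remembers when it first saw each transaction and rejects a chain that still leaves one of them out after a deadline. The class, its method names and the 60-second threshold are assumptions for illustration. A real rule would also need to check that each transaction is valid, pays enough, and that blocks had room for it.

```python
from __future__ import annotations


class CensorshipMonitor:
    """Hypothetical heuristic: a chain is unacceptable if a transaction this
    node has seen stays unincluded for longer than `max_delay_s`."""

    def __init__(self, max_delay_s: float) -> None:
        self.max_delay_s = max_delay_s
        self.first_seen: dict[str, float] = {}

    def saw_transaction(self, tx_hash: str, now: float) -> None:
        # Keep the earliest sighting; later re-broadcasts do not reset the clock.
        self.first_seen.setdefault(tx_hash, now)

    def chain_is_acceptable(self, included: set[str], now: float) -> bool:
        for tx_hash, t0 in self.first_seen.items():
            if tx_hash not in included and now - t0 > self.max_delay_s:
                return False
        return True


monitor = CensorshipMonitor(max_delay_s=60)  # hypothetical threshold
monitor.saw_transaction("0xaa", now=0)
monitor.saw_transaction("0xbb", now=0)

print(monitor.chain_is_acceptable({"0xaa"}, now=30))   # 0xbb late, but within the limit
print(monitor.chain_is_acceptable({"0xaa"}, now=120))  # 0xbb excluded for too long
print(monitor.chain_is_acceptable({"0xaa", "0xbb"}, now=120))
```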

Raising the quorum threshold

Today, a block is finalized as long as 67% of stakers support it. Some people think this is too aggressive. In the entire history of Ethereum, there has been only one (very brief) failure of finality. If this threshold were raised to 80%, the number of additional non-finality periods would be relatively low, but Ethereum would gain security. In particular, many more contentious situations would lead to a temporary halt in finality. This seems healthier than the "wrong side" winning immediately, whether the wrong side is an attacker or a buggy client.

This also answers the question of “what’s the point of having solo stakers?”. Today, most stakers already stake through pools, so it seems unlikely that solo stakers could ever control 51% of staked ETH. However, solo stakers could plausibly reach a quorum-blocking minority if we try, especially if the quorum is 80% (a quorum-blocking minority then needs only 21%). As long as solo stakers do not participate in a 51% attack (whether a finality reversal or censorship), such an attack will not achieve a “clean win”, and solo stakers will have an incentive to help organize a minority soft fork.
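The 21% figure follows from a short calculation. Finality requires at least the quorum, so a blocking group needs strictly more than `1 - quorum` of the stake. The helper below rounds that up to a whole percentage and uses exact fractions to avoid floating-point edge cases. Its name is illustrative.

```python
import math
from fractions import Fraction


def min_blocking_percent(quorum: Fraction) -> int:
    """Smallest whole-percent stake share that can block finality: the
    remaining stake must fall strictly below the quorum, so the blocking
    group needs strictly more than (1 - quorum)."""
    return math.floor(100 * (1 - quorum)) + 1


for q in (Fraction(2, 3), Fraction(4, 5)):
    print(f"quorum {float(q):.0%}: blocking minority needs {min_blocking_percent(q)}%")
```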

Quantum resistance

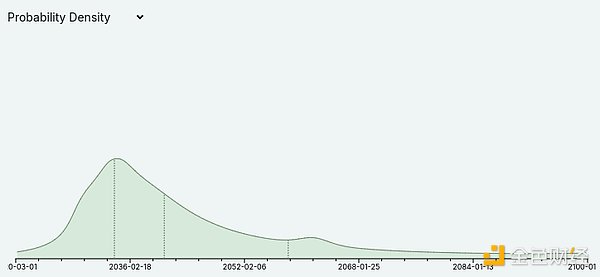

Metaculus currently believes that quantum computers could begin to break cryptography sometime in the 2030s, albeit with large margins of error.

Quantum computing experts, such as Scott Aaronson, have also recently started to take the possibility of quantum computers actually working in the medium term more seriously. This will have implications for the entire Ethereum roadmap: it means that every part of the Ethereum protocol that currently relies on elliptic curves will need some kind of hash-based or other quantum-resistant alternative. This specifically means that we cannot assume that we will be able to forever rely on the superior performance of BLS aggregation to process signatures from large validator sets. This justifies conservatism in performance assumptions of proof-of-stake designs, and is a reason to more aggressively develop quantum-resistant alternatives.
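To see what "hash-based" costs compared with BLS, consider the simplest hash-based scheme, Lamport one-time signatures. The sketch below is only an illustration of the general technique, not a proposal for what Ethereum would adopt. It relies on SHA-256 alone and should not be reused across messages, since each key is one-time. Each signature is 8,192 bytes, compared with 96 bytes for an Ethereum BLS signature. It also has no native aggregation, which is why heavier machinery such as the STARK-based aggregation referenced earlier comes into play.

```python
from __future__ import annotations

import hashlib
import secrets


def H(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def keygen() -> tuple[list[tuple[bytes, bytes]], list[tuple[bytes, bytes]]]:
    # 256 pairs of random secrets; the public key is their hashes.
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(H(a), H(b)) for a, b in sk]
    return sk, pk


def bits(msg: bytes) -> list[int]:
    digest = H(msg)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(sk: list[tuple[bytes, bytes]], msg: bytes) -> list[bytes]:
    # Reveal one secret from each pair, selected by the message-digest bit.
    return [sk[i][b] for i, b in enumerate(bits(msg))]


def verify(pk: list[tuple[bytes, bytes]], msg: bytes, sig: list[bytes]) -> bool:
    return all(H(s) == pk[i][b] for i, (s, b) in enumerate(zip(sig, bits(msg))))


sk, pk = keygen()
sig = sign(sk, b"attestation")
print(verify(pk, b"attestation", sig))
print(verify(pk, b"other message", sig))
print("signature size:", sum(len(s) for s in sig), "bytes")
```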

Special thanks to Justin Drake, Hsiao-wei Wang, @antonttc, and Francesco for their feedback and reviews.