The Selective Disclosure/Compliance Challenge in Web3

*By Peyman Momeni, Fairblock; Amit Chaudhary, Labyrinth; Muhammad Yusuf, Delphi Digital*

Why?

Today, after 16 years, blockchains’ utility is mostly limited to meme coins and circular infrastructure projects. Yes, it’s fun, and yes, a lot of people made millions, but are we still building the new internet? What happened to the idea of a decentralized, private new internet?

The most obvious application, transfers, hasn’t been fully realized even for basic scenarios. Most businesses can’t use blockchains for payments because they can’t expose their payroll to everyone. Vitalik is getting bullied for his onchain donations to science. Businesses don’t want to share their confidential strategies and data publicly, and users don’t like having to choose between a centralized service and getting maximally sandwiched and begging middlemen mercenaries for a change.

While the machine is running, we, as a collective, cannot continue this path for another 16 years.

Privacy should bring confidentiality that secures your onchain actions, allowing more useful and impactful applications to be built and letting users reap the benefits of a more expressive blockchain experience. Confidentiality is a standard across Web2; it’s imperative that it become a standard across Web3.

The lack of onchain confidentiality has hindered the growth and adoption of even the most obvious applications. Confidentiality is one of the most misunderstood terms in crypto. On one end of the spectrum it is associated with money laundering and illegal financial activities and on the other, it unlocks high utility use cases such as normal private transfers, dark pools, frontrunning protection, confidential AI, zkTLS, gaming, healthcare and private governance.

Economies function efficiently when there is a balance between confidentiality and transparency. Take financial markets as an example: confidentiality makes information valuable and tradable, but selective disclosure of that information helps prevent market abuse. Many financial activities such as portfolio management, asset trading, payments, and banking require confidentiality, balanced against data disclosure for compliance and regulation purposes. However, selective disclosure is not limited to finance and compliance. A recent example is social media, where platforms battling misinformation and hate content are pushed toward self-regulation through disclosures.

Recent challenges in fake content generation through AI models have raised questions about the value of sharing secrets and the on-demand disclosure of secrets. During the COVID pandemic, vaccine research raised controversy because important stakeholders were kept in the dark about the detailed results. Balancing confidentiality and transparency takes on many shapes, and sometimes regulation and compliance make this the most important problem to tackle. Technology comes to the rescue in finding the balance - the key question being asked is whether we can remove the centralized party in selective disclosure or compliance use cases.

We have confidentiality inside our walls, across 95% of the internet, on our iPhones, in our bank accounts, in elections and even in a friendly poker game. Just the other day, the US slapped TD, one of the largest banks in Canada, with a $3B fine over cartel money laundering. But no one is going to avoid banks, no one is scared of privacy in other banks or industries, and even TD itself is not going down. An impactful system shouldn’t be vaporized and banned because of a few bad actors. In web3, we shouldn’t overreact to a few bad examples and myths that we’ve seen. We shouldn’t shy away from building the new internet and turn it into short-term distractions. In most cases, we don’t even have a compliance problem. For private transfers, we can build systems that are as compliant as real-world banks, but still more transparent, private and decentralized. More impactful problems are harder to solve; that’s the way it is.

Even if we only care about money, we can’t extract value from memecoins for another 16 years. Now that we have the scalable infrastructure, opportunities are going to be orders of magnitude greater if we have onchain confidentiality and real applications.

The Elephant in the room

One of the most pressing challenges of DeFi is the balance between confidentiality and compliance. Maintaining user privacy while ensuring regulatory oversight without centralization requires a delicate approach. This article explores the solution through selective disclosure, enabling privacy and accountability without compromising security or compliance.

So far in web3, we’ve figured out private transfers: we know how to transfer and trade assets privately by proving the validity of transactions without leaking our identities. The openly debated technical and philosophical challenge is twofold: how can we make sure the technology is not abused by a minority of bad actors at the expense of the majority of users, and how can we have at least the same level of privacy as our current banks?

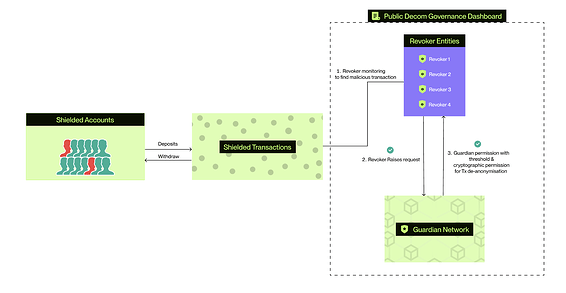

While the private transfers themselves are enabled by ZKPs, different centralized or decentralized techniques and cryptographic schemes such as MPC can be used for compliance. Some of the current efforts for making compliant private transfers are:

Pre-transfer proof of legitimate funds (0xbow/Privacy Pools/Railgun): Users can prove non-association with lists of illicit activities or sanctioned addresses before execution of their transfers.

Post-transfer selective de-anonymization: Balancing blockchain privacy and regulatory compliance by providing accountability using ZK and threshold cryptography.

DID and regulatory smart contracts: Programming real-world rules such as the 10K limit, and other conditions by privately sharing information using decentralized identifiers and MPC/FHE.

ID verification: Users must go through long, haphazard, non-private and centralized KYC processes for each service they use.

However, none of these approaches is complete by itself, as each fails to balance privacy and regulation. Deposit limits aim to block illicit funds but often inconvenience legitimate users. Sanction lists are slow to update, allowing bad actors to operate before detection, and there’s no recovery for wrongly flagged addresses. Blockchain analysis tools such as Chainalysis miss some illicit activity due to false negatives. “View-only” access relies on user cooperation, failing against malicious actors. The association sets in privacy pools delay the detection of illicit transactions and rely on untrusted set providers. KYC compromises privacy by forcing users to disclose sensitive information at the first step of using privacy applications, without solving the problem of users turning malicious later. Ultimately, these approaches rely on centralized controls, undermining the decentralized nature of Web3.
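To make the pre-transfer idea concrete, here is a minimal sketch of the membership check that sits underneath an association set. It assumes the set of approved deposits is represented as a Merkle tree, which is one common choice; the function names and the leaf encoding are hypothetical. In a real system the check would run inside a zero-knowledge proof so that the deposit itself is never revealed. This sketch omits the ZK layer and only shows what is being proven.

```python
import hashlib

def h(data: bytes) -> bytes:
    """SHA-256 is used here for illustration; ZK systems typically use a circuit-friendly hash."""
    return hashlib.sha256(data).digest()

def build_tree(leaves: list[bytes]) -> list[list[bytes]]:
    """Return all levels of the tree, from hashed leaves (index 0) up to the root."""
    level = [h(leaf) for leaf in leaves]
    tree = [level]
    while len(level) > 1:
        if len(level) % 2:  # odd level: duplicate the last node
            level = level + [level[-1]]
            tree[-1] = level
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        tree.append(level)
    return tree

def prove(tree: list[list[bytes]], index: int) -> list[tuple[bytes, bool]]:
    """Collect (sibling_hash, sibling_is_left) pairs on the path from leaf to root."""
    proof = []
    for level in tree[:-1]:
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        index //= 2
    return proof

def verify(leaf: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the root from the leaf and its proof, then compare."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root

# Hypothetical association set of three "clean" deposits.
deposits = [b"deposit-a", b"deposit-b", b"deposit-c"]
tree = build_tree(deposits)
root = tree[-1][0]
print(verify(b"deposit-c", prove(tree, 2), root))
```

The set provider only publishes `root`; who curates the set, and how quickly it is updated, are exactly the trust and latency issues raised above.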

Co-existence of Privacy and Compliance through Decentralized Approaches

The answer to balancing privacy and compliance lies in a decentralized compliance framework. This approach allows compliance to coexist with privacy by creating systems where compliance measures can be enforced without compromising user anonymity.

Different levels of decentralized pre-transfer compliance are needed, along with case-by-case post-execution auditability through selective disclosure. This way, we still achieve common-sense privacy for DeFi while allowing authorities to request more information on a rare case-by-case basis. At the very least, this offers a level of privacy equivalent to web2 and tradfi, with more decentralization and transparency.

Dark pools in traditional finance enable trader anonymity while ensuring regulatory post-trade transparency. Recently, dark pools and privacy-focused blockchain protocols such as Railgun, Penumbra, and Renegade have been gaining attention. However, they’re either non-compliant or only partially compliant. Selective disclosures can address these issues by ensuring that users’ actions are legitimate while preserving anonymity where appropriate. While users can use a mix of methods to prove their legitimate source of funds and identities, threshold networks can ensure post-transaction accountability.

Post-transaction accountability through MPC/Threshold decryption

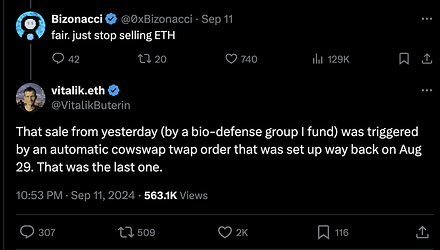

In a threshold network, compliance and accountability are enforced without relying on central authorities. The system relies on independent entities, Revokers and Guardians, and provides the following properties:

Accountable Privacy means that users must engage in legitimate activities. Malicious behavior can lead to selective de-anonymization, but only under lawful conditions, ensuring integrity without compromising user privacy unjustly.

Accountable De-Anonymization ensures that de-anonymization requests are public and traceable, requiring cooperation between Revokers and Guardians, thus preventing unauthorized disclosure.

Non-fabrication guarantees that honest users cannot be falsely accused, even if there is collusion. The cryptographic commitments ensure all participants are bound to act transparently, safeguarding user rights.

Here’s a detailed end-to-end flow explaining how transactions are managed, de-anonymization is requested, and the process is carried out in an accountable and publicly verifiable way by users, revokers, and guardians:

User Transaction (Onchain)

Users are accountable for making compliant transactions. Users face de-anonymization if they act maliciously. Misbehavior leads to loss of privacy, but only under lawful conditions. A user initiates an onchain private transaction on the protocol, and encrypted data is included in the transaction payload. This ensures that all transaction details remain private and secure onchain, preventing unauthorized access to the user’s data.

Suspicious Activity Detection (Off-chain)

A Revoker, such as a DAO, trusted entity, or neutral gatekeeper, monitors transactions off-chain, using monitoring tools to detect potential illicit activity or suspicious behavior. The Revoker flags the user’s transaction if it appears to violate compliance rules or triggers suspicious activity alerts.

De-Anonymization Request Submission (Onchain)

Once the Revoker identifies a suspicious activity, they submit an onchain de-anonymization request on the governance dashboard. This request initiates the de-anonymization process and makes the request publicly verifiable and transparent to all the network participants. The Revoker does not have de-anonymization rights at this stage but is merely flagging the transaction for further review.

Guardian Review and Voting (Off-chain)

The request is picked up by a decentralized network of Guardians (trusted entities selected through the governance process). These Guardians act as decision-makers and are responsible for validating the Revoker’s de-anonymization request. They assess the flagged transaction according to governance policies and determine whether de-anonymization should be allowed. This review process occurs off-chain to ensure the privacy of decision-making and governance.

Threshold Mechanism (Onchain)

For the de-anonymization request to proceed, a certain threshold of Guardian approvals must be met (e.g., 6 out of 10 Guardians need to approve). Each Guardian that votes in favor of de-anonymization submits their cryptographic permission onchain, which is aggregated to reach the required threshold. This on-chain submission guarantees transparency and prevents any foul play or unauthorized actions.
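As a rough illustration of the "6 out of 10" idea, the sketch below uses Shamir secret sharing over a prime field: a per-transaction key is split into 10 shares, and any 6 of them recover it, while fewer do not. This is a simplified stand-in, not the protocol described here. A production threshold-decryption scheme would typically have Guardians publish decryption shares without ever reconstructing the key in one place. The field modulus and function names are assumptions made for the example.

```python
import secrets

# Illustrative field modulus: 2**127 - 1 is a Mersenne prime.
PRIME = 2**127 - 1

def split_secret(secret: int, threshold: int, num_shares: int) -> list[tuple[int, int]]:
    """Evaluate a random degree-(threshold-1) polynomial with f(0) = secret at x = 1..n."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares

def reconstruct(shares: list[tuple[int, int]]) -> int:
    """Lagrange-interpolate at x = 0 to recover f(0) from the given shares."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret

# Hypothetical per-transaction key shared among 10 Guardians, threshold 6.
tx_key = secrets.randbelow(PRIME)
guardian_shares = split_secret(tx_key, threshold=6, num_shares=10)
print(reconstruct(guardian_shares[:6]) == tx_key)
```

With only five shares, interpolation yields an unrelated field element, so a minority of Guardians learns nothing about the key.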

De-Anonymization Execution (Off-chain)

Once the necessary cryptographic permissions have been granted, the Revoker can decrypt the flagged transaction. This process happens off-chain, and only the specific transaction under investigation is revealed to the Revoker—no other data or transactions are affected or exposed. Importantly, even the Guardians who approved the request do not gain access to the transaction details; only the Revoker can view the decrypted transaction information.

Post-De-Anonymization (Onchain)

If further suspicious activity is linked to the decrypted transaction, it will be flagged separately, requiring a new de-anonymization request to be submitted by the revoker and approved by the Guardians. The rest of the user’s transaction history and data remain encrypted and private. This ensures that privacy is maintained for non-flagged transactions while enabling compliant de-anonymization for suspicious activities.
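The flow above can be sketched as a small state object. This is a toy model with hypothetical names (`DeanonRequest`, `approve`, `can_decrypt`) and no real cryptography. It only captures the access rules described in the steps: approvals come from registered Guardians, a threshold must be reached, and the resulting permission covers exactly one transaction and only the Revoker who filed the request.

```python
from dataclasses import dataclass, field

@dataclass
class DeanonRequest:
    """A publicly visible de-anonymization request for a single transaction."""
    tx_id: str
    revoker: str
    threshold: int
    guardians: frozenset[str]
    approvals: set[str] = field(default_factory=set)

    def approve(self, guardian: str) -> None:
        """Record one Guardian's approval; duplicate votes count once."""
        if guardian not in self.guardians:
            raise PermissionError(f"{guardian} is not a registered Guardian")
        self.approvals.add(guardian)

    @property
    def approved(self) -> bool:
        return len(self.approvals) >= self.threshold

    def can_decrypt(self, caller: str, tx_id: str) -> bool:
        """Only the filing Revoker may decrypt, and only the flagged transaction."""
        return self.approved and caller == self.revoker and tx_id == self.tx_id

# Hypothetical network: 10 Guardians, 6 approvals required.
guardians = frozenset(f"guardian-{i}" for i in range(10))
request = DeanonRequest("tx-42", "revoker-dao", threshold=6, guardians=guardians)
for name in sorted(guardians)[:6]:
    request.approve(name)

print(request.can_decrypt("revoker-dao", "tx-42"))  # flagged transaction
print(request.can_decrypt("revoker-dao", "tx-43"))  # needs its own request
```

Any linked transaction, such as `tx-43` here, would need a fresh `DeanonRequest` and a fresh round of Guardian approvals.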

Security

There’s a trust assumption in the threshold network. A dishonest majority of malicious validators can work together to decrypt transactions - with or without detection depending on the scheme.

It is worth mentioning that the consequence of such an attack is a loss of confidentiality; it does not affect the safety of the network, cause loss of funds, or expose private information about users’ identities. In this case, the system’s confidentiality downgrades to the current state of public blockchains. The consequence is more limited in cases where only ephemeral confidentiality is required, or where confidentiality is leveraged for better execution quality rather than user privacy, e.g. frontrunning protection and sealed-bid auctions.

However, validators and operators should be incentivized to protect user privacy in proportion to the value at stake. The solution lies in building robust networks where compliance can be enforced without compromising decentralization. The transition will involve integrating permissionless compliance mechanisms, where incentives are aligned to encourage honest validator behavior. Approaches like Proof of Stake (PoS) and AVS help secure the network, while cryptographic traitor tracing and slashing mechanisms deter malicious actors. There are promising recent works in this area, such as “Multimodal Cryptography - Accountable MPC + TEE” (HackMD).

Threshold MPC and confidentiality beyond compliant transfers

The use of Threshold MPC extends beyond compliance, finding applications across multiple confidentiality sectors:

- Frontrunning protection and MEV Protection: Preventing manipulative trading practices by hiding transaction data until completion. Replacing centralized relayers in Ethereum’s MEV supply chain. Leaderless and incentive-aligned MEV or preconfirmation auctions.

- PvP GameFi or prediction markets: Ensuring fairness and excitement by concealing actions until necessary. Adding the element of onchain surprise by decrypting values. Decentralized decryption of oracle updates in prediction markets (instead of naive hashes as in UMA).

- Private Governance/Voting: Prevention of manipulation during decision-making processes, coercion resistance, and privacy of voters.

- Access Control and SocialFi: Enhancing privacy in decentralized applications while retaining usability and accountability, as well as monetization of content in creator economies.

- Confidential AI: Decentralized and privacy-preserving systems for training, inference and data sharing that unlock access to more players and data instead of being limited by privacy concerns.

- Healthcare: Access to more and better personalized healthcare services by analysis of biological or health data without loss of privacy or trusting centralized parties.
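Several of the use cases above share one pattern: fix the inputs first, reveal them later. The sketch below shows that pattern with a plain hash commit-reveal sealed-bid auction. This is deliberately the simpler, "naive hash" variant rather than threshold decryption, and all names are hypothetical. Its weakness is visible in the code: a bidder can simply refuse to call `reveal`. Decryption by a threshold network removes that dependence on the bidder.

```python
import hashlib
import secrets

def commit(bid: int, nonce: bytes) -> str:
    """Hash commitment to a bid; the random nonce prevents brute-forcing small bids."""
    return hashlib.sha256(nonce + bid.to_bytes(16, "big")).hexdigest()

class SealedBidAuction:
    def __init__(self) -> None:
        self.commitments: dict[str, str] = {}
        self.revealed: dict[str, int] = {}
        self.bidding_open = True

    def submit(self, bidder: str, commitment: str) -> None:
        """Phase 1: bidders post commitments; nobody can see or react to the amounts."""
        if not self.bidding_open:
            raise RuntimeError("bidding phase is closed")
        if bidder in self.commitments:
            raise ValueError("bidder has already committed")
        self.commitments[bidder] = commitment

    def close_bidding(self) -> None:
        self.bidding_open = False

    def reveal(self, bidder: str, bid: int, nonce: bytes) -> None:
        """Phase 2: a bid counts only if it matches the earlier commitment."""
        if self.bidding_open:
            raise RuntimeError("cannot reveal before bidding closes")
        if commit(bid, nonce) != self.commitments.get(bidder):
            raise ValueError("reveal does not match commitment")
        self.revealed[bidder] = bid

    def winner(self) -> str:
        """Highest revealed bid wins (raises ValueError if nothing was revealed)."""
        return max(self.revealed, key=self.revealed.get)

auction = SealedBidAuction()
alice_nonce, bob_nonce = secrets.token_bytes(16), secrets.token_bytes(16)
auction.submit("alice", commit(120, alice_nonce))
auction.submit("bob", commit(95, bob_nonce))
auction.close_bidding()
auction.reveal("alice", 120, alice_nonce)
auction.reveal("bob", 95, bob_nonce)
print(auction.winner())
```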

Path Forward: Seamless Web3 Confidentiality

The ultimate goal for privacy in Web3 is to make it as seamless as it is in Web2. Encryption in traditional internet applications (e.g., HTTP transitioning to HTTPS) has become so common that users hardly notice it. A similar evolution is required for Web3: confidentiality should be invisible to the user, seamlessly integrated into their experience. While most confidentiality schemes don’t have compliance challenges in the first place, private transfers can be made compliant through multimodal cryptography techniques such as MPC and ZKPs.