💡 Editor's Recommendation:

In the world of blockchain, decentralized computing is a promised land that is hard to reach. Traditional smart contract platforms like Ethereum are constrained by high computational costs and poor scalability, while new-generation computing architectures are trying to break through these limits. AO and ICP are currently the two most representative of these paradigms: AO focuses on modular decoupling and unlimited scalability, while ICP emphasizes structured management and high security.

The author of this article, Blockpunk, is a researcher at Trustless Labs and an OG of the ICP ecosystem. He founded the ICP League incubator and has long been active in its technical and developer community, while also following AO closely and understanding it deeply. If you are curious about the future of blockchain and want to know what a truly verifiable, decentralized computing platform for the AI era will look like, or if you are looking for new public chain narratives and investment opportunities, this article is well worth reading. It analyzes the core mechanisms, consensus models, and scalability of AO and ICP in detail, and also compares them in terms of security, decentralization, and future potential.

In this ever-changing crypto industry, who will be the true "world computer"? The outcome of this competition may determine the future of Web3. Read this article to get ahead and understand the latest landscape of decentralized computing!

Integration with AI has become a hot trend in crypto, with countless AI Agents now issuing, holding, and trading cryptocurrencies. This explosion of new applications brings demand for new infrastructure, and verifiable, decentralized AI computing infrastructure is especially important. However, neither smart contract platforms such as Ethereum nor decentralized computing platforms such as Akash and IO can satisfy both requirements, verifiability and decentralization, at the same time.

In 2024, the team behind the well-known decentralized storage protocol Arweave announced the AO architecture, a decentralized general-purpose computing network that scales quickly and cheaply and can therefore run many computationally intensive tasks, such as AI Agent inference. Computing resources on AO are tied together by AO's message-passing rules, and the order and content of requests are recorded immutably through Arweave's "holographic" consensus. Anyone can therefore recover the correct state by recomputing, which makes computation verifiable under optimistic security guarantees.

The AO network does not reach consensus on the computation itself, which keeps it flexible and highly efficient. Processes (roughly equivalent to "smart contracts") follow the Actor model and interact by passing messages, with no need to maintain shared state.

This sounds similar to the design of DFINITY's Internet Computer (ICP), which pursues a comparable goal through structured subnets of computing resources, and developers often compare the two. This article focuses on comparing these two protocols.

Consensus Computing and General Computing

Both ICP and AO decouple consensus from computation so that computing can scale flexibly, which makes computation cheaper and lets them handle more complex problems. In contrast, in traditional smart contract networks such as Ethereum, all nodes share a common state memory, and any state-changing computation must be repeated by every node in the network to reach consensus. This fully redundant design guarantees a unique consensus, but it makes computation very expensive and the network's computing power hard to scale, so it is only suitable for high-value business. Even high-performance public chains like Solana struggle to bear the intensive computing demands of AI.

As general-purpose computing networks, AO and ICP have no globally shared state memory, so they do not need to reach consensus on the state-changing computation itself, only on the execution order of transactions/requests, after which the results are verified. Under the optimistic assumption that node virtual machines execute correctly and deterministically, as long as the content and order of input requests are consistent, the final state will also be consistent. State changes of smart contracts (called "containers", officially "canisters", in ICP and "processes" in AO) can be computed in parallel on different nodes, without requiring every node to compute the same tasks at the same time. This greatly reduces computing costs and improves scalability, so these networks can support more complex businesses, even the decentralized operation of AI models. Both AO and ICP claim "infinite scalability"; their differences are compared later.
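
To make the ordering argument concrete, here is a minimal Python sketch. It assumes a toy process whose state transition is a pure, deterministic function; the `Node` class, `apply_message`, and the message format are hypothetical, not AO's or ICP's actual API. Two nodes that replay the same ordered log independently end up in the same state, while the same messages in a different order can produce a different state.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical message: (sender, action, amount). Not a real AO/ICP format.
Message = Tuple[str, str, int]


@dataclass
class Node:
    """A compute node holding its own copy of one process's state."""
    state: Dict[str, int] = field(default_factory=dict)

    def apply_message(self, msg: Message) -> None:
        # Deterministic transition: the result depends only on state + input.
        sender, action, amount = msg
        balance = self.state.get(sender, 0)
        if action == "deposit":
            self.state[sender] = balance + amount
        elif action == "withdraw" and balance >= amount:
            self.state[sender] = balance - amount
        # Anything else (e.g. insufficient balance) is a deterministic no-op.

    def replay(self, log: List[Message]) -> Dict[str, int]:
        for msg in log:
            self.apply_message(msg)
        return self.state


ordered_log: List[Message] = [
    ("alice", "deposit", 10),
    ("alice", "withdraw", 7),
    ("alice", "withdraw", 5),  # rejected: balance is only 3 at this point
]

# Two nodes compute independently; consensus only fixed the order of the log.
state_a = Node().replay(ordered_log)
state_b = Node().replay(ordered_log)
print(state_a == state_b, state_a)  # True {'alice': 3}

# Same messages, different order: the final state differs.
reordered = [ordered_log[0], ordered_log[2], ordered_log[1]]
print(Node().replay(reordered))  # {'alice': 5}
```

This is why agreeing on order is enough: once the sequence is fixed, any honest node can derive the state on its own.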

Because the network no longer jointly maintains one large shared state, each smart contract handles transactions independently, and contracts interact with each other through messages, which is an asynchronous process. Decentralized general-purpose computing networks therefore usually adopt the Actor programming model, which makes contracts less composable than on platforms like Ethereum and creates certain difficulties for DeFi. This can still be addressed with dedicated business programming standards, such as the Fusion Fi Protocol on the AO network, which standardizes DeFi business logic through a unified "ticket-settlement" model to achieve interoperability. Given how early the AO ecosystem is, protocols like this are quite forward-looking.
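
The composability issue can be illustrated with a minimal Python sketch of the Actor style, assuming a toy single-threaded message loop. The `Network`, `Actor`, `Token`, and `Dex` classes are hypothetical and not part of AO or ICP. Each actor keeps its state private, and a caller never receives a return value, only a reply message delivered later, so a multi-step DeFi action cannot be composed into one atomic call the way it can on Ethereum.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple

# Hypothetical envelope: (target, sender, payload). Not AO's or ICP's real format.
Envelope = Tuple[str, str, dict]


class Network:
    """Delivers queued messages one at a time, in FIFO order."""

    def __init__(self) -> None:
        self.queue: Deque[Envelope] = deque()
        self.actors: Dict[str, "Actor"] = {}

    def send(self, target: str, sender: str, payload: dict) -> None:
        # Sending only enqueues; the caller gets no return value.
        self.queue.append((target, sender, payload))

    def run(self) -> None:
        while self.queue:
            target, sender, payload = self.queue.popleft()
            self.actors[target].receive(sender, payload)


class Actor:
    """Base actor: private state, reachable only through messages."""

    def __init__(self, name: str, network: Network) -> None:
        self.name = name
        self.network = network
        self.log: List[str] = []
        network.actors[name] = self

    def receive(self, sender: str, payload: dict) -> None:
        raise NotImplementedError


class Token(Actor):
    """Holds balances that no other actor can read or write directly."""

    def __init__(self, name: str, network: Network, balances: Dict[str, int]) -> None:
        super().__init__(name, network)
        self.balances = dict(balances)

    def receive(self, sender: str, payload: dict) -> None:
        if payload["action"] != "transfer":
            return
        amount, to = payload["amount"], payload["to"]
        if self.balances.get(sender, 0) >= amount:
            self.balances[sender] -= amount
            self.balances[to] = self.balances.get(to, 0) + amount
            reply = "transfer-ok"
        else:
            reply = "transfer-failed"
        # The outcome travels back as a new message, delivered later.
        self.network.send(sender, self.name, {"action": reply})


class Dex(Actor):
    """Only learns transfer outcomes when reply messages arrive."""

    def receive(self, sender: str, payload: dict) -> None:
        self.log.append(f"{sender}: {payload['action']}")


net = Network()
token = Token("token", net, {"dex": 5})
dex = Dex("dex", net)

net.send("token", "dex", {"action": "transfer", "amount": 3, "to": "alice"})
net.send("token", "dex", {"action": "transfer", "amount": 3, "to": "bob"})
print(dex.log)         # [] (nothing has been delivered yet)
net.run()
print(dex.log)         # ['token: transfer-ok', 'token: transfer-failed']
print(token.balances)  # {'dex': 2, 'alice': 3}
```

Note that `Dex` fired off both transfers before knowing whether the first one succeeded; a shared convention such as a ticket-settlement model is one way to make such flows predictable across processes.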

AO's Implementation

AO is built on top of the Arweave permanent storage network and runs on a new network of nodes, divided into three groups: Message Units (MU), Compute Units (CU), and Scheduler Units (SU).

In the AO network, smart contracts are called "processes": executable code permanently stored on Arweave.

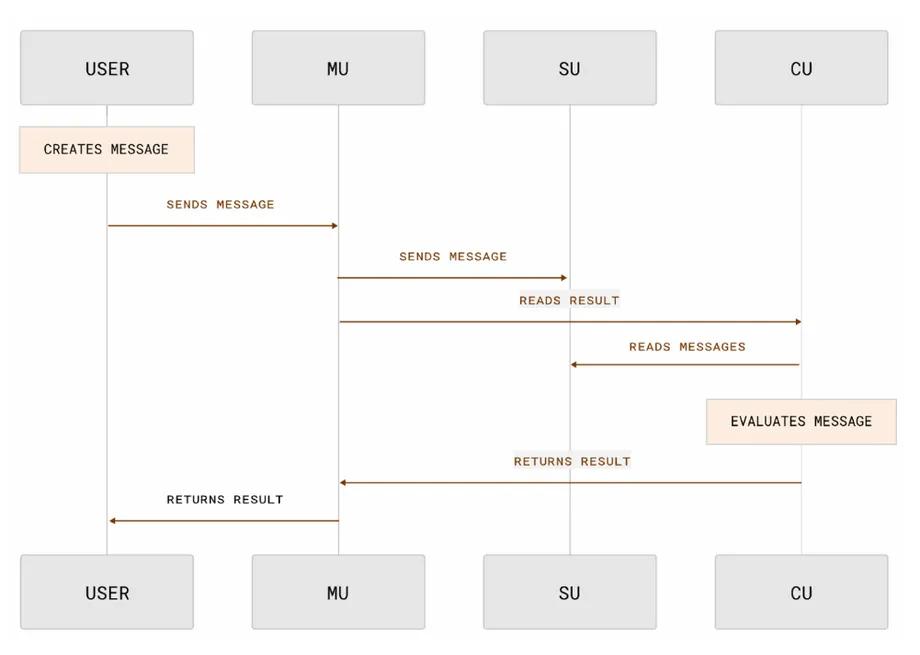

When users want to interact with a process, they sign and send a request. AO standardizes the message format: the MU receives each message, verifies its signature, and forwards it to the SU. The SU continuously receives requests, assigns each message a unique sequence number, and uploads the numbered message to the Arweave network, where Arweave's consensus finalizes the transaction order. Once the order is fixed, the task is assigned to a CU, which performs the computation, updates the state, and returns the result to the MU. The MU then either forwards the result to the user or, if it is addressed to another process, passes it to the SU as that process's next request.
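
The MU → SU → Arweave → CU flow above can be sketched in a few lines of Python. This is a toy model under loud assumptions: `ARWEAVE_LOG` is an in-memory list standing in for Arweave, signatures use HMAC with a made-up key registry (real AO messages are signed with wallet keys), the process is a simple counter, and the MU's forwarding of results to other processes is not modeled. None of these class names come from AO's actual codebase.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Message:
    process: str
    sender: str
    data: int
    signature: bytes


# Stand-in for Arweave: an append-only list of (process, slot, message).
ARWEAVE_LOG: List[Tuple[str, int, Message]] = []

# Toy key registry (assumption); HMAC replaces real public-key signatures.
KEYS: Dict[str, bytes] = {"alice": b"alice-secret"}


def sign(sender: str, process: str, data: int) -> bytes:
    body = f"{process}:{data}".encode()
    return hmac.new(KEYS[sender], body, hashlib.sha256).digest()


class SchedulerUnit:
    """Assigns each message of a process the next sequence number."""

    def __init__(self) -> None:
        self.next_slot: Dict[str, int] = {}

    def schedule(self, msg: Message) -> int:
        slot = self.next_slot.get(msg.process, 0)
        self.next_slot[msg.process] = slot + 1
        ARWEAVE_LOG.append((msg.process, slot, msg))  # the order is now permanent
        return slot


class ComputeUnit:
    """Rebuilds a process's state by folding over its ordered log."""

    def evaluate(self, process: str) -> int:
        entries = sorted((e for e in ARWEAVE_LOG if e[0] == process), key=lambda e: e[1])
        state = 0
        for _, _, msg in entries:
            state += msg.data  # toy process: a running counter
        return state


class MessageUnit:
    """Verifies signatures, hands messages to the SU, returns the CU result."""

    def __init__(self, su: SchedulerUnit, cu: ComputeUnit) -> None:
        self.su, self.cu = su, cu

    def submit(self, msg: Message) -> int:
        expected = sign(msg.sender, msg.process, msg.data)
        if not hmac.compare_digest(expected, msg.signature):
            raise ValueError("bad signature")
        self.su.schedule(msg)
        return self.cu.evaluate(msg.process)


mu = MessageUnit(SchedulerUnit(), ComputeUnit())
for amount in (5, 7):
    result = mu.submit(Message("counter", "alice", amount, sign("alice", "counter", amount)))
print(result)                                # 12
print([slot for _, slot, _ in ARWEAVE_LOG])  # [0, 1]
```

Because the CU only reads the ordered log, swapping in a different or additional CU changes nothing about the result, which is the decoupling the next paragraph describes.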

The SU can be seen as the connection point between AO and the Arweave (AR) consensus layer, while the CUs form a decentralized computing power network. Consensus and computing resources in AO are thus completely decoupled: as more and higher-performance nodes join the CU group, AO as a whole gains more computing power, supports more processes and more complex computations, and can scale flexibly on demand.

So how are computation results kept verifiable? AO takes an economic approach: CU and SU nodes must stake a certain amount of AO tokens, and CU nodes compete on computing power, price, and similar factors to earn income by providing computation.

Because every request is recorded through Arweave's consensus, anyone can replay these requests to reconstruct the full history of state changes. If malicious behavior or a computation error is found, they can raise a challenge within the AO network, which brings in more CU nodes to recompute and obtain the correct result, and the faulty node's staked AO is confiscated. Arweave itself has no computing capability, so it does not verify the state of processes running on AO; it only records transactions faithfully, and the challenge process takes place entirely within the AO network. Processes on AO can therefore be seen as "sovereign chains" with their own consensus, with Arweave serving as their data availability layer.
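
The challenge idea can be illustrated with a minimal Python sketch. The article does not specify AO's actual challenge protocol or penalty rules, so everything here is an assumption: the process is a toy running total, the disputed result is settled by a simple majority of extra verifier CUs, and slashing means setting the claimant's stake to zero.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ComputeUnit:
    name: str
    stake: int
    faulty: bool = False  # simulates a buggy or malicious node

    def compute(self, log: List[int]) -> int:
        result = sum(log)  # toy deterministic process: a running total
        return result + 1 if self.faulty else result


def challenge(log: List[int], claimed: int, claimant: ComputeUnit,
              verifiers: List[ComputeUnit]) -> bool:
    """Recompute the public log on extra CUs; slash the claimant if the majority disagrees.

    Majority voting and full confiscation are hypothetical rules, not AO's spec.
    Use an odd number of verifiers to avoid ties.
    """
    results = [cu.compute(log) for cu in verifiers]
    majority = max(set(results), key=results.count)
    if majority != claimed:
        claimant.stake = 0  # confiscate the staked tokens
        return True
    return False


log = [3, 4, 5]  # the ordered requests, as read back from Arweave
bad = ComputeUnit("cu-1", stake=100, faulty=True)
verifiers = [ComputeUnit(f"cu-{i}", stake=100) for i in range(2, 5)]

slashed = challenge(log, bad.compute(log), bad, verifiers)
print(slashed, bad.stake)  # True 0
```

The key property is that the verifiers need nothing but the public, ordered log; they never have to trust the claimant's intermediate state.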

AO gives developers complete flexibility: they can freely choose nodes in the CU market, customize the virtual machine their programs run on, and even define a process's internal consensus mechanism.

ICP's Implementation

Unlike AO's decoupled node groups, ICP's underlying architecture uses relatively uniform data center nodes and offers structured resources in layers of subnets, from bottom to top: data centers, nodes, subnets, and software containers.

The bottom layer of the ICP network is a set of decentralized data centers running the ICP client software, which provides standardized compute nodes according to hardware performance. ICP's core governance system, the NNS (Network Nervous System), randomly combines these nodes into subnets. Nodes within a subnet process computing tasks, produce and propagate blocks, and reach consensus through an optimized BFT protocol.
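
The random grouping described above can be pictured with a short Python sketch. It is only an illustration of random, disjoint assignment: in practice subnet membership is set through NNS governance proposals, and the seeded shuffle, equal subnet sizes, and the `form_subnets` helper are all hypothetical.

```python
import random
from typing import List


def form_subnets(node_ids: List[str], subnet_size: int, seed: int) -> List[List[str]]:
    """Randomly partition nodes into disjoint subnets of equal size (hypothetical helper).

    Nodes left over after filling whole subnets stay unassigned.
    """
    rng = random.Random(seed)  # a shared seed lets every party reproduce the draw
    shuffled = node_ids[:]
    rng.shuffle(shuffled)
    full = len(shuffled) // subnet_size
    return [shuffled[i * subnet_size:(i + 1) * subnet_size] for i in range(full)]


nodes = [f"node-{i:02d}" for i in range(30)]
subnets = form_subnets(nodes, subnet_size=13, seed=42)
print(len(subnets), [len(s) for s in subnets])  # 2 [13, 13]
```

Each node lands in at most one subnet, matching the rule that a group of nodes runs only one subnet.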

The ICP network runs multiple subnets at the same time. Each group of nodes runs only one subnet and maintains its internal consensus; different subnets produce blocks in parallel at the same rate and can interact through cross-subnet requests.

Within each subnet, node resources are abstracted into "containers", in which applications run. Subnets have no large shared state: each container maintains only its own state and is subject to a maximum capacity (a limitation of the Wasm virtual machine). Container state is not recorded in subnet blocks either.
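
Assuming the capacity limit in question is the 32-bit address space of WebAssembly linear memory (wasm32), where memory is allocated in 64 KiB pages and at most 65,536 pages can be addressed, the ceiling works out as follows:

$$
65{,}536\ \text{pages} \times 65{,}536\ \tfrac{\text{bytes}}{\text{page}} = 2^{16} \cdot 2^{16} = 2^{32}\ \text{bytes} = 4\ \text{GiB}
$$

This matches the 4GB per-container figure discussed later in the comparison.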

Within a subnet, every computing task is executed redundantly on all nodes, while different subnets run in parallel. When the network needs to scale, ICP's core governance system, the NNS, dynamically adds and merges subnets to meet demand.

AO vs ICP

Both AO and ICP are built around the Actor message-passing model, a typical framework for concurrent distributed computing networks, and both use WebAssembly as their default execution virtual machine.

Unlike traditional blockchains, AO and ICP are not organized around a single chain of globally shared data. In the Actor model, virtual machine execution is assumed to be deterministic, so the system only needs to keep transaction requests consistent to keep the state within each process consistent. Multiple actors can run in parallel, which leaves ample room for scaling and can keep computing costs low enough for general-purpose workloads such as AI.

However, in the overall design philosophy, AO and ICP are on completely opposite sides.

Structured vs Modular

ICP's design resembles a traditional network model: it abstracts resources from the underlying data centers into fixed services, including hot storage, computing, and transmission. AO uses a more modular design that is more familiar to crypto developers, with transmission, consensus verification, computing, and storage resources fully separated into multiple node groups.

As a result, ICP imposes very high hardware requirements on nodes, which must meet the minimum requirements of system consensus. Developers must accept a unified, standardized program-hosting service whose resources are constrained per container; for example, each container currently has at most 4GB of available memory, which rules out some applications, such as running large AI models.

ICP also tries to meet diverse needs by creating specialized subnets, but this depends on the overall planning and development work of the DFINITY Foundation.

In AO, by contrast, the CU layer works like an open market for computing power, where developers choose the specifications and number of nodes according to their needs and price preferences. Developers can therefore run almost any process on AO. This is also friendlier to node operators: CUs and MUs can be scaled independently, which yields a higher degree of decentralization.

AO is more modular, supporting customized virtual machines, transaction ordering models, message-passing models, and payment methods. If developers need a private computing environment, for example, they can choose CUs that run inside a TEE (trusted execution environment) without waiting for AO to build official support. Modularity brings more flexibility and also lowers the barrier to entry for some developers.

Security

ICP relies on subnets: a process hosted on a subnet is executed on all of that subnet's nodes, and state is verified through improved BFT consensus among them. This introduces some redundancy, but the process's security is exactly that of its subnet.

Within a subnet, when two processes call each other, for example when process B takes the output of process A as its input, no additional security concerns arise; differences in security only matter when a call crosses two subnets. Subnets currently contain between 13 and 34 nodes, and finality is reached in 2 seconds.
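
To put those subnet sizes in perspective, BFT-style protocols such as ICP's consensus tolerate fewer than one third of nodes being faulty, i.e. they require $n \ge 3f + 1$ for $n$ nodes and $f$ faulty nodes. Applying that bound to the sizes quoted above:

$$
f_{\max} = \left\lfloor \frac{n-1}{3} \right\rfloor,
\qquad
n = 13 \Rightarrow f_{\max} = 4,
\qquad
n = 34 \Rightarrow f_{\max} = 11
$$

A larger subnet buys more fault tolerance at the cost of more redundant execution, which is the trade-off behind ICP's per-subnet security.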

In AO, computation is delegated to CUs that the developer selects from the market. For security, AO takes a more token-economic approach: CU nodes must stake $AO, and their results are trusted by default. Because AO records all requests through consensus on Arweave, anyone can read the public records and verify the current state by repeating the computation step by step. If a problem is found, more CUs can be selected from the market to join the computation and reach a more reliable consensus, and the staked CU that made the error can be penalized.

This fully decouples consensus from computing, giving AO far greater scalability and flexibility than ICP. Where verification is not required, developers can even compute on their own local devices and only need to upload the commands to Arweave through an SU.

However, this also complicates inter-process calls, because different processes may have different security guarantees. For example, if process B uses 9 CUs for redundant computation while process A runs on only one CU, then when B accepts requests from A, it must consider whether A might pass on a wrong result. Interaction between processes is therefore constrained by security. This also lengthens the time to finality, which may require waiting up to half an hour for confirmation on Arweave. One solution is to set a minimum number and standard of CUs, and to require different confirmation times for transactions of different values.
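
The mitigation in the last sentence can be sketched as a simple acceptance policy in Python. Everything here is hypothetical: the value tiers, CU minimums, and wait times are illustrative numbers (the 30-minute top tier only echoes the half-hour figure above), and `IncomingMessage`, `requirements`, and `accept` are invented names rather than part of AO.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical tiers: (max value in USD, minimum CUs, confirmation wait in minutes).
# Illustrative numbers only; AO does not prescribe them.
POLICY: List[Tuple[float, int, int]] = [
    (100.0, 1, 0),
    (10_000.0, 3, 5),
    (float("inf"), 9, 30),
]


@dataclass
class IncomingMessage:
    from_process: str
    value_usd: float
    cu_count: int           # CUs that redundantly computed the sender's result
    confirmations_min: int  # minutes since the message was recorded on Arweave


def requirements(value_usd: float) -> Tuple[int, int]:
    """Return (minimum CUs, minimum wait) for a transaction of this value."""
    for max_value, min_cus, wait in POLICY:
        if value_usd <= max_value:
            return min_cus, wait
    raise ValueError("unreachable: the last tier is unbounded")


def accept(msg: IncomingMessage) -> bool:
    """Process B's check before trusting a result sent by another process."""
    min_cus, wait = requirements(msg.value_usd)
    return msg.cu_count >= min_cus and msg.confirmations_min >= wait


print(accept(IncomingMessage("A", 50.0, cu_count=1, confirmations_min=0)))       # True
print(accept(IncomingMessage("A", 50_000.0, cu_count=1, confirmations_min=30)))  # False
print(accept(IncomingMessage("A", 50_000.0, cu_count=9, confirmations_min=30)))  # True
```

Small transfers from a single-CU process go through immediately, while high-value ones wait for both more redundancy and a longer confirmation window.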

Still, AO has an advantage that ICP lacks: permanent storage of the entire transaction history, so anyone can replay the state at any time. Although AO does not follow the traditional block-and-chain model, this fits better with crypto's ideal of verifiability by anyone. In ICP, subnet nodes only compute and reach consensus on results, without storing every transaction request, so the history cannot be verified. If a container acts maliciously and then chooses to delete itself, the misconduct becomes untraceable. ICP developers have voluntarily built a number of record-keeping containers, but this is still hard for crypto developers to accept.

Decentralization

ICP's degree of decentralization has long been criticized: node registration, subnet creation and merging, and other system-level operations must all be decided by a governance system called the NNS. ICP holders must stake to participate in the NNS, and because general-purpose computing across multiple replicas demands powerful hardware, node requirements are also very high. Together these create an extremely high barrier to participation. Consequently, new features and capabilities in ICP depend on the launch of new subnets, which must pass NNS governance and, in practice, are driven by the DFINITY Foundation, which holds a large share of the votes.

AO's fully decoupled approach gives more power back to developers: each independent process can be viewed as its own subnet, a sovereign L2, and developers only need to pay fees. The modular design also makes it easier for developers to introduce new features, and node providers face a lower participation cost than on ICP.

Finally

The ideal of a world computer is grand, but there is no optimal solution. ICP offers better security and fast finality, but the system is more complex, more restrictive, and some of its designs are hard for crypto developers to accept. AO's highly decoupled design makes scaling easier and provides more flexibility, which will appeal to developers, but it also makes security more complex.

Consider it from an evolutionary perspective. In the ever-changing crypto world, no single paradigm can keep absolute dominance for long, and even Ethereum is no exception (Solana is catching up). Only designs that are more decoupled and modular, and therefore easier to swap out, can evolve quickly, adapt to their environment, and survive challenges. As a latecomer, AO will become a strong competitor in decentralized general-purpose computing, especially in the AI field.