Author: Flagship

Image source: Generated by Boundless AI

How valuable is the title of "Former OpenAI Employee" in the market?

According to a report by Business Insider on February 25, Mira Murati, the former Chief Technology Officer of OpenAI, has just announced the launch of her new company, Thinking Machines Lab, which is raising $1 billion in funding at a $9 billion valuation.

So far, Thinking Machines Lab has not revealed any product or technology roadmap. The only public information about the company is its team of more than 20 former OpenAI employees and its vision: to build a future where "everyone has access to knowledge and tools, and AI serves the unique needs and goals of people."

Mira Murati and Thinking Machines Lab

The ability of OpenAI alumni to mobilize capital has set off a "snowball effect." Before Murati, Ilya Sutskever, OpenAI's former Chief Scientist, founded SSI, which is already valued at $30 billion on the strength of little more than its OpenAI pedigree and an idea.

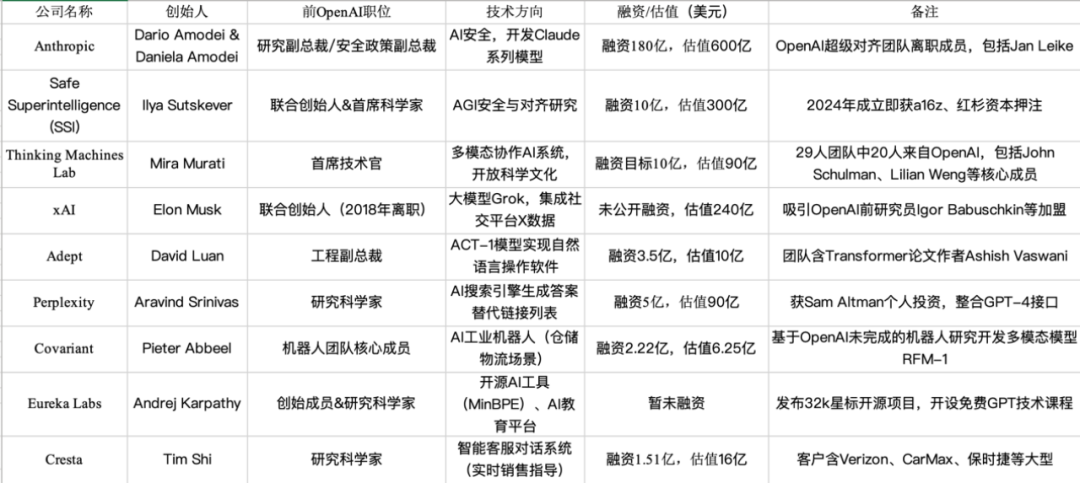

Since Elon Musk's departure from OpenAI in 2018, former OpenAI employees have founded more than 30 new companies with total funding of more than $9 billion. Together these companies form a complete ecosystem spanning AI safety (Anthropic), infrastructure (xAI), and vertical applications (Perplexity).

This recalls the Silicon Valley entrepreneurial wave that followed eBay's acquisition of PayPal in 2002, when founders such as Elon Musk and Peter Thiel left and formed the "PayPal Mafia," which gave rise to legendary companies such as Tesla, LinkedIn, and YouTube. OpenAI's departing employees are now forming an "OpenAI Mafia" of their own.

However, the "OpenAI Mafia" is following a more aggressive script. The "PayPal Mafia" took 10 years to produce two companies worth more than $100 billion, while in just two years since ChatGPT's launch the "OpenAI Mafia" has spawned five companies valued at more than $10 billion, including Anthropic ($61.5 billion), Ilya Sutskever's SSI ($30 billion), and Elon Musk's xAI ($24 billion). Over the next three years, the "OpenAI Mafia" is likely to produce more unicorns worth over $10 billion.

The new round of "talent fission" set off by the "OpenAI Mafia" is reverberating across Silicon Valley and even reshaping the global balance of power in AI.

The Fission Path of OpenAI

Of the 11 co-founders of OpenAI, only Sam Altman and Wojciech Zaremba, the head of the language and code generation team, are still employed.

2024 was the peak year for departures from OpenAI. That year, Ilya Sutskever (May) and John Schulman (August), among others, left the company. OpenAI's safety team shrank from 30 to 16 people, a 47% reduction. Senior leaders such as Chief Technology Officer Mira Murati and Chief Research Officer Bob McGrew left management. On the technical side, core talent departed as well: Alec Radford, lead designer of the GPT series; Tim Brooks, head of Sora, who joined Google DeepMind; and Andrej Karpathy, who left for a second time to start an education company. Deep learning expert Ian Goodfellow had already returned to Google years earlier.

"United, they are a raging fire; scattered, they are stars in the sky."

More than 45% of the core technical staff who joined OpenAI before 2018 have struck out on their own, and these new "schools" have broken up OpenAI's technical gene pool and regrouped it into three major strategic factions.

First is the "orthodox faction," which carries on OpenAI's genes; its members are ambitious builders of what could be called an OpenAI 2.0.

Mira Murati's Thinking Machines Lab has almost completely replicated OpenAI's R&D architecture: John Schulman is responsible for the reinforcement learning framework, Lilian Weng leads the AI safety system, and the neural architecture diagram of GPT-4 has even been directly used as the technical blueprint for new projects.

Their "Open Science Manifesto" takes direct aim at OpenAI's recent turn toward closed development, promising a "more transparent AGI development path" through a steady stream of technical blogs, papers, and code. It has also set off a chain reaction in the AI industry: three top researchers from Google DeepMind have joined, bringing the Transformer-XL architecture with them.

Ilya Sutskever's Safe Superintelligence Inc. (SSI), on the other hand, has chosen a different path. Sutskever, together with Daniel Gross and Daniel Levy, founded a company that has set aside all short-term commercial goals to focus on building "irreversible safe superintelligence," a technical framework that is almost a philosophical proposition. The company is newly established, yet a16z, Sequoia Capital, and other institutions have committed $1 billion to "foot the bill" for Sutskever's ideal.

Ilya Sutskever and SSI

The second faction is the "disruptors," who had already left before ChatGPT.

Anthropic, founded by Dario Amodei, has evolved from an internal "OpenAI opposition" into OpenAI's most dangerous competitor. Its Claude 3 series models are on par with GPT-4 across multiple benchmarks. Anthropic has also formed a deep strategic partnership with Amazon Web Services (AWS), which means it is gradually chipping away at OpenAI's foundation in computing power. The chip technology Anthropic is developing jointly with AWS may further weaken OpenAI's bargaining power in NVIDIA GPU procurement.

Another representative figure in this faction is Elon Musk. Although Musk left OpenAI in 2018, several founding members of his xAI also came from OpenAI, including Igor Babuschkin and Kyle Kosic (Kosic has since returned to OpenAI). Backed by Musk's vast resources, xAI threatens OpenAI on talent, data, and computing power. By tapping the real-time social data stream from Musk's X platform, xAI's Grok-3 can instantly pick up trending events on X and generate answers about them, whereas the training data behind ChatGPT only extends to 2023, a significant gap in timeliness. This data feedback loop is something that OpenAI, which relies on the Microsoft ecosystem, cannot easily replicate.

Yet Musk positions xAI not as a disruptor of OpenAI but as a way to recapture OpenAI's original vision. xAI insists on a "maximum open source" strategy, for example open-sourcing the Grok-1 model under the Apache 2.0 license to draw developers worldwide into its ecosystem. This stands in stark contrast to OpenAI's recent turn toward closed source (such as offering GPT-4 only as an API service).

The third faction consists of "game-changers" who are rewriting the logic of entire industries.

Perplexity, founded by former OpenAI research scientist Aravind Srinivas, was one of the first companies to use large AI models to reinvent the search engine: it generates answers directly with AI instead of returning a page of links. It now handles more than 20 million searches a day and has raised more than $500 million, at a $9 billion valuation.

Adept was founded by David Luan, OpenAI's former VP of Engineering, who worked on language, supercomputing, and reinforcement learning research, as well as on safety and policy for projects such as GPT-2, GPT-3, CLIP, and DALL-E. Adept develops AI agents that aim to help users automate complex tasks (such as producing compliant reports or designing drawings) by combining large models with tool-calling capabilities. Its ACT-1 model can operate office software and Photoshop directly. Luan and the rest of the company's core founding team have since joined Amazon's AGI team.

Covariant is a robotics AI startup valued at $1 billion. Its founding team came from OpenAI's robotics team, which was later disbanded, and its technical genes derive from experience developing GPT models. The company builds robotics foundation models that use multimodal AI to enable autonomous robot operation, with a particular focus on warehouse logistics automation. However, the three "OpenAI Mafia" members of Covariant's core founding team, Pieter Abbeel, Peter Chen, and Rocky Duan, have all joined Amazon.

Some "OpenAI Mafia" Startup Companies

Data source: Public information, compiled by Flagship

As AI shifts from a "tool" to a "factor of production," it has opened up three kinds of industrial opportunity: substitution scenarios (such as disrupting traditional search engines), incremental scenarios (such as the intelligent transformation of manufacturing), and reconstructive scenarios (such as breakthroughs in the life sciences). These scenarios share three features: the potential to build a data flywheel (user interaction data feeding back into models), deep interaction with the physical world (robot motion data, biological experiment data), and a regulatory gray area around ethics.

Technology spillover from OpenAI supplies the underlying driving force behind this industrial transformation. Its early open-source strategy (such as the staged, partial release of GPT-2) created a "dandelion effect" of technology diffusion, but once breakthroughs moved into deeper waters, closed-source commercialization became an inevitable choice.

This contradiction has produced two phenomena. On one hand, departing talent has carried the Transformer architecture, reinforcement learning, and other technologies into vertical scenarios (such as manufacturing and biotechnology), building barriers through scenario-specific data. On the other hand, the tech giants have secured technology footholds by acquiring talent, closing a loop of "technology harvesting."

When the moat becomes a watershed

While the "OpenAI Mafia" charges ahead, OpenAI itself is "struggling to move forward."

On technology and products, the release of GPT-5 has been repeatedly postponed, and the market widely sees ChatGPT, OpenAI's flagship product, as failing to keep pace with the industry.

In the market, newcomer DeepSeek is steadily catching up with OpenAI: its models perform close to ChatGPT at a training cost of only 5% of GPT-4's, and this low-cost replication path is eroding OpenAI's technological barriers.

Much of the "OpenAI Mafia's" rapid growth, however, stems from internal tensions at OpenAI itself.

By now, OpenAI's core research team has all but disintegrated: only Sam Altman and Wojciech Zaremba remain from the 11 co-founders, and 45% of the core researchers have already left.

Wojciech Zaremba

Co-founder Ilya Sutskever left to start SSI; founding member Andrej Karpathy left and now publicly shares his experience optimizing Transformers; and Tim Brooks, head of the Sora video generation project, joined Google DeepMind. On the technical team, more than half the authors of the early GPT versions have left, and many have joined OpenAI's competitors.

Meanwhile, according to data compiled by Lightcast, which tracks job postings, OpenAI's own hiring focus appears to have shifted. In 2021, 23% of the company's job postings were for general research positions; in 2024, general research made up only 4.4%, a sign of the changing standing of researchers at OpenAI.

The cultural conflict brought on by OpenAI's commercial transformation is becoming increasingly visible. As headcount has grown by 225% in three years, the early hacker spirit has gradually given way to a KPI system, and some researchers say openly that they have been "forced to shift from exploratory research to product iteration."

This strategic wavering has left OpenAI in a double bind: it must keep producing breakthrough technologies to sustain its valuation, while facing competitive pressure from former employees who can quickly replicate its methods.

The key to winning in the AI industry lies not in parameter breakthroughs in the lab, but in who can inject technological genes into the capillaries of the industrial world: the flow of answers in search engines, the trajectories of robotic arms, the molecular dynamics of biological cells. Whoever does so will reconstruct the underlying logic of the business world.

Is Silicon Valley going to split OpenAI?

The rapid rise of both the "OpenAI Mafia" and the "PayPal Mafia" owes much to the "blessing" of California law.

California has banned non-compete agreements since 1872, and this distinctive legal environment has been a catalyst for innovation in Silicon Valley. Under Section 16600 of the California Business and Professions Code, any contract that restrains someone from engaging in a lawful profession, trade, or business is void, apart from narrow exceptions such as the sale of a business. This institutional design has directly fueled the free movement of technical talent.

Silicon Valley programmers stay at a job for only 3-5 years on average, far shorter than in other tech hubs, and this high turnover has produced a "knowledge spillover" effect. For example, former employees of Fairchild Semiconductor went on to found 12 semiconductor giants, including Intel and AMD, laying Silicon Valley's industrial foundation.

Although a ban on non-competes may seem to leave innovative companies under-protected, it actually does more to promote innovation: the movement of technical staff speeds the diffusion of technology and lowers the barrier to innovation.

In 2024, the U.S. Federal Trade Commission (FTC) projected that a nationwide ban on non-compete agreements would further unleash American innovation: more than 8,500 additional new businesses a year and an average of 17,000-29,000 more patents a year over the next 10 years, an 11-19% increase. A federal court set the rule aside in August 2024, before it took effect.

Capital is another major driving force behind the rise of the "OpenAI Mafia."

Silicon Valley accounts for more than 30% of all U.S. venture capital, and firms such as Sequoia Capital and Kleiner Perkins have built a complete financing chain from seed round to IPO. This capital-intensive model cuts both ways.

First, capital is the engine of innovation, and angel investors contribute not just money but also help in pulling together industry resources. Uber started with just $200,000 of its two founders' own money and only 3 registered cars. After receiving $1.25 million in angel investment, it began raising money rapidly and was valued at $40 billion by 2015.

Venture capital's long-term focus on tech has also driven the industry's upgrading. Sequoia Capital invested in Apple in 1978 and Oracle in 1984, establishing its influence in semiconductors and computing; in 2020 it began investing heavily in artificial intelligence, backing projects such as OpenAI. Billions of dollars from giants such as Microsoft have also shortened the commercialization cycle of generative AI from years to months.

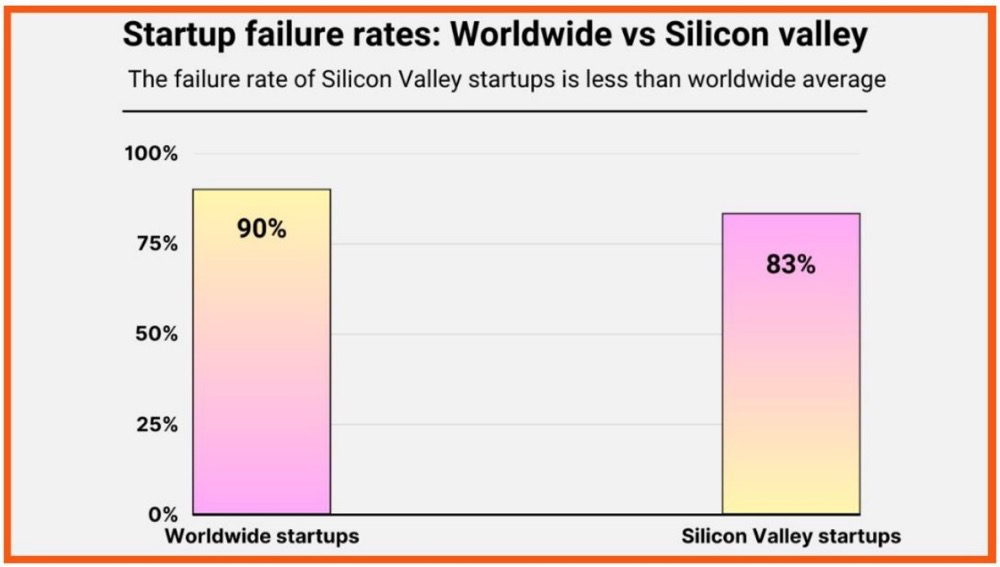

Capital also gives innovative companies more room to fail. For accelerators, weeding out failing projects quickly matters as much as backing successful ones. According to the startup analysis site startuptalky, the global startup failure rate is 90%, and the rate in Silicon Valley is 83%. Success is hard, but within venture capital's portfolio model the lessons of failure can quickly become nutrients for new projects.

Source: startuptalky.com

However, capital has also changed the development path of these innovative companies to a certain extent.

Top AI projects have been valued at more than $10 billion before launching any product, which indirectly makes it harder for small and mid-sized innovation teams to secure resources. The imbalance is even starker across regions: Dealroom research shows that the venture capital raised in the San Francisco Bay Area in a single quarter ($24.7 billion) equals the combined total of the next four hubs (ranked 2nd to 5th: London, Beijing, Bangalore, and Berlin). Meanwhile, although financing in India grew by 133%, 97% of the money went to "unicorns," companies valued at more than $1 billion.

Capital also shows strong "path dependence": it prefers areas with quantifiable returns, which leaves many emerging fundamental-science ventures struggling to secure solid financial backing. In quantum computing, for example, Guo Guoping, founder of the Chinese quantum computing startup Origin Quantum, had to sell his house to fund the company in its early days. Guo's first fundraising came in 2015; data released by China's Ministry of Science and Technology that year showed that China's total R&D spending was less than 2.2% of GDP, with basic research accounting for only 4.7% of R&D spending.

Beyond the lack of support, big capital is also using the lure of money to lock in top talent, pushing pay for CTO-level roles at startups into the tens of millions (of dollars in the U.S., of yuan in China) and creating a cycle in which giants monopolize talent and capital chases the giants.

However, the high valuations these "OpenAI Mafia" companies command before shipping any product also carry risks.

Between them, Mira Murati's and Ilya Sutskever's companies have raised, or are raising, billions of dollars on the strength of little more than an idea. This reflects the premium investors place on the technical capabilities of top-tier teams, but that trust rests on two risky assumptions: that AI technology can keep growing exponentially over the long term, and that vertical-scenario data can form monopolistic barriers. If these assumptions meet real challenges (such as slowing breakthroughs in multimodal models or sharply rising costs of acquiring industry data), overheated capital could trigger an industry shakeout.