What? The AI world has come up with a new trick again?

Although this time it's not as well-known as DeepSeek and Manus, it may still have a significant impact on the development of the AI industry.

Recently, a little-known small company called Inception Labs made big news by claiming to have developed the world's first commercial-grade diffusion large language model (dLLM) called Mercury.

This so-called diffusion large language model may trigger a wave that could completely overturn the basic trajectory of large models over the past three years.

The company has also released benchmark data: across a series of tests, Mercury Coder outperformed widely used models such as GPT-4o Mini and Claude 3.5 Haiku in most cases.

Moreover, these competing models have been specifically optimized for generation speed, but Mercury is still up to 10 times faster than them.

Not only that, Mercury can achieve a processing speed of over 1000 tokens per second on the NVIDIA H100 chip, while typical large models often require specialized AI chips to achieve such speeds.

It's worth noting that to achieve higher token processing speeds, customized AI chips have almost become a new battlefield for various manufacturers.
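To put the throughput figures above in perspective, here is a small back-of-the-envelope sketch. The 2000-token response length is a hypothetical value chosen for illustration, and the baseline speed simply takes the "up to 10 times faster" claim at face value; neither number comes from a measured benchmark.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time needed to emit num_tokens at a steady throughput."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


RESPONSE_TOKENS = 2000            # hypothetical response length
MERCURY_TPS = 1000.0              # the "over 1000 tokens per second" claim, rounded down
BASELINE_TPS = MERCURY_TPS / 10   # assumption: the "up to 10 times" gap taken literally

mercury_s = generation_seconds(RESPONSE_TOKENS, MERCURY_TPS)
baseline_s = generation_seconds(RESPONSE_TOKENS, BASELINE_TPS)

print(f"Mercury (claimed): {mercury_s:.1f} s")
print(f"Baseline (assumed): {baseline_s:.1f} s")
```

Under these assumptions, a long code answer would arrive in about 2 seconds instead of about 20, which is the difference between an interactive tool and one you wait on.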

Besides being incredibly fast, Mercury also shows excellent generation quality on Artificial Analysis's benchmark chart.

Although it is not yet as good as Claude 3.5 Haiku, maintaining this level of quality while producing an answer so quickly in one go is already very impressive.

We also tried a few examples in the official Mercury Coder Playground. The results were quite good, and the speed is indeed extremely fast.

Prompt: Implement the game Snake in HTML5. Include a reset button. Make sure the snake doesn't move too fast.

Prompt: Write minesweeper in HTML5, CSS, and Javascript. Include a timer and a banner that declares the end of the game.

Prompt: Create a pong game in HTML5.

The impressive thing about Mercury is not its actual performance, but the new possibilities it brings to the AI world: who says large language models have to follow the Transformer path?

In this AI era, everyone is probably sick of hearing about Transformer and Diffusion, with Transformer going solo, Diffusion flying solo, or the two collaborating.

Essentially, these two represent different evolutionary directions of AI, and it can even be said that the "cognitive" mechanisms of Transformer and Diffusion are different.

Transformer-based language models work in a typically human-like, chain-of-thought fashion. They are autoregressive, meaning they have a notion of order: each piece of output has to be generated before the next one can be.

So what we see in AI generation now is a gradual, step-by-step process from top to bottom.

Diffusion, on the other hand, is counterintuitive, directly going from blurry to clear through a denoising process.

It's like when you put on glasses, the entire scene becomes clear, but you didn't go through a step-by-step process to get there.

So the results generated by Mercury are initially a blurry mess, and then quickly, quickly, biu biu biu, it all comes together.
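The contrast between the two generation styles can be sketched with a toy example. This is not how Mercury works internally (no technical details have been published); the "model" here is a stand-in that simply copies from a known target, and the function names are made up for illustration. The point is only the shape of the process: one token per step from left to right, versus a fully masked canvas that is filled in over a few parallel steps.

```python
import random

MASK = "_"  # placeholder for a position that has not been resolved yet


def autoregressive_decode(target):
    """Emit one token per step, left to right; each step extends the prefix."""
    out, steps = [], 0
    while len(out) < len(target):
        out.append(target[len(out)])  # stand-in for "predict next token from prefix"
        steps += 1
    return out, steps


def diffusion_style_decode(target, num_steps, seed=0):
    """Start fully masked and resolve a batch of positions at every step."""
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")
    rng = random.Random(seed)
    n = len(target)
    order = list(range(n))
    rng.shuffle(order)                      # positions clear up in no fixed order
    per_step = max(1, -(-n // num_steps))   # ceiling division
    canvas = [MASK] * n
    snapshots = []
    for start in range(0, n, per_step):
        for pos in order[start:start + per_step]:
            canvas[pos] = target[pos]       # stand-in for "denoise this position"
        snapshots.append("".join(canvas))
    return canvas, len(snapshots), snapshots


target = list("print('hello')")
ar_out, ar_steps = autoregressive_decode(target)
df_out, df_steps, df_snapshots = diffusion_style_decode(target, num_steps=4)

print(f"autoregressive: {ar_steps} steps")
print(f"diffusion-style: {df_steps} steps")
for snap in df_snapshots:
    print(snap)
```

For this 14-character target, the autoregressive loop takes 14 steps while the diffusion-style loop takes 4, and the printed snapshots go from mostly masked to fully clear. A real diffusion language model would predict tokens with a neural network at each step and may revise earlier guesses, which this toy does not attempt.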

To borrow an analogy, today's Transformer is like a CPU working through tasks one after another, while Diffusion is like a GPU working on many of them at once.

Gradually, Transformer has begun to reveal its shortcomings, the most troublesome being that the computational cost of its attention mechanism grows quadratically with the length of the input.

This rapid growth in computation brings various constraints, such as a significant drop in inference speed, which makes it hard to meet real-world needs in areas like long text and video.

Further, the constantly increasing cost also means steeply rising hardware requirements, which has hindered the true integration of AI into people's lives.

So the industry has always been trying to alleviate the computational complexity of Transformer models.
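A quick sketch shows why sequence length hurts so much. In standard self-attention, every token is scored against every other token, so the score matrix has one entry per pair. The helper name below is made up for illustration, and the count ignores heads, layers, and hidden size, which only multiply the total by a constant factor.

```python
def attention_score_entries(seq_len: int) -> int:
    """Number of query-key scores in one full self-attention matrix."""
    return seq_len * seq_len


base = attention_score_entries(1_000)
for n in (1_000, 2_000, 4_000, 8_000):
    entries = attention_score_entries(n)
    print(f"{n:>5} tokens -> {entries:>11,} scores ({entries // base}x the 1,000-token cost)")
```

Doubling the input length quadruples the number of scores, so an input 8 times longer costs 64 times as much for this part of the computation.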

The emergence of Mercury seems to be reminding everyone that if it's too difficult to reduce the computational complexity of Transformer, why not try a new approach?

The Diffusion route behind Mercury is hardly unfamiliar: we have already seen it at work in early hits like Stable Diffusion, Midjourney, and DALL-E 2.

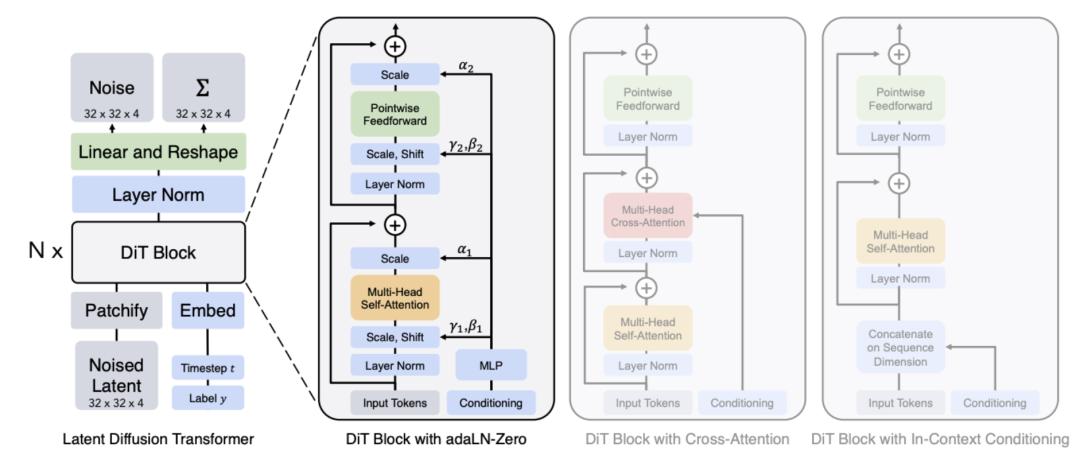

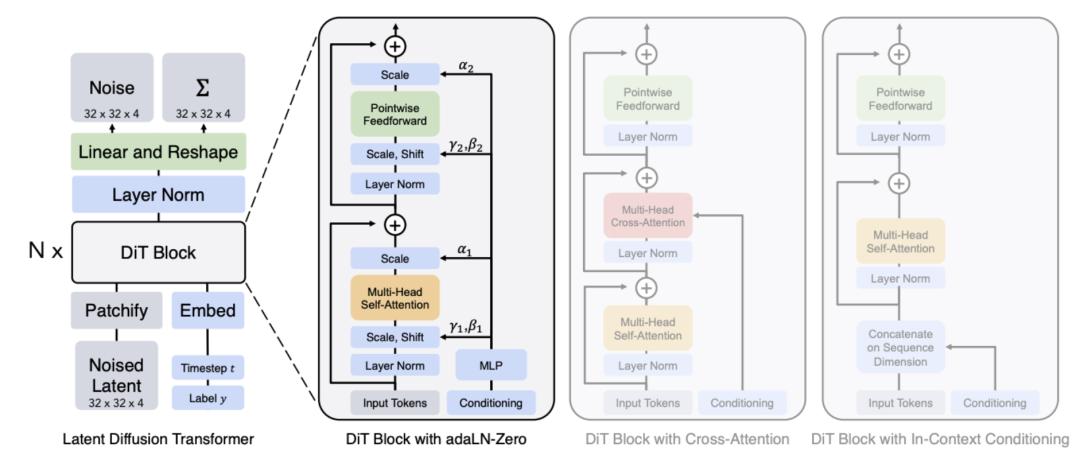

Even OpenAI's Sora uses a Diffusion Transformer (DiT), a combination of Transformer and Diffusion.

The idea is appealing, but Diffusion had been all but written off by many people, who believed it could not handle language modeling.

The defining feature of Diffusion is that it is not autoregressive and does not depend on what was generated before, which is why it can save resources and speed up generation.

The price is that generation accuracy is harder to control. Just look at today's text-to-image and text-to-video models, which still struggle to render fine details such as hands, people eating noodles, and text.

However, Mercury's breakthrough shows for the first time, at a commercial level, that Diffusion can also do the work of Transformer.

Unfortunately, no technical documentation for Mercury has been released yet, so we cannot tell how it tackled the problem of generation quality.

But from the opponents it has chosen, small models such as Claude 3.5 Haiku, GPT-4o Mini, Qwen2.5 Coder 7B, and DeepSeek V2 Lite, we can also see that Mercury, the most powerful Diffusion language model so far, is still not very large.

In our own testing, the officially recommended prompts produced fairly accurate results, but with custom prompts the error rate was rather high.

The stability of the output is also mediocre: the first generation is often good, but the next run may not be.

Prompt: Use HTML to write an animation of the solar system simulation operation

Still, Mercury's achievement is undoubtedly remarkable. Given how strong Diffusion already is in multi-modal generation, it is hard not to imagine that if Diffusion turns out to be the better path for large AI models (which is not impossible), connecting text with other modalities in the future could come more naturally.

I recently watched the movie "Arrival", in which the aliens do not think in a chain of 1, 2, 3, 4... the way humans do. Different ways of thinking will obviously bring more possibilities.

So the question arises: who says that AI must think like humans? For AI, isn't Diffusion's way of thinking more in line with the nature of "silicon-based life"?

Of course, this is just me rambling. Interestingly, though, Mercury is both the planet in the solar system and the messenger of Roman mythology, who is known for running very fast. In astrology, it also represents a person's way of thinking and ability to communicate.

We can look forward to Mercury opening up new paths for AI.

Image and data sources:

X.com

Mercury official website

OpenAI: Generating videos on Sora

TechCrunch: Inception emerges from stealth with a new type of AI model

AimResearch: What Is a Diffusion LLM and Why Does It Matter?

Zhihu: How to evaluate Inception Lab's diffusion large language model Mercury coder?

This article is from the WeChat public account "Chaping", author: Bajie, editors: Jiangjiang & Mianxian, authorized by 36Kr for release.