Author:Pavel Paramonov

Compiled by: TechFlow

In a recent tweet, @newmichwill, the founder of Curve Finance, stated that the primary purpose of cryptocurrencies is DeFi (Decentralized Finance), and that AI (Artificial Intelligence) does not need cryptocurrencies at all. While I agree that DeFi is an important component of the crypto space, I do not agree with the view that AI does not need cryptocurrencies.

With the rise of AI agents, many agents often come with a token, leading people to believe that the intersection of cryptocurrencies and AI is merely these AI agents. Another important and overlooked topic is "Decentralized AI," which is closely related to the training of AI models themselves.

My dissatisfaction with certain narratives is that most users blindly believe that something is important and useful just because it is popular, and even worse, they believe that the sole purpose of these narratives is to extract value (in other words, to make money) as much as possible.

When discussing Decentralized AI, we should first ask ourselves: Why does AI need to be decentralized? And what are the consequences of this?

It turns out that the idea of decentralization is almost inevitably associated with the concept of "Incentive Alignment."

In the field of AI, there are many fundamental issues that can be solved through cryptographic technology, and some mechanisms not only can solve existing problems, but also can add more credibility to AI.

So, why does AI need cryptocurrencies?

1. High computational costs limit participation and innovation

Whether fortunate or not, large AI models require a vast amount of computational resources, which naturally limits the participation of many potential users. In most cases, AI models require massive data resources and actual computing power, which are almost impossible for individuals to bear.

This problem is particularly prominent in open-source development. Contributors not only need to invest time to train the models, but also must invest computing resources, which makes open-source development inefficient.

Indeed, individuals can invest a lot of resources to run AI models, just as users can allocate computing resources to run their own blockchain nodes.

However, this does not fundamentally solve the problem, as the computing power is still insufficient to complete the relevant tasks.

Independent developers or researchers cannot participate in the development of large AI models like LLaMA, simply because they cannot afford the computational costs required to train the models: thousands of GPUs, data centers, and additional infrastructure are needed.

Here are some scale-aware data points:

→ Elon Musk stated that the latest Grok 3 model used 100,000 Nvidia H100 GPUs for training.

→ Each chip is worth about $30,000.

→ The total cost of the AI chips used to train Grok 3 is around $3 billion.

This problem is somewhat similar to the process of building a startup, where individuals may have the time, technical capabilities, and execution plan, but initially lack the resources to realize their vision.

As @dbarabander pointed out, traditional open-source software projects only require contributors to donate their time, while open-source AI projects require both time and a large amount of resources, such as computing power and data.

Relying solely on goodwill and the efforts of volunteers is not enough to motivate enough individuals or teams to provide these high-cost resources. Additional incentive mechanisms are a necessary condition to drive participation.

2. Cryptographic technology is the best tool for achieving incentive alignment

Incentive Alignment refers to the establishment of rules that encourage participants to contribute to the system while also benefiting themselves.

Cryptographic technology has numerous successful cases in helping different systems achieve Incentive Alignment, and one of the most prominent examples is the Decentralized Physical Infrastructure Network (DePIN) industry, which perfectly aligns with this concept.

For example, projects like @helium and @rendernetwork have achieved Incentive Alignment through distributed node and GPU networks, becoming exemplars.

So, why can't we apply this model to the AI field to make its ecosystem more open and accessible?

It turns out that we can.

The core of driving the development of Web3 and cryptographic technology is "ownership."

You own your own data, you own your own incentive mechanisms, and even when you hold certain tokens, you also own a part of the network. Granting ownership to resource providers can motivate them to contribute their assets to the project, expecting a return from the success of the network.

To make AI more widespread, cryptographic technology is the optimal solution. Developers can freely share model designs across projects, while computing and data providers can earn ownership shares (incentives) by providing resources.

3. Incentive Alignment is closely related to verifiability

If we envision a Decentralized AI system with proper Incentive Alignment, it should inherit some characteristics of classic blockchain mechanisms:

Network Effects.

Low initial requirements, where nodes can be rewarded through future earnings.

Slashing Mechanisms, to punish malicious actors.

Especially for Slashing Mechanisms, we need verifiability. If we cannot verify who the malicious actors are, we cannot punish them, which will make the system highly vulnerable to cheaters, especially in the case of cross-team collaboration.

In a Decentralized AI system, verifiability is crucial, as we do not have a centralized point of trust. Instead, we seek a trustless but verifiable system. Here are some components that may require verifiability:

Benchmark Phase: The system outperforms others on certain metrics (e.g., x, y, z).

Inference Phase: The system's operation is correct, i.e., the "thinking" stage of AI.

Training Phase: The system is trained or adjusted correctly.

Data Phase: The system collects data correctly.

Currently, there are hundreds of teams building projects on @eigenlayer, but I've recently noticed that the focus on AI is higher than ever, and I'm wondering if this aligns with its initial reStaking vision.

Any AI system aiming to achieve Incentive Alignment must be verifiable.

In this case, Slashing Mechanisms are equivalent to verifiability: if a Decentralized system can punish malicious actors, it means it can identify and verify the existence of such malicious behavior.

If the system is verifiable, then AI can leverage cryptographic technology to access global computing and data resources, creating larger and stronger models. Because more resources (computing + data) usually lead to better models (at least in the current technological world).

@hyperbolic_labs has already demonstrated the potential of collaborative computing resources. Any user can rent GPUs to train more complex AI models than they could run at home, and at a lower cost.

How to make AI verification both efficient and verifiable?

One might say that there are now many cloud solutions available to rent GPUs, which have already solved the problem of computing resources.

However, cloud solutions like AWS or Google Cloud are highly centralized and employ a so-called "Waitlist Strategy," artificially creating high demand to drive up prices. This phenomenon is particularly common in the oligopolistic landscape of this field.

In fact, there are vast amounts of idle GPU resources in data centers, mining farms, and even in the hands of individuals, which could be used to contribute to AI model training computations, but are being wasted.

You may have heard of @getgrass_io, which allows users to sell their unused bandwidth to enterprises, avoiding bandwidth waste and earning rewards.

I'm not saying that computing resources are infinite, but any system can be optimized to achieve a win-win situation: on the one hand, providing a more open market for those who need more resources to train AI models; on the other hand, rewarding those who contribute these resources.

The Hyperbolic team has developed an open GPU market. Here, users can rent GPUs for AI model training, saving up to 75% of the cost, while GPU providers can monetize their idle resources and earn income.

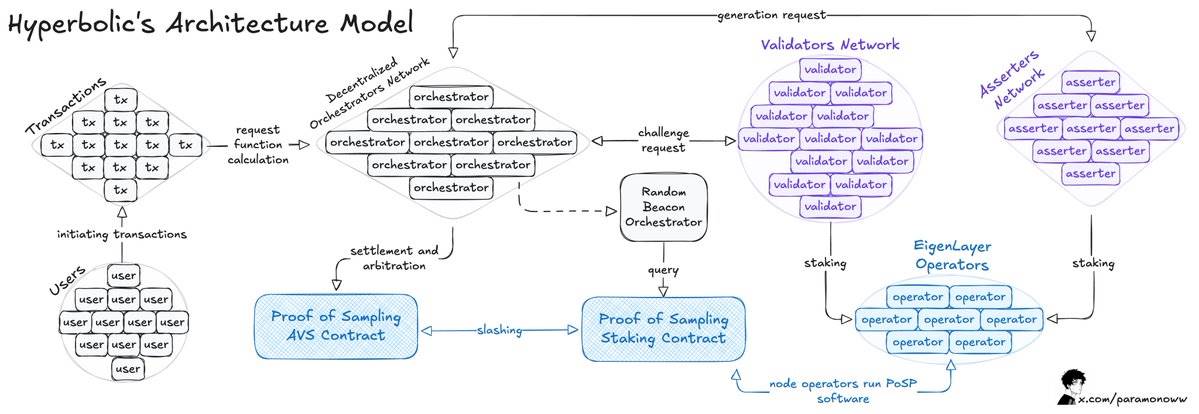

The following is an overview of how it works:

Hyperbolic organizes the connected GPUs into clusters and nodes, allowing computing power to be scaled according to demand.

The core of the architectural model is the "Proof of Sampling" model, which features sampling transactions: by randomly selecting and verifying transactions, the workload and computational requirements are reduced.

The main issue arises in the AI inference process, where each inference running on the network needs to be verified, and it is best to avoid the significant computational overhead introduced by other mechanisms.

As I mentioned earlier, if something can be verified, then if the verification result shows that the behavior violates the rules, it must be punished (Slashing).

When Hyperbolic adopts the AVS (Adaptive Verification System) model, it adds more verifiability to the system. In this model, the verifiers are randomly selected to verify the output results, thus aligning the incentives - in this mechanism, dishonest behavior is unprofitable.

To train an AI model and make it more robust, the main resources needed are computing power and data. Renting computing power is a solution, but we still need to obtain data from somewhere, and we need diverse data to avoid potential biases in the model.

Verifying data from different sources for AI

The more data, the better the model; but the problem is that you usually need diverse data. This is a major challenge facing AI models.

Data protocols have existed for decades. Whether the data is public or private, data brokers collect this data in some way, possibly paying or not, and then sell it to make a profit.

The problems we face in obtaining suitable data for AI models include: single points of failure, censorship, and a lack of a trustless way to provide real and reliable data to "feed" the AI models.

So, who needs this kind of data?

First, it's AI researchers and developers who want to train and infer their models with real and appropriate inputs.

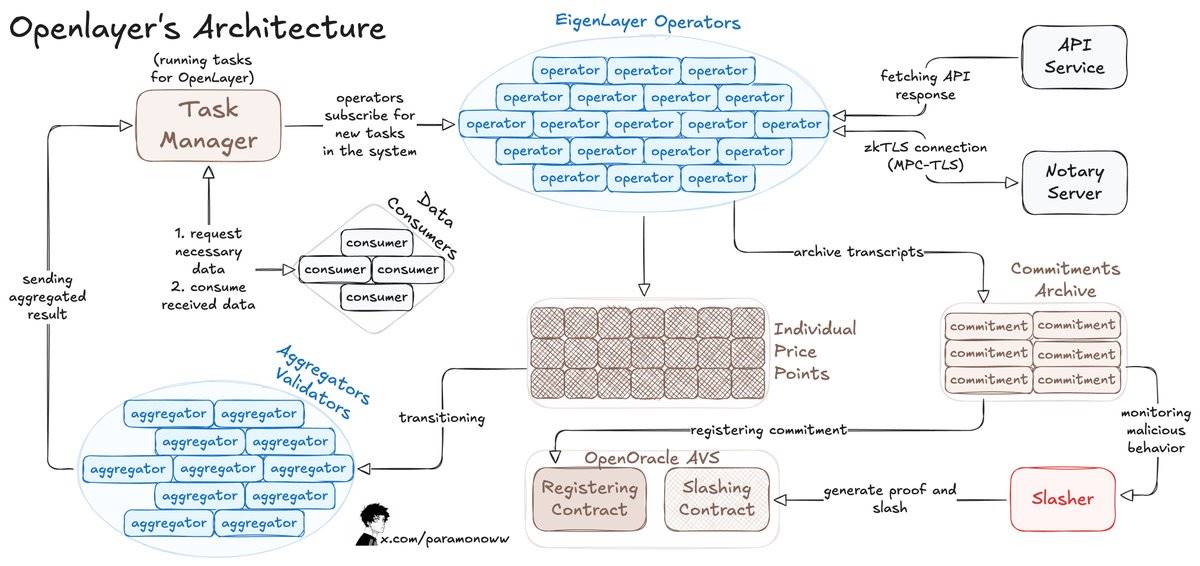

For example, OpenLayer allows anyone to add data streams to the system or AI model without permission, and the system can record each available data in a verifiable way.

OpenLayer also uses zkTLS (Zero-Knowledge Transport Layer Security), which I described in detail in a previous article. This protocol ensures that the operators report the data they actually obtained from the sources (verifiability).

This is how OpenLayer works:

Data consumers publish data requests to OpenLayer's smart contract and retrieve the results using a contract-based (on-chain or off-chain) API similar to a primary data oracle.

Operators register with EigenLayer to secure the staked assets for OpenLayer's AVS, and run the AVS software.

Operators subscribe to tasks, process and submit data to OpenLayer, while storing the original responses and proofs in decentralized storage.

For variable results, aggregators (special operators) will standardize the outputs.

Developers can request the latest data from any website and integrate it into the network. If you are developing an AI-related project, you can access reliable real-time data.

After discussing the AI computation process and ways to obtain verifiable data, the next focus should be on the two core components of AI models: the computation itself and its verification.

AI computation must be verified to ensure correctness

Ideally, nodes must prove their computational contributions to ensure the proper functioning of the system.

In the worst case, nodes may falsely claim to provide computing power, but actually do no real work.

Requiring nodes to prove their contributions can ensure that only legitimate participants are recognized, thereby avoiding malicious behavior. This mechanism is very similar to traditional Proof of Work, but the type of work performed by the nodes is different.

Even if we introduce appropriate incentive alignment mechanisms, if nodes cannot prove their work without permission, they may receive rewards that do not match their actual contributions, or even lead to unfair reward distribution.

If the network cannot evaluate computational contributions, it may result in some nodes being assigned tasks beyond their capabilities, while other nodes remain idle, ultimately leading to inefficiency or system failures.

By proving computational contributions, the network can use standardized metrics (e.g., FLOPS, Floating-Point Operations Per Second) to quantify the effort of each node. This way, rewards can be allocated based on the actual work completed, rather than just on whether the node is present in the network.

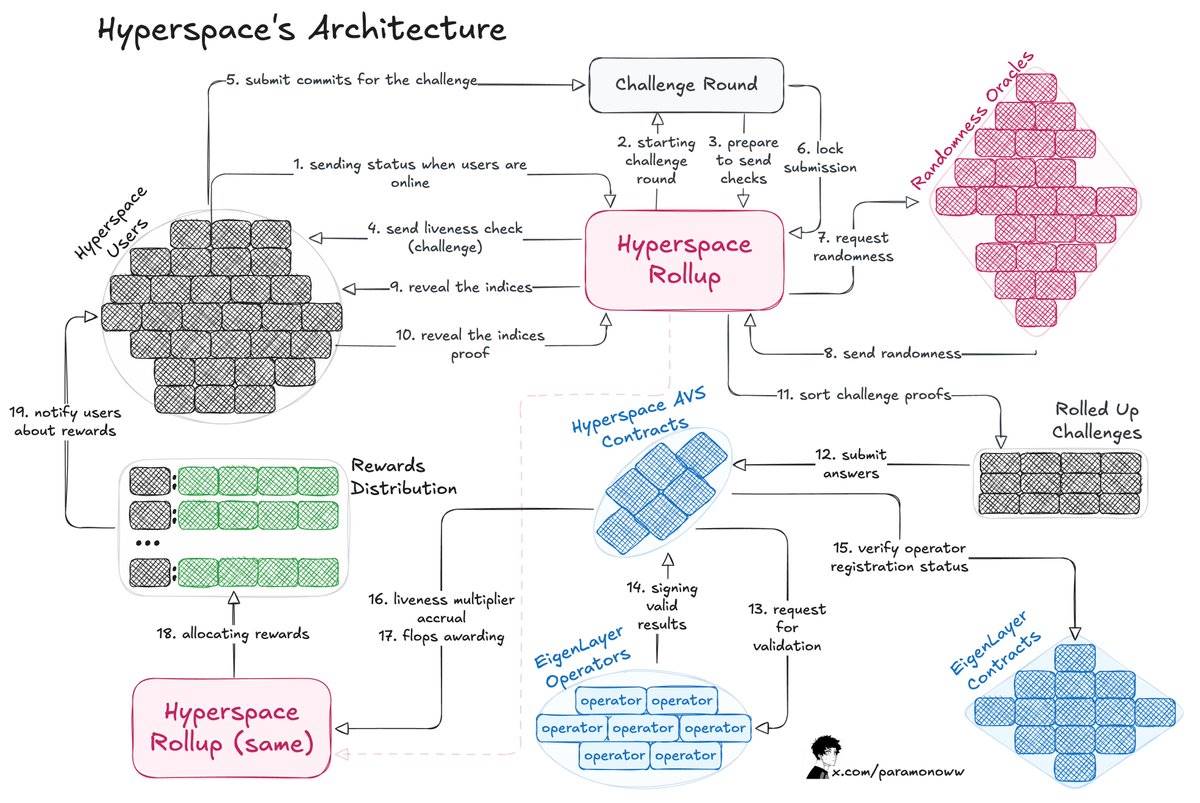

The team from @HyperspaceAI has developed a "Proof-of-FLOPS" system, allowing nodes to rent out their unused computing power. In exchange, they will receive "flops" credits, which will serve as the network's universal currency.

The workflow of this architecture is as follows:

The process starts with issuing a challenge to the user, who responds by submitting a commitment to the challenge.

The Hyperspace Rollup manages the process, ensuring the submission's security and obtaining a random number from an oracle.

The user reveals the index, and the challenge process is completed.

Operators check the responses and notify the Hyperspace AVS contract of the valid results, which are then confirmed through the EigenLayer contract.

Liveness Multipliers are calculated, and flops credits are granted to the user.

Proving computational contributions provides a clear picture of each node's capabilities, allowing the system to intelligently allocate tasks - assigning complex AI computations to high-performance nodes and lighter tasks to lower-capability nodes.

The most interesting part is how to make this system verifiable, so that anyone can prove the correctness of the work completed. Hyperspace's AVS system continuously sends challenges, random number requests, and executes multi-layer verification processes, as shown in the architectural diagram mentioned earlier.

Operators can participate in the system with confidence, as the results are verified, and the reward distribution is fair. If the results are incorrect, the malicious actors will undoubtedly be punished (Slashed).

There are several important reasons for verifying AI computation results:

Encouraging nodes to join and contribute resources.

Fairly distributing rewards based on effort.

Ensuring contributions directly support specific AI models.

Effectively allocating tasks based on the nodes' verification capabilities.

Decentralization and Verifiability in AI

As @yb_effect pointed out, "Decentralized" and "Distributed" are completely different concepts. Distributed only means that the hardware is distributed in different locations, but there is still a centralized connection point.

Decentralization, on the other hand, means that there is no single master node, and the training process can handle failures, similar to how most blockchains operate today.

If an AI network is to achieve true decentralization, it will require multiple solutions, but one thing is certain: we need to verify almost everything.

If you want to build an AI model or agent, you need to ensure that every component and every dependency is verified.

Inference, training, data, oracles - all of these can be verified, not only introducing crypto-based rewards aligned with incentives, but also making the system more fair and efficient.