Mary Meeker, known as the "Queen of the Internet", founded BOND (Bond Capital), a firm known around the world for its annual Internet trend reports. BOND recently published its latest report, "Trends – Artificial Intelligence", which spends 340 pages laying out how AI is reshaping the world at remarkable speed.

This is the second of three articles on the report, which covers a great deal of ground. The first article is here.

AI users + usage + capital expenditure growth = unprecedented

Unprecedented adoption of consumer/user AI:

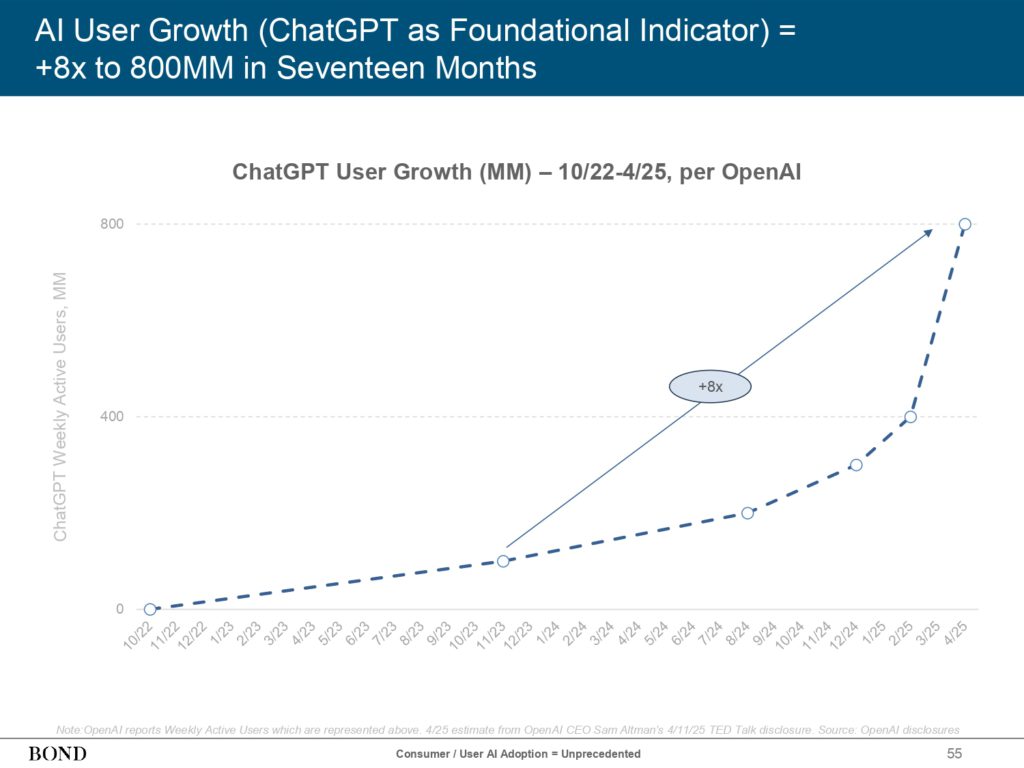

Phenomenal user growth: Taking ChatGPT as the industry benchmark, its weekly active users grew 8-fold in just 17 months, to 800 million.

This figure not only demonstrates the appeal of AI technology, but also reflects its ability to quickly penetrate the mass market.
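To put the headline number in perspective, the 8-fold increase over 17 months can be converted into an average monthly growth rate. The sketch below assumes smooth compound growth, which real user curves rarely follow exactly; the function name `implied_periodic_rate` is made up for this illustration.

```python
def implied_periodic_rate(multiple: float, periods: float) -> float:
    """Constant per-period growth rate that compounds to `multiple` over `periods`."""
    if multiple <= 0 or periods <= 0:
        raise ValueError("multiple and periods must be positive")
    return multiple ** (1.0 / periods) - 1.0


# Figures from the text: weekly active users grew 8x in 17 months.
# Assumption: growth compounded evenly month over month.
monthly_rate = implied_periodic_rate(8, 17)
print(f"Implied average monthly growth: {monthly_rate:.1%}")
```

Under that assumption, the figure works out to roughly 13% growth per month, sustained for nearly a year and a half.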

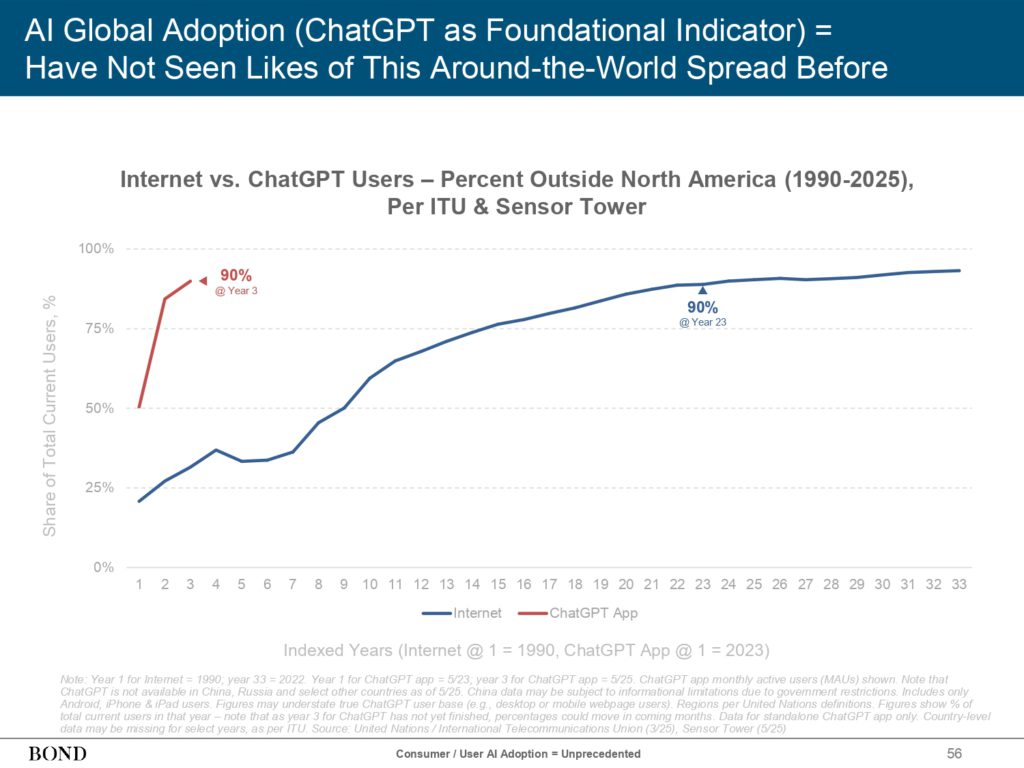

Global synchronous diffusion: Earlier technologies spread gradually from their place of origin to the rest of the world, whereas this AI wave is taking off almost everywhere at once. Measured by the share of users outside North America, the Internet took 23 years to reach 90%, while ChatGPT reached a similar level in only 3 years, which underscores how global and how fast the spread of AI has been.

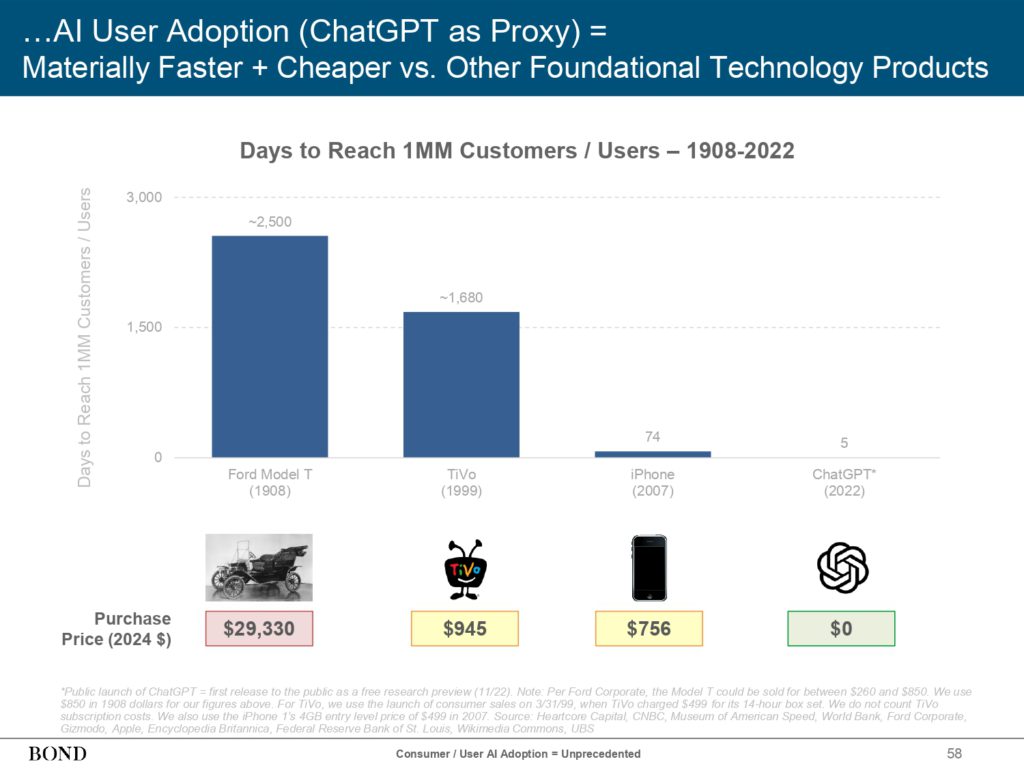

Products that surpass the Internet era: Compared with the star products of the Internet era, ChatGPT's user acquisition speed is even more striking. It took ChatGPT only 0.2 years (a little over two months) to reach 100 million users, much faster than TikTok, Instagram, Facebook and other growth stories.

Disruptive acquisition cost: Compared with historical foundational technology products such as the Ford Model T, TiVo, and iPhone, ChatGPT is not only far ahead in the speed at which it reached millions of users/customers, but, more importantly, its initial user acquisition cost was almost zero. This freemium, low-threshold strategy has greatly accelerated the popularization of AI technology.

Acceleration of household penetration: In the United States, the time it takes for new technology products to reach 50% household penetration has been halved with each generation. AI technology is expected to continue or even accelerate this pattern. The report predicts that it will only take about 3 years to reach this milestone, which will have a profound impact on all aspects of social life.

NVIDIA's ecosystem is booming: As a core provider of AI computing power, NVIDIA has seen its ecosystem grow explosively over the past four years. The numbers of developers, AI startups, and GPU-based applications in that ecosystem have each grown by more than 100%. This shows that the software and application ecosystem around AI hardware is maturing rapidly.

Tech giants make AI a top strategic priority:

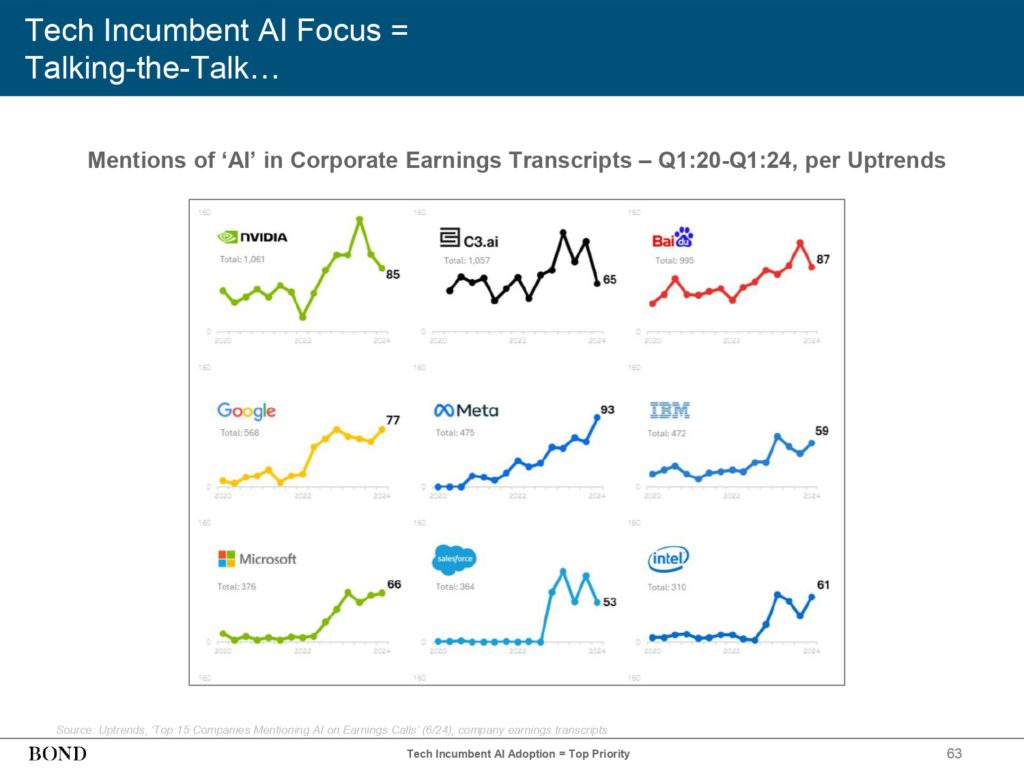

Focus of earnings conference calls: From NVIDIA, C3.ai and Baidu to Google, Meta, IBM, Microsoft, Salesforce and Intel, almost all leading technology companies have made "AI" a core topic of their earnings calls, and the number of mentions has risen significantly. This reflects how highly both capital markets and corporate management rate AI's strategic importance.

Executives’ shared vision: Leaders of the major technology companies, such as Amazon’s Andy Jassy and Google’s Sundar Pichai, have publicly expressed their firm belief in the disruptive potential of AI and treat it as core to their companies’ future strategy. Their remarks describe the broad prospects of AI technology and also reveal the ambitions and positioning of industry leaders in the field.

Attention of S&P 500 companies: In the wider corporate world, the influence of AI is also rapidly expanding. The proportion of S&P 500 companies mentioning "AI" in their quarterly earnings calls has risen from negligible in 2015 to 50% in the fourth quarter of 2024. This shows that AI has transformed from a niche technology concept to an important factor affecting mainstream corporate decision-making.

Shift in strategic focus of global enterprises: Over the next two years, global enterprises plan to aim their generative AI efforts mainly at areas that can directly drive revenue growth, such as improving product/output efficiency, optimizing customer service, and improving sales efficiency, rather than only at cutting costs. This reflects what enterprises expect of AI's ability to create value.

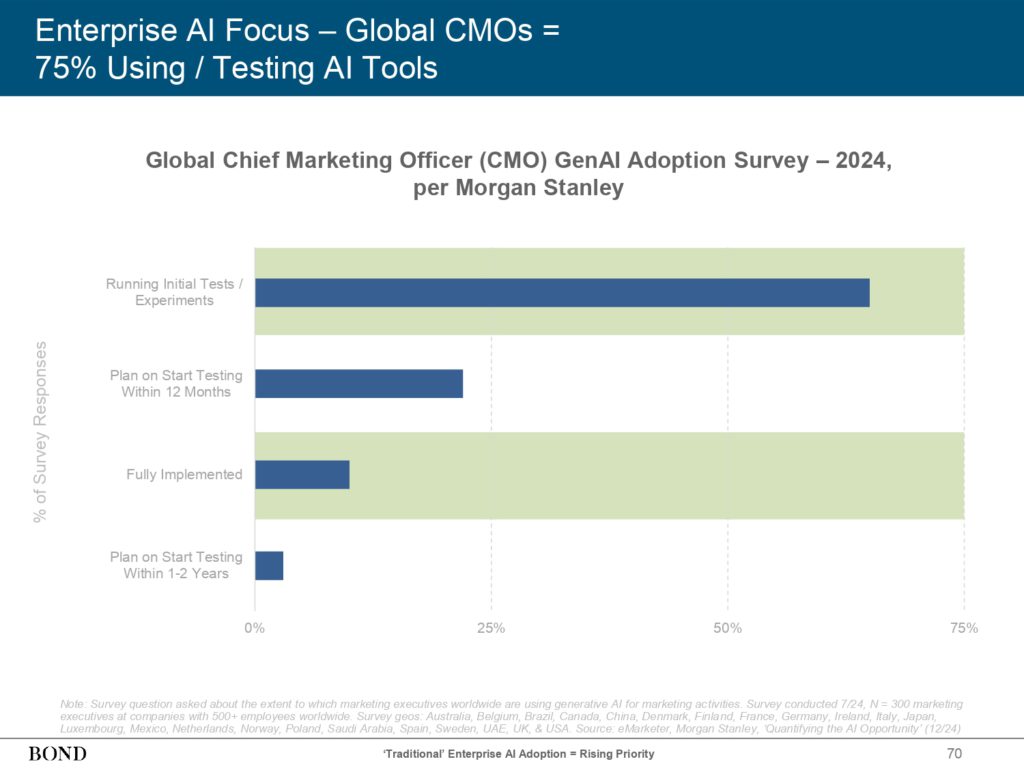

Active exploration by CMO groups: In the field of marketing, the application of AI has also become popular. As many as 75% of global chief marketing officers (CMOs) said they are actively using or testing AI tools to improve marketing effectiveness and consumer insight.

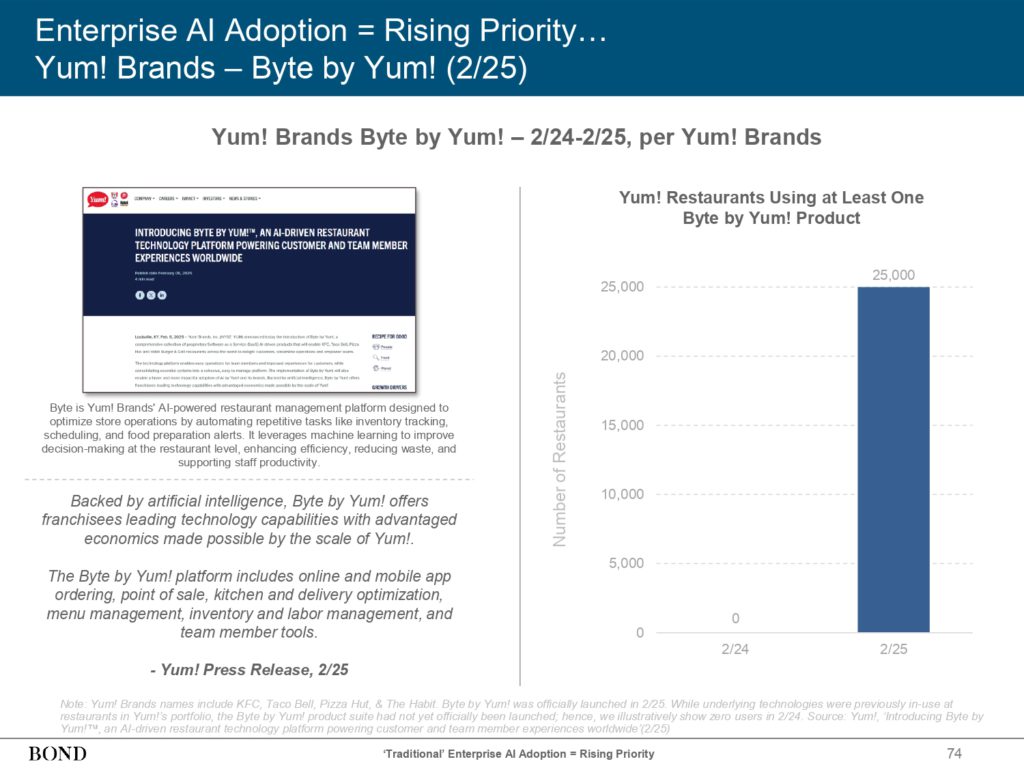

Practical cases in finance, healthcare and other industries: Bank of America's Erica virtual assistant, JPMorgan Chase's end-to-end AI modernization, Kaiser Permanente's multimodal AI scribe, and Yum! Brands' AI-driven restaurant management platform all vividly demonstrate the practical application and value creation of AI in traditional industries such as finance, healthcare, and catering.

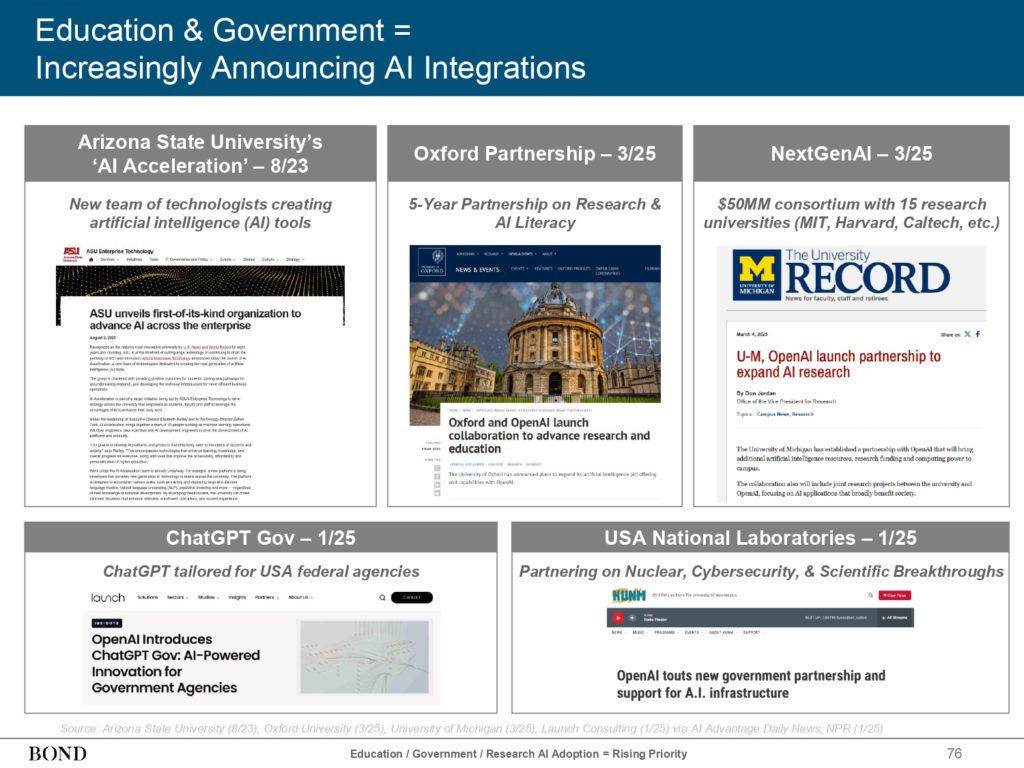

Active integration of AI in education, government, and research:

Cross-domain AI cooperation and application: From Arizona State University's AI acceleration program, to the in-depth cooperation between Oxford University and OpenAI, to the NextGenAI alliance composed of top universities such as MIT and Harvard, and ChatGPT Gov tailored for US federal agencies, all show that AI technology is accelerating its integration into key areas such as education, government, and scientific research.

U.S. national laboratories have also begun using AI to promote breakthroughs in cutting-edge scientific fields such as nuclear energy and cyber security.

The rise of sovereign AI policies: As the strategic significance of AI becomes more prominent, governments around the world are paying closer attention and have begun to formulate and adopt sovereign AI policies so that they can take the initiative in AI development. NVIDIA's Sovereign AI Partner Program has been launched globally.

Accelerated approval and application of AI in the medical field: The number of AI medical devices approved by the U.S. Food and Drug Administration (FDA) is growing rapidly, reflecting the huge potential of AI technology in improving medical diagnosis and treatment. At the same time, the FDA has also announced an ambitious plan to promote the use of AI in all its centers by June 2025.

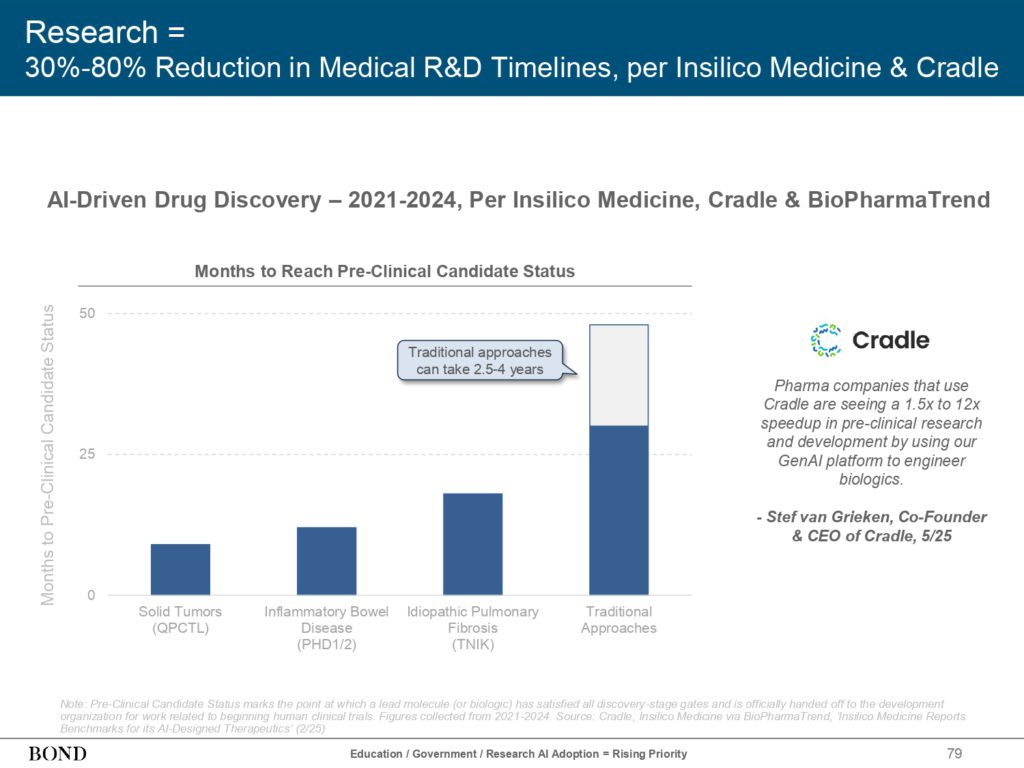

AI accelerates drug development: Companies such as Insilico Medicine and Cradle are leading the application of AI in drug development, shortening the traditional drug development timeline by 30% to 80%. Cradle's GenAI platform has sped up preclinical research by 1.5 to 12 times, greatly accelerating the discovery of new drugs.

Unprecedented growth in AI usage – expansion in depth and breadth:

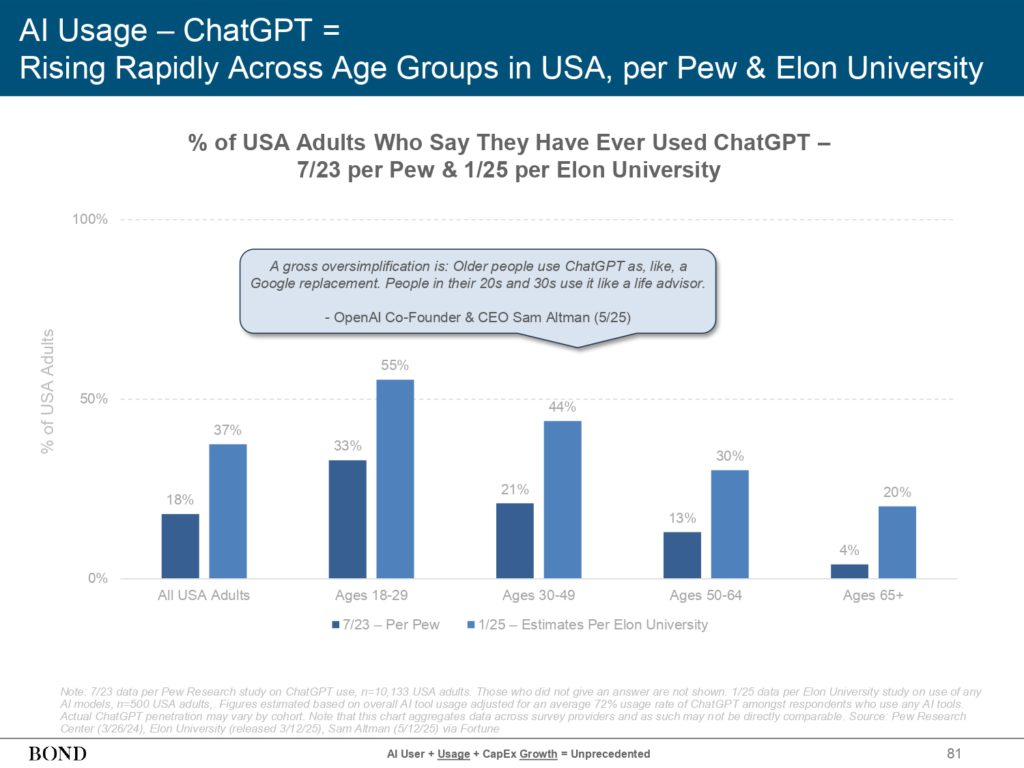

AI adoption across the age divide: In the United States, use of AI tools such as ChatGPT is rising rapidly among adults of all ages. Usage is highest among 18-29-year-olds, and OpenAI CEO Sam Altman has observed that young people treat ChatGPT as a "life advisor", a sign of the growing role AI plays across generations.

A surge in mobile app engagement: The daily time spent by active users in the U.S. on the ChatGPT mobile app grew by a staggering 202% in just 21 months. At the same time, user session duration and the number of daily sessions per user also showed a significant growth trend.

High user retention rate demonstrates AI stickiness: ChatGPT's desktop user retention rate climbed from about 50% to 80% in 27 months, much higher than the retention rate of Google Search in the same period. This fully demonstrates the user stickiness and irreplaceability of AI tools.

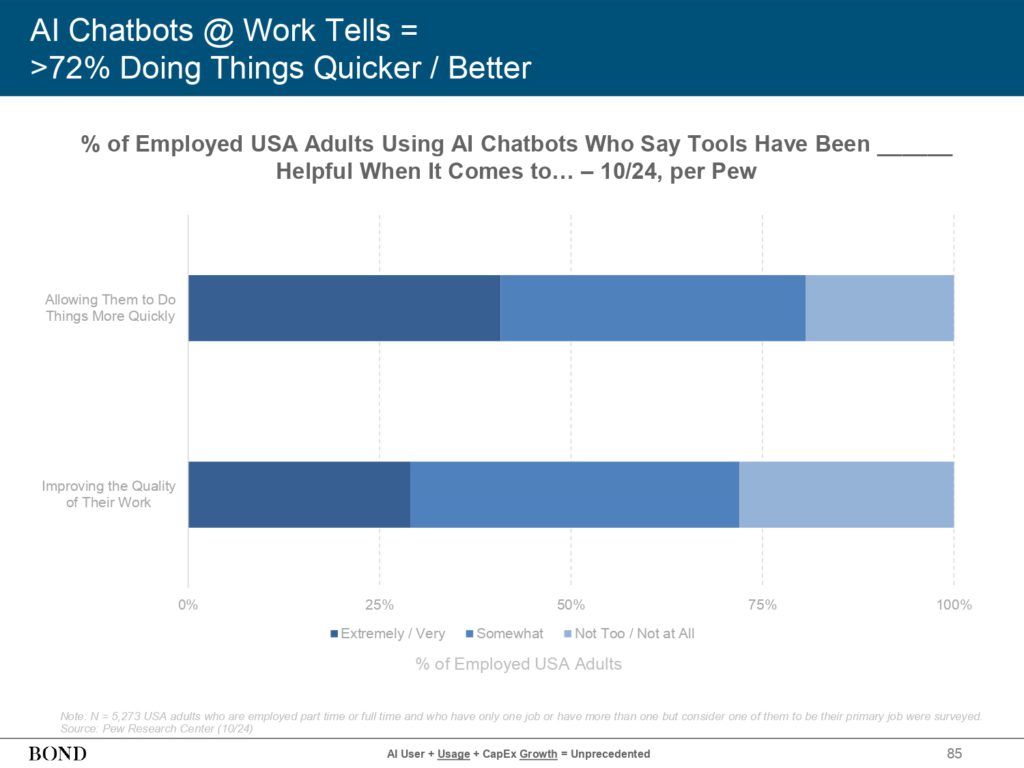

AI helps improve work efficiency: More than 72% of employed adults in the United States who use AI chatbots say these tools significantly help them complete work tasks faster and better.

Widespread application of AI in education: OpenAI's survey of 18-24-year-old students in the United States showed that ChatGPT has become an important auxiliary tool for their learning and research, and is mainly used for writing papers, brainstorming, and conducting academic research.

The emergence of “deep research” capabilities: Leading AI products such as Google's Gemini, OpenAI's ChatGPT and xAI's Grok have launched “deep research” features that aim to automate and enhance knowledge acquisition and analysis in professional fields.

The evolution of AI agents - from response to execution: AI technology is undergoing a transformation from simple chat responses to more complex task execution, gradually evolving into a new type of service provider.

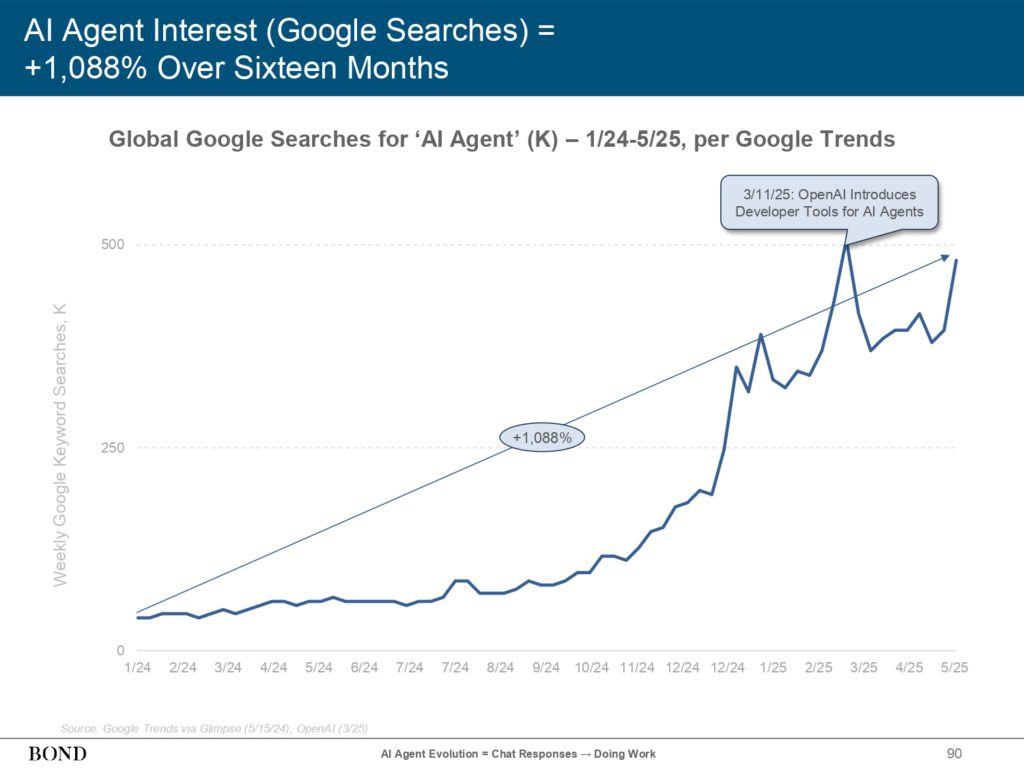

AI agents are able to reason, take actions and complete multi-step complex tasks on behalf of users, such as booking meetings, submitting reports, etc. The market's attention to "AI agents" has soared, with related Google searches increasing by more than 1,000% in 16 months. At the same time, industry leaders such as Salesforce, Anthropic, OpenAI and Amazon are also accelerating the launch of their own AI agent products.

Artificial General Intelligence (AGI) - The Next Frontier of AI: The BOND report further explores the potential of artificial general intelligence (AGI) and points out that experts have moved their expected timelines for achieving AGI significantly earlier. AGI is no longer seen as a distant hypothetical endpoint but as an increasingly clear and achievable technical threshold.

Once achieved, AGI will completely redefine the capabilities of software and hardware.

Unprecedented growth in capital expenditures (CapEx) lays the foundation for the future of AI:

Historical evolution of capital expenditures of technology companies: Over the past two decades, capital expenditures of technology companies have fluctuated and increased with the growth of data storage, distribution and computing needs. Early investments were mainly focused on building Internet infrastructure, while the focus at this stage has shifted to enhancing computing power for data-intensive artificial intelligence workloads, including large-scale investments in specialized chips (such as GPUs, TPUs), liquid cooling technology, and cutting-edge data center designs.

Huge investments by large technology companies: Taking the “Big Six” technology companies in the United States as an example, their capital expenditures have grown at an average annual rate of 21% over the past decade, which is basically consistent with the rapid growth trend of global data generation (an average annual growth of 28%).

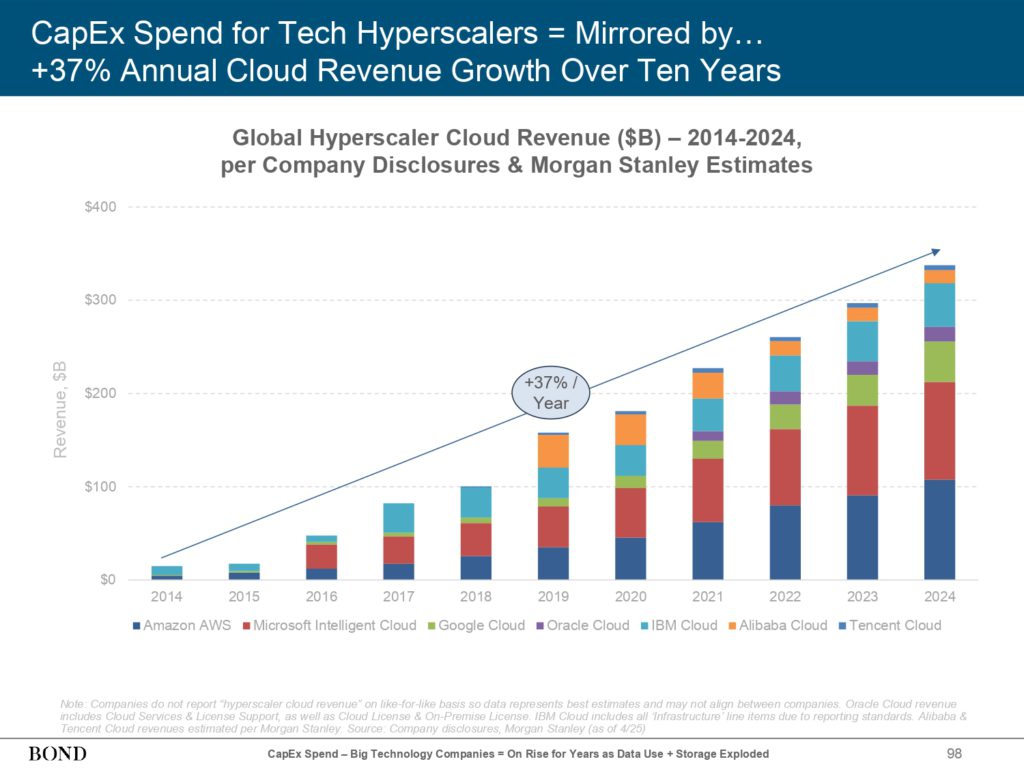

These huge investments are also directly reflected in the strong growth in cloud service revenue. The revenue of global hyperscale cloud service providers has achieved an average annual compound growth rate of up to 37% in the past decade.
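Annual growth rates are easier to compare once they are compounded over the full decade. The sketch below takes the three rates quoted above (21%, 28% and 37%) and assumes each held steady for ten years; the function name and dictionary labels are illustrative, not the report's.

```python
def cumulative_multiple(annual_rate: float, years: int) -> float:
    """Total growth multiple after compounding `annual_rate` for `years` years."""
    return (1.0 + annual_rate) ** years


# Rates quoted in the text; the constant-rate assumption is a simplification.
rates = {
    "Big Six CapEx": 0.21,
    "Global data generation": 0.28,
    "Hyperscale cloud revenue": 0.37,
}

multiples = {name: cumulative_multiple(rate, 10) for name, rate in rates.items()}
for name, multiple in multiples.items():
    print(f"{name}: about {multiple:.1f}x over 10 years")
```

On these assumptions, CapEx grows roughly 6.7-fold, data generation roughly 11.8-fold, and cloud revenue roughly 23.3-fold, so rates that look close on an annual basis diverge considerably over ten years.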

The rise of AI has a profound impact on capital expenditures: The size of AI model training data sets is expanding at an astonishing rate of 250% per year. In order to meet the growing demand for AI computing power, the capital expenditures of the "Big Six" technology companies have increased by 63% year-on-year, and the growth rate is still accelerating.

It is worth noting that their capital expenditure as a percentage of total revenue has risen from 8% a decade ago to 15%. Taking the industry leader Amazon AWS as an example, its capital expenditure reached 49% of revenue in 2024, far higher than the 4% of 2018 and well above the 27% of 2013, which shows how much funding AI/ML infrastructure requires.

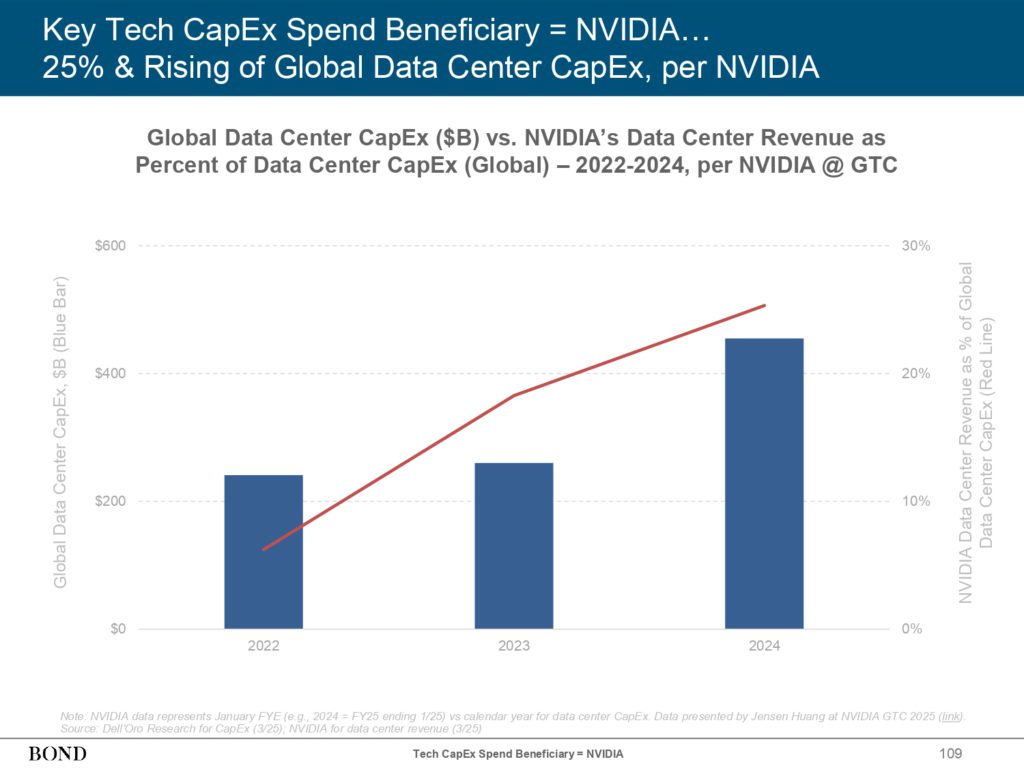

The core driving force of GPU performance improvement: NVIDIA GPU performance has increased 225 times in the past eight years, and its installed GPU computing power has increased more than 100 times in about six years. This makes NVIDIA the main beneficiary of this round of capital expenditure by technology companies: its data center revenue now equals 25% of global data center capital expenditure, and that share continues to rise.

R&D spending is growing in tandem with capital spending. The “Big Six” U.S. technology companies have increased their R&D spending to 13% of revenue, up from 9% a decade ago. Fortunately, these industry giants have ample cash reserves (both their free cash flow and cash on their balance sheets have grown significantly) to support continued investment in AI and related capital spending.

Compute spending becomes the main driver of capital expenditures: The cost of training AI models remains high and is still rising rapidly, even as the cost of inference is falling. This "high-low" cost dynamic puts continued pressure on the budgets of cloud service providers, chip manufacturers, and enterprise IT departments.

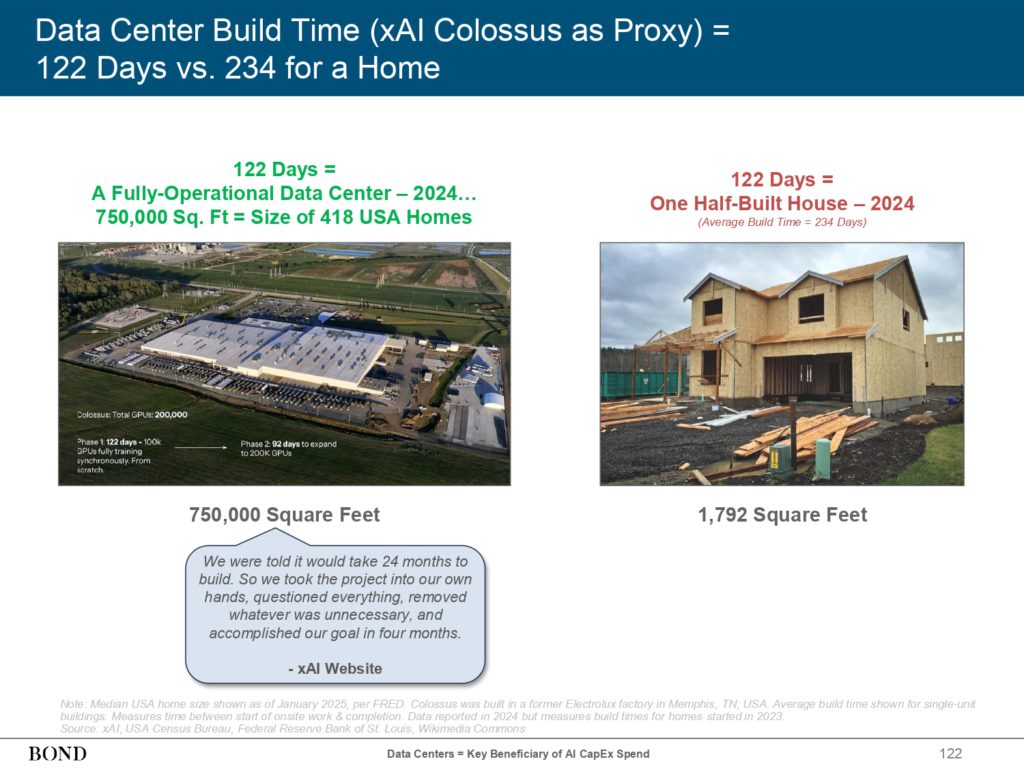

Data Centers - Key Infrastructure and Beneficiaries of the AI Wave: The huge demand driven by AI has pushed data center-related spending to a historical high. The pace of data center construction is accelerating. For example, the annual private construction investment value of data centers in the United States has achieved an average annual growth of 49% in the past two years.

Spending on newly built data centers is far outpacing spending on filling out existing facilities. A notable example is xAI’s Colossus data center, which was built in just 122 days and grew from zero to 200,000 GPUs in seven months.

Huge power consumption of data centers: Data centers are veritable "big power consumers". The report of the International Energy Agency (IEA) clearly points out that "without energy, there is no AI".

Data center electricity consumption has grown at an average annual rate of 12% since 2017, far faster than total global electricity consumption. Globally, it has tripled over the past 19 years, and the United States leads all regions with 45% of the global total.
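The two growth figures for data center electricity can be related with a simple compounding calculation. The sketch below asks how long steady 12% annual growth would take to double or triple consumption. It assumes a constant rate and reads the 19-year increase as a tripling; the function name is made up for this example.

```python
import math


def years_to_multiply(annual_rate: float, multiple: float) -> float:
    """Years of steady compounding at `annual_rate` needed to grow by `multiple`."""
    if annual_rate <= 0 or multiple <= 1:
        raise ValueError("annual_rate must be > 0 and multiple must be > 1")
    return math.log(multiple) / math.log(1.0 + annual_rate)


# 12% per year is the post-2017 rate quoted in the text.
years_to_double = years_to_multiply(0.12, 2)
years_to_triple = years_to_multiply(0.12, 3)
print(f"Doubling time at 12%/year: {years_to_double:.1f} years")
print(f"Tripling time at 12%/year: {years_to_triple:.1f} years")
```

At a steady 12%, consumption would double in about 6 years and triple in under 10. Since the tripling cited in the text took 19 years, growth before 2017 must have been considerably slower than it is now.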

Rising AI model training costs + falling inference costs = performance convergence + rising developer usage

Pages 129-152 of the report delve into a core and intriguing paradox in the economics of AI models: the continued high cost of training coexists with the rapid decline in inference costs.

A delicate balance of cost dynamics:

Training state-of-the-art large language models (LLMs) has become one of the most expensive and capital-intensive endeavors in human history, costing billions of dollars. Ironically, however, the race to build the most powerful general-purpose models may be accelerating the commoditization of the industry and leading to diminishing returns. At the same time, the cost of applying and using these models (i.e., the process of “inference”) is rapidly falling.

High AI model training costs: The estimated training cost of cutting-edge AI models has increased by about 2,400 times in the past eight years. This figure highlights the high barriers to entry in the field of AI model research and development.

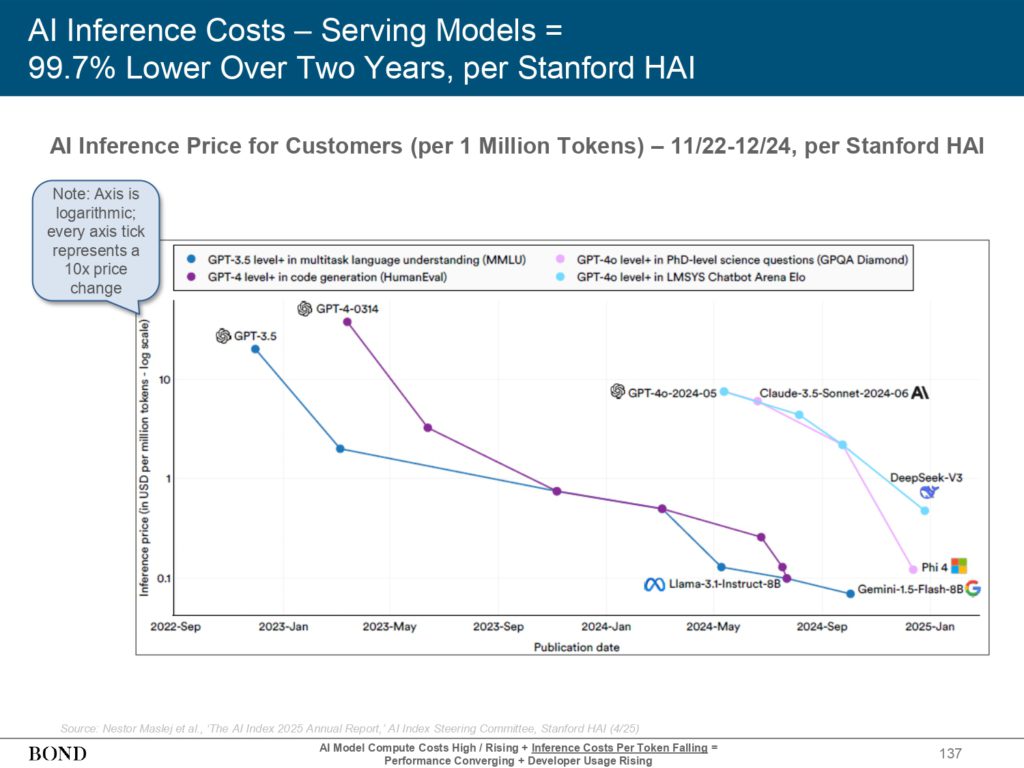

Continuously decreasing inference cost/Token:

Significant improvements in hardware efficiency (for example, NVIDIA’s latest Blackwell GPU consumes an astonishing 105,000 times less energy per token generated than the 2014 Kepler GPU) and breakthroughs in model algorithm efficiency have together led to a sharp drop in inference costs.

A token is the basic unit of text that a model processes during inference, so a lower cost per token directly improves the economics of AI applications.

Taking NVIDIA GPUs as an example, the energy required to generate each LLM token has fallen by a factor of 105,000 over the past decade. The price customers pay for AI model inference (per million tokens) has dropped by as much as 99.7% in just two years.
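The contrast between training and inference costs is easier to see when each multi-year figure is expressed as an average yearly change. The sketch below annualizes three numbers quoted in this section: the 2,400-fold rise in training cost over eight years, the 99.7% drop in inference price over two years, and the 105,000-fold drop in energy per token over ten years. It assumes smooth compounding, and `annualized_factor` is an illustrative helper rather than anything from the report.

```python
def annualized_factor(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to `total_factor` over `years`."""
    if total_factor <= 0 or years <= 0:
        raise ValueError("total_factor and years must be positive")
    return total_factor ** (1.0 / years)


# Training cost: about 2,400x higher after eight years.
training_growth = annualized_factor(2400, 8)

# Inference price: 99.7% lower after two years, so 0.3% of the price remains.
price_decline = 1.0 - annualized_factor(1.0 - 0.997, 2)

# Energy per token: 105,000x lower after ten years.
energy_decline = 1.0 - annualized_factor(1.0 / 105_000, 10)

print(f"Training cost multiplies by about {training_growth:.2f}x per year")
print(f"Inference price falls by about {price_decline:.1%} per year")
print(f"Energy per token falls by about {energy_decline:.1%} per year")
```

Under these assumptions, training cost grows roughly 2.6-fold each year, while inference prices fall by roughly 94.5% a year and energy per token by roughly 68.5% a year.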

Compared to historical key technologies such as electricity and computer memory, AI is improving its cost efficiency at a much faster rate. This trend of “cost reduction + performance improvement → adoption increase” is a perpetual theme in the history of technological development, and is now being repeated at a faster pace in the field of AI.

Convergence of performance: As technology matures and competition intensifies, the performance scores of top AI models on evaluation platforms such as LMSYS Chatbot Arena are converging rapidly. This means that the gap in core capabilities of models from different providers is narrowing.

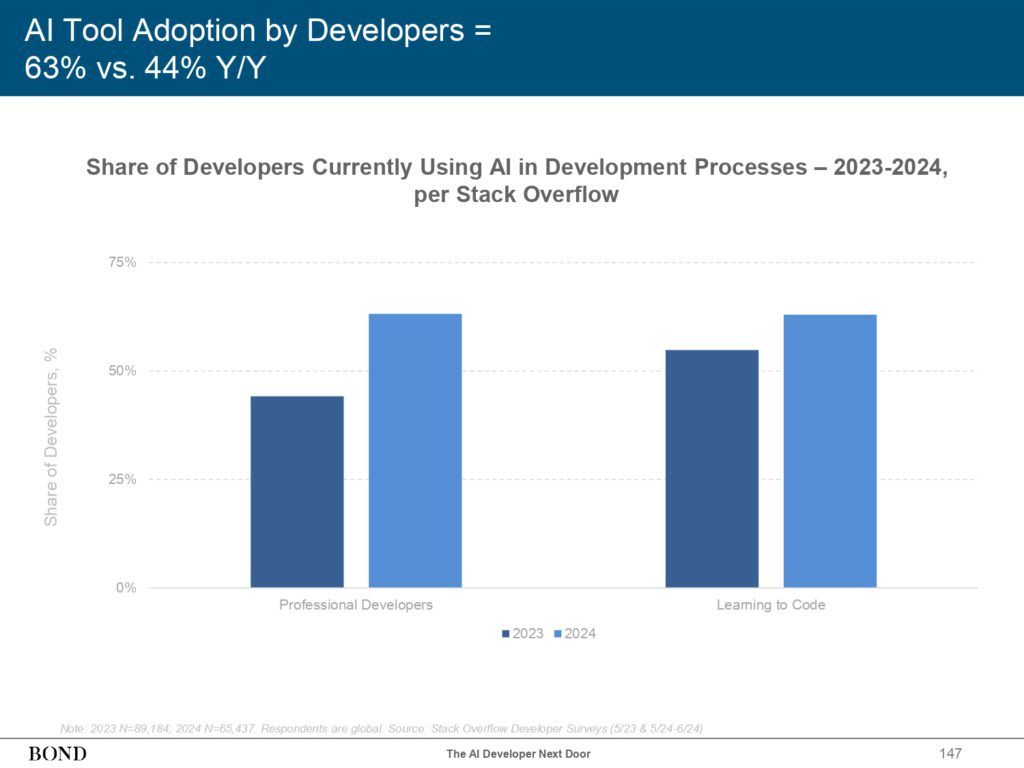

A surge in developer usage:

The significant reduction in inference costs has made AI experiments and productization feasible and economical for almost all developers. At the same time, the convergence of model performance has also changed developers' considerations in model selection. They no longer need to pay high fees for the pursuit of absolute top performance, especially when the model can be effectively fine-tuned for specific application scenarios.

The proliferation of foundation models gives developers unprecedented flexibility and choice, which in turn drives a growth flywheel of developer-led AI infrastructure. The report's phrase for this is "The AI Developer Next Door".

According to Stack Overflow survey data, developer adoption of AI tools rose from 44% in 2023 to 63% in 2024.

On GitHub, the number of AI-related developer repositories has grown by about 175% in just 16 months. The number of tokens Google processes each month has increased 50-fold year-on-year, and the number processed each quarter by Microsoft's Azure AI Foundry platform has increased 5-fold year-on-year.

Developers apply AI across a broad and diverse range of scenarios, covering all aspects of software development, from code generation and automated testing to project management.

The road to profitability is still long: Despite the explosive growth in developer usage, high training costs and continued declines in inference service prices mean that the road to profitability for AI model providers may still be long and challenging.
