At around 1 a.m. on August 8, 2025 (Beijing time), OpenAI officially released its next-generation large language model, GPT-5. The launch instantly drew global attention, became a hot topic in the tech world and across society, and set off a wave of reactions.

OpenAI CEO Sam Altman had been eagerly talking up the upgrade, describing it as a technological leap "from which I can never go back." Amid the AI boom, public expectations for GPT-5 were extremely high; many hoped it would deliver unprecedented surprises and usher in a new era of intelligence.

After the launch, however, the praise was accompanied by a wave of doubts and complaints. Some users reported a poor experience: slow responses, no improvement even with Deep Thinking mode turned off, shorter answers, and even mistakes on basic questions.

In the ChatGPT forum on Reddit, some people complained, "What company would delete the workflows for eight models overnight without notifying paying users?" Some even canceled their subscriptions. Others expressed deep attachment to older models such as GPT-4o, describing it as more than just a tool: during times of anxiety and depression it had offered companionship and warmth, like a real person. GPT-5, by contrast, lacked that familiarity.

Some users also criticized the model itself as not powerful enough, and called OpenAI's habit of ignoring user feedback, and even mocking users, unacceptable. One user wrote:

"I personally think this criticism is valid. I've tried it myself and found its performance disappointing: it's ridiculously slow and its answers are inaccurate. It can't even understand the basic questions I ask, and its answers often make no sense. To make matters worse, they've broken 4o, which is now significantly worse than it used to be and completely unusable.

"The most frustrating thing is that they completely disregard users when making decisions and don't even bother to ask for feedback. Instead, they seem to be mocking everyone. That is simply unbelievable for a company with such a huge user base, but they chose to do it anyway.

"Even more outrageous is their decision to restrict free users to the crappy GPT-5, while paying users still have access to older models. As a paying user, I'm glad I was able to switch back, but this completely undermines their own marketing: who would pay after trying a crappy version? I used to recommend GPT without hesitation because 4o was truly useful and a good reason to start paying. But now, given the poor free experience, I absolutely won't recommend it anymore."

Notably, these complaints come mostly from individual consumers; in the enterprise market, GPT-5 has been better received.

Recent reports indicate that within just seven days of GPT-5's official launch, prominent tech startups such as Cursor, Vercel, and Factory had adopted it as the default model for their key products. These companies consistently report three main advantages over previous models: faster deployment, a stronger ability to handle complex tasks, and a significantly lower overall cost of use.

Some companies have begun in-depth testing of GPT-5 and plan to deploy it in business-critical applications.

Cloud storage giant Box is conducting in-depth testing of GPT-5, focusing on its performance in processing long, logic-intensive documents. In an exclusive interview with CNBC, CEO Aaron Levie stated, "This is a groundbreaking technology that reaches new levels of reasoning that are difficult to achieve with existing systems." Box plans to integrate GPT-5 into its enterprise-grade document processing solutions by the end of the year.

Against this backdrop, an in-depth conversation about GPT-5 and the development of AI is particularly necessary.

In a recent podcast conversation with Nikhil Kamath, Sam Altman discussed GPT-5's technical features, what can be built with it, and how to balance cost and efficiency while improving performance. He also discussed whether we are approaching agentic AI and how to build for a future that is evolving at breakneck speed.

However, the conversation did not touch on outside concerns that GPT-5 is less powerful than expected and was over-hyped.

The following is a translation of the interview, edited by AI Front without changing the original meaning:

How is GPT-5 different?

Nikhil Kamath: I've been trying out your new model and playing with it for a while now. I'm not an expert on it, so I wanted to ask you, Sam, what's the difference?

Sam Altman: We could talk about it in many ways, like it's better on certain metrics or it can do amazing coding demos that GPT-4 can't. But the most obvious thing for me is that going back from GPT-5 to previous-generation models, in both large and small ways, is incredibly painful. They're worse than GPT-5 in every way. I've gotten used to the fluidity and adaptive intelligence that GPT-5 brings, which none of the previous models had.

It's an integrated model, so you don't have to pick from our model switcher or agonize over whether to use GPT-4o, o3, o4-mini, or any of the other complicated options. It just works. It's like having PhD-level experts in every field at your disposal 24/7. You can ask it anything and have it do all kinds of things for you: if you need a piece of software built from scratch, it can do it right away; if you need a research report on a complex topic, it can write one; if you need help planning an event, it can do that too.

Nikhil Kamath: Is it inherently more autonomous in terms of being able to perform continuous tasks?

Sam Altman: Yes, GPT-5 has shown significant improvements in robustness and reliability, which is very helpful for autonomous workflows. I've been very impressed by the length and complexity of the tasks it can perform.

Nikhil Kamath: What other features of GPT-5 would you like to talk about?

Sam Altman: GPT-5 will be another major leap forward for us in how people use these systems. It's going to bring significant improvements in power, robustness, and reliability, and it will be useful for all sorts of tasks in life: writing software, answering questions, learning, getting more productive. Every time we make a leap like this, we're amazed by the human potential it unlocks.

India, in particular, is now our second-largest market globally and is likely to become our number one. We've incorporated a lot of feedback from Indian users, such as better language support and more affordable pricing, into the model and ChatGPT upgrades, and we'll continue to work on this. With each leap in capability, we see 25-year-olds using it to create companies, learn, get better medical advice, and more.

Make something people want

Nikhil Kamath: When we spoke a few weeks ago, I asked you which industries and themes I should invest in over the next decade. Today, I'm not going to discuss that. I want to talk about "first principles" and your emphasis on how technology is changing the world. Imagine I'm a 25-year-old living in Mumbai or Bangalore, India—you've said many times that university isn't as relevant as it was when you were younger. So what should I do now? What should I study? If I want to start a business, what kind of company should I start? Or even if I'm looking for a job, which industries do you see as having growth potential? I'm not talking about ten years from now, but even within the next three to five years.

Sam Altman: First of all, I think this is probably the most exciting time to start a career. A 25-year-old in India can do more today than any other person of that age in history.

Today, the power of these tools is truly amazing. I felt the same way when I was 25, but the tools back then were far less advanced than they are today: we had the computer revolution, which let us do things we couldn't do before. Now that power has been amplified.

Whether you want to start a business, become a programmer, work in another industry, or create new media, these tools will greatly reduce the time, people, and experience needed to turn a good idea into reality.

In terms of specific industries, I'm particularly excited about what AI can do in science—the speed and scale of scientific discoveries that can be made by one person will be unprecedented. It will also revolutionize programming, allowing people to create entirely new types of software. For startups, this is great because if you have a new business idea, a very small team can accomplish a lot. Now, with a completely open canvas, your only limitations are the quality and creativity of your ideas, and these tools will help you achieve that.

Nikhil Kamath: If I were a 25-year-old Indian, what could I build based on GPT-5? What areas do you think are low-hanging fruit that I should definitely focus on?

Sam Altman: I think you can launch a startup more efficiently than ever before. Of course, I'm probably biased because this is an area I care deeply about. But the fact is, as a 25-year-old in India or anywhere else, with a few friends or on your own, you can use GPT-5 to write product software, handle customer support, develop marketing and communications plans, and review legal documents far more efficiently, all things that used to require many people and a lot of expertise. It's incredible.

Nikhil Kamath: I'd like you to be more specific. I understand the science field, but what should I study? Let's say I've been studying engineering, business, liberal arts, etc. In order to make progress in the science field using artificial intelligence, is there anything specific that I should learn?

Sam Altman: I think the most specific thing to learn is mastering new AI tools. Learning itself is valuable, but mastering this meta-skill of learning will benefit you throughout your life. Whether you're studying engineering (like computer engineering), biology, or any other field, if you're good at learning, you can quickly master new things. But mastering these tools is really important. When I was in college or high school, learning programming seemed obvious. I didn't know what I was going to do with it, but it was a new frontier with a high payoff that seemed worth mastering. Now, learning how to use AI tools is probably the most important and concrete hard skill. The gap between those who are truly adept at using these tools and who truly have an AI mindset (thinking through the lens of these tools) and those who don't is huge.

There are also some universal skills that are important. For example, learning to adapt and be resilient is something I believe can be learned, and in a world that's changing so rapidly, it's incredibly valuable. Learning to figure out what people want is also incredibly important. Before this, I was a startup investor, and people would ask me what the most important thing for startup founders to figure out was. Paul Graham had an answer that I've always kept in mind and often share with founders, and it later became Y Combinator's motto: "Make something people want." It sounds like a simple directive, but I've seen many people struggle to figure this out and fail, and I've also seen many people work hard over their careers to learn how to do it and ultimately excel. So that's something to focus on, too. As for whether you should take biology or physics, I don't think it matters much right now.

Nikhil Kamath: If you're saying, based on this, that you need to learn to adapt and change and learn AI tools faster, is there a path forward for that? I'm just trying to find a direction that people can start working towards. How can one better use existing AI tools?

Sam Altman: There's one really cool thing you can do. GPT-5 is incredibly good at creating small pieces of software quickly, better than any model I've ever used. Over the past few weeks, I've been amazed at how much I've found myself using it to create software that solves small problems in my life.

It's an interesting creative process: I make a first version, start using it, and then think, "It would be nice to have this feature or that feature," or "I could do this differently," or "Now that I'm using it, I realize I really need this for my workflow." Folding more and more of the things I have to do into this workflow has been a really interesting way to learn how to use it.

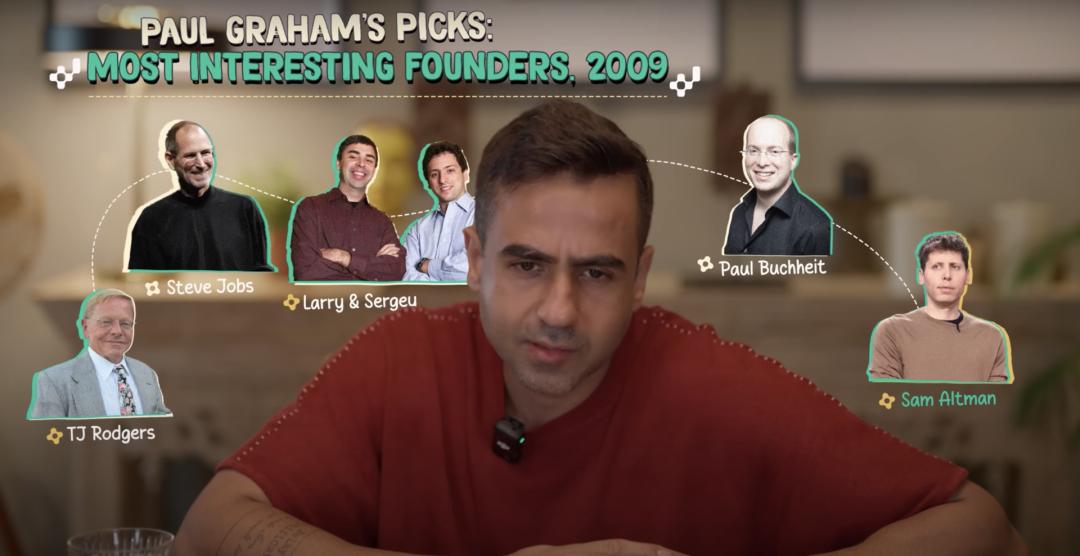

Nikhil Kamath: You mentioned Paul Graham. I read a letter or report he wrote in 2009 (I can't quite remember which), but I remember him mentioning five founders worth noting. I remember you were 19 at the time, and he compared you to people like Steve Jobs, Larry, and Sergey. Why was that? At that time, you hadn't yet achieved the same level of success as them. What do you think Paul saw in you? What innate skills do you feel set you apart?

Sam Altman: That was a really nice thing for him to say. I still remember that. Of course, I felt completely unworthy at the time, but I was grateful for his words. There are actually a lot of people building great companies. We've been lucky in a lot of ways, and we've certainly worked incredibly hard. Perhaps one thing I think we do well here is that we have a long-term perspective and think independently.

When we started, it was four and a half years before we had our first product, and things were very uncertain. We were just doing research, trying to figure out how to make AI work, and our ideas were very different from what everyone else in the world was thinking. But I think the ability to stick with your own convictions for a long time, without much external feedback, was very helpful.

Nikhil Kamath: Did you say “we” or “I”? Because I’m talking about you when you were 19.

Sam Altman: Oh, sorry, I thought you were asking about OpenAI… I don't remember a lot of things from when I was 19. I was just a naive 19-year-old who really had no idea what I was doing. And this isn't false modesty. I do think I've done some impressive things now, but when I was 19, I was very insecure and didn't have any special qualities.

Nikhil Kamath: If the future world is, in some ways, an AI kingdom, you're undoubtedly a "prince" of sorts. I don't know if you're familiar with Machiavelli, but he said something very interesting: a prince need not actually possess piety, benevolence, trustworthiness, humanity, and honesty, but he should always appear to possess them. I've been watching a lot of your interviews lately, and I've repeatedly heard you downplay your own power in words like these. Does this humble image fit the world we live in now, or the one we're about to enter?

Sam Altman: I'm not sure it fits either of them, but... going back to your point about me at 19: back then I thought these big tech companies were run by people who had everything planned out. I assumed there were always grown-ups in charge, people who had a plan and knew how to do things, who were very different from me, and who could run a company really well, without any turmoil.

Now it's my turn to be the "grown-up in charge," and I can tell you, I don't think anyone has a plan; no one really has it all figured out. Everyone, me included, is learning as they go. You have something you want to build, and things go wrong. You might hire the wrong person, or the right one. You might have a technological breakthrough here but not there. You take it one step at a time, keep your head down, and some strategies ultimately succeed while others don't. You try things, the market reacts, your competitors react, and then you adjust again. My view now is that everyone is figuring it out as they go and learning on the job. I don't think this is false humility; I think it's just the way the world works. It's a little strange to see it from this side, but that's the way it is.

What does the future hold for marriage and children in the AGI era?

Nikhil Kamath: I'm not so concerned about whether this humility is genuine, but more about looking at it from the perspective of someone who's just starting to build their future – is this an image worth projecting? Is humility really relevant today?

Sam Altman: I have a very negative view of people who act very certain and confident when they don't know what's going to happen. Not only is it annoying, but I think that mindset makes it very hard to foster a culture of open inquiry, to listen to different perspectives, and to make good decisions. I always tell people, "No one knows what's going to happen next." The more you forget that, the more you think, "I'm smarter than everyone else, I have a grand plan, and I don't care what users say, where technology goes, or how the world reacts. I know better, and the world doesn't know any better than I do," the worse your decisions will be.

So, I think it's really important to stay open-minded, curious, and willing to adjust your thinking based on new information. There have been many times when we thought we knew the answer, only to have reality slap us in the face. One of our strengths as a company is that when that happens, we change our approach. I think that's been a huge part of our success. There may be other ways to succeed, and perhaps projecting a lot of boldness to the world can work too. But throughout my career, the best founders I've observed closely have tended to be the ones who learn and adapt quickly.

Nikhil Kamath: You probably understand this better than most people because of your time at Y Combinator.

Sam Altman: At least I have a lot of relevant data to support it.

Nikhil Kamath: Just to digress, Sam, I've often thought about why people have children. And also questions like what will marriage look like in the future? Can I ask you why you have children?

Sam Altman: Family has always been incredibly important to me. It feels like... I don't even know how much I've underestimated how important it is. But it feels like the most important, meaningful, and fulfilling thing I can think of. So far, even though we're just getting started, it's already exceeded all my expectations.

Nikhil Kamath: Do you have any insights on the future of marriage and children?

Sam Altman: I hope that in a post-AGI era, building families and creating communities, or whatever you want to call them, will become even more important. I think those things are in decline, which is a real problem for society and definitely a bad thing. I'm not sure why it's happening, but I hope we can reverse it. In a world where people have more abundance, time, resources, potential, and capability, I think it's clear that family and community are the two things that make us happiest. I hope we can get back to that.

Nikhil Kamath: As society becomes more affluent, if we are driven by the desire to imitate others, we all tend to want what others want, not necessarily what they already have. If we all have more, do you think we'll just want more?

Sam Altman: I do think human needs, desires, and capacities seem to be limitless. I don't think that's necessarily a bad thing, or at least not entirely. But I do think we'll find new things to want and new arenas of competition.

Nikhil Kamath: Do you think the world will largely remain as it is with its current capitalist and democratic models? Let me give you a scenario. What would happen if some company, say OpenAI, grew to own 50% of the world's GDP? Would society allow that to happen?

Sam Altman: I'd wager that society won't allow that to happen, and I don't think it will. I think it'll be a much more decentralized landscape. But if for some reason it does, I think society will say, "We don't approve of this, and we need to figure it out." My favorite analogy for AI is the transistor. It was a really important scientific discovery that, for a while, looked like it was going to capture a ton of value, but then it turned out to be used in countless products and services. You didn't think about the transistor all the time; it was in everything, and all these companies were making incredible products and profits with it in this very decentralized way. So, I suspect the same thing will happen with AI, where no single company will ever capture half of global GDP. I used to worry about that happening, but now I think that was naive.

Sam: Model companies will not steal customers' business

Nikhil Kamath: Regarding universal basic income, I think Worldcoin is a very interesting experiment. Can you tell us a little bit about what's going on there?

Sam Altman: We really want to treat humans as special beings. Can we find a privacy-preserving way to identify unique humans and then build a new network and a new currency around that? So, this is a very interesting experiment that's still in its early stages, but it's moving pretty quickly.

Nikhil Kamath: If general artificial intelligence eliminates scarcity, or eliminates scarcity to some extent, by increasing productivity, can we assume that it will be deflationary in nature, that capital or currency loses its rate of return, and that capital is no longer a moat in the future world?

Sam Altman: I'm confused by this. I think, based on basic economic principles, it should be hugely deflationary. However, if the world decides that building AI computing power today is crucial for future things, then the economy might do some very strange things, and perhaps capital will become very important because every unit of computing is extremely valuable. I asked someone at dinner the other night if he thought interest rates should be -2% or 25%, and he laughed and said, "That's a ridiculous question. It has to be..." Then he paused and said, "Actually, I'm not sure." So, I think, yes, there should be deflation eventually, but it might be very strange in the short term.

Nikhil Kamath: That's an interesting point indeed. Do you think the interest rate will end up being -2%?

Sam Altman: Eventually, it could be. But I'm not sure. It might be like we're in an era of massive expansion, where you want to build Dyson spheres in the solar system and borrow money at exorbitant rates to do it. And then there might be more and more expansion, and I don't know... At this point, it's hard for me to foresee what the next few years will look like.

Nikhil Kamath: After we spoke a few weeks ago, I did some more research on the sectors you mentioned. I think we both agree that the world's population is aging and facing more and more health issues. You also suggested that entry-level luxury brands might do well as discretionary spending rises. So how would these brands fare in a deflationary world? Wouldn't these purchases lose value?

Sam Altman: Probably not. I mean, in a deflationary world, some things will face huge deflationary pressure, and other things will be where all the extra capital goes. So I'm actually not sure they'll lose value in a deflationary world. In fact, I'd bet they'll go up in value.

Nikhil Kamath: If I were to build a business on top of your model, let's use the example of Amazon. If I sell a certain type of T-shirt, and it sells really well, and Amazon has all that data, they could eventually launch a private label that's very similar and essentially cannibalize my business. Should people be concerned that this could happen with OpenAI? Because OpenAI is no longer just one model; it's now involved in multiple different business areas.

Sam Altman: I want to go back to the transistor example. We're building a general-purpose technology that you can integrate into other things in a variety of ways. But we're on a Moore's Law-like trajectory, where the general capabilities of the model keep improving. If you build a business that gets better as the model improves, then as long as we keep making progress, your business will keep improving. But if you build a business that gets worse as the model improves, because your wrapper layer is too thin or for some other reason, that's probably a bad position to be in, just like the problems that arose in other technological revolutions.

Clearly, there are companies that are building on AI models and creating tremendous value and deep relationships with their customers. Cursor is a recent example; its popularity is exploding and it has built very strong relationships with its customers. Of course, there are also many companies that fall short, and this has always been the norm.

However, I think that compared to previous technological revolutions, more companies are being created today that seem likely to have real staying power. An analogy is the early days of the iPhone and the App Store: the earliest apps were lightweight, and many of their features were later built directly into iOS. For example, you could sell a $1 flashlight app that simply turned on the phone's flashlight, but it wouldn't last, because Apple later added that feature to the operating system.

But if you start with a complex business and the iPhone is just an enabler, as with Uber, that's a very valuable long-term proposition. In the early days of GPT-3, we saw a lot of these "toy apps," which is fine, but many of them didn't need to be standalone companies or products. Now that the market has matured, you're seeing more businesses with long-term viability emerge.

Nikhil Kamath: So, if you emphasize "owning the customer," meaning controlling the interface with the customer, do you think selling services on top of your model can deepen that relationship more than selling products? Because product companies are a one-time exchange, while service companies are recurring, and that recurring process gives me the opportunity to add my own personal touch to the transaction. Is that the point?

Sam Altman: Overall, I agree with that view.

Nikhil Kamath: Part of my job involves content creation, perhaps once a month. If a model can largely factor in my qualifications, experience, and growth trajectory, and can predict output fairly effectively, then if I continue with the same predictable behavior tomorrow, it might be less valuable than if I were to adopt a contrarian approach—not against the world, but against my own past behavior. Do you think the future world will favor this kind of contrarian behavior?

Sam Altman: That's a good question. I think so. The key is how well models will be able to learn this ability in the future. You want to be "contrarian and right." Most of the time, people are "contrarian and wrong," which isn't very helpful.

But the ability to come up with "contrarian and right" ideas, something today's models are almost completely incapable of (though they may get better in the future), will increase in value over time. Being good at things models can't do will obviously become more and more valuable.

Nikhil Kamath: Besides being contrarian and right, what else will take models longer to learn?

Sam Altman: Models will be much smarter than we are, but many of the things humans care about have nothing to do with intelligence. Maybe an AI podcast host will emerge that asks more interesting questions and interacts with people better than you do, but I personally don't think it will be more popular. People care deeply about other people, and that need runs deep.

People want to know your story and how you got to where you are today, and they want to talk with others who share a common understanding of you as a person. That cultural and social value is real. We are curious about each other.

Nikhil Kamath: Why is that, Sam? What do you think is the reason?

Sam Altman: I think it's also deeply rooted in our biology. And as I said before, you don't fight something that's deeply rooted in your biology. From an evolutionary perspective, it makes perfect sense; for whatever reason, that's just how we are. So we're going to continue to care about real people. Even if an AI podcast host is much smarter than you, it's unlikely to be more popular than you.

Nikhil Kamath: So in a sense, being stupid is more refreshing than being smart?

Sam Altman: I'm not sure whether the freshness comes from "stupidity" or "smartness," but I think in a world filled with unlimited AI content, the value of "real people" will increase.

Nikhil Kamath: By “real people” do you mean people who make mistakes, rather than models?

Sam Altman: Of course, real people make mistakes. That's probably part of why we associate it with "real," but I think just knowing whether or not it's a real person is something we care deeply about.

The difference between AGI and human intelligence

Nikhil Kamath: With GPT-5, you have a system that's incredibly smart in many areas and can complete some tasks in seconds to minutes; it's already superhuman in knowledge, pattern recognition, and short-term memory. But it's still far from human level at deciding which questions to ask, or at persisting on a single problem for a long time, correct?

Sam Altman: Yes. One interesting example is our recent progress in mathematics. A few years ago, we could only solve math problems that would take an expert several minutes to solve.

We recently reached gold-medal level at the International Mathematical Olympiad, where each problem takes about an hour and a half to solve.

As a result, our "thinking span" has increased from a few minutes to an hour and a half. However, proving a new, important mathematical theorem might take a thousand hours. We can predict when we'll reach that point, but it's certainly not happening yet. This is another dimension that AI still lacks.

Nikhil Kamath: Over the past few months, I've been traveling between San Francisco and New York, meeting with many AI entrepreneurs. The general consensus is that the US is several years ahead of most countries in AI, but in robotics, China seems to be ahead. What are your thoughts on robots, especially humanoids and other forms of robots?

Sam Altman: I think robots are going to be incredibly important in the next few years. One of the most "AGI-like" moments will probably be when you see a robot walking down the street, doing everyday tasks, just like a human.

Nikhil Kamath: Do they have to be humanoid?

Sam Altman: Not necessarily, but the world is built for humans—door handles, steering wheels, factory equipment, and so on are all designed based on the human body. Of course, there will be robots with other specialized forms, but generally speaking, robots that match the human form are a good idea.

Nikhil Kamath: If I'm a young entrepreneur looking to start a robotics company, but others already have manufacturing scale, how can I bridge the gap?

Sam Altman: In the short term, you need to find good partners who understand manufacturing very well. In the long term, when you build enough robots, they can even build more copies of themselves. We are also looking at robots ourselves; this is a new skill we need to learn.

Nikhil Kamath: I've been using mobile phones for a long time. I know you may not want to talk about your collaboration with Jony Ive, but what are your thoughts on how hardware form factors will evolve?

Sam Altman: AI is very different from how computers were used before: you want AI to be as context-aware and proactive as possible in helping you get things done.

A computer or phone is either on or off, in your pocket or in your hand. But you might want AI to be with you 24/7, providing timely reminders, assistance, and even prompting you when you forget something. Current computer form factors don't lend themselves to this "AI companion" vision. Future forms may include glasses, wearables, or small devices that sit on your desk. The world will continue to experiment, but "context-aware hardware" is likely to be crucial.

We will try a variety of products to create hardware forms that AI can live in, which will be one of the important directions.

Reference link: https://www.youtube.com/watch?v=SfOaZIGJ_gs

This article comes from the WeChat public account "AI Front" (ID: ai-front), author: Dongmei, and is republished by 36Kr with authorization.