In his latest interview, OpenAI President Greg Brockman laid out the company's path to AGI:

On the technical level, shifting from pure text generation to a reinforcement-learning reasoning paradigm, in which the model tries things out in the real world and learns from the feedback;

On resource strategy, continuing to invest heavily in large-scale compute;

On deployment, wrapping the model as an Agent that packages its capabilities into an auditable service workflow.

The interview was conducted by the AI podcast Latent Space and covered OpenAI's overall technical route and resource strategy for reaching AGI.

Along the way, Brockman also described how OpenAI is deploying its models and shared his thoughts on the future.

In summary, Brockman made the following core points:

Models are steadily improving their ability to interact with the real world, which is a key component of next-generation AGI;

The main bottleneck for AGI is compute: the amount of computation available directly determines the speed and depth of AI research and development;

The true goal of AGI is to have large models reside permanently in enterprise and personal workflows, and Agents are the means of getting there;

Integrating models into real-world application domains is extremely valuable, with many untapped opportunities in various fields.

A Shift in the Model Reasoning Paradigm

Brockman sees the recently released GPT-5 as a major paradigm shift for the AI field. As OpenAI's first hybrid model, it is meant to bridge the gap between the GPT series and AGI.

After training GPT-4, OpenAI asked itself:

Why is it not AGI?

Although GPT-4 could hold coherent, context-aware conversations, it was not reliable enough: it made mistakes and easily went off track.

The team therefore concluded that the model needed to test ideas in the real world and receive feedback through reinforcement learning in order to become more reliable.

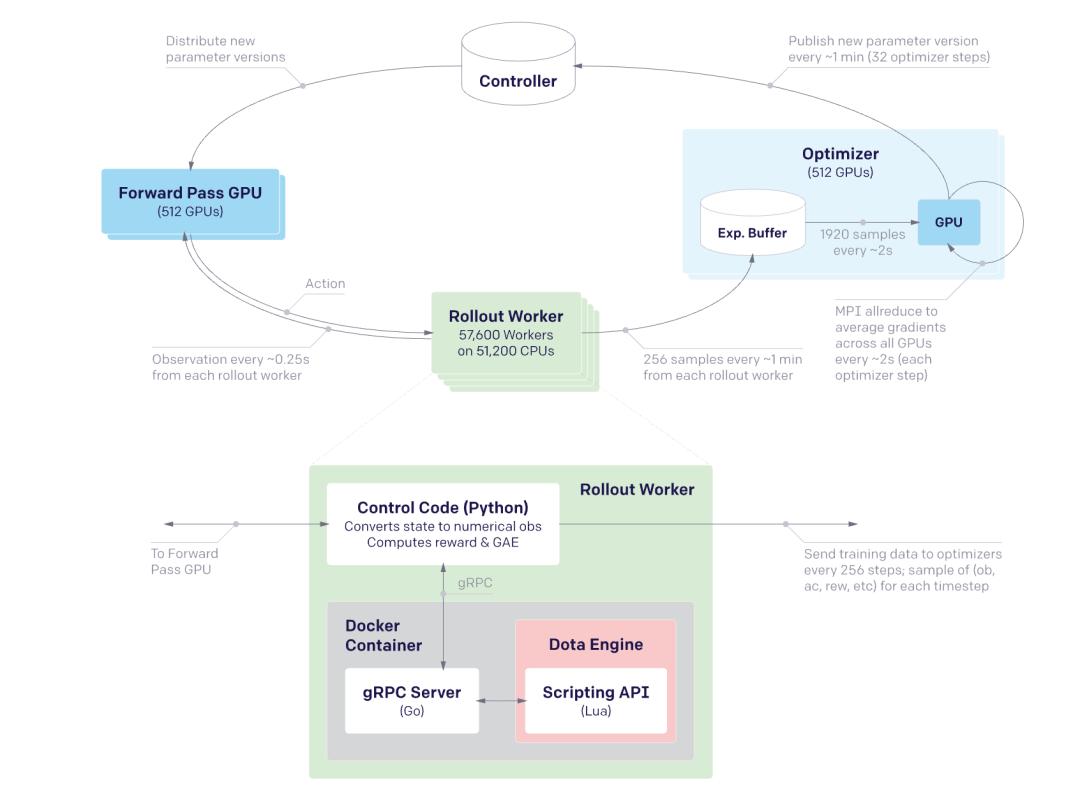

OpenAI had already partly put this idea into practice in its earlier Dota project, which used pure reinforcement learning to learn complex behaviors starting from random initialization.


So as soon as GPT-4 was finished, OpenAI began trying to move to a new reasoning paradigm: first the model learns dialogue from supervised data, then reinforcement learning lets it repeatedly try, fail, and adjust within an environment.

In the traditional approach, a model is trained once and then used for large amounts of inference. With GPT-5, reinforcement learning continuously generates data during inference, the model is then retrained on that data, and what the model observes in the real world is fed back into it.

The new paradigm also changes how much data is needed: where pre-training might require hundreds of thousands of examples, reinforcement learning can learn complex behaviors from just 10 to 100 tasks.

This also shows that models are steadily improving their ability to interact with the real world, a key component of next-generation AGI.

In addition, to make its ecosystem stickier, OpenAI has set lightweight open source as its second driving force.

Brockman's reasoning is that when developers build up toolchains on top of these models, they are in effect adopting OpenAI's technology stack by default.

"There is still a lot of unpicked fruit in every field"

Looking ahead, Brockman believes the opportunities truly worth pursuing lie not in building yet another flashier "model wrapper", but in embedding existing intelligence deeply into the actual processes of specific industries.

To many people it seems that all the good ideas have already been taken, but he points out that every industry's value chain is enormous.

Integrating models into real-world application domains is extremely valuable, and there is still a lot of unpicked fruit in every field.

He therefore advises developers and entrepreneurs who feel they are "starting too late" to first immerse themselves on the front lines of an industry, learn the details of its stakeholders, regulations, and existing systems, and only then use AI to fill the real gaps, rather than simply putting a one-off wrapper around an interface.

Asked what he would write in a note to himself for 2045, he described a vision of "multi-planetary living" and a "truly abundant society".

In his view, at the current pace of technological acceleration, it will be hard to rule out the feasibility of almost any science-fiction scenario within twenty years; the only hard constraint is the physical limit on transporting matter itself.

At the same time, he cautions that compute will become a scarce asset: even if automation meets people's material needs, they will still want more computing power for higher resolution, longer thinking time, or richer personalized experiences.

If he could go back to age 18, he would tell his younger self that the number of problems worth solving will only grow, not shrink.

I once thought I had missed Silicon Valley's golden age, but the opposite is true: now is the best time for technological development.

As AI permeates every industry, opportunities are not running out; they are multiplying as the technology curve rises steeply.

The real challenge is to stay curious and dare to venture into new fields.

Reference link: https://www.youtube.com/watch?v=35ZWesLrv5A

This article originally appeared on the WeChat official account "Quantum Bit" (author: Focus on Frontier Technology) and is published by 36kr with authorization.