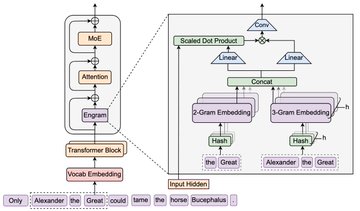

DeepSeek is back! "Conditional Memory via Scalable Lookup: A New Axis of Sparsity for Large Language Models" They introduce Engram, a module that adds an O(1) lookup-style memory based on modernized hashed N-gram embeddings Mechanistic analysis suggests Engram reduces the need for early-layer reconstruction of static patterns, making the model effectively "deeper" for the parts that matter (reasoning) Paper: github.com/deepseek-ai/Engram/...…

From Twitter

Disclaimer: The content above is only the author's opinion which does not represent any position of Followin, and is not intended as, and shall not be understood or construed as, investment advice from Followin.

Like

Add to Favorites

Comments

Share

Relevant content