Storage demand is likely to surge with the arrival of Seedance 2.0. GPT-3.5 ushered in the text era; Seedance 2.0 marks the start of the true video era. For a prompt of the same few words, a video model's output can consume hundreds of megabytes of storage, and that figure grows with the length of the generated video. The resulting storage demand will be many times greater than for text. The audience for video is also vast: globally, from infants to the elderly, almost no one is immune. People may not like reading books or following the news, but they love watching short videos.
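As a rough sanity check on the "hundreds of megabytes" figure, file size can be estimated as bitrate multiplied by duration. The sketch below is only illustrative: the 40 Mbit/s bitrate and the clip lengths are assumptions, not published figures for any particular model.

```python
def video_size_mb(bitrate_mbps: float, duration_s: float) -> float:
    """Approximate file size in megabytes: bitrate (Mbit/s) x duration (s) / 8 bits per byte."""
    return bitrate_mbps * duration_s / 8


# Hypothetical example: a 60-second clip encoded at 40 Mbit/s.
print(video_size_mb(40, 60))   # 300.0

# Size scales linearly with duration: doubling the length doubles the storage.
print(video_size_mb(40, 120))  # 600.0
```

Under these assumed numbers, a single one-minute clip already lands in the hundreds of megabytes, and longer generations grow in direct proportion.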

This will give rise to new investment demand. The storage that video AI requires will differ from what text AI requires. According to a summary provided by Gemini of the storage architecture currently used by TikTok and YouTube, video storage today is not a single medium but a multi-level tiered architecture.

A. Architecture Components

1. Ultra-Hot Tier: Used to handle sudden bursts of traffic (such as videos just released by top influencers).

• Type: NVMe SSD cluster + memory-level cache (Redis/Memcached).

• Key metrics: **IOPS (Input/Output Operations Per Second)** and extremely low latency.

2. Warm Tier: Used to store videos that are frequently viewed daily.

• Type: High-performance enterprise-grade hard disk drives (HDDs) or large-capacity QLC SSDs.

• Key metrics: Balance between throughput and cost.

3. Cold/Archive Tier: Used to store long-tail videos from several years ago that are almost forgotten.

• Type: High-density helium-filled hard disk drives (HDDs), or even physically isolated (offline) tape storage.

• Key metrics: Total cost of ownership (TCO) per TB.
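The three tiers above amount to a placement policy driven by how often a video is accessed. A minimal sketch of such a policy follows; the thresholds, the `VideoStats` fields, and the `choose_tier` function are all hypothetical and chosen only for illustration, not taken from any platform's actual system.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
HOT_VIEWS_PER_HOUR = 10_000
COLD_AFTER_DAYS_IDLE = 365


@dataclass
class VideoStats:
    views_last_hour: int
    days_since_last_view: int


def choose_tier(stats: VideoStats) -> str:
    """Map a video's access pattern to one of the three tiers described above."""
    if stats.views_last_hour >= HOT_VIEWS_PER_HOUR:
        return "ultra-hot"  # NVMe SSD cluster plus in-memory cache
    if stats.days_since_last_view >= COLD_AFTER_DAYS_IDLE:
        return "cold"       # high-density HDD or tape
    return "warm"           # enterprise HDD or QLC SSD


print(choose_tier(VideoStats(views_last_hour=50_000, days_since_last_view=0)))  # ultra-hot
print(choose_tier(VideoStats(views_last_hour=3, days_since_last_view=2)))       # warm
print(choose_tier(VideoStats(views_last_hour=0, days_since_last_view=900)))     # cold
```

A real system would likely use richer signals (predicted popularity, region, file size), but the basic idea is the same: the hotter the content, the faster and more expensive the medium.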

B. Pain Points: I/O Walls and Storage Silos

In traditional architectures, storage is "static". The AI video era (Seedance 2.0), however, demands that storage change from a "warehouse" into a "pipeline," which breaks the traditional storage logic.

Based on the current state and challenges of video company storage, three future development directions can be derived:

Three Future Development Directions for Video AI Storage

1. Direction One: From HDD to All-Flash Data Center

AI video training requires parallel reads of massive amounts of high-definition footage. HDD seek times are too slow and leave expensive GPUs idle while they wait for data. All-flash arrays (AFA) will change from a "luxury" into "infrastructure" for video companies.
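The seek-time argument can be made concrete with a back-of-the-envelope calculation. The sketch below assumes round-number latencies (about 10 ms per random read on an HDD and about 100 µs on an NVMe SSD) and ignores transfer time and parallelism; both latencies are illustrative assumptions, not measurements of any specific product.

```python
def random_read_seconds(num_reads: int, latency_us: int) -> float:
    """Total time for num_reads serial random reads, ignoring transfer time."""
    return num_reads * latency_us / 1_000_000


# Assumed per-read latencies in microseconds (illustrative round numbers).
HDD_LATENCY_US = 10_000   # ~10 ms seek plus rotational delay
NVME_LATENCY_US = 100     # ~100 µs

reads = 1_000_000  # e.g. one million randomly sampled training clips

hdd = random_read_seconds(reads, HDD_LATENCY_US)
nvme = random_read_seconds(reads, NVME_LATENCY_US)

print(f"HDD:  {hdd:.0f} s")
print(f"NVMe: {nvme:.0f} s")
print(f"ratio: {hdd / nvme:.0f}x")
```

Under these assumptions a single HDD spends hours on access latency alone for work a flash device finishes in minutes, which is the gap the all-flash argument rests on.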

2. Direction Two: Memory-Storage Convergence under CXL Technology

The Compute Express Link (CXL) protocol will blur the boundary between memory and SSDs. For models like Seedance 2.0 that require real-time motion alignment, the speed of data transfer between SSDs and HBM determines how smooth the generated content is.

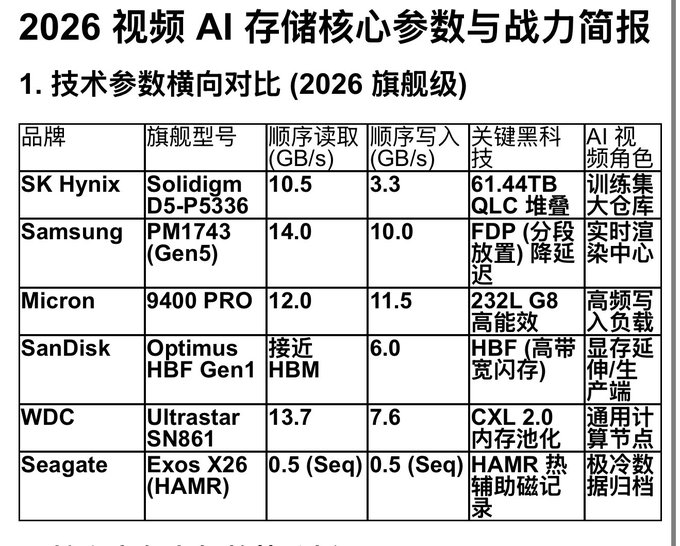

3. Direction Three: Computational Storage

Instead of moving massive amounts of video data to the CPU for processing, it is better to perform preliminary preprocessing (such as video frame extraction and format conversion) directly on the storage controller chip.

Based on the above and the parameters in the image, storage companies are ranked and rated below according to their core competitiveness and trends.

SK Hynix (S-tier): With Solidigm's QLC capacity advantage and its own dominance in HBM, it covers the two core needs of "large-capacity reads" and "compute throughput." It is the first choice for exabyte-scale storage of video AI training sets.

Samsung (A+-tier): The most balanced read and write speeds. Its PCIe 5.0 write speed is unmatched, making it the best "high-speed buffer" when Seedance 2.0 generates 4K/8K video streams.

SanDisk (A-tier): A dark horse since becoming independent. Its HBF (High Bandwidth Flash) is designed to break through the memory barrier by letting flash participate directly in AI inference, which would greatly benefit local large-model video generation on machines with 64 GB of RAM (such as an M4 Pro).

Micron (A-tier): Extremely high write endurance and energy efficiency, suitable for cloud "factories" that generate video 24/7.

WDC (B+-tier): Focuses on the CXL protocol, addressing the dynamic allocation of memory and storage within data centers.

川沐|Trumoo

@xiaomucrypto

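It looks like China will ultimately win on video models.

Douyin trains Seedance 2.0 on its own massive trove of video data.

What do the other big players have? Are Google and OpenAI left with nothing but lots of money and lots of GPUs? 😂

In China, most of the elderly and children are now basically addicted to Douyin.

Take the existing piles of novel copyrights and mass-produce content on an assembly line to make money; the endless short dramas watched by China's elderly viewers keep feeding back into the AI.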

From Twitter

Disclaimer: The content above is only the author's opinion which does not represent any position of Followin, and is not intended as, and shall not be understood or construed as, investment advice from Followin.
