ByteDance's Seedance 2.0 video model has become a sensation overseas, with Elon Musk marveling at its "rapid development." The model has been fully integrated into Doubao and Jimeng and is also open for enterprise trials. Its "multimodal input" and "multi-shot long narrative" capabilities directly target professional production scenarios. ByteDance acknowledges that although the product is leading, it is far from perfect, and says it will continue to explore deeper alignment between large models and human feedback. Doubao large model 2.0 is scheduled for release on February 14.

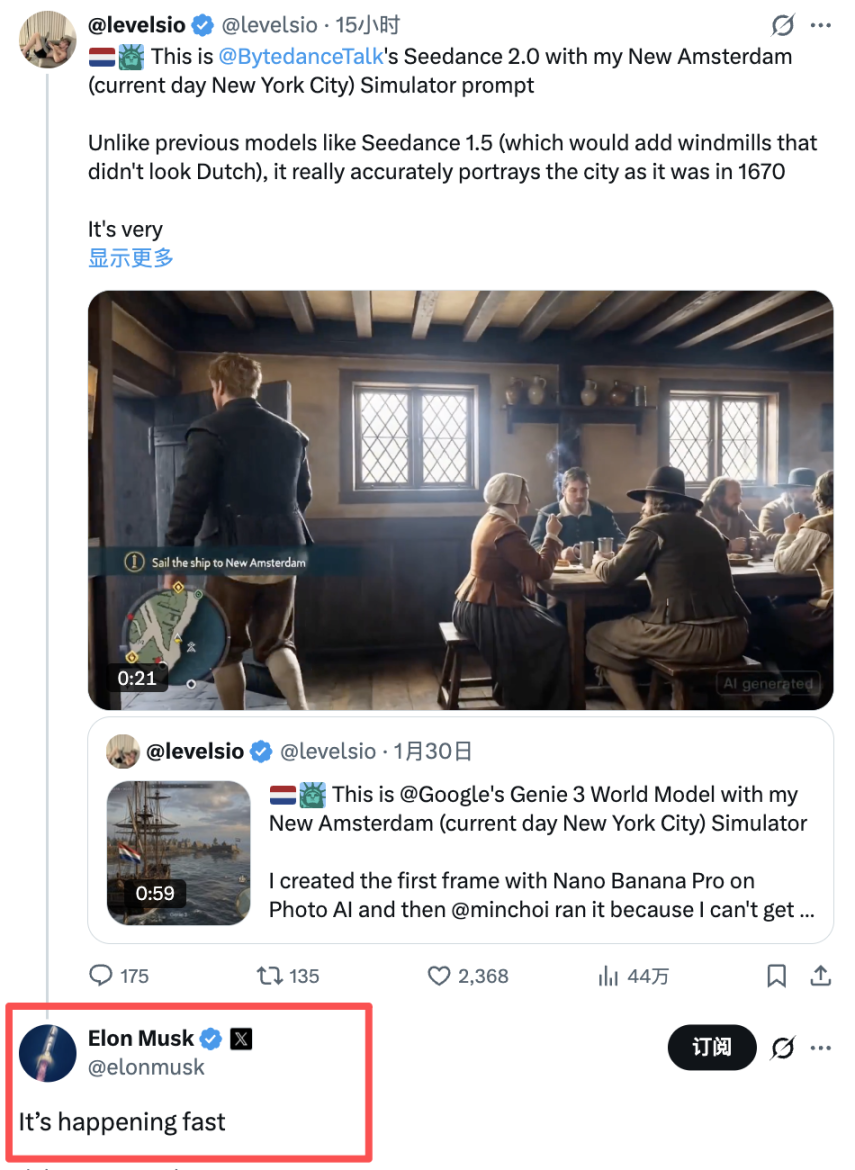

Generative video models are rapidly entering mainstream products and enterprise toolchains. ByteDance's Seedance 2.0 video creation model quickly gained popularity overseas, and Elon Musk commented on it on X, saying "It's happening fast," further amplifying market attention to the leap forward in video generation capabilities.

The latest catalyst came from social media. Musk's reply to a post about Seedance 2.0 on X fueled further discussion of the model overseas and drew more outside attention to its controllability and production capabilities.

ByteDance today signaled a clear productization strategy. Seedance 2.0 has been officially released, fully integrated into the Doubao and Jimeng products, and made available in the Volcano Ark Experience Center for users to try. The model focuses on native audio-video synchronization, multi-shot long narratives, and controllable multimodal generation, targeting a wider range of creators and commercial content scenarios.

However, the company has remained restrained in its statements. ByteDance's official Weibo account stated that Seedance 2.0 is "far from perfect," with many flaws in the generated results, and that they will continue to explore deeper alignment between large models and human feedback. For market participants, this combination of "high exposure + rapid productization + continuous iteration" reinforces expectations of an accelerated pace of competition in the video generation sector.

Musk retweeted it, pushing the buzz overseas.

After its beta launch, Seedance 2.0 garnered significant global attention thanks to its multimodal creation methods and "built-in camera movement" presentation. Elon Musk's retweet and "It's happening fast" comment on X further amplified the model's reach from tech circles to a broader audience of technology investors and product enthusiasts.

Musk's public comment did not go into technical details, but it reinforced the market narrative of "rapid development." The signal raises outside attention to ByteDance's multimodal capabilities and may also have a marginal effect on valuation expectations for companies in the related supply chain.

From internal testing to full access: Doubao, Jimeng, and Volcano Ark are progressing simultaneously.

ByteDance announced today that its Doubao video generation model Seedance 2.0 is now available in the Doubao app, PC, and web versions, and is fully integrated into the Doubao and Jimeng products. It has also launched in the Volcano Ark Experience Center for users to try.

For enterprise clients, ByteDance stated that Seedance 2.0's API service is expected to launch on Volcano Ark in mid-to-late February to help them better implement their creative ideas. This means Seedance 2.0 is positioned not only as a creation tool but is also being prepared for more standardized B2B API access.

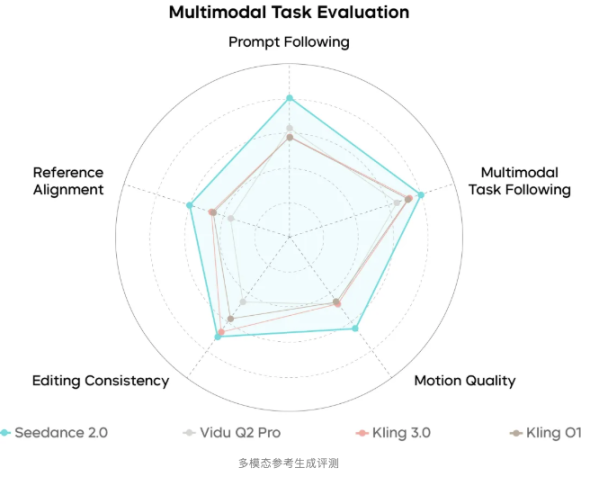

Multimodal input, long-form narratives, and synchronized audio and video target "professional production scenarios."

ByteDance emphasizes that Seedance 2.0's positioning prioritizes "quality and controllability to meet the requirements of professional production scenarios." Key functional signals include:

1. Multimodal input: the model accepts a mix of four modalities (text, image, audio, and video) and can reference elements such as composition, action, camera movement, special effects, and sound.

2. Native audio-video synchronization and multi-track parallel output: the model can output background music, ambient sound effects, and character narration as separate tracks, with an emphasis on matching the rhythm of the picture.

3. Multi-shot long narrative and "director's thinking": the model can automatically analyze narrative logic, generate shot sequences, and keep characters, lighting, style, and atmosphere consistent.

4. New video editing and video extension capabilities, which strengthen the workflow aspect of "director-level control."

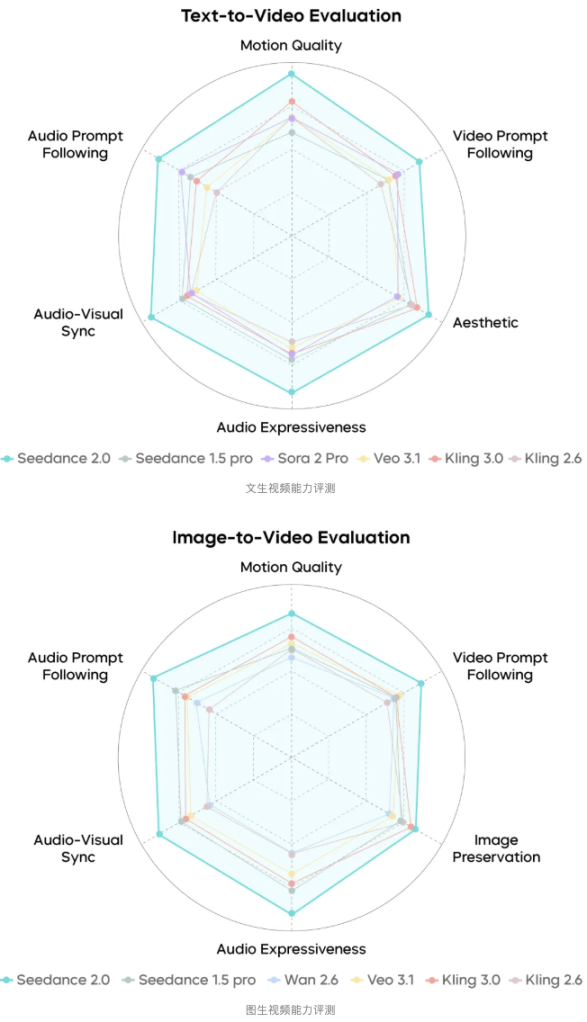

ByteDance also stated that Seedance 2.0 has effectively addressed the challenges of adhering to physical laws and maintaining long-term consistency, and that the usable rate of its output in motion scenes has reached the industry's state-of-the-art level.

"Far from perfect": Shortcomings and limitations are clearly stated in the product introduction.

ByteDance stated that Seedance 2.0's overall performance has reached an industry-leading level, but that there is still room for improvement in detail stability, multi-person lip-syncing, multi-subject consistency, the accuracy of rendered text, and complex editing effects. The company says it will continue to explore deeper alignment between large models and human feedback.

Compliance and usage boundaries are also becoming clearer. ByteDance stated that Seedance 2.0 currently restricts the use of images or videos of real people as primary references. To use a real person as a primary reference, the person must either verify their identity or grant authorization. These restrictions will directly affect how some commercial materials are produced and distributed.

With a release set for February 14, the upgrade schedule becomes a new variable.

ByteDance's Volcano Engine has tentatively scheduled a series of major upgrades to the Doubao large model family for February 14, 2026, covering Doubao large model 2.0, the audio and video creation model Seedance 2.0, and the image creation model Seedream 5.0 Preview. It also stated that both foundation model capabilities and enterprise-level agent capabilities will be greatly improved.

With Musk's remark about the "rapid pace of development" as a backdrop, the market will now focus on two questions: first, whether the speed of Seedance 2.0's API launch and enterprise adoption matches the product narrative; and second, whether improvements in areas such as consistency, lip-syncing, and complex editing come quickly enough to support its transition from a "viral demo" to "stable productivity."