This article aims to briefly summarize the working principle of opBNB and its commercial significance, and to sort out the important step taken by the BSC public chain in the era of modular blockchain.

Written by: Faust, geek web3

Introduction: If one keyword had to sum up Web3 in 2023, most people would instinctively think of "Layer 2 Summer". Application-layer innovations keep emerging, but few have stayed hot for as long as L2. With Celestia successfully promoting the concept of the modular blockchain, Layer 2 and modularity have become almost synonymous with infrastructure, and the past glory of monolithic chains seems unlikely to return. After Coinbase, Bybit and Metamask successively launched their own Layer 2 networks, the Layer 2 war is in full swing, much like the earlier fierce battle among new public chains.

In this Layer 2 war led by exchanges, BNB Chain is unlikely to settle for a back seat. It launched the zkBNB testnet as early as last year, but because zkEVM performance cannot yet support large-scale applications, opBNB, which uses the Optimistic Rollup approach, has become the better way to deliver a general-purpose Layer 2. This article briefly summarizes how opBNB works and why it matters commercially, and traces the important step that the BSC public chain has taken in the era of modular blockchains.

BNB Chain’s road to big blocks

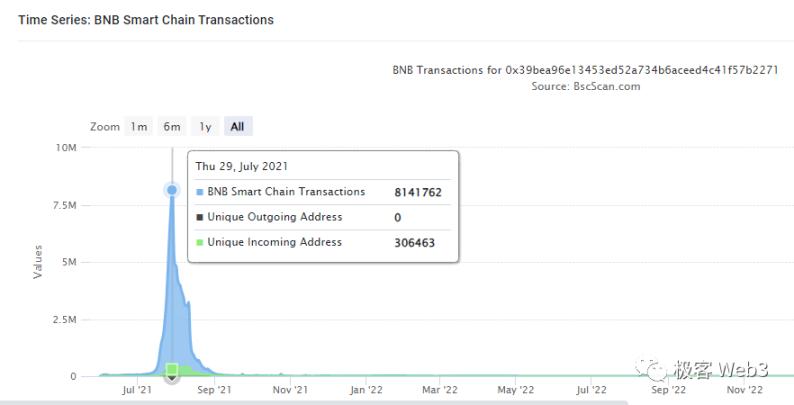

Similar to exchange-backed public chains such as Solana and Heco, BNB Chain's public chain BNB Smart Chain (BSC) has a long history of pursuing high performance. From its launch in 2020, BSC set the gas limit of each block at 30 million, with a stable block interval of 3 seconds. Under these parameters, BSC achieved a TPS ceiling of 100+ (with various transaction types mixed together). In June 2021, BSC's block gas limit was raised to 60 million. In July of that year, however, a blockchain game called CryptoBlades became popular on BSC, pushing daily transactions above 8 million at one point and sending fees soaring. This showed that BSC's efficiency bottleneck was still quite obvious at the time.

(Data source: BscScan)

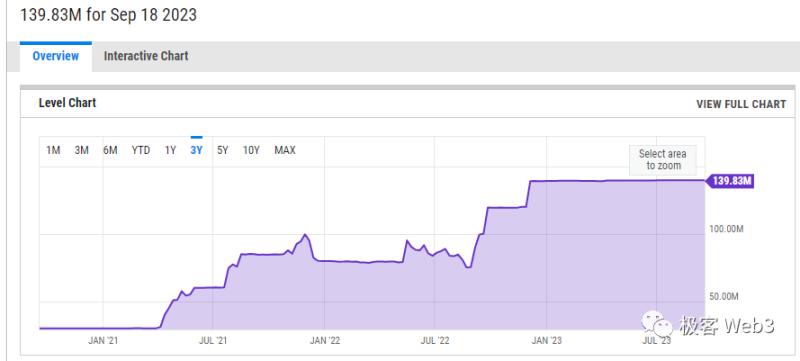

To solve the network performance problem, BSC raised the gas limit of each block again, and for a long time it stayed at around 80 million to 85 million. In September 2022, the gas limit of a single BSC block was raised to 120 million, and by the end of the year to 140 million, nearly five times the 2020 figure. BSC had previously planned to raise the block gas limit to 300 million, but that super-large-block proposal has not been implemented, perhaps because the burden on validator nodes would have been too heavy.

(Data source: YCHARTS)

Later, BNB Chain appeared to shift its focus to the modular/Layer 2 track rather than to further Layer 1 scaling. From zkBNB, launched in the second half of last year, to GreenField at the beginning of this year, this intention has become increasingly obvious. Out of a strong interest in modular blockchains and Layer 2, the author takes opBNB as the research subject and uses the differences between opBNB and Ethereum Layer 2 to briefly show readers where the performance bottlenecks of a Rollup lie.

BSC’s high throughput adds to opBNB’s DA layer

As we all know, Celestia has summarized 4 key components according to the modular blockchain workflow:

- Execution layer (Execution): the execution environment that runs contract code and completes state transitions.

- Settlement layer (Settlement): handles fraud proofs/validity proofs, and also handles bridging between L2 and L1.

- Consensus layer (Consensus): reaches consensus on the ordering of transactions.

- Data availability layer (DA): publishes data related to the blockchain ledger so that validators can download it.

Among them, the DA layer and the consensus layer are often coupled together. For example, the DA data of an optimistic rollup contains the transaction sequence of a batch of L2 blocks, so when an L2 full node obtains the DA data, it also knows the order of every transaction in that batch. (For this reason, when the Ethereum community divides a Rollup into layers, it treats the DA layer and the consensus layer as linked.)

But for Ethereum Layer 2, the data throughput of the DA layer (Ethereum) has become the biggest bottleneck restricting Rollup performance. Because Ethereum's current data throughput is too low, Rollups have to hold down their own TPS so that the Ethereum mainnet is not overwhelmed by the data generated by L2.

At the same time, the low data throughput leaves a large number of transactions pending in the Ethereum network, which pushes gas fees to extremely high levels and further raises the cost of publishing Layer 2 data. As a result, many Layer 2 networks have had to adopt DA layers other than Ethereum, such as Celestia. opBNB, with the advantage of proximity, directly uses the high-throughput BSC as its DA layer to remove the bottleneck in data publication.

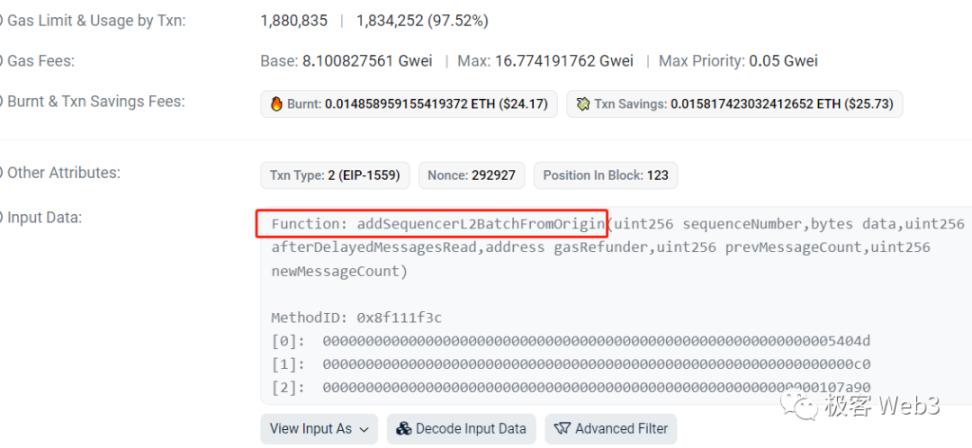

For ease of understanding, the way a Rollup publishes its DA data needs to be introduced here. Taking Arbitrum as an example, an EOA address on Ethereum controlled by the Layer 2 sequencer regularly sends transactions to a designated contract. The packed transaction data is written into the calldata input parameter of that transaction, and the corresponding on-chain events are triggered, leaving a permanent record in the contract log.

In this way, Layer 2 transaction data is stored in the Ethereum block for a long time. People who have the ability to run L2 nodes can download the corresponding records and parse the corresponding data, but Ethereum's own nodes do not execute these L2 transactions. It is not difficult to see that L2 only stores the transaction data in the Ethereum block, which incurs storage costs, while the computational cost of executing the transaction is borne by L2's own nodes.

The above describes Arbitrum's DA implementation. In Optimism, an EOA address controlled by the sequencer instead sends a transfer to another designated EOA address and carries the new batch of Layer 2 transaction data in the attached data field. opBNB, which is built on OP Stack, publishes its DA data in essentially the same way as Optimism.

Obviously, the throughput of the DA layer limits the amount of data a Rollup can publish per unit of time, and therefore limits its TPS. Under EIP-1559, the gas capacity of each ETH block is stable at 30 million, and the block time after the Merge is about 12 seconds, so the total gas Ethereum can process per second is at most 2.5 million.

Most of the time, the calldata that carries L2 transaction data costs 16 gas per byte, so the calldata Ethereum can process per second is at most about 150 KB. BSC can process about 2910 KB of calldata per second on average, which is 18.6 times that of Ethereum. The gap between the two as DA layers is obvious.
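The arithmetic behind these figures can be reproduced with a short sketch. It uses only the parameters quoted in the text (block gas limit, block time, 16 gas per calldata byte); the function and constant names are illustrative, and the result is a theoretical ceiling that assumes entire blocks are filled with calldata.

```python
# Rough DA-throughput estimate from the parameters quoted in the article.
CALLDATA_GAS_PER_BYTE = 16  # cost per calldata byte used in the text


def calldata_bytes_per_second(block_gas_limit: int, block_time_s: float) -> float:
    """Upper bound on calldata bytes per second if every block held only calldata."""
    gas_per_second = block_gas_limit / block_time_s
    return gas_per_second / CALLDATA_GAS_PER_BYTE


eth_bytes = calldata_bytes_per_second(30_000_000, 12)   # Ethereum: 30M gas, 12 s
bsc_bytes = calldata_bytes_per_second(140_000_000, 3)   # BSC: 140M gas, 3 s

print(f"Ethereum: {eth_bytes / 1000:.0f} KB/s")
print(f"BSC:      {bsc_bytes / 1000:.0f} KB/s")
print(f"Ratio:    {bsc_bytes / eth_bytes:.1f}x")
```

The outputs land close to the article's rounded values of about 150 KB, about 2910 KB, and 18.6 times.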

To summarize, Ethereum can carry at most about 150 KB of L2 transaction data per second. Even after EIP-4844 goes live, this number will not change much; only the DA fee will fall. So how many transactions can roughly 150 KB per second hold?

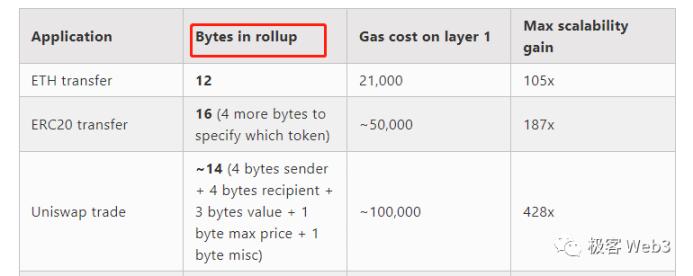

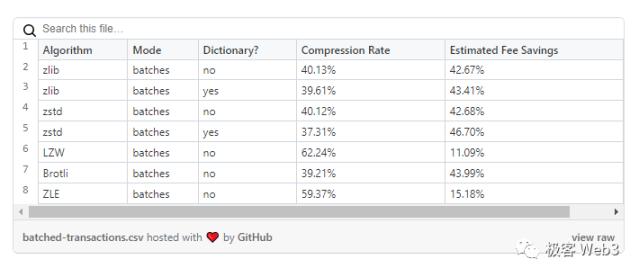

Here we need to explain the data compression rate of a Rollup. In 2021 Vitalik made an overly optimistic estimate that a Rollup could compress transaction data to 11% of its original size. For example, a basic ETH transfer, which originally occupies 112 bytes of calldata, could be compressed to 12 bytes by an optimistic rollup, an ERC-20 transfer to 16 bytes, and a swap on Uniswap to 14 bytes. By his estimate, Ethereum could record up to about 10,000 L2 transactions per second (all types mixed together). However, according to data officially disclosed by Optimism in 2022, the best compression rate achieved in practice is only about 37%, roughly 3.5 times worse than Vitalik's estimate.

(Vitalik’s estimate of Rollup’s expansion effect deviates greatly from the actual situation)

(The actual compression rates achieved by various compression algorithms officially announced by Optimism)

So a reasonable figure is that even if Ethereum reached its throughput limit, the maximum combined TPS of all optimistic rollups would be only a little over 2,000. In other words, if the entire Ethereum block space were used to carry data published by optimistic rollups, shared among Arbitrum, Optimism, Base, Boba and others, their combined TPS would still fall short of 3,000, and that is with the most efficient compression algorithm. We must also consider that after EIP-1559 the average gas carried by each block is only 50% of the maximum, so the figure above should be cut in half. After EIP-4844 goes live, the fee for publishing data will drop sharply, but Ethereum's maximum block size will not change much (too large a change would affect the security of the ETH main chain), so the estimate above will not improve much.
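One way to arrive at a figure in this range is to rescale Vitalik's estimate by the ratio between the assumed and the observed compression rates. The sketch below does that with the numbers quoted above; it is a back-of-the-envelope reconstruction under that assumption, not necessarily the exact calculation behind the original figures, and the names are illustrative.

```python
# Rescale an optimistic TPS estimate by the observed compression rate.
VITALIK_TPS = 10_000          # combined L2 TPS in the 2021 estimate
ASSUMED_COMPRESSION = 0.11    # compressed size as a fraction of the original
OBSERVED_COMPRESSION = 0.37   # best rate reported by Optimism in 2022


def rescale_tps(estimated_tps: float, assumed: float, observed: float) -> float:
    """Larger compressed transactions mean proportionally fewer fit per second."""
    return estimated_tps * assumed / observed


full_blocks_tps = rescale_tps(VITALIK_TPS, ASSUMED_COMPRESSION, OBSERVED_COMPRESSION)
average_load_tps = full_blocks_tps * 0.5  # EIP-1559: blocks average 50% full

print(f"All block space used by rollups: ~{full_blocks_tps:.0f} TPS")
print(f"At the EIP-1559 average load:    ~{average_load_tps:.0f} TPS")
```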

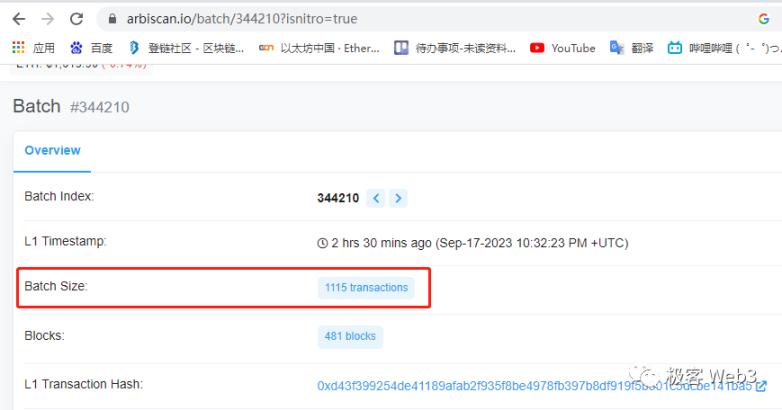

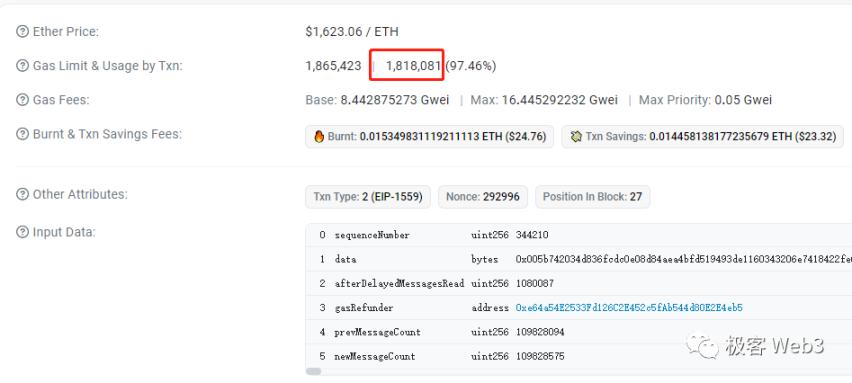

According to data from Arbiscan and Etherscan, one Arbitrum transaction batch contained 1,115 transactions and consumed 1.81 million gas on Ethereum. On this basis, if every block in the DA layer were filled, Arbitrum's theoretical TPS limit would be about 1,500. Of course, because of the L1 block reorganization problem, Arbitrum cannot publish transaction batches in every Ethereum block, so the number above exists only on paper for now.
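The estimate can be checked with the batch figures quoted above. This is a minimal sketch that assumes every Ethereum block is completely filled with batches of the same size and gas cost as the sampled one; the function name is illustrative.

```python
# Theoretical Arbitrum TPS if every Ethereum block were filled with batches.
def batch_limited_tps(txs_per_batch: int, gas_per_batch: float,
                      block_gas_limit: int, block_time_s: float) -> float:
    batches_per_block = block_gas_limit / gas_per_batch
    return batches_per_block * txs_per_batch / block_time_s


arbitrum_tps = batch_limited_tps(
    txs_per_batch=1_115,        # transactions in the sampled batch
    gas_per_batch=1_810_000,    # gas the batch consumed on Ethereum
    block_gas_limit=30_000_000,
    block_time_s=12,
)
print(f"~{arbitrum_tps:.0f} TPS")  # in line with the article's "about 1,500"
```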

At the same time, after EIP 4337-related smart wallets are adopted on a large scale, the DA problem will become more serious. Because after supporting EIP 4337, the way users verify their identity can be customized, such as uploading binary data of fingerprints or iris, which will further increase the data size occupied by regular transactions. Therefore, the low data throughput of Ethereum is the biggest bottleneck limiting the efficiency of Rollup. This problem may not be properly solved for a long time in the future.

On BNB chain's public chain BSC, the average calldata size that can be processed per second is up to about 2910 KB, which is 18.6 times that of Ethereum. In other words, as long as the execution layer speed can keep up, the theoretical TPS upper limit of Layer 2 in the BNB Chain system can reach about 18 times that of ARB or OP. This number is calculated based on the current BNB chain's maximum gas capacity of 140 million per block and block generation time of 3 seconds.

In other words, on the public chain in the BNB Chain ecosystem, the combined TPS limit of all rollups is 18.6 times that of Ethereum (this holds even when ZK Rollups are taken into account). From this we can also understand why so many Layer 2 projects publish their data to DA layers outside the Ethereum chain: the difference is obvious.

However, the problem is not that simple. In addition to data throughput issues, the stability of Layer1 itself will also affect Layer2. For example, most Rollups tend to publish a transaction batch to Ethereum every few minutes. This is due to the possibility of reorganization of Layer 1 blocks. If the L1 block is reorganized, it will affect the L2 blockchain ledger. Therefore, every time the sequencer releases an L2 transaction batch, it will wait until multiple new L1 blocks are released and the block rollback probability drops significantly before releasing the next L2 transaction batch. This will actually delay the final confirmation of the L2 block and reduce the confirmation speed of large transactions (large transactions must have irreversible results to ensure safety).

In short, a transaction on L2 becomes irreversible only after it has been published in a DA layer block and the DA layer has produced a certain number of new blocks on top of it. This is an important factor limiting Rollup performance. Ethereum produces blocks slowly: each block takes 12 seconds. Assuming a Rollup publishes an L2 batch every 15 blocks, there is a 3-minute interval between batches, and after each batch is published it must still wait for several more L1 blocks before it becomes irreversible (provided it is not challenged). Clearly, a transaction on Ethereum L2 takes a long time to go from initiation to irreversibility, and settlement is slow. BNB Chain, by contrast, produces a block in just 3 seconds, so a block becomes irreversible in only 45 seconds (the time needed to produce 15 new blocks).

Under current parameters, with the same number of L2 transactions and with L1 block irreversibility taken into account, opBNB can publish transaction data up to 8.53 times as often as Arbitrum per unit of time (the former publishes once every 45 seconds, the latter once every 6.4 minutes). Clearly, large-value transactions settle much faster on opBNB than on Ethereum L2. At the same time, the maximum amount of data opBNB can publish each time is 4.66 times that of Ethereum L2 (the L1 block gas limit is 140 million for the former and 30 million for the latter).
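Both ratios follow directly from the quoted intervals and gas limits, as the following sketch shows. The variable names are illustrative, and the inputs are the article's figures rather than fresh measurements.

```python
# Publish-frequency and per-block capacity ratios from the quoted figures.
OPBNB_INTERVAL_S = 45            # fastest opBNB batch interval (15 blocks x 3 s)
ARBITRUM_INTERVAL_S = 6.4 * 60   # Arbitrum batch interval of about 6.4 minutes

BSC_BLOCK_GAS_LIMIT = 140_000_000
ETH_BLOCK_GAS_LIMIT = 30_000_000

frequency_ratio = ARBITRUM_INTERVAL_S / OPBNB_INTERVAL_S
capacity_ratio = BSC_BLOCK_GAS_LIMIT / ETH_BLOCK_GAS_LIMIT

print(f"opBNB publishes {frequency_ratio:.2f}x as often")
print(f"each L1 block can carry {capacity_ratio:.2f}x as much data")
```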


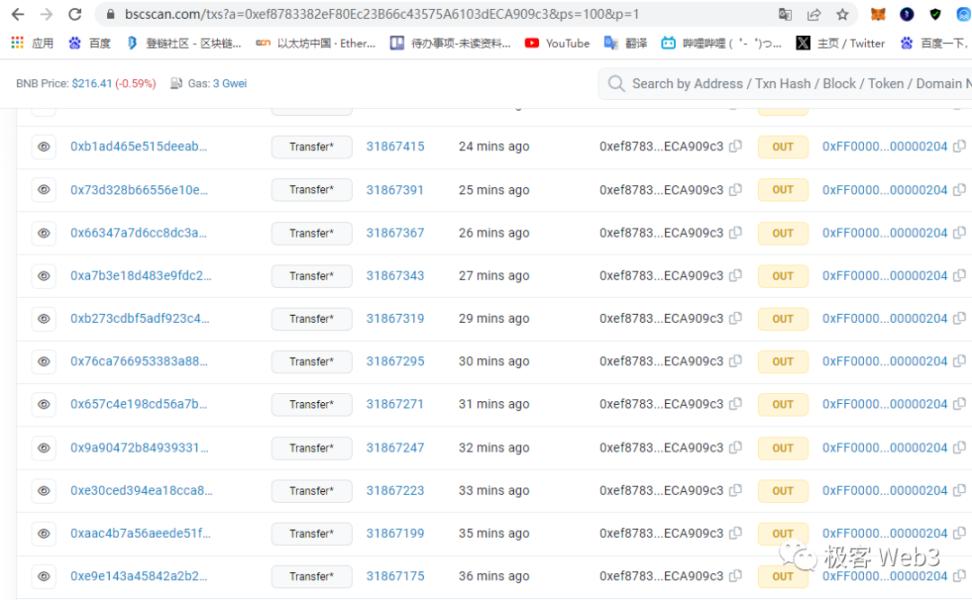

(Arbitrum’s sequencer publishes transaction batches every 6~7 minutes)

(opBNB’s sequencer will publish a transaction batch every 1 to 2 minutes, and it only takes 45 seconds at the fastest)

Of course, there is a more important issue to consider, which is the gas fee of the DA layer. Every time L2 publishes a transaction batch, there is a fixed cost that is independent of the calldata size - 21,000 gas, which is also an overhead. If the DA layer/L1 handling fee is high, making L2's fixed cost of publishing a transaction batch each time high, the sequencer will reduce the frequency of publishing transaction batches. At the same time, when considering the L2 handling fee component, the cost of the execution layer is very low. Most of the time, it can be ignored and only the impact of DA cost on handling fees is considered.
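To see why the fixed cost discourages frequent publishing, the sketch below spreads the 21,000 gas base cost over the transactions in a batch. The 40-byte compressed transaction size is a hypothetical value chosen only for illustration, and the model ignores any contract execution overhead on L1.

```python
# Amortizing the fixed 21,000 gas over the transactions in one batch.
BASE_TX_GAS = 21_000          # fixed cost per L1 transaction, from the text
CALLDATA_GAS_PER_BYTE = 16    # calldata cost per byte, from the text


def l1_gas_per_l2_tx(txs_in_batch: int, bytes_per_tx: int) -> float:
    """L1 gas attributable to each L2 transaction in a batch."""
    batch_gas = BASE_TX_GAS + txs_in_batch * bytes_per_tx * CALLDATA_GAS_PER_BYTE
    return batch_gas / txs_in_batch


# bytes_per_tx=40 is an assumed compressed size, not a figure from the article.
for batch_size in (10, 100, 1000):
    print(batch_size, l1_gas_per_l2_tx(batch_size, bytes_per_tx=40))
```

With small batches the fixed cost dominates the per-transaction share; with large batches it almost disappears. That is why a sequencer facing a high L1 gas price prefers to wait and publish fewer, larger batches.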

To sum up, when calldata of the same size is published on Ethereum and on BNB Chain, the gas consumed is the same, but the gas price charged by Ethereum is about ten to several dozen times that of BNB Chain, and this carries through to L2 fees. Currently, user fees on Ethereum Layer 2 are about ten to several dozen times those on opBNB. Taken together, the difference between opBNB and the optimistic rollups on Ethereum is still obvious.

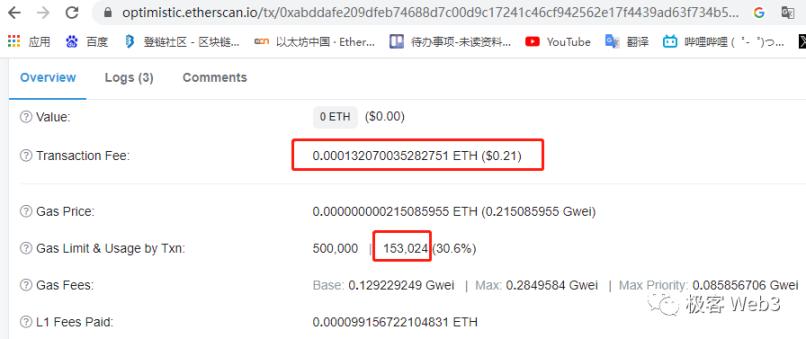

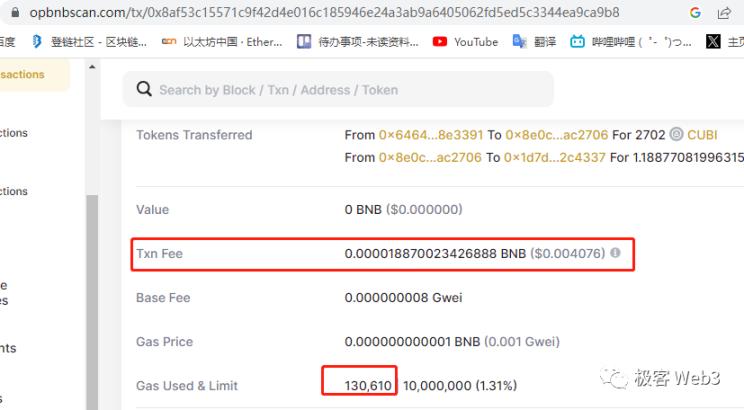

(Optimism’s last transaction that consumed 150,000 gas had a handling fee of $0.21)

(opBNB’s last transaction consumed 130,000 gas, with a handling fee of US$0.004)

However, although expanding the data throughput of the DA layer raises the throughput of the Layer 2 system as a whole, the performance gain for any single Rollup is still limited, because the execution layer often cannot process transactions fast enough. Even if the limits of the DA layer could be ignored, the execution layer would become the next bottleneck for Rollup performance. If a Layer 2 executes slowly, the overflow of transaction demand spreads to other Layer 2 networks and eventually fragments liquidity. Improving execution layer performance is therefore also important; it is another threshold that sits on top of the DA layer.

opBNB’s addition at the execution layer: cache optimization

When people discuss the performance bottlenecks of the blockchain execution layer, they inevitably mention two: the EVM's single-threaded serial execution cannot make full use of the CPU, and the Merkle Patricia Trie used by Ethereum is too inefficient for looking up data. Put bluntly, scaling the execution layer comes down to making fuller use of CPU resources and letting the CPU obtain data as quickly as possible. Optimizations for EVM serial execution and the Merkle Patricia Trie are often complex and hard to implement, so the more cost-effective work tends to focus on cache optimization.

Cache optimization, in fact, brings us back to a topic often discussed in traditional Web2 and even in textbooks.

Normally, the speed at which the CPU reads data from memory is hundreds of times faster than the speed at which it reads data from disk. For example, for a piece of data, it only takes 0.1 seconds for the CPU to read it from memory, while it takes 10 seconds to read it from disk. Therefore, reducing the overhead caused by disk reading and writing, that is, cache optimization, has become a necessary part of the optimization of the blockchain execution layer.

In Ethereum and even most public chains, the database that records the address status on the chain is completely stored on the disk, and the so-called World State trie is just the index of this database, or the directory used when searching for data. Every time the EVM executes a contract, it needs to obtain the relevant address status. If the data has to be fetched one by one from the database stored on the disk, it will obviously seriously reduce the speed of executing transactions. Therefore, setting up a cache outside of the database/disk is a necessary means of speeding up.

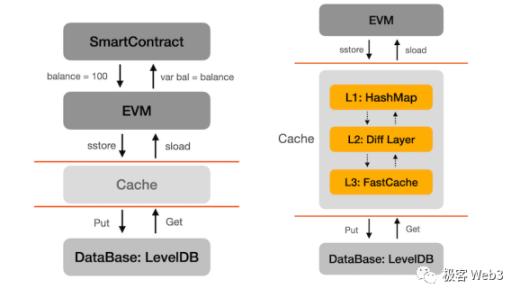

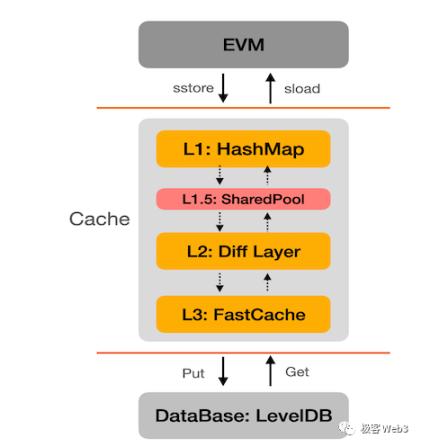

opBNB directly adopts the cache optimization scheme used by BNB Chain. According to information disclosed by NodeReal, opBNB's partner, the early BSC chain set up three levels of cache between the EVM and the LevelDB database that stores the state. The design is similar to a traditional three-level cache: data that is accessed more frequently is placed in the cache so that the CPU can look there first. If the cache hit rate is high enough, the CPU no longer depends heavily on the disk for data, and the whole execution process speeds up considerably.
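The idea of layered caches in front of a disk-backed state database can be illustrated with a toy model. This is only a conceptual sketch, not BSC's actual implementation: the class names, the LRU eviction policy, and the capacities are all assumptions, and a plain dictionary stands in for LevelDB.

```python
from collections import OrderedDict

_MISS = object()  # sentinel so that any stored value is allowed


class LRUCache:
    """A small least-recently-used cache."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.items = OrderedDict()

    def get(self, key):
        if key not in self.items:
            return _MISS
        self.items.move_to_end(key)  # mark as most recently used
        return self.items[key]

    def put(self, key, value):
        self.items[key] = value
        self.items.move_to_end(key)
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict the least recently used


class LayeredStateReader:
    """Checks three cache levels before falling back to the slow store."""

    def __init__(self, disk: dict, capacities=(2, 8, 32)):
        self.disk = disk  # stand-in for the on-disk state database
        self.levels = [LRUCache(c) for c in capacities]
        self.disk_reads = 0

    def get(self, key):
        for level in self.levels:
            value = level.get(key)
            if value is not _MISS:
                self._promote(key, value)
                return value
        self.disk_reads += 1  # every level missed: pay for a disk read
        value = self.disk[key]
        self._promote(key, value)
        return value

    def _promote(self, key, value):
        for level in self.levels:
            level.put(key, value)


disk = {"0xA": 100, "0xB": 5, "0xC": 7, "0xD": 9}
reader = LayeredStateReader(disk)
for address in ["0xA", "0xB", "0xC", "0xD", "0xA"]:
    reader.get(address)
print(reader.disk_reads)  # the repeated read of 0xA is served from a cache level
```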

Later, NodeReal added a feature on top of this: it puts the idle CPU cores not occupied by the EVM to work, reading the data the EVM will need from the database in advance and placing it in the cache, so that the EVM can later fetch the required data directly from the cache. This feature is called "state prefetching" (status read-ahead).

The principle of status pre-reading is actually very simple: the CPU of the blockchain node is multi-core, and the EVM is a single-threaded serial execution mode, using only 1 CPU core, so that the other CPU cores are not fully utilized. In this regard, the CPU cores not used by the EVM can be asked to help do something. They can learn from the unprocessed transaction sequence of the EVM what data the EVM will use in the future. Then, these CPU cores outside the EVM will read the data that the EVM will use in the future from the database, helping the EVM solve the overhead of data acquisition and improve the execution speed.
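A toy version of this pre-reading idea is sketched below. It is a simplified illustration rather than NodeReal's implementation: the class and function names are hypothetical, a dictionary stands in for the on-disk database, and the prefetch finishes before execution starts, whereas a real node would run the two concurrently.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class PrefetchingState:
    """State reader with a cache that helper threads can warm in advance."""

    def __init__(self, disk: dict):
        self.disk = disk  # stand-in for the slow state database
        self.cache = {}
        self.lock = threading.Lock()
        self.cache_hits = 0
        self.cache_misses = 0

    def _load(self, address):
        value = self.disk[address]  # the slow read happens off the EVM thread
        with self.lock:
            self.cache[address] = value

    def prefetch(self, pending_txs, workers: int = 4):
        """Use otherwise idle workers to load every address the txs touch."""
        addresses = {a for tx in pending_txs for a in (tx["from"], tx["to"])}
        with ThreadPoolExecutor(max_workers=workers) as pool:
            list(pool.map(self._load, addresses))

    def read(self, address):
        with self.lock:
            if address in self.cache:
                self.cache_hits += 1
                return self.cache[address]
        self.cache_misses += 1  # not prefetched: fall back to the slow store
        value = self.disk[address]
        with self.lock:
            self.cache[address] = value
        return value


def execute_serially(state: PrefetchingState, txs) -> dict:
    """Single-threaded execution loop, standing in for the EVM."""
    balances = {}
    for tx in txs:
        for address in (tx["from"], tx["to"]):
            if address not in balances:
                balances[address] = state.read(address)
        balances[tx["from"]] -= tx["value"]
        balances[tx["to"]] += tx["value"]
    return balances


disk = {"a": 10, "b": 0, "c": 5}
txs = [{"from": "a", "to": "b", "value": 3}, {"from": "b", "to": "c", "value": 1}]
state = PrefetchingState(disk)
state.prefetch(txs)
balances = execute_serially(state, txs)
print(balances, state.cache_misses)
```

Because the transaction queue reveals in advance which addresses will be touched, every read in the serial loop is served from the warmed cache.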

After fully optimizing the cache and pairing it with sufficiently powerful hardware, opBNB has pushed the performance of the node execution layer close to the limit of the EVM: it can process up to 100 million gas per second. 100 million gas per second is basically the performance ceiling of an unmodified EVM (according to experimental test data from a well-known public chain).

To summarize specifically, opBNB can handle up to 4761 of the simplest transfers per second, 1500~3000 ERC20 transfers, and about 500~1000 SWAP operations (these data are based on transaction data on the block explorer). Comparing the current parameters, the TPS limit of opBNB is 40 times that of Ethereum, more than 2 times that of BNB Chain, and more than 6 times that of Optimism.
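The simple-transfer figure follows from dividing the execution budget by the 21,000 gas that a basic transfer costs. The sketch below reproduces it and also derives the gas per operation implied by the quoted ERC20 and swap ranges; those implied values are back-calculated from the article's TPS numbers, not measured.

```python
# Transactions per second from a 100M gas/s execution budget.
GAS_PER_SECOND = 100_000_000
SIMPLE_TRANSFER_GAS = 21_000  # gas cost of a basic transfer

simple_transfer_tps = GAS_PER_SECOND // SIMPLE_TRANSFER_GAS
print(simple_transfer_tps)


def implied_gas_per_op(tps: int) -> float:
    """Gas each operation would need to consume to yield the given TPS."""
    return GAS_PER_SECOND / tps


# TPS ranges quoted in the article; the gas figures are implied, not measured.
for label, (low_tps, high_tps) in {"ERC20": (1500, 3000), "swap": (500, 1000)}.items():
    print(label, round(implied_gas_per_op(high_tps)), "to",
          round(implied_gas_per_op(low_tps)), "gas")
```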

Of course, because of the severe limits of the DA layer itself, the execution layer of Ethereum Layer 2 cannot be used to its full potential. Once factors such as the DA layer's block time and stability are taken into account, the actual performance of Ethereum Layer 2 falls well below what its execution layer could deliver. For a high-throughput DA layer like BNB Chain, opBNB, with a scaling effect of more than 2 times, is very valuable, not to mention that BNB Chain can carry multiple such scaling projects.

It is foreseeable that BNB Chain has made the Layer 2 solutions led by opBNB part of its roadmap and will continue to bring in more modular blockchain projects, including introducing ZK proofs into opBNB and pairing it with GreenField and other supporting infrastructure to provide a highly available DA layer, in an attempt to compete or cooperate with the Ethereum Layer 2 ecosystem. In an era where layered scaling has become the general trend, whether other public chains will rush to follow BNB Chain in backing their own Layer 2 projects remains to be seen. What is beyond doubt is that an infrastructure paradigm shift toward modular blockchains is already under way.