The resurrection of cloud computing power — in the name of AI

Fashion is a cycle, and so is Web 3.

Near has "re-become" an AI public chain. Its founder's status as one of the authors of the Transformer paper earned him a seat at NVIDIA's GTC conference, where he discussed the future of generative AI with the leather-jacketed Jensen Huang. Solana, home to io.net, Bittensor and Render Network, has successfully rebranded itself as an AI concept chain. Other players in GPU computing include Akash, GAIMIN and Gensyn.

If we take a step back, several interesting trends emerge amid the rising token prices:

The competition for GPU computing power has moved to decentralized platforms. More computing power means better results, and CPU, storage and GPU are sold as a bundle.

The computing paradigm is shifting from cloud computing to decentralized computing, driven by a shift in demand from AI training to inference. On-chain models are no longer just empty talk.

The underlying hardware, software and operating logic of the Internet have not fundamentally changed; the decentralized computing power layer mainly serves to incentivize the networking of that hardware.

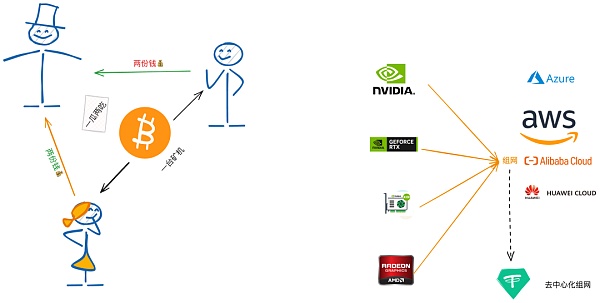

Let's first draw a conceptual distinction. In the Web3 world, "cloud computing power" was born in the era of cloud mining: the computing power of mining machines was sold in packages so that users could avoid the huge expense of buying the machines themselves. However, vendors often "oversold", for example pooling the computing power of 100 mining machines and selling it to 105 buyers to earn excess profits, until the term became synonymous with fraud.
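
To make the oversell arithmetic concrete, here is a minimal Python sketch. It assumes each buyer paid for exactly one machine's worth of hashrate and that the vendor splits the real hashrate evenly across all contracts; the function name `oversell_stats` is hypothetical.

```python
def oversell_stats(machines: int, buyers: int) -> tuple[float, float, int]:
    """Quantify an oversold cloud-mining package.

    Assumes every buyer paid for one machine's worth of hashrate and the
    real hashrate is pooled and split evenly across all contracts.
    Returns (oversell_ratio, delivered_fraction, phantom_contracts).
    """
    if machines <= 0 or buyers <= 0:
        raise ValueError("machines and buyers must be positive")
    oversell_ratio = buyers / machines                 # 1.05 means 5% more sold than exists
    delivered_fraction = min(1.0, machines / buyers)   # share of the promised hashrate each buyer gets
    phantom_contracts = max(0, buyers - machines)      # contracts with no hardware behind them
    return oversell_ratio, delivered_fraction, phantom_contracts


ratio, delivered, phantom = oversell_stats(machines=100, buyers=105)
print(f"oversold by {ratio - 1:.0%}, each buyer receives {delivered:.1%} of what was paid for, "
      f"{phantom} contracts have no hardware behind them")
```

Under these assumptions, every buyer quietly loses a slice of hashrate while the vendor collects revenue on contracts that cost it nothing to serve.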

In this article, cloud computing power refers specifically to the GPU-based computing resources of cloud vendors. The question is whether decentralized computing power platforms are merely a front end for cloud vendors or their next iteration.

Traditional cloud vendors and blockchains are more deeply intertwined than we might imagine. Public chain nodes, development and day-to-day storage, for example, run largely on AWS, Alibaba Cloud and Huawei Cloud, which saves the heavy upfront cost of buying physical hardware. The problems this creates cannot be ignored, however: in the extreme case, pulling a network cable could bring a public chain down, which seriously contradicts the spirit of decentralization.

Decentralized computing platforms, on the other hand, either build their own "server rooms" to keep the network robust, or build an incentive network directly, such as io.net's airdrop strategy for growing its GPU count, much as Filecoin used FIL tokens to grow storage. The starting point is not to meet real usage demand but to give the token utility. One piece of evidence: large companies, individuals and academic institutions rarely use these platforms for ML training, inference or graphics rendering, so a great deal of capacity goes to waste.

Yet amid rising token prices and FOMO, all accusations that decentralized computing power is a cloud computing scam have vanished.

Inference and FLOPS: quantifying GPU computing power

The computing power requirements of AI models are evolving from training to inference.

Take OpenAI's Sora as an example. Although it is also based on Transformer technology, its parameter count is under 100 billion, versus GPT-4's trillion-level scale. The academic community speculates that it is under 3 billion, and Yann LeCun has even said it is only 3 billion, which implies a lower training cost. This is easy to understand: with fewer parameters, the computing resources required decrease proportionally.
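
The proportionality can be illustrated with a widely used rule of thumb for dense Transformers: training costs roughly 6 FLOPs per parameter per training token (forward plus backward pass), while inference costs roughly 2 FLOPs per parameter per generated token. This is an approximation that ignores attention overhead and architectural details, and Sora's real architecture and token counts are not public, so the token count below is a hypothetical placeholder.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate total training compute for a dense Transformer (~6 * N * D)."""
    return 6.0 * n_params * n_tokens


def inference_flops_per_token(n_params: float) -> float:
    """Approximate forward-pass compute per generated token (~2 * N)."""
    return 2.0 * n_params


TOKENS = 1e12  # hypothetical training-set size, identical for both models

small = training_flops(3e9, TOKENS)    # a 3-billion-parameter model
large = training_flops(1e12, TOKENS)   # a trillion-parameter model

print(f"small model: {small:.2e} FLOPs, large model: {large:.2e} FLOPs")
print(f"ratio: {large / small:.0f}x")  # scales linearly with parameter count
print(f"inference per token, small model: {inference_flops_per_token(3e9):.1e} FLOPs")
```

Under these assumptions, training is a one-time cost, while inference is paid again for every token served, so for a widely deployed model the cumulative inference bill can come to dominate.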

On the other hand, Sora may need stronger "inference" capabilities. Inference here can be understood as the ability to generate a specific video according to instructions. Video has long been regarded as creative content, so the AI needs stronger comprehension. Training is comparatively simple: it amounts to summarizing patterns from existing content by piling up computing power, in the spirit of "brute force works miracles."

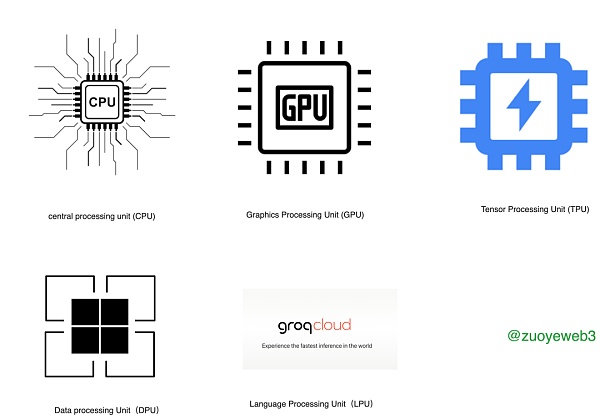

In the past, AI computing power was used mainly for training, with a small share for inference, and the market was essentially monopolized by NVIDIA's products. After the launch of the Groq LPU (Language Processing Unit), however, things began to change. Better inference performance, combined with slimmer and more accurate large models, is gradually becoming the mainstream.

We should also add a note on GPU classification. It is often said that gaming saved AI, and this makes sense: the gaming market's strong demand for high-performance GPUs covered the R&D costs. The RTX 4090, for example, serves both gamers and AI "alchemists" (model trainers). Note, however, that gaming cards and compute cards will gradually decouple, much as Bitcoin mining moved from personal computers to dedicated mining machines, with the chips progressing from CPU to GPU, FPGA and then ASIC.

As AI technology matures, especially along the LLM route, there will be more attempts like TPU, DPU and LPU. For now, of course, NVIDIA's GPUs remain the main product, and all the discussion below is based on GPUs. LPUs and similar chips are more of a supplement to GPUs, and fully replacing them will take time.

The decentralized computing power competition is not about competing for GPU supply channels, but about trying to establish a new profit model.

In this story, NVIDIA has almost become the protagonist, fundamentally because it holds 80% of the graphics card market. The rivalry between NVIDIA and AMD cards exists only in theory; in practice, people may grumble about NVIDIA but still buy its cards.

This near-absolute monopoly has set off a scramble for GPUs, from the consumer-grade RTX 4090 to the enterprise-grade A100/H100, with cloud vendors as the main hoarders. Meanwhile, AI companies such as Google, Meta, Tesla and OpenAI are making or planning their own chips, and Chinese companies have turned to domestic suppliers such as Huawei, so the GPU race remains extremely crowded.

Traditional cloud vendors actually sell computing power and storage space, so building their own chips is less urgent for them than for AI companies. Decentralized computing power projects are still in the first half of the game, competing with traditional cloud vendors for computing business on low price and easy availability. However, the odds of Web3-specific AI chips emerging in the future, the way dedicated chips emerged for Bitcoin mining, are low.

One more complaint: since Ethereum switched to PoS, there has been less and less dedicated hardware in crypto. Saga phones, ZK hardware acceleration and DePIN are all too small as markets. I hope decentralized computing power can open a Web3-specific path toward dedicated AI computing cards.

Is decentralized computing power the next step or a supplement to the cloud?

In the industry, GPU computing power is usually compared in FLOPS (floating-point operations per second), the most common measure of computing speed. Whatever the GPU specifications or optimizations such as parallelism, performance is ultimately measured in FLOPS.
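
As a rough illustration of where a FLOPS figure comes from, the theoretical peak is commonly estimated as cores × clock rate × floating-point operations per core per cycle, where one fused multiply-add (FMA) counts as two operations. The core count and clock below are hypothetical, not the specification of any real card, and the `utilization` factor is an assumption standing in for how much of the peak a real workload sustains.

```python
def peak_flops(cores: int, clock_hz: float, flops_per_core_per_cycle: int = 2) -> float:
    """Theoretical peak FLOPS; the default of 2 assumes one FMA per core per cycle."""
    return cores * clock_hz * flops_per_core_per_cycle


def effective_flops(peak: float, utilization: float) -> float:
    """Throughput actually delivered, given the fraction of peak a workload sustains."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return peak * utilization


# Hypothetical GPU: 10,000 cores at 2.0 GHz
peak = peak_flops(cores=10_000, clock_hz=2.0e9)
print(f"peak: {peak / 1e12:.0f} TFLOPS")
print(f"at 40% utilization: {effective_flops(peak, 0.4) / 1e12:.0f} TFLOPS")
```

Vendors quote separate figures for each numeric precision (FP32, FP16 and so on), so FLOPS comparisons between cards are only meaningful at the same precision.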

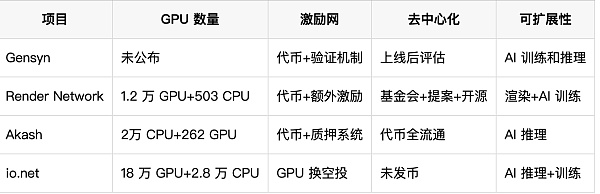

It took about half a century to go from local computing to cloud computing, and the idea of distributed computing has existed since the birth of computers. Driven by LLMs, the combination of decentralization and computing power is no longer as illusory as it once was. I will survey as many existing decentralized computing power projects as possible along just two dimensions:

The number of GPUs and other hardware, that is, computing speed. Following Moore's Law, newer GPUs are generally more powerful, and the more GPUs of the same specification, the greater the total computing power.

How the incentive layer is organized, which is Web3's distinctive industry feature: dual tokens, added governance functions, airdrop incentives and so on. This makes it easier to judge each project's long-term value by how many GPUs it can own or dispatch over time, rather than fixating on short-term token prices.
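
The two effects in the first dimension, newer cards and more cards, combine additively in a naive capacity count. The sketch below sums count × per-card throughput over a network's inventory; the GPU classes and TFLOPS values are hypothetical placeholders, and a raw sum ignores interconnect bandwidth and utilization, which matter greatly for training.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuClass:
    label: str          # hypothetical class name, not a real SKU
    count: int          # number of cards of this class on the network
    tflops_each: float  # assumed per-card throughput, in TFLOPS


def network_tflops(inventory: list[GpuClass]) -> float:
    """Naive aggregate capacity: sum of count * per-card throughput."""
    return sum(g.count * g.tflops_each for g in inventory)


# Network A has more cards overall; network B has fewer but newer ones
network_a = [GpuClass("older", 1_000, 10.0), GpuClass("newer", 100, 50.0)]
network_b = [GpuClass("newer", 500, 50.0)]

print(network_tflops(network_a), network_tflops(network_b))
```

In this made-up example, network B ends up with more raw capacity despite having fewer than half as many cards, which is why a headline GPU count alone says little without the card generation behind it.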

From this perspective, decentralized computing power still follows the DePIN route of "existing hardware + incentive network", with the Internet architecture remaining the bottom layer. The decentralized computing power layer monetizes hardware after virtualization, with permissionless access as its focus, and real networking still depends on the hardware itself.

Computing power should be decentralized, GPU should be centralized

Borrowing the blockchain trilemma framework, the security of decentralized computing power needs no special consideration; the main issues are decentralization and scalability. Scalability is the purpose of GPU networking, and at present AI is by far its leading use case.

Start with a paradox: for a decentralized computing power project to succeed, the network must have as many GPUs as possible. The reason is that the parameter counts of large models such as GPT have exploded, and without GPUs at a certain scale, training or inference simply cannot be done.
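
The paradox can be made concrete with a back-of-the-envelope memory count: a model's state must fit in GPU memory before any computation happens. The sketch assumes 2 bytes per parameter for 16-bit inference weights and about 16 bytes per parameter for mixed-precision training with the Adam optimizer (16-bit weights and gradients plus a 32-bit master copy and two optimizer moments). It ignores activations, KV caches and framework overhead, so real requirements are higher, and the card capacities are parameters rather than specific products.

```python
def min_gpus(n_params: int, bytes_per_param: int, gpu_mem_bytes: int) -> int:
    """Lower bound on GPUs needed just to hold model state (ignores activations)."""
    total_bytes = n_params * bytes_per_param
    return -(-total_bytes // gpu_mem_bytes)  # ceiling division on integers


GB = 10**9
INFERENCE_BYTES = 2   # 16-bit weights only
TRAINING_BYTES = 16   # 2 (weights) + 2 (grads) + 12 (fp32 master copy + two Adam moments)

for n_params in (7 * 10**9, 175 * 10**9):
    print(
        f"{n_params // 10**9}B params: "
        f"inference on 24 GB cards >= {min_gpus(n_params, INFERENCE_BYTES, 24 * GB)}, "
        f"training on 80 GB cards >= {min_gpus(n_params, TRAINING_BYTES, 80 * GB)}"
    )
```

Splitting a model across many cards also requires fast interconnects between them, which is part of why the scale of a GPU network matters more than the raw number of scattered cards.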

Of course, compared with the absolute control exercised by cloud vendors, decentralized computing power projects can at least offer permissionless access and free migration of GPU resources at this stage. However, in the pursuit of capital efficiency, it is unclear whether mining-pool-like products will form in the future.

In terms of scalability, GPUs serve not only AI; cloud PCs and rendering are also viable paths. Render Network, for example, focuses on rendering, while Bittensor and others focus on model training. Put simply, scalability comes down to use cases and purposes.

So, in addition to GPUs and the incentive network, we can add two more parameters, decentralization and scalability, giving a comparison from four angles. Note that this is not a technical comparison and is purely for fun.

Among the projects above, Render Network is quite special: it is essentially a distributed rendering network with no direct connection to AI. In AI training and inference, every step is tightly coupled; whether SGD (stochastic gradient descent) or backpropagation, the computation requires consistency. Rendering and similar tasks need not work this way: videos and images are often split into segments to make task distribution easy.
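
The contrast between rendering and training can be sketched in a few lines. Rendering frames is embarrassingly parallel: each frame is an independent job that any worker can handle in any order. Data-parallel training, by contrast, needs every worker's gradient before the shared weights can take the next step. The sketch uses a toy one-parameter linear regression with full-batch gradient descent on equal-size shards as a deterministic stand-in for SGD; `render_frame` is a hypothetical placeholder for a real renderer.

```python
def render_frame(frame_id: int) -> str:
    """Hypothetical stand-in for an expensive, self-contained rendering job."""
    return f"frame-{frame_id:04d}.png"


def render_distributed(frame_ids: list[int], n_workers: int) -> list[str]:
    # Frames are independent: split the list, render each chunk anywhere, in any order
    chunks = [frame_ids[i::n_workers] for i in range(n_workers)]
    results = {}
    for chunk in reversed(chunks):  # processing order does not affect the result
        for f in chunk:
            results[f] = render_frame(f)
    return [results[f] for f in frame_ids]


def shard_gradient(w: float, shard: list[tuple[float, float]]) -> float:
    # d/dw of the mean squared error for y ≈ w * x on this worker's shard
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def data_parallel_gd(data: list[tuple[float, float]], n_workers: int,
                     lr: float = 0.01, steps: int = 200) -> float:
    shards = [data[i::n_workers] for i in range(n_workers)]
    w = 0.0
    for _ in range(steps):
        # Synchronization barrier: no worker can move on until every gradient is in
        grads = [shard_gradient(w, s) for s in shards]
        w -= lr * sum(grads) / len(grads)  # equal-size shards, so this is the full-batch gradient
    return w


print(render_distributed(list(range(5)), n_workers=3))
data = [(float(x), 3.0 * x) for x in range(1, 11)]  # true relationship: y = 3x
print(data_parallel_gd(data, n_workers=2))
```

The rendering loop would produce the same frames if one chunk were delayed or handed to another worker, whereas the training loop stalls on its slowest worker at every step, which is the consistency requirement described above.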

Its AI training capability comes mainly through io.net, where it exists as a plug-in. Either way, it is GPUs doing the work, so how it is done matters little. More forward-looking was its move to Solana while Solana was undervalued; Solana later proved better suited to the high-performance demands of rendering and similar networks.

Second, io.net has taken a brute-force scaling route, aggressively growing its GPU count. Its official website currently lists 180,000 GPUs, which puts it in the first tier of decentralized computing power projects, an order of magnitude ahead of its competitors. In terms of scalability, io.net focuses on AI inference, with AI training as a side line.

Strictly speaking, AI training is not well suited to distributed deployment. Even lightweight LLMs have a substantial absolute number of parameters, and centralized computing is more cost-effective. Where Web3 meets AI training, the focus is more on data privacy and encrypted computation, such as ZK and FHE. Web3 has great potential in AI inference: on the one hand, inference places relatively low demands on GPU performance and can tolerate some loss; on the other hand, inference is closer to the application side, so incentives from the user's perspective are more meaningful.

Filecoin, another project built on mining for tokens, has also reached a GPU-sharing agreement with io.net and will connect 1,000 of its own GPUs to io.net. This can be seen as a collaboration between an old hand and a newcomer; I wish them both luck.

Next is Gensyn, which has not launched yet, so this can only be an armchair assessment. Because the network is still in the early stages of construction, it has not announced its GPU count. However, its main use case is AI training, so I suspect it will need a large number of high-performance GPUs, at least more than Render Network. Unlike AI inference, AI training competes directly with cloud vendors, so the mechanism design will be more complicated.

Specifically, Gensyn needs to guarantee that models are genuinely trained, while relying heavily on off-chain computation to improve training efficiency. Its model verification and anti-cheating system therefore depends on a game among multiple roles:

Submitters: Task initiators who ultimately pay for the training costs.

Solvers: Train models and provide proof of effectiveness.

Verifiers: Verify the validity of the model.

Whistleblowers: Check the verifiers' work.
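
The division of labor among these four roles can be illustrated with a toy spot-check protocol in the optimistic spirit of the mechanism. This is not Gensyn's actual protocol: "training" here is a deterministic hash chain standing in for training steps, the Verifier recomputes only a random sample of steps, and the Whistleblower re-checks everything to catch a Verifier that approved bad work. All names are hypothetical, and staking, payments and slashing are omitted.

```python
import hashlib
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple


def train_step(state: str, step: int) -> str:
    """Stand-in for one deterministic training step: next state = hash(state, step)."""
    return hashlib.sha256(f"{state}:{step}".encode()).hexdigest()


@dataclass(frozen=True)
class Task:
    """Posted (and paid for) by the Submitter."""
    seed: str
    steps: int


def solve(task: Task, cheat_from: Optional[int] = None) -> List[str]:
    """Solver: runs the steps and returns every checkpoint as its proof trail.

    If cheat_from is set, the Solver skips real work from that step on
    and fills in fake checkpoints.
    """
    trail = [task.seed]
    for i in range(task.steps):
        if cheat_from is not None and i >= cheat_from:
            trail.append("0" * 64)
        else:
            trail.append(train_step(trail[-1], i))
    return trail


def step_ok(trail: List[str], i: int) -> bool:
    return train_step(trail[i], i) == trail[i + 1]


def verify(task: Task, trail: List[str], samples: int,
           rng: random.Random) -> Tuple[bool, List[int]]:
    """Verifier: recompute a random sample of steps instead of the whole run."""
    checked = rng.sample(range(task.steps), k=min(samples, task.steps))
    return all(step_ok(trail, i) for i in checked), checked


def whistleblow(task: Task, trail: List[str], verdict: bool) -> bool:
    """Whistleblower: recheck every step; True means the Verifier's verdict was wrong."""
    truly_valid = all(step_ok(trail, i) for i in range(task.steps))
    return truly_valid != verdict


rng = random.Random(0)
task = Task(seed="init", steps=50)
honest = solve(task)
cheater = solve(task, cheat_from=10)

accepted, _ = verify(task, honest, samples=5, rng=rng)
print("honest run accepted:", accepted)
accepted, checked = verify(task, cheater, samples=5, rng=rng)
print("cheating run accepted:", accepted, "after checking steps", sorted(checked))
print("lazy verifier who approved the cheat is caught:", whistleblow(task, cheater, verdict=True))
```

A spot check catches cheating only with some probability; here 40 of the 50 checkpoints are fake, so five samples almost always hit one, and the Whistleblower acts as the backstop for the rare miss or the dishonest Verifier. The cost of this safety is exactly the architectural complexity the text points to.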

Overall, it works like PoW mining combined with an optimistic proof mechanism, and the architecture is very complex. Moving computation off-chain may save costs, but the architectural complexity adds operating costs of its own. At a time when decentralized computing power is focused mainly on AI inference, I also wish Gensyn luck.

Finally, there is Akash, another veteran that started at roughly the same time as Render Network. Akash focused on decentralizing CPUs, while Render Network initially focused on decentralizing GPUs. Unexpectedly, after the AI boom, both moved into GPU + AI computing. The difference is that Akash puts more emphasis on inference.

Akash's opportunity this year is that it has set its sights on the idle mining farms left behind by the Ethereum upgrade. Idle GPUs can not only be listed on Xianyu (a Chinese second-hand marketplace) as "lightly used by a female college student", but can now also be put to work on AI. Either way, it is all a contribution to human civilization.

One advantage of Akash is that its tokens are essentially fully circulating, which is natural for such an old project, and it actively uses the staking system common in PoS. The team, however, seems rather laid-back and lacks io.net's youthful energy.

There are also THETA for edge cloud computing, Phoenix with specialized solutions for AI computing power, and computing upstarts old and new such as Bittensor and Ritual. Space does not allow me to cover them all, mainly because I could not find figures such as GPU counts for some of them.

Conclusion

Throughout the history of computing, decentralized versions of every computing paradigm could be built; the only regret is that none of them had any impact on mainstream applications. Today's Web3 computing projects are mostly the industry entertaining itself. Near's founder went to GTC as an author of the Transformer paper, not as the founder of Near.

More pessimistically, the incumbents of the cloud computing market are too powerful. Can io.net replace AWS? With enough GPUs, it really is possible; after all, AWS has long used open-source Redis as an underlying component.

In a sense, open source and decentralization do not carry equal weight. Decentralized projects are overly concentrated in financial areas such as DeFi, and AI may be a key path into the mainstream market.