When people are confident that their data will be protected by encryption and become willing to contribute it, we may finally achieve an AGI breakthrough.

Written by hmalviya9

Compiled by: Frank, Foresight News

Editor's note: The paper "Attention Is All You Need" was published in 2017 and has been cited more than 110,000 times so far. It introduced the Transformer architecture and its attention mechanism, is one of the origins of today's large-model technology represented by ChatGPT, and is widely used in many AI technologies that may change the world, such as Sora and AlphaFold.

The research paper "Attention Is All You Need" completely changed the course of modern artificial intelligence (AI). In this article, I will dive into the Transformer model and the future of AI.

On June 12, 2017, eight Google engineers published a research paper titled "Attention Is All You Need." It introduced a neural network architecture that would go on to change the future of modern AI.

At the GTC conference on March 21, 2024, NVIDIA founder Jensen Huang held a panel discussion with the paper's authors and thanked them for introducing the Transformer architecture that made modern AI possible. Surprisingly, one of the eight authors, Illia Polosukhin, is the co-founder of NEAR.

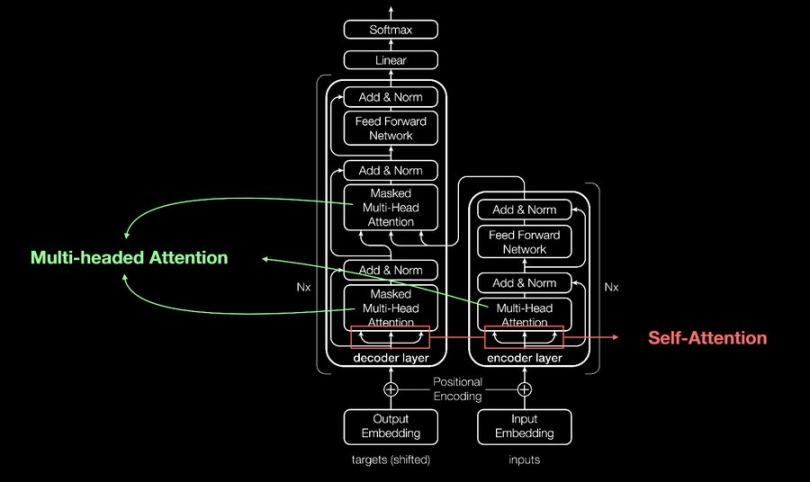

What is a Transformer?

A Transformer is a type of neural network.

What is a neural network? It is inspired by the structure and function of the human brain and processes information through a large number of interconnected artificial neurons, but it is not a complete replica of the human brain.
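To make "interconnected artificial neurons" concrete, here is a minimal sketch of one common textbook form of an artificial neuron: it takes a weighted sum of its inputs, adds a bias, and passes the result through an activation function (a sigmoid here). The weights below are hypothetical values chosen for illustration; in a real network they are learned from data.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus bias, squashed by a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weight_rows, biases):
    """A layer is several neurons reading the same inputs; their outputs feed the next layer."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical weights for a tiny network: 2 inputs -> 2 hidden neurons -> 1 output neuron.
hidden = layer([1.0, 0.5], [[0.4, -0.6], [0.3, 0.8]], [0.0, -0.1])
output = neuron(hidden, [1.2, -0.7], 0.05)
print(round(output, 4))
```

Connecting neurons this way, layer after layer, is what lets a network represent complex patterns; training adjusts the weights and biases.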

To put it simply, the human brain is like the Amazon rainforest: it has many different areas and many pathways connecting them. Neurons act as the connectors along these pathways, sending signals to and receiving signals from any part of the rainforest, so each connection is itself a pathway linking two different brain areas.

This gives our brain a very powerful ability to learn: it can learn quickly, recognize patterns, and produce accurate output. Neural networks such as the Transformer try to achieve the same learning ability, but by my rough estimate their current capability is still less than 1% of the human brain's.

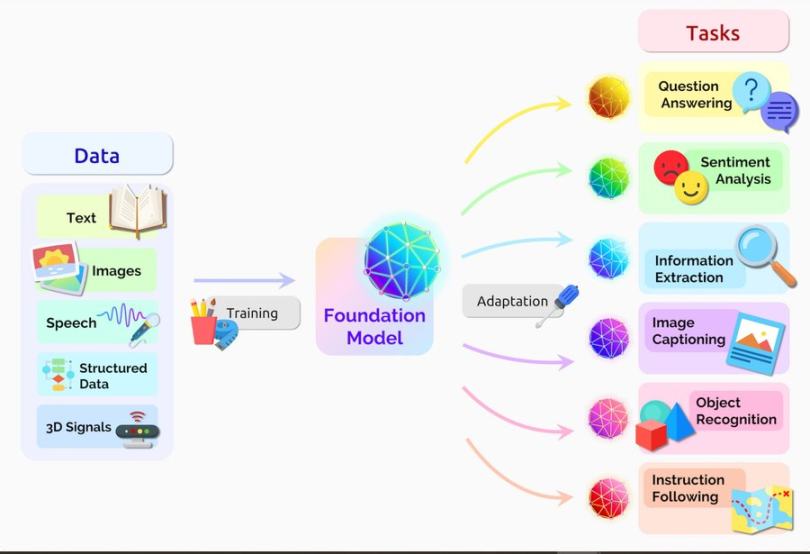

Transformers have driven impressive progress in generative AI in recent years. Looking back at the evolution of modern artificial intelligence, early consumer AI consisted mainly of Siri and other voice and speech-recognition applications.

These applications were built using recurrent neural networks (RNNs), which process a sequence one element at a time. Transformers address several of the RNN's limitations by introducing a self-attention mechanism that lets the model analyze all parts of a sequence simultaneously, capturing long-range dependencies and context.
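The "analyze all parts of a sequence simultaneously" idea can be sketched as scaled dot-product self-attention, the core operation described in "Attention Is All You Need". This minimal pure-Python version covers a single attention head with no masking; the toy inputs and identity projection matrices are assumptions for illustration, not learned weights.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.
    x: seq_len x d_model token vectors; w_q, w_k, w_v: d_model x d_k projections."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(w_k[0])
    # Every token is scored against every other token in one step (seq_len x seq_len),
    # unlike an RNN, which would walk through the sequence one position at a time.
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # Each output row is a weighted mix of all value vectors in the sequence.
    return matmul(weights, v), weights

# Hypothetical 3-token sequence with 2-dimensional embeddings and identity projections.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, attn = self_attention(x, identity, identity, identity)
```

Because the attention weights for a token cover the whole sequence at once, a dependency between the first and last tokens costs no more to capture than one between neighbors.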

We are still very early in the Transformer's innovation cycle. The Transformer already has several derivatives, such as XLNet, BERT, and GPT.

GPT is the best known of these, but its ability to predict events is still limited.

When large language models (LLMs) are able to predict events based on past data and patterns, it will mark the next major leap forward in modern AI and accelerate our journey toward artificial general intelligence (AGI).

One model aimed at this kind of predictive power is the Temporal Fusion Transformer (TFT), which forecasts future values from several kinds of input data and can also explain the predictions it makes.
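Part of how TFT explains its predictions is a variable-selection step: at each time step it computes softmax weights over the input variables and combines the transformed variables using those weights, so the weights double as per-variable importance scores. The sketch below is a heavily simplified, assumption-laden version of that idea only. It replaces TFT's gated residual networks with plain linear maps and omits the LSTM encoder, temporal attention, and quantile outputs. The feature names and all weights are hypothetical.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def variable_selection(features, score_weights, embed_weights):
    """Simplified TFT-style variable selection for a single time step.

    features:      variable name -> scalar input value
    score_weights: variable name -> weights over ALL inputs that score this variable
                   (linear stand-in for TFT's gated residual network on the flattened inputs)
    embed_weights: variable name -> vector that embeds that variable's scalar value
                   (linear stand-in for TFT's per-variable gated residual network)
    Returns (combined representation, per-variable selection weights).
    """
    names = list(features)
    values = [features[n] for n in names]
    scores = [sum(w * x for w, x in zip(score_weights[n], values)) for n in names]
    weights = dict(zip(names, softmax(scores)))
    dim = len(embed_weights[names[0]])
    combined = [
        sum(weights[n] * features[n] * embed_weights[n][i] for n in names)
        for i in range(dim)
    ]
    return combined, weights

# Hypothetical inputs and weights for one time step of a token-price series.
features = {"price": 1.2, "volume": 0.4, "day_of_week": 0.5}
score_weights = {
    "price": [1.0, 0.2, 0.0],
    "volume": [0.1, 0.5, 0.0],
    "day_of_week": [0.0, 0.0, 0.1],
}
embed_weights = {"price": [0.5, 1.0], "volume": [1.0, -0.5], "day_of_week": [0.2, 0.2]}

combined, importance = variable_selection(features, score_weights, embed_weights)
# `importance` sums to 1 and indicates how much each variable contributed at this step.
print({name: round(w, 3) for name, w in importance.items()})
```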

Beyond forecasting, TFT can also be applied to blockchains. By encoding specific rules into the model, TFT could automatically manage the consensus process efficiently, speed up block production, reward honest validators, and punish malicious ones.

In essence, a blockchain network could pay larger block rewards to validators with higher reputation scores, where the score is built from their voting history, block-proposal history, slashing history, staked amount, activity, and a number of other parameters.
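A reputation-weighted reward of this kind could be sketched as a weighted score over the listed factors, with slashing acting as a penalty multiplier. Everything below is a hypothetical illustration: the field names, the normalization to [0, 1], the weights, the per-slash penalty, and the "up to 2x base reward" rule are assumptions, not BasedAI's or any network's actual formula. A TFT-based system would presumably learn such weightings rather than hard-code them.

```python
from dataclasses import dataclass

@dataclass
class ValidatorStats:
    # Hypothetical fields, each normalized to [0, 1] by the caller (except slash_count).
    vote_participation: float  # share of eligible votes actually cast
    proposal_success: float    # share of assigned blocks actually proposed
    slash_count: int           # number of times this validator was slashed
    stake_share: float         # validator stake / total stake
    uptime: float              # fraction of time online

# Hypothetical weights (they sum to 1.0, so a perfect record scores 1.0).
WEIGHTS = {"vote": 0.3, "proposal": 0.3, "stake": 0.2, "uptime": 0.2}
SLASH_PENALTY = 0.25  # hypothetical: each slash removes a quarter of the score

def reputation(s: ValidatorStats) -> float:
    base = (WEIGHTS["vote"] * s.vote_participation
            + WEIGHTS["proposal"] * s.proposal_success
            + WEIGHTS["stake"] * s.stake_share
            + WEIGHTS["uptime"] * s.uptime)
    # Four or more slashes drive the score to zero.
    return base * max(0.0, 1.0 - SLASH_PENALTY * s.slash_count)

def block_reward(base_reward: float, s: ValidatorStats) -> float:
    """Higher reputation earns a larger reward: from 1x the base (score 0) up to 2x (score 1)."""
    return base_reward * (1.0 + reputation(s))

honest = ValidatorStats(0.99, 1.0, 0, 0.1, 0.999)
slashed = ValidatorStats(0.99, 1.0, 2, 0.1, 0.999)
print(block_reward(2.0, honest), block_reward(2.0, slashed))
```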

The consensus mechanism of a public chain is essentially a game among validators: more than two-thirds of them must agree on who will create the next block. Disagreements and disputes can arise along the way, which is one factor behind the inefficiency of public chains such as Ethereum.
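The "more than two-thirds" rule can be sketched as a simple tally. This simplified version counts each validator equally and takes votes as a hypothetical mapping from validator ID to the candidate it supports; real proof-of-stake networks typically weight votes by stake and run multi-round protocols, which this sketch does not model.

```python
from collections import Counter
from typing import Dict, Optional

def has_supermajority(votes_for: int, total_validators: int) -> bool:
    """True if strictly more than two-thirds of validators agree.
    Integer arithmetic (3 * votes > 2 * total) avoids floating-point rounding."""
    return 3 * votes_for > 2 * total_validators

def tally(votes: Dict[str, str], total_validators: int) -> Optional[str]:
    """Return the block proposer a supermajority agreed on, or None if nobody reached it."""
    for candidate, n in Counter(votes.values()).items():
        if has_supermajority(n, total_validators):
            return candidate
    return None

# Hypothetical round: 3 of 4 validators back v1, which clears the two-thirds bar.
print(tally({"a": "v1", "b": "v1", "c": "v1", "d": "v2"}, 4))
```

Note that exactly two-thirds (for example 66 of 99) is not enough; a split vote returns None, which in practice means another round and more delay.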

Used as a consensus mechanism, TFT could improve efficiency by shortening block times and rewarding validators according to their block-production reputation. For example, BasedAI, which applies the TFT model to its consensus process, will use the model to allocate token issuance between validators and other network participants.
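Allocating issuance between validators and other participants might look like the sketch below: a fixed share of new tokens goes to a validator pool split pro rata by reputation, and the rest goes to a participant pool. The 60/40 split, the function name, and the fallback to an even split when no reputation data exists are hypothetical choices for illustration; the source does not specify how BasedAI's model divides issuance.

```python
from typing import Dict, Tuple

def allocate_issuance(issuance: float,
                      reputations: Dict[str, float],
                      validator_share: float = 0.6) -> Tuple[Dict[str, float], float]:
    """Split newly issued tokens into per-validator payouts and a participant pool.
    validator_share (hypothetical default 0.6) is the fraction reserved for validators."""
    if not reputations:
        return {}, issuance  # no validators: everything goes to participants
    validator_pool = issuance * validator_share
    participant_pool = issuance - validator_pool
    total_rep = sum(reputations.values())
    if total_rep <= 0:
        # No reputation signal yet: split the validator pool evenly.
        payouts = {v: validator_pool / len(reputations) for v in reputations}
    else:
        # Pro rata: a validator with 3x the reputation receives 3x the tokens.
        payouts = {v: validator_pool * r / total_rep for v, r in reputations.items()}
    return payouts, participant_pool

payouts, participants = allocate_issuance(100.0, {"a": 3.0, "b": 1.0})
print(payouts, participants)
```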

BasedAI also proposes using fully homomorphic encryption (FHE) so that developers can host privacy-preserving large language models, which it calls Zk-LLMs, on its decentralized AI infrastructure, "Brains". Integrating FHE into large language models can protect users' private data when they choose to enable personalized AI services.
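The core idea behind homomorphic encryption, computing on data while it stays encrypted, can be illustrated with the Paillier cryptosystem. Paillier is only additively homomorphic, not fully homomorphic: FHE schemes also support multiplication on ciphertexts and are far more expensive. This is a toy sketch with tiny hard-coded primes, chosen only so the arithmetic is easy to follow; it is insecure and is not BasedAI's scheme.

```python
import math

# Toy Paillier keypair with tiny primes: illustration only, NOT secure.
P, Q = 17, 19
N = P * Q                                           # public modulus
N2 = N * N
G = N + 1                                           # common simple choice of generator
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)   # private: lcm(p-1, q-1)
MU = pow(LAM, -1, N)                                # private: modular inverse (Python 3.8+)

def encrypt(m: int, r: int) -> int:
    """c = g^m * r^N mod N^2, where r is a random value coprime to N."""
    assert 0 <= m < N and math.gcd(r, N) == 1
    return (pow(G, m, N2) * pow(r, N, N2)) % N2

def decrypt(c: int) -> int:
    x = pow(c, LAM, N2)
    return ((x - 1) // N) * MU % N  # L(x) = (x - 1) / N, then multiply by mu

def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the hidden plaintexts (mod N), without decrypting."""
    return (c1 * c2) % N2

# Fixed r values for reproducibility; real use draws r at random for every encryption.
total = add_encrypted(encrypt(42, 5), encrypt(100, 7))
print(decrypt(total))  # whoever computed `total` never saw 42 or 100
```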

When people are willing to contribute data with the confidence that it will be encrypted and completely private, perhaps we will achieve a breakthrough in artificial general intelligence (AGI). This gap is being filled by privacy-focused technologies such as Nillion Network's Blind Computation, zero-knowledge machine learning (ZkML), and fully homomorphic encryption (FHE).
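Blind computation is a family of techniques for computing on data that no single party can see. A standard building block used in secure multi-party computation is additive secret sharing, sketched below: a value is split into random shares that individually reveal nothing, and parties can add shared values locally. This is a generic textbook illustration under our own assumptions (field modulus, party count), not Nillion's actual protocol, and it says nothing about how ZkML works.

```python
import secrets

PRIME = 2**61 - 1  # field modulus (a Mersenne prime), chosen for illustration

def share(secret: int, n_parties: int) -> list:
    """Split `secret` into n shares that sum to it mod PRIME.
    Any n-1 shares together are uniformly random and reveal nothing about the secret."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares

def reconstruct(shares: list) -> int:
    return sum(shares) % PRIME

def add_shared(a_shares: list, b_shares: list) -> list:
    """Each party adds its own two shares locally; no party ever learns a or b."""
    return [(a + b) % PRIME for a, b in zip(a_shares, b_shares)]

# Two users' private values, each split across three hypothetical compute nodes.
a, b = share(30, 3), share(12, 3)
print(reconstruct(add_shared(a, b)))
```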

However, all of these privacy-focused technologies require large amounts of computing resources, so they remain at an early stage of adoption.