Written by: @rargulati, Martin Shkreli

Compiled by: Vernacular Blockchain

@ionet is a decentralized computing network built on Solana, in the DePIN and AI sectors. It has raised funding from Multicoin Capital and Moonhill Capital; the amount has not been disclosed.

io.net is a Solana-based decentralized cloud platform for training machine learning models on GPUs. It offers instant, permissionless access to a global network of GPUs and CPUs. The platform reports 25,000 nodes and uses what it describes as revolutionary technology to cluster GPUs into a single cloud, cutting compute costs by up to 90% for large-scale AI startups.

Since it is built on Solana and belongs to the currently hot DePIN and AI sectors, let's look at how two people on X analyzed its GPU count and the problems it faces:

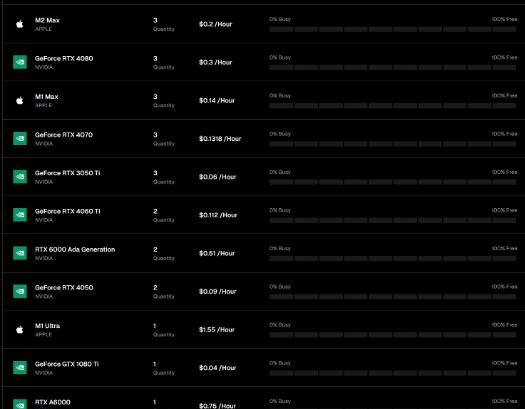

How many GPUs does @ionet have?

@MartinShkreli on X examined four possible answers:

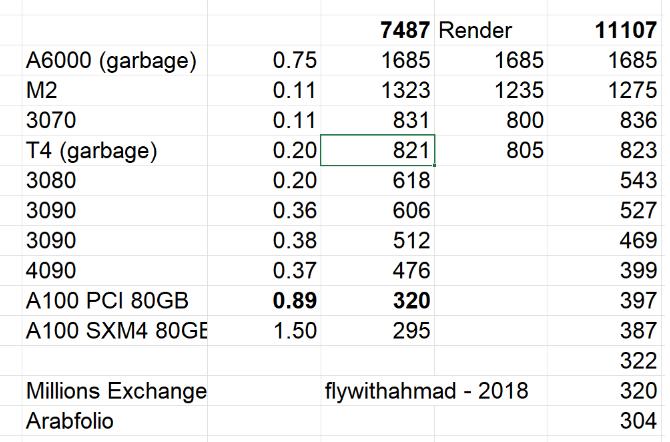

1) 7648 (when trying at deploy time)

2) 11107 (manually calculated from their explorer)

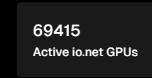

3) 69415 (unexplained number, unchanged?)

4) 564306 (No support, transparency, or substantive information here. Not even CoreWeave or AWS have this much)

I think the real answer is actually 320.

Why 320?

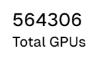

Look at the Explorer page with me. All the GPUs are listed as "free" (idle), yet you still can't rent one. If they're idle, why can't you rent them? Their owners want to get paid, right?

There are only 320 you can actually rent.
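The gap Martin describes is between GPUs that are *listed* as free and GPUs that can actually be *booked*. A minimal sketch of that counting argument follows, assuming hypothetical node records where `status` is what an explorer displays and `rentable` is whether a deployment can really reserve the GPU. The `GpuNode` type, its fields, and the toy data are invented for illustration; they are not io.net's API or real explorer data.

```python
from dataclasses import dataclass


@dataclass
class GpuNode:
    # Hypothetical record for illustration; not io.net's actual schema.
    node_id: str
    gpu_count: int
    status: str      # what the explorer displays, e.g. "free" or "busy"
    rentable: bool   # whether a deployment can actually book this node


def listed_gpus(nodes):
    """Count GPUs the explorer advertises as free (idle)."""
    return sum(n.gpu_count for n in nodes if n.status == "free")


def rentable_gpus(nodes):
    """Count GPUs that are shown as free AND can actually be booked."""
    return sum(n.gpu_count for n in nodes if n.status == "free" and n.rentable)


if __name__ == "__main__":
    # Toy data, not real figures.
    toy = [
        GpuNode("a", 8, "free", False),
        GpuNode("b", 4, "free", True),
        GpuNode("c", 2, "busy", False),
        GpuNode("d", 1, "free", False),
    ]
    print(listed_gpus(toy), rentable_gpus(toy))  # 13 4
```

Under Martin's argument, only the second count reflects real supply; the first is just the headline figure.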

If you can't rent them, they're not real. Even if you can rent them, it adds...

@rargulati said Martin was absolutely right to question this. Decentralized AI protocols have the following problems:

1) There is no cost- or time-efficient way to do useful online training on highly distributed, general-purpose hardware. That would require a major breakthrough I am not yet aware of. This is why the FANG companies spend more than all the liquidity in crypto on expensive hardware, network connectivity, datacenter maintenance, and so on.

2) Inference on general-purpose hardware sounds like a good use case, but hardware and software are both evolving so quickly that a general-purpose decentralized approach performs poorly on most key use cases. See OpenAI's recent delays and Groq's growth.

3) Run inference on correctly routed requests, cluster GPUs located close to where the requests come from, and use crypto incentives to lower the cost of capital, compete with AWS, and get enthusiasts to participate. It sounds like a good idea, but with so many suppliers and GPU spot-market liquidity so fragmented, no one is aggregating enough supply to serve people running real businesses.

4) The software routing algorithms have to be extremely good; otherwise consumer-grade, general-purpose hosts cause a lot of operational problems. Network interruptions and congestion control aside, if a host's owner decides to play a game or run anything that uses WebGL, that host may suffer a service outage. An unpredictable supply side creates operational headaches and uncertainty for requesters on the demand side.
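Points 3 and 4 both depend on routing. The sketch below illustrates, under simplifying assumptions, the basic decision a router has to make: send a request to the nearest GPU host while skipping hosts that are not trustworthy right now. The `Host` fields, the 0.99 uptime threshold, and the example latencies are all hypothetical, and a real router would also have to deal with the network interruptions and congestion control mentioned above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Host:
    # Hypothetical consumer GPU host; fields are illustrative only.
    host_id: str
    latency_ms: float    # measured round-trip time to the requester
    uptime: float        # fraction of recent health checks that passed
    busy_locally: bool   # e.g. the owner started a game or a WebGL app


def route(hosts: List[Host], min_uptime: float = 0.99) -> Optional[Host]:
    """Return the lowest-latency host that looks reliable right now, or None."""
    candidates = [
        h for h in hosts
        if h.uptime >= min_uptime and not h.busy_locally
    ]
    if not candidates:
        # No trustworthy supply: the demand side experiences an outage.
        return None
    return min(candidates, key=lambda h: h.latency_ms)


hosts = [
    Host("near-gamer", 5.0, 0.999, True),    # owner is gaming, skipped
    Host("near-flaky", 8.0, 0.90, False),    # too unreliable, skipped
    Host("far-stable", 40.0, 0.995, False),  # chosen despite higher latency
]
print(route(hosts).host_id)  # far-stable
```

The example mirrors @rargulati's point: the closest host is often unusable, so routing quality hinges on tracking host reliability, which is exactly what consumer hardware makes hard to predict.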

These are hard problems that will take a long, long time to solve. Everything currently being pitched is just a gimmick.