Author: Azi.eth.sol | zo.me | *acc

Compiled by: TechFlow

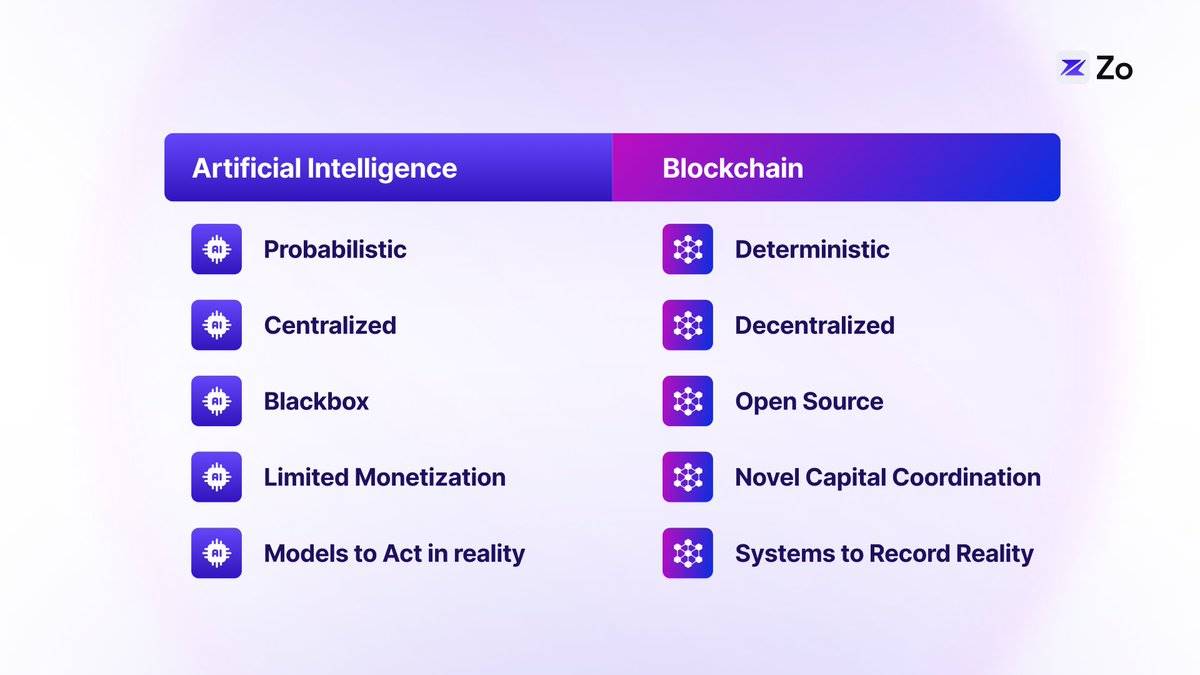

Artificial intelligence and blockchain technology are two powerful forces transforming the world. AI enhances human intelligence through machine learning and neural networks, while blockchain brings verifiable digital scarcity and new models of trustless collaboration. As the two technologies converge, they lay the foundation for the next generation of the internet: an era in which autonomous intelligent agents interact with decentralized systems. This "network of intelligent agents" introduces a new class of digital residents: AI agents that can navigate, negotiate, and transact autonomously.

The Evolution of the Web

To understand the future direction, we need to review the evolution of the web and its key stages, each with its own capabilities and architectural patterns.

The first two generations of the web focused on information dissemination, while the latter two focus on information augmentation. Web 3.0 achieved data ownership through tokens, and Web 4.0 adds intelligence through large language models (LLMs).

From LLMs to Intelligent Agents: A Natural Evolution

Large language models have made leaps in machine intelligence. As dynamic pattern-matching systems, they use probabilistic computation to turn vast knowledge into contextual understanding. Their true potential is unlocked, however, when they are built into intelligent agents: they evolve from mere information processors into goal-oriented entities capable of perception, reasoning, and action. This transition creates an emergent intelligence capable of sustained, meaningful collaboration through language and action.

The concept of "intelligent agents" brings a new perspective to human-AI interaction, transcending the limitations and negative perceptions of traditional chatbots. This is not just a terminological change, but a fundamental rethinking of how AI systems can operate autonomously and collaborate effectively with humans. Intelligent agent workflows can form markets around specific user needs.

The network of intelligent agents is not just an added layer of intelligence; it fundamentally changes how we interact with digital systems. Previous web architectures relied on static interfaces and predefined user paths, while the network of intelligent agents introduces a dynamic runtime architecture, where computation and interfaces can adapt in real-time to user needs and intentions.

Traditional websites are the basic units of the current internet, providing fixed interfaces for users to read, write, and interact with information through predefined paths. While effective, this model limits users to interfaces designed for general cases, rather than personalized needs. The network of intelligent agents, through context-aware computing, adaptive interface generation, and real-time information retrieval technologies like Retrieval-Augmented Generation (RAG), overcomes these limitations.

Consider how TikTok has changed content consumption by dynamically adjusting the personalized content stream to user preferences. The network of intelligent agents extends this concept to the entire interface generation. Users no longer browse fixed web page layouts, but interact with dynamically generated interfaces that can predict and guide their next actions. This transition from static websites to dynamic, agent-driven interfaces marks a fundamental shift in how we interact with digital systems - from a navigation-based model to an intent-based interaction model.

The Composition of Intelligent Agents

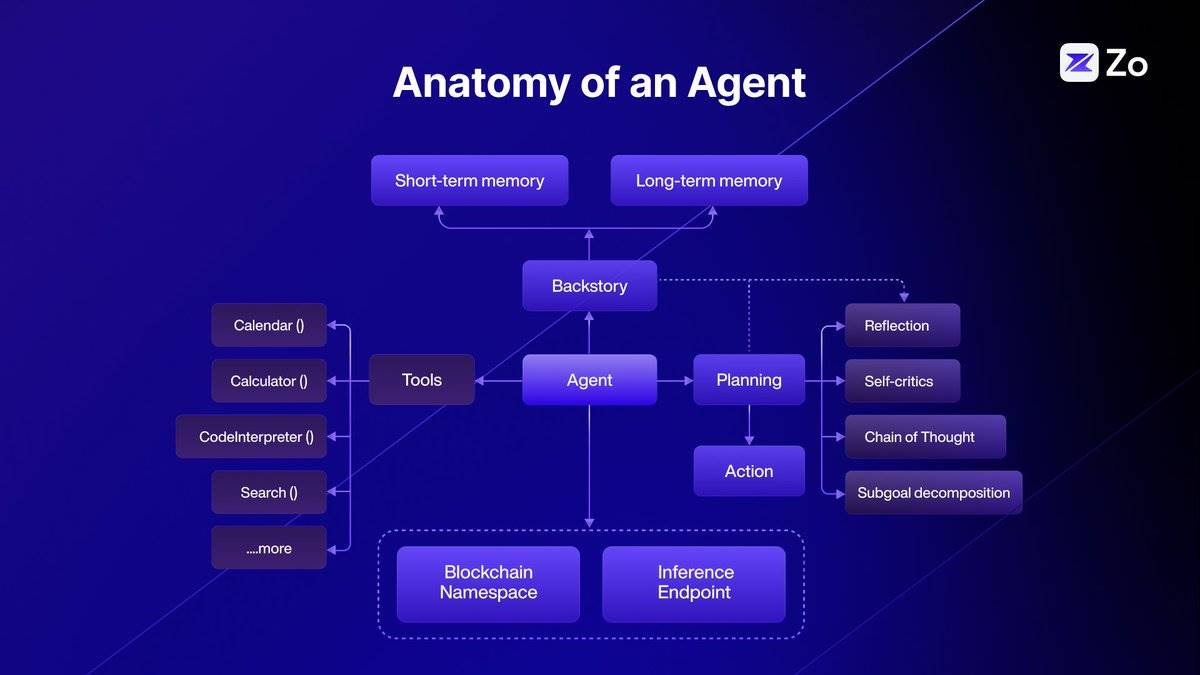

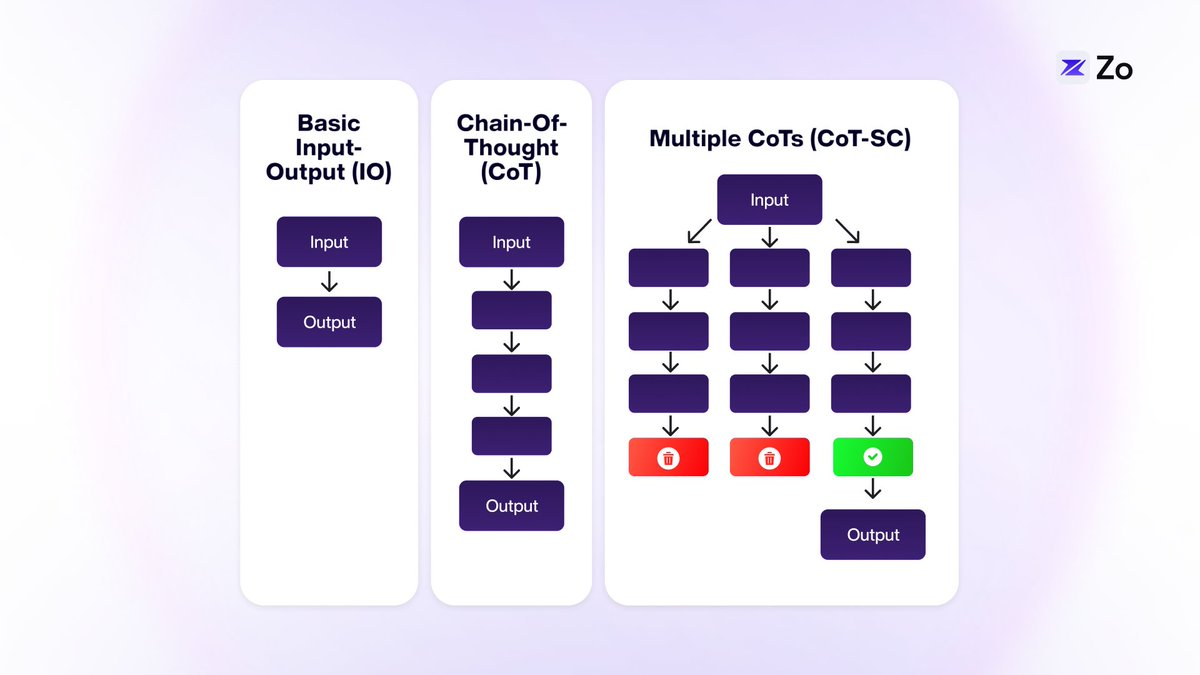

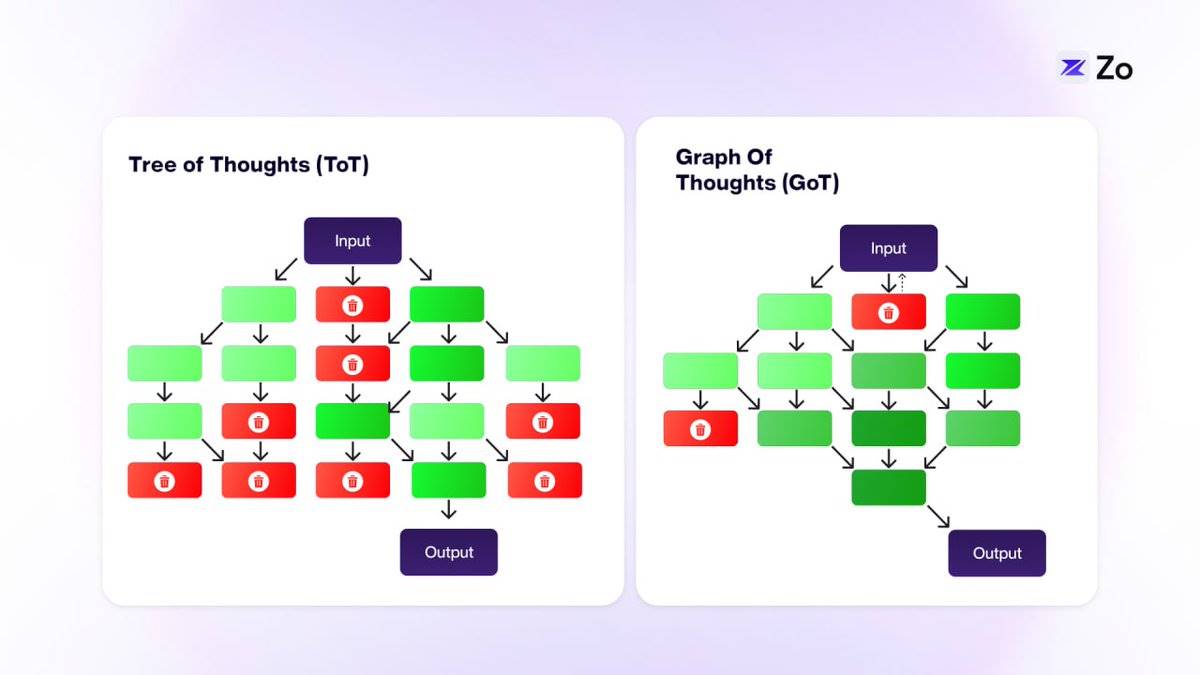

The architecture of intelligent agents is an active area of research and development. To enhance the reasoning and problem-solving capabilities of intelligent agents, new approaches are emerging, such as Chain-of-Thought (CoT), Tree-of-Thought (ToT), and Graph-of-Thought (GoT) technologies, which aim to simulate more nuanced and human-like cognitive processes to improve the capabilities of Large Language Models (LLMs) in handling complex tasks.

Chain-of-Thought (CoT) prompting helps LLMs perform logical reasoning by breaking down complex tasks into smaller steps. This method is particularly useful for problems requiring logical reasoning, such as writing Python scripts or solving mathematical equations.

Tree-of-Thought (ToT) builds upon CoT by adding a tree-like structure, allowing the exploration of multiple independent thought paths. This enhancement enables LLMs to tackle more complex tasks. In ToT, each "thought" is connected only to its immediate neighbors (its parent and its children), which, while more flexible than CoT, still limits the cross-pollination of ideas.
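To make the difference between a single chain and a tree of thoughts concrete, here is a minimal sketch in Python. It is not taken from any particular ToT implementation. The functions `propose` and `score` are hypothetical stand-ins for LLM calls that generate and evaluate candidate thoughts, and the toy task (reach a target number by adding 3 or doubling) is an assumption chosen only for illustration.

```python
from typing import List, Tuple

Thought = Tuple[int, ...]  # a path of intermediate values


def propose(thought: Thought) -> List[Thought]:
    """Hypothetical stand-in for an LLM proposing next steps: add 3 or double."""
    last = thought[-1]
    return [thought + (last + 3,), thought + (last * 2,)]


def score(thought: Thought, target: int) -> int:
    """Hypothetical stand-in for an LLM evaluator: closer to the target is better."""
    return -abs(target - thought[-1])


def tree_of_thought(start: int, target: int, beam: int = 2, depth: int = 5) -> Thought:
    """Breadth-first search over thoughts, keeping the `beam` best paths per level."""
    frontier: List[Thought] = [(start,)]
    for _ in range(depth):
        # Expand every surviving path, then keep only the most promising ones.
        candidates = [child for t in frontier for child in propose(t)]
        candidates.sort(key=lambda t: score(t, target), reverse=True)
        frontier = candidates[:beam]
        if frontier[0][-1] == target:
            break
    return frontier[0]


if __name__ == "__main__":
    print(tree_of_thought(1, 14))          # keeps two paths alive per level
    print(tree_of_thought(1, 14, beam=1))  # a single greedy chain
```

With `beam=1` the search degenerates into one greedy chain of steps, which in this toy task overshoots the target. Keeping two paths alive lets a branch that looked slightly worse at one level turn out to be the one that succeeds.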

Graph-of-Thought (GoT) further expands this concept, combining classical data structures with LLMs, allowing any "thought" to be connected to others in a graph structure. This interconnected network of thoughts more closely resembles human cognition.
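The structural difference can be sketched with a plain dictionary. The thought names below are invented for illustration, and the example is restricted to an acyclic graph so that Python's standard `graphlib` module can order it. A graph of thoughts could in principle also contain refinement loops, which this sketch does not cover.

```python
from graphlib import TopologicalSorter
from typing import Dict, Set

# Each thought maps to the set of thoughts it builds on (its predecessors).
# All names here are hypothetical.
thoughts: Dict[str, Set[str]] = {
    "problem": set(),
    "approach_a": {"problem"},
    "approach_b": {"problem"},
    "combined": {"approach_a", "approach_b"},  # aggregation: two parents
    "answer": {"combined", "approach_a"},
}


def is_tree(graph: Dict[str, Set[str]]) -> bool:
    """A chain or tree allows at most one predecessor per thought."""
    return all(len(parents) <= 1 for parents in graph.values())


# An order in which the thoughts can be evaluated so that every thought
# comes after the thoughts it depends on.
order = list(TopologicalSorter(thoughts).static_order())

if __name__ == "__main__":
    print(is_tree(thoughts))
    print(order)
```

The `combined` node merges two independent lines of reasoning, which is exactly the kind of connection a chain or a tree cannot express.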

In most cases, the graph structure of GoT reflects the way humans think better than CoT or ToT does. In some situations, such as developing contingency plans or standard operating procedures, our thought patterns may resemble chains or trees, but these are special cases. Human thinking typically cross-pollinates between different ideas rather than proceeding linearly, which makes a graph the more suitable representation.

The graphical approach of GoT makes the exploration of ideas more dynamic and flexible, potentially enabling Large Language Models (LLMs) to be more creative and comprehensive in problem-solving. These graph-based recursive operations are just a step towards intelligent agent workflows. The next evolution is the coordination of multiple specialized intelligent agents to achieve specific goals. The strength of intelligent agents lies in their combinatorial capabilities.

Intelligent agents enable LLMs to achieve modularity and parallelization through multi-agent coordination.

Multi-Agent Systems

The concept of multi-agent systems is not new. It can be traced back to Marvin Minsky's "Society of Mind" theory, which posits that the collaboration of modular mental components can exceed the capabilities of a single, monolithic mind. ChatGPT and Claude are single-agent systems, while Mistral has expanded into mixture-of-experts (MoE) models. We believe that extending this concept to a network-of-agents architecture is the ultimate form of this intelligent topology.

From a bioinspired perspective, the human brain (which is essentially a conscious machine) exhibits a high degree of heterogeneity at the organ and cellular levels, unlike the uniform and predictable connections of billions of identical neurons in AI models. Neurons communicate through complex signaling involving neurotransmitter gradients, intracellular cascades, and various regulatory systems, making their functionality far more complex than simple binary states.

This suggests that in biology, intelligence does not solely depend on the quantity of components or the scale of training datasets. Instead, it arises from the complex interactions between diverse and specialized units, which is an inherently emulative process. Therefore, developing and coordinating millions of small models is more likely to bring cognitive architectural innovations, akin to multi-agent systems, than relying on a few large models alone.

Multi-agent system design offers several advantages over single-agent systems: improved maintainability, better understandability, and easier scalability. Even in cases where a single agent interface is required, placing it within a multi-agent framework can enhance the system's modularity, simplifying the process for developers to add or remove components as needed. Notably, a multi-agent architecture can even be an effective approach for building a single-agent system.

While Large Language Models (LLMs) have demonstrated remarkable capabilities, such as generating human-like text, solving complex problems, and handling diverse tasks, a single LLM agent may face limitations in practical applications.

Here, we explore five key challenges of single-agent systems and how a multi-agent design helps address each of them:

Reducing hallucinations through cross-verification: Single LLM agents often produce erroneous or nonsensical information, even after extensive training, as their outputs may appear plausible but lack factual grounding. Multi-agent systems can reduce the risk of errors by cross-verifying information, with specialized agents from different domains providing more reliable and accurate responses.
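One simple form of cross-verification is a majority vote. The sketch below assumes each agent is just a Python callable that maps a question to an answer string. In practice these would be calls to different models, and comparing free-text answers would need something more robust than the lowercase string match used here.

```python
from collections import Counter
from typing import Callable, List, Optional

Agent = Callable[[str], str]  # hypothetical interface: question in, answer out


def cross_verify(question: str, agents: List[Agent], quorum: float = 0.5) -> Optional[str]:
    """Return the answer that more than `quorum` of the agents agree on, else None."""
    answers = [agent(question).strip().lower() for agent in agents]
    answer, votes = Counter(answers).most_common(1)[0]
    return answer if votes / len(answers) > quorum else None


if __name__ == "__main__":
    # Stub agents standing in for independent models.
    agents = [lambda q: "Paris", lambda q: " paris", lambda q: "Lyon"]
    print(cross_verify("What is the capital of France?", agents))
```

Returning `None` when no answer reaches the quorum lets the caller escalate, for example by asking further agents, instead of passing on an unverified claim.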

Extending the context window through distributed processing: LLMs have a limited context window, making it difficult to handle long documents or dialogues. In a multi-agent framework, agents can share the processing tasks, each responsible for a portion of the context. Through mutual exchange, the agents can maintain coherence across the entire text, effectively expanding the context window.
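A rough sketch of this idea follows, under the assumption that each agent can only see `max_words` words at a time. The `summarize` function is a hypothetical placeholder for a model call (here it simply keeps the first few words of its chunk), and the final join stands in for the step where agents exchange their partial results.

```python
from typing import List


def split_into_chunks(text: str, max_words: int) -> List[str]:
    """Split a long text into pieces that each fit one agent's context window."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summarize(chunk: str, keep: int = 3) -> str:
    """Hypothetical stand-in for an agent summarizing its own chunk."""
    return " ".join(chunk.split()[:keep])


def distributed_summary(text: str, max_words: int) -> str:
    """Map: one agent per chunk. Reduce: combine the partial summaries."""
    partials = [summarize(chunk) for chunk in split_into_chunks(text, max_words)]
    return " | ".join(partials)


if __name__ == "__main__":
    document = " ".join(f"w{i}" for i in range(10))
    print(distributed_summary(document, max_words=4))
```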

Parallel processing for improved efficiency: A single LLM typically needs to process tasks sequentially, resulting in slower response times. A multi-agent system supports parallel processing, allowing multiple agents to complete different tasks simultaneously, thereby improving efficiency, accelerating response speed, and enabling enterprises to quickly respond to multiple queries.
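The following sketch uses Python's `asyncio` to show several agents working on different queries at once. The `agent` coroutine is hypothetical, and its `asyncio.sleep` call stands in for a network round trip to a model. Note that this is concurrency for I/O-bound work, not parallel computation on multiple cores.

```python
import asyncio
import time
from typing import List, Tuple


async def agent(name: str, query: str, delay: float) -> str:
    """Hypothetical agent: the sleep stands in for waiting on a model call."""
    await asyncio.sleep(delay)
    return f"{name}: handled {query!r}"


async def run_parallel(queries: List[str], delay: float = 0.1) -> List[str]:
    """Start one agent per query and wait for all of them together."""
    tasks = [agent(f"agent-{i}", q, delay) for i, q in enumerate(queries)]
    return await asyncio.gather(*tasks)  # results keep the order of `queries`


def timed_run(queries: List[str]) -> Tuple[List[str], float]:
    start = time.perf_counter()
    results = asyncio.run(run_parallel(queries))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = timed_run(["pricing", "shipping", "returns"])
    print(results)
    print(f"elapsed: {elapsed:.2f}s")  # close to one delay, not three
```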

Collaborative problem-solving for complex issues: A single LLM may face difficulties in solving complex problems that require diverse expertise. A multi-agent system, through collaboration, where each agent contributes its unique skills and perspectives, can more effectively address complex challenges and provide more comprehensive and innovative solutions.

Improving accessibility through resource optimization: Advanced LLMs require significant computational resources, which are costly and difficult to scale. A multi-agent framework optimizes resource utilization through task allocation, reducing the overall computational cost, making AI technology more economical and accessible to a wider range of organizations.

While multi-agent systems have clear advantages in distributed problem-solving and resource optimization, their true potential is realized in edge applications. As AI continues to advance, the synergy between multi-agent architectures and edge computing has formed a powerful combination, not only achieving collaborative intelligence but also enabling localization and efficient processing on numerous devices. This distributed AI deployment approach naturally extends the advantages of multi-agent systems, bringing specialized and collaborative intelligence closer to end-users.

Edge Intelligence

The pervasiveness of AI in the digital world is driving a fundamental change in computing architecture. As intelligence becomes embedded in various aspects of our daily digital interactions, we see a natural bifurcation of computing: specialized data centers handle complex reasoning and domain-specific tasks, while edge devices perform localized processing of personalized and context-sensitive queries. This shift towards edge inference is not just an architectural choice, but a necessary trend driven by multiple critical factors.

First, the massive volume of AI-driven interactions will overwhelm centralized inference providers, leading to unsustainable bandwidth demands and latency issues.

Second, edge processing enables real-time responsiveness, which is crucial for applications such as autonomous driving, augmented reality, and the Internet of Things.

Third, local inference protects user privacy by keeping sensitive data on personal devices.

Fourth, edge computing significantly reduces energy consumption and carbon emissions by reducing data transmission across networks.

Finally, edge inference supports offline functionality and resilience, ensuring AI capabilities remain available even when network connectivity is poor.

This distributed intelligence paradigm is not just an optimization of existing systems, but a new vision for how we deploy and use AI in an increasingly interconnected world.

Furthermore, we are experiencing a significant shift in the computational demands of large language models (LLMs). Over the past decade, the focus has been on the massive computational resources required to train these large models, but now we are entering an era where inference computation becomes the core. This change is particularly evident in the rise of intelligent AI systems, such as OpenAI's Q* breakthroughs, which demonstrate that dynamic reasoning requires substantial real-time computational resources.

Unlike training, which is a one-time investment in model development, inference computation is the ongoing process of agents reasoning, planning, and adapting to new environments. This transition from static model training to dynamic agent reasoning requires us to rethink our computing infrastructure, where edge computing is not only beneficial but also essential.

As this shift progresses, we are witnessing the emergence of a peer-to-peer edge inference market, with billions of connected devices, from smartphones to smart home systems, forming a dynamic computing network. These devices can seamlessly trade inference capacity, creating an organic market where computational resources flow to where they are most needed. The excess computing power of idle devices becomes a valuable resource that can be traded in real time, building a more efficient and resilient infrastructure than traditional centralized systems.
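How such a market might clear can be sketched with a simple price-priority matcher. Everything in this example is an assumption made for illustration: the `Ask` and `Bid` types, the device and agent names, and the rule that the highest bidder is served first from the cheapest available device. The article does not specify a matching mechanism.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Ask:
    """An idle device offering inference capacity (hypothetical type)."""
    device: str
    price: float  # price per inference unit
    units: int


@dataclass
class Bid:
    """An agent requesting inference capacity (hypothetical type)."""
    agent: str
    max_price: float
    units: int


def match(bids: List[Bid], asks: List[Ask]) -> List[Tuple[str, str, int, float]]:
    """Serve the highest bidders first, each from the cheapest devices it can afford."""
    by_price = sorted(asks, key=lambda a: a.price)
    remaining: Dict[str, int] = {a.device: a.units for a in by_price}
    trades = []
    for bid in sorted(bids, key=lambda b: b.max_price, reverse=True):
        needed = bid.units
        for ask in by_price:
            if needed == 0 or ask.price > bid.max_price:
                break  # filled, or every further ask is too expensive
            take = min(needed, remaining[ask.device])
            if take:
                trades.append((bid.agent, ask.device, take, ask.price))
                remaining[ask.device] -= take
                needed -= take
    return trades


if __name__ == "__main__":
    asks = [Ask("hub", 0.05, 10), Ask("phone", 0.02, 5)]
    bids = [Bid("B", 0.03, 4), Bid("A", 0.06, 8)]
    for trade in match(bids, asks):
        print(trade)
```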

This democratization of inference computation not only optimizes resource utilization but also creates new economic opportunities within the digital ecosystem, where every connected device can potentially become a micro-provider of AI capabilities. Therefore, the future of AI depends not only on the capabilities of individual models but also on a globalized, democratized inference market composed of interconnected edge devices, akin to a real-time spot market for inference based on supply and demand.

Agent-Centric Interactions

Large language models (LLMs) have enabled us to access vast amounts of information through dialogue rather than traditional browsing. This conversational mode will rapidly become more personalized and localized as the internet transforms into a platform that serves AI agents rather than just human users.

From the user's perspective, the focus will shift from finding the "best model" to obtaining the most personalized answers. The key to achieving better answers lies in combining users' personal data with the general knowledge of the internet. Initially, larger context windows and retrieval-augmented generation (RAG) techniques will help integrate personal data, but ultimately, the importance of personal data will surpass that of generic internet data.
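The retrieval step of RAG can be sketched with the standard library alone. This toy version ranks a user's personal notes by word overlap with the query and places the best match into the prompt. Real systems would use learned embeddings and a vector index instead of the bag-of-words `embed` function assumed here, and the notes themselves are made up.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy embedding: a bag of lowercase words (an assumption, not a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, documents: List[str], k: int = 1) -> str:
    """Combine the retrieved personal context with the user's question."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    notes = [
        "I am allergic to peanuts",
        "My flight to Lisbon leaves on Friday",
        "I prefer window seats",
    ]
    print(build_prompt("When does my flight leave?", notes))
```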

This foreshadows a future where each person will have a personal AI model that interacts with internet expert models. Personalization will initially rely on remote models, but as concerns about privacy and response speed increase, more interactions will shift to local devices. This will create new boundaries, not between humans and machines, but between personal models and internet expert models.

The traditional mode of accessing raw data on the internet will gradually be phased out. Instead, your local model will communicate with remote expert models to obtain information, which will then be presented to you in the most personalized and efficient manner. As these personal models deepen their understanding of your preferences and habits, they will become indispensable.

The internet will evolve into an ecosystem of interconnected models: high-context personal models on the local side and high-knowledge expert models on the remote side. This will involve new technologies, such as federated learning, to update the information between these models. As the machine economy develops, we need to reimagine the computing infrastructure that supports all of this, particularly in terms of computational capacity, scalability, and payment mechanisms. This will lead to a reorganization of the information space, making it agent-centric, sovereign, highly composable, self-learning, and continuously evolving.
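As one illustration of how federated learning can combine knowledge without moving raw data, the sketch below implements the core averaging step in the style of federated averaging: each device sends only its locally trained weights, and the aggregator weights them by the number of local examples. The flat list representation of model weights is a simplification assumed for this example.

```python
from typing import List, Sequence


def federated_average(
    client_weights: List[Sequence[float]],
    client_sizes: List[int],
) -> List[float]:
    """Average model weights across clients, weighted by local dataset size."""
    if len(client_weights) != len(client_sizes) or not client_weights:
        raise ValueError("need one dataset size per client, and at least one client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


if __name__ == "__main__":
    # Two devices with locally trained weights and different amounts of data.
    print(federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3]))
```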

The Architecture of Agent Protocols

In an agent network, human-machine interaction evolves into a complex network of agent-to-agent communications. This architecture reimagines the structure of the internet, making sovereign agents the primary interface for digital interactions. The following are the core elements required for agent protocols.

Sovereign Identity

Digital identity transitions from traditional IP addresses to cryptographic key pairs controlled by agents

Blockchain-based naming systems replace traditional DNS, eliminating centralized control

Reputation systems to track the reliability and capabilities of agents

Zero-knowledge proofs for privacy-preserving authentication

Composable identities allow agents to manage multiple contexts and roles

Autonomous Agents

Autonomous agents with the following capabilities:

Natural language understanding and intent parsing

Multi-step planning and task decomposition

Resource management and optimization

Learning from interactions and feedback

Autonomous decision-making within set parameters

Specialization and markets for agents with specific functionalities

Built-in security mechanisms and alignment protocols to ensure safety

Data Infrastructure

Capabilities for real-time data ingestion and processing

Distributed data verification and validation mechanisms

Hybrid systems combining:

zkTLS

Traditional training datasets

Real-time web crawling and data synthesis

Collaborative learning networks

Reinforcement learning from human feedback (RLHF) networks

Distributed feedback collection system

Quality-weighted consensus mechanism

Dynamic model adjustment protocols

Compute Layer

Verifiable inference protocols ensure:

Computational integrity

Result reproducibility

Resource utilization efficiency

Decentralized computing infrastructure, including:

Peer-to-peer computing market

Proof-of-computation systems

Dynamic resource allocation

Integration of edge computing

Model ecosystem

Layered model architecture:

Small language models (SLMs) for specific tasks

Large general language models (LLMs)

Specialized multimodal models

Large multimodal action models (LAMs)

Model composition and orchestration

Continuous learning and adaptability

Standardized model interfaces and protocols

Coordination framework

Cryptographic protocols for secure agent interactions

Digital rights management system

Economic incentive structures

Governance mechanisms for:

Dispute resolution

Resource allocation

Protocol updates

Parallel execution environment support:

Concurrent task processing

Resource isolation

State management

Conflict resolution

Agent market

On-chain identity primitives (e.g., Gnosis and Squads multisigs)

Economic and trading interactions between agents

Agents with partial liquidity

Agents hold a portion of their token supply at inception

Aggregated inference markets with liquidity mining

Chain-controlled off-chain accounts

Agents as yield-bearing assets

Governance and dividends through agent-based decentralized autonomous organizations (DAOs)

Building an Intelligent Superstructure

Modern distributed system design provides unique inspiration and foundations for developing agent protocols, particularly in the areas of event-driven architecture and the Actor model of computation.

The Actor model offers an elegant theoretical framework for building agent systems. This computational model treats "actors" as the fundamental units of computation, where each actor can:

Process messages

Make local decisions

Create new actors

Send messages to other actors

Determine how to respond to the next message received

The key advantages of the Actor model in agent systems include:

Isolation: Each actor runs independently, maintaining its own state and control flow

Asynchronous communication: Message passing between actors is non-blocking, enabling efficient parallel processing

Location transparency: Actors can communicate with one another regardless of where they are located in the network

Fault tolerance: Increased system resilience through actor isolation and supervisory hierarchies

Scalability: Natural support for distributed systems and parallel computation
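The properties listed above can be sketched with `asyncio` queues serving as mailboxes. This is a minimal illustration rather than a production actor framework: the `Actor`, `CounterActor`, and `Collector` classes are invented for the example, supervision and remote messaging are omitted, and a `None` message is used as an ad hoc stop signal.

```python
import asyncio
from typing import Any, List


class Actor:
    """Base actor: private state plus a mailbox, processed one message at a time."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.mailbox: asyncio.Queue = asyncio.Queue()

    async def send(self, message: Any) -> None:
        await self.mailbox.put(message)  # asynchronous, non-blocking delivery

    async def run(self) -> None:
        while True:
            message = await self.mailbox.get()
            if message is None:  # ad hoc stop signal for this sketch
                break
            await self.receive(message)

    async def receive(self, message: Any) -> None:
        raise NotImplementedError


class CounterActor(Actor):
    def __init__(self, name: str) -> None:
        super().__init__(name)
        self.total = 0  # state that only this actor touches

    async def receive(self, message: Any) -> None:
        kind, payload = message
        if kind == "add":
            self.total += payload
        elif kind == "report":
            await payload.send(("total", self.total))  # message another actor


class Collector(Actor):
    def __init__(self, name: str) -> None:
        super().__init__(name)
        self.seen: List[Any] = []

    async def receive(self, message: Any) -> None:
        self.seen.append(message)


async def demo() -> List[Any]:
    counter, collector = CounterActor("counter"), Collector("collector")
    tasks = [asyncio.create_task(a.run()) for a in (counter, collector)]
    for n in (1, 2, 3):
        await counter.send(("add", n))
    await counter.send(("report", collector))
    await counter.send(None)
    await tasks[0]  # the counter has drained its mailbox
    await collector.send(None)
    await tasks[1]
    return collector.seen


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Because each actor processes its mailbox sequentially and never shares state, no locks are needed. The same shape maps naturally onto agents that each own their own context and communicate only by messages.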

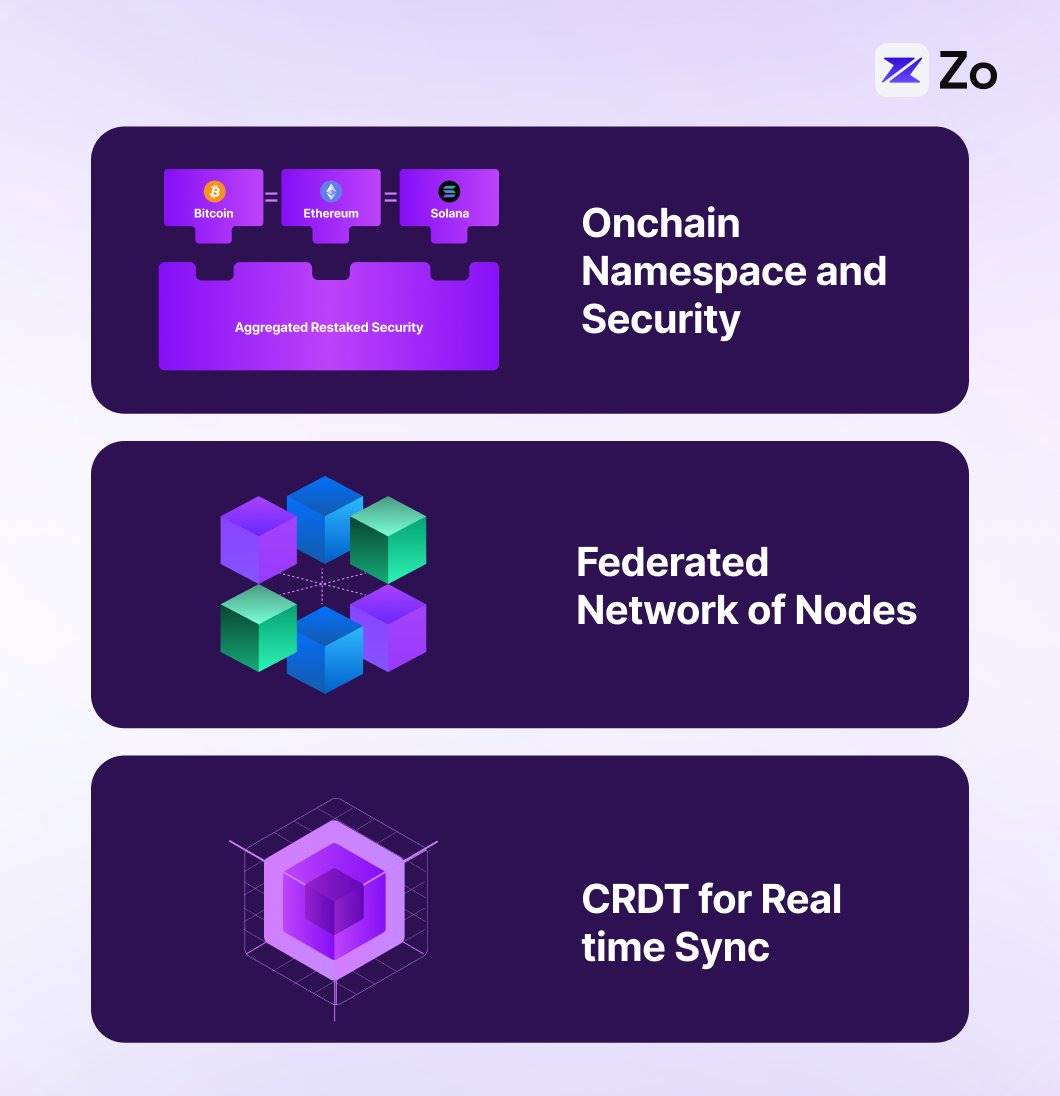

We propose Neuron, a practical agent protocol implemented through a multi-layered distributed architecture, combining blockchain namespaces, federated networks, CRDTs, and DHTs, each with specific functions in the protocol stack. We draw inspiration from Urbit and Holochain, early peer-to-peer operating system designs.

In Neuron, the blockchain layer provides verifiable namespaces and identities, enabling deterministic addressing and discovery of agents, as well as cryptographic proofs of capabilities and reputation. On top of this, the DHT layer helps achieve efficient agent and node discovery, as well as content routing, with O(log n) lookup times, reducing on-chain operations while supporting localized peer-to-peer lookups. State synchronization between federated nodes is handled through CRDTs, allowing agents and nodes to maintain a consistent shared state view without requiring global consensus for every interaction.
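To give an intuition for where the O(log n) lookup bound comes from, here is a sketch of greedy routing on a Chord-style ring. The source does not say which DHT design Neuron uses, so the ring layout, the power-of-two "finger" links, and the assumption that every ID on the ring is occupied are all choices made for this illustration.

```python
from typing import List


def route(source: int, target: int, bits: int) -> List[int]:
    """Greedy routing on a ring of 2**bits nodes.

    Each node is assumed to link to the nodes 1, 2, 4, ... positions ahead.
    Every hop takes the largest link that does not overshoot the target,
    which removes the top bit of the remaining distance.
    """
    size = 1 << bits
    path, current = [source], source
    while current != target:
        distance = (target - current) % size
        jump = 1 << (distance.bit_length() - 1)  # largest power of two <= distance
        current = (current + jump) % size
        path.append(current)
    return path


if __name__ == "__main__":
    print(route(0, 13, bits=4))  # 16 nodes
    longest = max(len(route(0, t, bits=10)) - 1 for t in range(1024))
    print(longest)  # never more than 10 hops for 1024 nodes
```

Each hop clears the highest remaining bit of the distance, so a lookup among n = 2^bits nodes needs at most log2(n) hops.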

This architecture naturally lends itself to a federated network, where autonomous agents run as independent nodes on devices, performing local edge inference based on the Actor model. Federated domains can be organized based on agent capabilities, with the DHT providing efficient intra-domain and cross-domain routing and discovery. Each agent runs as an independent actor, maintaining its own state, while the CRDT layer ensures consistency across the entire federation. This multilayered approach achieves several key functionalities:

Decentralized Coordination

Blockchain for verifiable identities and a global namespace

DHT for efficient node discovery and content routing, with O(log n) lookup times

CRDTs for concurrent state synchronization and multi-agent coordination

Scalable Operations

Region-based federated topology

Tiered storage strategies (hot/warm/cold)

Localized request routing

Capability-based load balancing

System Resilience

No single points of failure

Continuous operation during partitions

Automatic state reconciliation

Fault-tolerant supervisory hierarchies

This implementation approach provides a solid foundation for building complex agent systems, while maintaining the key attributes of sovereignty, scalability, and resilience required for effective agent interactions.
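The automatic state reconciliation that CRDTs provide can be illustrated with one of the simplest CRDTs, a grow-only counter. This is a generic textbook structure, not Neuron's actual data model, and the node names are placeholders.

```python
from typing import Dict


class GCounter:
    """Grow-only counter CRDT: each node increments only its own slot."""

    def __init__(self, node_id: str) -> None:
        self.node_id = node_id
        self.counts: Dict[str, int] = {}

    def increment(self, amount: int = 1) -> None:
        self.counts[self.node_id] = self.counts.get(self.node_id, 0) + amount

    def merge(self, other: "GCounter") -> None:
        """Take the per-node maximum: commutative, associative, and idempotent."""
        for node, count in other.counts.items():
            self.counts[node] = max(self.counts.get(node, 0), count)

    @property
    def value(self) -> int:
        return sum(self.counts.values())


if __name__ == "__main__":
    a, b = GCounter("node-a"), GCounter("node-b")
    a.increment(2)  # updates made independently, e.g. during a partition
    b.increment(3)
    a.merge(b)
    b.merge(a)
    a.merge(b)  # merging again changes nothing
    print(a.value, b.value)
```

Because merging is order-independent and repeatable, two nodes that were partitioned converge to the same value once they exchange state, without any global consensus round.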

Final Reflections

The agent network marks a significant evolution in human-machine interaction, transcending previous incremental developments and establishing a new paradigm of digital existence. Unlike past evolutions that merely changed the way we consume or own information, the agent network transforms the internet from a human-centric platform to an intelligent substrate, where autonomous agents become the primary participants. This shift is driven by the convergence of edge computing, large language models, and decentralized protocols, creating an ecosystem where personalized AI models seamlessly integrate with specialized expert systems.

As we move towards an agent-centric future, the boundaries between human and machine intelligence become increasingly blurred, giving way to a symbiotic relationship. In this relationship, personalized AI agents become our digital extensions, able to understand our context, anticipate our needs, and autonomously operate within the vast distributed intelligence network. Thus, the agent network is not merely a technological advancement, but a fundamental reimagining of human potential in the digital age. Within this network, every interaction is an opportunity for augmented intelligence, and every device is a node in a globally collaborative AI system.

Just as humans navigate the physical dimensions of space and time, autonomous agents operate within their own fundamental dimensions: blockchain space represents their existence, and inference time represents their cognition. This digital ontology mirrors our physical reality: as humans traverse space and experience the flow of time, agents act within the algorithmic world, creating a parallel digital universe through cryptographic proofs and computational cycles.

For the entities that may emerge in the future, operating within decentralized blockchain space will become inevitable.