The Splurge aims to further optimize the Ethereum protocol to improve network efficiency and sustainability. Beyond breaking through technical bottlenecks, it could fundamentally change how blockchains handle security and privacy.

Original text: Possible futures of the Ethereum protocol, part 6: The Splurge (vitalik.eth)

Author: vitalik.eth

Compiled by: Yewlne, LXDAO

Cover: Galaxy

Translator’s Preface

As blockchain technology develops rapidly, system architectures are growing more complex, bringing new challenges for developers and users. The "Splurge" plan focuses on improving the network's efficiency and sustainability by optimizing and refining the details of existing protocols. Exploring these cutting-edge technologies may not only resolve current technical bottlenecks but also fundamentally change the security and privacy mechanisms of blockchains, bringing them closer to the ideal of "trustlessness". I hope this translation helps more Chinese readers understand the latest developments in the Ethereum protocol and sparks interest in these important but often overlooked topics.

Overview

This article is approximately 10,000 words long and has 5 parts, with an estimated reading time of 55 minutes.

- EVM improvements

- Account Abstraction

- EIP-1559 Improvements

- Verifiable Delay Functions (VDFs)

- Obfuscation and One-Shot Signatures: The Future of Cryptography

Content

The Possible Future of the Ethereum Protocol (VI): The Splurge

Special thanks to Justin Drake and Tim Beiko for their feedback and reviews.

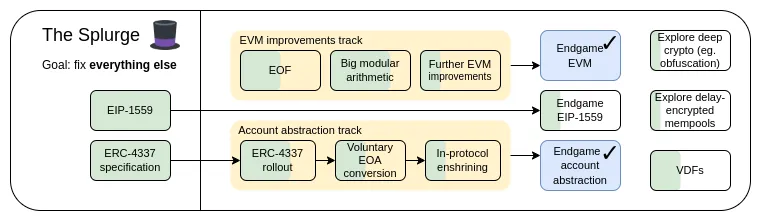

Some things are hard to put into a single category. There are many "little details" in Ethereum protocol design that are critical to Ethereum's success but do not fit neatly into a larger subcategory. In practice, about half of them involve various forms of EVM improvement, and the rest are a mix of niche topics. The "Splurge" is the home for these items.

The Splurge: Key Objectives

- Bring the EVM to a high-performance, stable "endgame state".

- Introduce account abstraction at the protocol layer, so that all users can benefit from more secure and convenient accounts.

- Optimize transaction fee economics to improve scalability while reducing risk.

- Explore advanced cryptography that could make Ethereum much better in the long run.

In this chapter:

- EVM improvements

- Account Abstraction

- EIP-1559 Improvements

- Verifiable Delay Functions (VDFs)

- Obfuscation and One-Shot Signatures: The Future of Cryptography

EVM improvements

What problem does it solve?

The current EVM is difficult to statically analyze, which makes it hard to build highly efficient implementations, formally verify code, and extend the EVM over time. It is also highly inefficient, which makes many forms of advanced cryptography impractical to implement unless they are explicitly supported through precompiles.

What is it and how does it work?

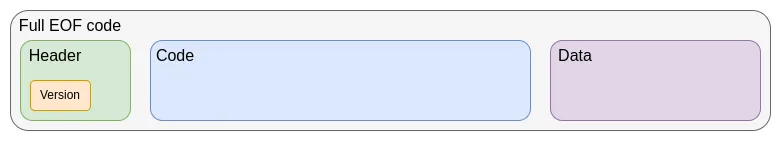

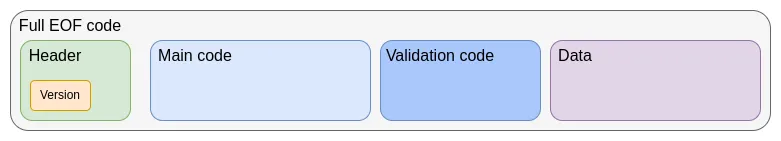

The first step in the current EVM improvement roadmap is the EVM Object Format (EOF), scheduled for inclusion in the next hard fork. EOF is a series of EIPs that specify a new version of EVM code with the following key features:

- Separation between code (executable, but not readable from the EVM) and data (readable, but not executable)

- Dynamic jumps are banned; only static jumps are allowed

- EVM code can no longer observe gas-related information

- A new explicit mechanism for subroutines

Old-style contracts will continue to exist and can still be created, although they may eventually be deprecated (and perhaps even force-converted to EOF code). New-style contracts benefit from the efficiency gains of EOF: first from slightly smaller bytecode thanks to the subroutine feature, and later from new EOF-specific features and EOF-specific gas cost reductions.

EOF makes further upgrades easier to introduce. The most mature of these is the EVM Modular Arithmetic Extensions (EVM-MAX). EVM-MAX adds a new set of operations designed specifically for modular arithmetic and places them in a new memory space that other opcodes cannot access. This makes it possible to use optimizations such as Montgomery multiplication.
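To make the Montgomery multiplication reference more concrete, here is a minimal textbook sketch of Montgomery reduction (REDC) for an odd modulus. It is not EVM-MAX's opcode semantics; the helper names and the choice R = 2^k are assumptions for illustration, and it needs Python 3.8+ for `pow(n, -1, r)`. The point is that reduction modulo n becomes a multiply, a mask, and a shift, with no division by n.

```python
def montgomery_setup(n: int):
    """Precompute constants for an odd modulus n, using R = 2**k with R > n."""
    if n % 2 == 0:
        raise ValueError("Montgomery multiplication needs an odd modulus")
    k = n.bit_length()
    r = 1 << k
    n_prime = (-pow(n, -1, r)) % r  # n * n_prime == -1 (mod R)
    r2 = (r * r) % n                # R^2 mod n, used to enter Montgomery form
    return k, r, n_prime, r2

def redc(t: int, n: int, k: int, r: int, n_prime: int) -> int:
    """Montgomery reduction: t * R^-1 mod n for 0 <= t < n * R, with no division by n."""
    m = ((t & (r - 1)) * n_prime) & (r - 1)  # chosen so that t + m*n is divisible by R
    u = (t + m * n) >> k                     # exact division by R is just a shift
    return u - n if u >= n else u            # u < 2n, so one subtraction suffices

def mont_mulmod(a: int, b: int, n: int) -> int:
    """Compute a * b mod n by converting into Montgomery form and back."""
    k, r, n_prime, r2 = montgomery_setup(n)
    a_m = redc((a % n) * r2, n, k, r, n_prime)  # a * R mod n
    b_m = redc((b % n) * r2, n, k, r, n_prime)  # b * R mod n
    ab_m = redc(a_m * b_m, n, k, r, n_prime)    # a * b * R mod n
    return redc(ab_m, n, k, r, n_prime)         # back to a * b mod n
```

Converting in and out of Montgomery form for a single product is wasteful; the gain comes when many operations are chained while values stay in that form. Presumably this is where a dedicated memory space helps, since no other opcode can observe the internal representation.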

A newer idea is to combine EVM-MAX with a single instruction, multiple data (SIMD) feature. SIMD has been discussed in the Ethereum community for a long time, dating back to Greg Colvin's EIP-616. SIMD can be used to speed up many kinds of cryptography, including hash functions, 32-bit STARKs, and lattice-based cryptography. Together, EVM-MAX and SIMD form a natural pair of performance-oriented extensions to the EVM.

An approximate design for the combined EIP would take EIP-6690 as a starting point and then:

- Allow (i) any odd number or (ii) any power of 2 up to 2^768 as a modulus.

- For each EVMMAX opcode (add, sub, mul), add a version that takes 7 immediates (x_start, x_skip, y_start, y_skip, z_start, z_skip, count) instead of 3 (x, y, z).

In Python code, these opcodes would do something equivalent to:

```python
for i in range(count):
    mem[z_start + z_skip * i] = op(mem[x_start + x_skip * i], mem[y_start + y_skip * i])
```

except that an actual implementation would process the iterations in parallel.

- Possibly add XOR, AND, OR, NOT, and SHIFT (both cyclic and non-cyclic), at least for power-of-2 moduli. Also add ISZERO, which pushes its output to the EVM main stack.
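The strided semantics described above can be modelled in a few lines. This is a sequential reference sketch, not an EIP specification: `vectorized_op`, `shl`, and `rotl` are hypothetical helper names, each lane i is indexed by its loop counter, and the rule "read all inputs before writing any output" is an assumption chosen to mimic lanes running in parallel when ranges overlap.

```python
from typing import Callable, List

def vectorized_op(mem: List[int], op: Callable[[int, int], int],
                  x_start: int, x_skip: int,
                  y_start: int, y_skip: int,
                  z_start: int, z_skip: int,
                  count: int) -> None:
    """Sequential reference model of a strided EVMMAX-style op (hypothetical helper)."""
    # Read every lane's inputs first, as if all lanes ran in parallel
    xs = [mem[x_start + x_skip * i] for i in range(count)]
    ys = [mem[y_start + y_skip * i] for i in range(count)]
    for i in range(count):
        mem[z_start + z_skip * i] = op(xs[i], ys[i])

def shl(x: int, s: int, bits: int) -> int:
    """Non-cyclic left shift modulo 2**bits: bits shifted past the top are dropped."""
    return (x << s) & ((1 << bits) - 1)

def rotl(x: int, s: int, bits: int) -> int:
    """Cyclic left shift modulo 2**bits: bits shifted past the top re-enter at the bottom."""
    s %= bits
    mask = (1 << bits) - 1
    return ((x << s) | (x >> (bits - s))) & mask

# Example: three lanes of addition modulo 7
mem = [1, 2, 3, 4, 5, 6, 0, 0, 0]
vectorized_op(mem, lambda a, b: (a + b) % 7,
              x_start=0, x_skip=1, y_start=3, y_skip=1,
              z_start=6, z_skip=1, count=3)
# mem is now [1, 2, 3, 4, 5, 6, 5, 0, 2]
```

The shift helpers show the cyclic versus non-cyclic distinction for a power-of-2 modulus: with 4-bit values, `shl(0b1001, 1, 4)` drops the top bit and gives `0b0010`, while `rotl(0b1001, 1, 4)` wraps it around and gives `0b0011`.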

This would be powerful enough to implement elliptic curve cryptography, small-field cryptography (such as Poseidon and circle STARKs), conventional hash functions (such as SHA256, KECCAK, and BLAKE), and lattice-based cryptography.

Other EVM upgrade options are also possible, but they are not receiving much attention at present.

What existing research materials are there?

- EOF: https://evmobjectformat.org/

- EVM-MAX: https://eips.ethereum.org/EIPS/eip-6690

- SIMD: https://eips.ethereum.org/EIPS/eip-616

What else needs to be done, and what are the trade-offs?

Currently, EOF is scheduled for inclusion in the next hard fork. It could still be removed (features have been pulled from hard forks at the last minute before), but doing so would be an uphill battle. Removing EOF would mean that any future EVM upgrades would have to be made without it, which is possible but may be harder.

The main trade-off in EVM improvements is L1 complexity versus infrastructure complexity. EOF adds a significant amount of code to EVM implementations, and its static code checks are quite complex. In return, we get simpler high-level languages, simpler EVM implementations, and other benefits. Arguably, any roadmap that prioritizes continued improvement of the Ethereum L1 should include and build on EOF.

One important piece of remaining work is to implement something like EVM-MAX plus SIMD and benchmark the gas cost of various cryptographic operations.

How does it interact with the rest of the roadmap?

Adjusting the EVM on L1 makes it easier for L2s to make the same adjustments, while adjusting one layer without the other creates incompatibilities that have their own downsides. In addition, EVM-MAX plus SIMD can reduce gas costs for many proof systems, enabling more efficient L2s. It also makes it easier to remove more precompiles, since they can be replaced with EVM code that performs the same task, perhaps without a large efficiency penalty.

Account Abstraction

What problem does it solve?

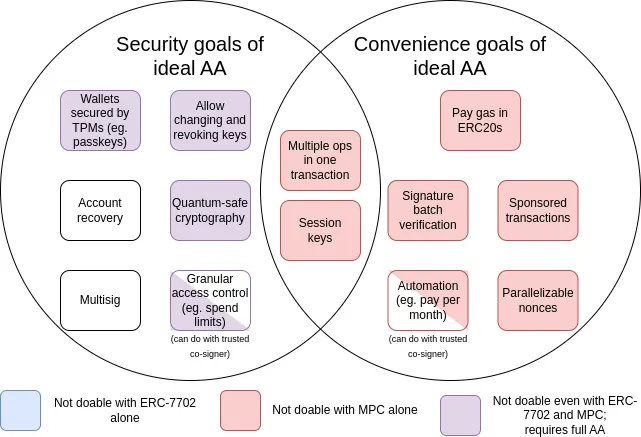

Today, a transaction can be verified in only one way: an ECDSA signature. Account abstraction was originally meant to go beyond this and allow an account's verification logic to be arbitrary EVM code. This enables a range of applications:

- Switching to quantum-resistant cryptography

- Rotating old keys (widely recognized as a recommended security practice)

- Multi-signature wallets and social recovery wallets

- Using one key for low-value operations and a different key (or set of keys) for high-value operations

- Letting privacy protocols work without relayers, significantly reducing their complexity and removing a key central point of dependency

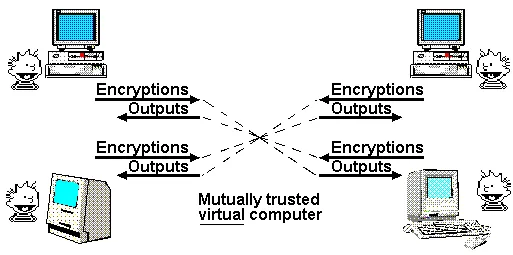

Since account abstraction was first proposed in 2015, its goals have expanded to include a large set of "convenience goals", such as allowing an account that holds no ETH but holds some ERC20 token to pay gas directly in that token. These groups of goals are summarized in the accompanying chart.

MPC here refers to multi-party computation: a 40-year-old technique that splits a key into pieces stored on multiple devices and uses cryptographic methods to generate a signature without ever combining the key pieces directly.
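MPC protocols for Ethereum's ECDSA signatures are considerably more involved, so as a stand-in, here is a toy two-party Schnorr signature that shows the core idea: each device signs with its own key share, and the full key is never assembled. The group parameters (p = 2039, q = 1019, g = 4) are tiny and insecure, the helper names are hypothetical, and the sketch omits the protections against rogue-key and concurrent-session attacks that real protocols need. Both parties are simulated in one process for brevity.

```python
import hashlib
import secrets

# Toy group: p = 2q + 1 is a safe prime and g = 4 generates the subgroup of prime order q.
# These sizes are far too small to be secure; they only illustrate the algebra.
P, Q, G = 2039, 1019, 4

def challenge(r: int, y: int, msg: bytes) -> int:
    data = f"{r}|{y}|".encode() + msg
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q

def keygen_share():
    """Each device generates its own secret share x_i and public share g^x_i."""
    x_i = secrets.randbelow(Q - 1) + 1
    return x_i, pow(G, x_i, P)

def two_party_sign(x1: int, x2: int, y: int, msg: bytes):
    # Round 1: each party picks a private nonce and publishes only g^k_i
    k1 = secrets.randbelow(Q - 1) + 1
    k2 = secrets.randbelow(Q - 1) + 1
    r = pow(G, k1, P) * pow(G, k2, P) % P
    e = challenge(r, y, msg)
    # Round 2: each party computes a partial signature from its own share only
    s1 = (k1 + e * x1) % Q
    s2 = (k2 + e * x2) % Q
    return r, (s1 + s2) % Q  # combining partial signatures, never the key shares

def verify(y: int, msg: bytes, sig) -> bool:
    """Standard Schnorr check: g^s == r * y^e."""
    r, s = sig
    return pow(G, s, P) == r * pow(y, challenge(r, y, msg), P) % P

x1, y1 = keygen_share()
x2, y2 = keygen_share()
y = y1 * y2 % P  # joint public key g^(x1 + x2); x1 + x2 itself is never computed
sig = two_party_sign(x1, x2, y, b"transfer 1 ETH")
```

The result verifies against the joint public key exactly like an ordinary single-signer Schnorr signature.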

EIP-7702 is scheduled for inclusion in the next hard fork. It grew out of an increasing recognition that the convenience features of account abstraction should be available to all users, including EOA users, both to improve everyone's experience in the short term and to keep the ecosystem from splitting into two separate worlds. This work began with EIP-3074 and culminated in EIP-7702, which makes these "convenience features" available right away to all users, including EOAs (externally owned accounts, i.e., accounts controlled by ECDSA signatures).

As you can see from the chart, while some challenges (particularly those around “convenience”) can be addressed through incremental techniques like multi-party computation protocols or EIP-7702, most of the security goals that drove the original account abstraction proposal can only be achieved by going back and solving the original problem: namely, allowing smart contract code to directly control the transaction verification process. However, the reason this has not been achieved to date is that implementing this functionality securely is a challenge in itself.

What is it and how does it work?

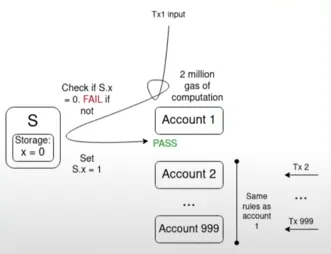

At its core, account abstraction is simple: allow smart contracts, not just externally owned accounts (EOAs), to initiate transactions. All the complexity comes from how to do this in a way that helps maintain a decentralized network while also protecting against denial of service (DoS) attacks.

A classic example that illustrates this key challenge is the multi-invalidation problem:

If the verification functions of 1,000 accounts all depend on a single shared value S, and the memory pool contains many transactions that are valid given the current value of S, then one transaction that changes S can invalidate all of the others. This lets an attacker spam the memory pool at very low cost, clogging up the resources of nodes on the network.
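A small simulation makes the asymmetry of this attack visible. It is a deliberately simplified model: `PendingTx`, `expected_s`, and the dictionary state are hypothetical stand-ins for accounts whose verification logic checks a shared value S.

```python
from dataclasses import dataclass

@dataclass
class PendingTx:
    sender: str
    expected_s: int  # hypothetical: the value of S this account's verification logic accepts

def still_valid(tx: PendingTx, state: dict) -> bool:
    # Verification depends on shared state S, not only on the sender's own account
    return state["S"] == tx.expected_s

state = {"S": 0}
mempool = [PendingTx(sender=f"acct{i}", expected_s=0) for i in range(1000)]
assert all(still_valid(tx, state) for tx in mempool)

# The attacker pays for a single transaction that flips S...
state["S"] = 1

# ...and nodes must now re-check and drop every pending transaction, none of which paid fees
invalidated = [tx for tx in mempool if not still_valid(tx, state)]
print(len(invalidated))  # 1000
```

The attacker's cost is one transaction, while every node does work proportional to the size of the memory pool; repeating this cheaply is what makes the attack a DoS vector.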

Years of effort to expand functionality while limiting denial-of-service (DoS) risks have led to convergence on one solution for implementing "ideal account abstraction": ERC-4337.

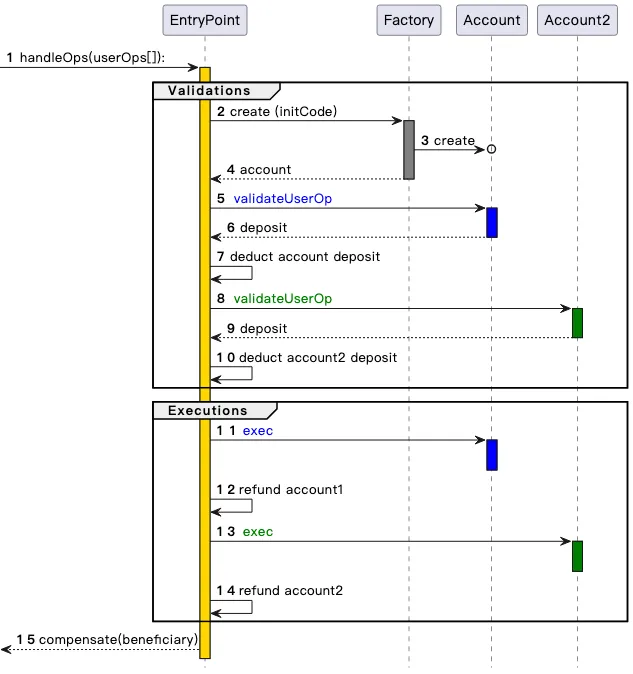

ERC-4337 works by splitting the processing of user operations into two phases: verification and execution. All verifications are processed first, and then all executions. The memory pool only accepts a user operation if its verification phase touches only its own account and does not read environment variables. This prevents multi-invalidation attacks. Strict gas limits are also applied to the verification step.
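The admission rule and the two-phase ordering can be sketched as follows. This is a simplified model, not ERC-4337's actual rule set: verification is represented only by its declared footprint (which accounts it reads, whether it reads the environment, how much gas it uses), and `UserOp`, `mempool_accepts`, `process_bundle`, and `VALIDATION_GAS_CAP` are hypothetical names and values.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, List

VALIDATION_GAS_CAP = 100_000  # hypothetical cap for this sketch, not ERC-4337's actual value

@dataclass
class UserOp:
    sender: str
    validation_reads: FrozenSet[str]  # accounts whose state the verification step reads
    reads_environment: bool           # e.g. reads block number or timestamp during verification
    validation_gas: int
    execute: Callable[[dict], None]   # arbitrary effect applied in the execution phase

def mempool_accepts(op: UserOp) -> bool:
    """Simplified admission rule: verification may touch only the sender's own account."""
    return (op.validation_reads <= {op.sender}
            and not op.reads_environment
            and op.validation_gas <= VALIDATION_GAS_CAP)

def process_bundle(ops: List[UserOp], state: dict) -> None:
    # Phase 1: check every verification before any execution starts, so no
    # execution in this bundle can invalidate another op's verification
    for op in ops:
        if not mempool_accepts(op):
            raise ValueError(f"verification failed for {op.sender}")
    # Phase 2: executions may touch arbitrary state
    for op in ops:
        op.execute(state)

def credit(name: str) -> Callable[[dict], None]:
    def run(state: dict) -> None:
        state[name] = state.get(name, 0) + 1
    return run

ok = UserOp("alice", frozenset({"alice"}), False, 50_000, credit("alice"))
shared = UserOp("bob", frozenset({"bob", "oracle"}), False, 50_000, credit("bob"))
```

Here `ok` is admitted, while `shared` is rejected because its verification reads another account (`"oracle"`), which is exactly the kind of shared dependency behind the multi-invalidation problem.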

ERC-4337 was originally designed as an out-of-protocol standard (an ERC) because, at the time, Ethereum client developers were focused on the Merge and had no spare capacity for other features. That is why ERC-4337 uses its own objects, called user operations, rather than regular transactions. Recently, however, it has become clear that at least parts of it need to be enshrined in the protocol, for two main reasons:

- The inherent inefficiency of EntryPoint being a contract: a fixed overhead of ~100k gas per bundle, plus several thousand extra gas per user operation.

- The need to ensure that Ethereum's guarantees, such as the inclusion guarantees created by inclusion lists, carry over to account abstraction users.
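The overhead figures above amortize in a simple way, which shows why bundling alone cannot remove the cost. In this sketch the ~100k per-bundle figure comes from the text, while the 5,000 gas per-operation value is a hypothetical stand-in for "several thousand", and the function name is illustrative.

```python
def entrypoint_overhead_per_op(ops_in_bundle: int,
                               bundle_overhead: int = 100_000,
                               per_op_overhead: int = 5_000) -> float:
    """Approximate extra gas per user operation caused by EntryPoint being a contract.

    bundle_overhead follows the ~100k figure in the text; per_op_overhead is a
    hypothetical stand-in for "several thousand" gas.
    """
    if ops_in_bundle <= 0:
        raise ValueError("a bundle needs at least one user operation")
    return bundle_overhead / ops_in_bundle + per_op_overhead

for n in (1, 10, 100):
    print(n, entrypoint_overhead_per_op(n))
# 1 105000.0
# 10 15000.0
# 100 6000.0
```

Larger bundles shrink the fixed part, but the per-operation part never amortizes away, which is one reason to move this logic into the protocol.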

ERC-4337 also added two features:

- Paymasters: allow one account to pay fees on behalf of another. This breaks the rule that only the sender account itself may be accessed during the verification phase, so special handling was introduced to support paymasters safely.

- Aggregators: support signature aggregation, such as BLS aggregation or SNARK-based aggregation. This is essential for achieving the highest level of data efficiency on Rollups.

What existing research materials are there?

- A talk on the historical evolution of account abstraction: https://www.youtube.com/watch?v=iLf8qpOmxQc

- ERC-4337: https://eips.ethereum.org/EIPS/eip-4337

- EIP-7702: https://eips.ethereum.org/EIPS/eip-7702

- BLSWallet code (aggregation function): https://github.com/getwax/bls-wallet

- EIP-7562 (in-protocol account abstraction): https://eips.ethereum.org/EIPS/eip-7562

- EIP-7701 (in-protocol account abstraction based on EOF): https://eips.ethereum.org/EIPS/eip-7701

What else needs to be done, and what are the trade-offs?

The main remaining issue is to determine how to fully incorporate account abstraction into the protocol. A recent proposal that has received much attention for writing account abstraction into the protocol is EIP-7701, which implements account abstraction on top of EOF. An account can have an independent verification code segment, and if the account sets the corresponding code segment, the code will be executed during the verification step of transactions initiated by the account.

Interestingly, this approach makes it clear that there are two equivalent ways to view native account abstraction:

- ERC-4337, but as part of the protocol

- A new type of EOA whose signature algorithm is executed as EVM code

If we start with strict limits on the complexity of code that can run during verification (no access to external state, and a gas limit initially too low to be useful for quantum-resistant or privacy-preserving applications), then the security of this approach is clear: it simply replaces ECDSA verification with EVM code execution that takes a similar amount of time. Over time, however, we will need to relax these limits, because letting privacy-preserving applications work without relayers and achieving quantum resistance are both very important. To do that, we need more flexible ways to handle denial-of-service (DoS) risks that do not require verification to be extremely minimal.

The main trade-off seems to be between getting a solution that fewer people are happy with into the protocol sooner, and waiting longer for a potentially more ideal one. The ideal approach may be some kind of hybrid. One hybrid would be to get some use cases into the protocol sooner, leaving more time to work out the others. Another would be to deploy more ambitious versions of account abstraction on L2 first. The challenge, however, is that L2 teams need to be confident that L1 and other L2s will eventually adopt compatible solutions before they are willing to adopt a proposal.

Another application we need to consider explicitly is keystore accounts, which store account-related state on L1 or a dedicated L2, but can be used on L1 and any compatible L2. To implement this efficiently, L2 support for instructions such as L1SLOAD or REMOTESTATICCALL may be required, but this also requires that the account abstraction implementation on L2 can support it.

How does it interact with the rest of the roadmap?

Inclusion Lists need to support account abstraction transactions. In practice, the requirements for inclusion lists and decentralized memory pools end up being very similar, although inclusion lists have more flexibility. Additionally, ideally, the account abstraction implementation on L1 and L2 should be as consistent as possible. If we expect that most users will use keystore Rollups in the future, the account abstraction design should take this into account.

EIP-1559 Improvements

What problem does it solve?

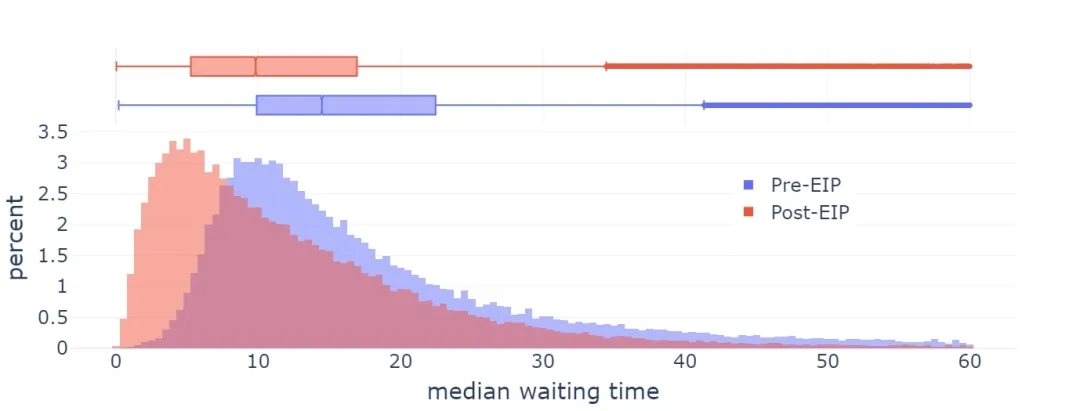

EIP-1559 was implemented on Ethereum in 2021 and significantly improved the average block inclusion time.

However, the current implementation of EIP-1559 has several imperfections:

- The formula is slightly flawed: instead of targeting blocks that are 50% full on average, it targets blocks that are roughly 50-53% full, depending on variance (this has to do with what mathematicians call the "arithmetic-geometric mean inequality").

- It does not adjust quickly enough in extreme conditions.

The later formula for blobs (EIP-4844) was designed explicitly to address the first problem and is cleaner overall. Neither EIP-1559 nor EIP-4844 attempts to address the second problem. As a result, the status quo is a confusing halfway state involving two different mechanisms, and there is even a case that both will need to be improved over time.
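The arithmetic-geometric mean effect can be seen directly. In EIP-1559 the base fee is multiplied by 1 + d/8 after a block that is a fraction d above target, so a full block followed by an empty one multiplies it by (1 + 1/8)(1 - 1/8) = 63/64 < 1, even though average usage equals the target. An EIP-4844-style rule instead makes the fee an exponential of the cumulative excess, and exp(a) * exp(-a) = 1, so symmetric swings cancel. The sketch below is idealized: it ignores integer rounding, and `update_fraction` is an arbitrary illustrative value rather than a protocol constant.

```python
import math
from fractions import Fraction

BASE_FEE_MAX_CHANGE_DENOMINATOR = 8  # EIP-1559: base fee moves at most 12.5% per block

def simulate_eip1559(usages, target, start_fee):
    """Idealized EIP-1559 update (exact fractions, no integer rounding)."""
    fee = Fraction(start_fee)
    for used in usages:
        fee *= 1 + Fraction(used - target, target) / BASE_FEE_MAX_CHANGE_DENOMINATOR
    return fee

def simulate_exponential(usages, target, update_fraction, min_fee=1.0):
    """Simplified EIP-4844-style rule: fee = min_fee * exp(excess / update_fraction)."""
    excess = 0
    for used in usages:
        excess = max(0, excess + used - target)  # cumulative usage above target
    return min_fee * math.exp(excess / update_fraction)

TARGET = 15_000_000
full_then_empty = [2 * TARGET, 0]  # average usage is exactly the target

print(simulate_eip1559(full_then_empty, TARGET, 64))  # 63: the fee drifts down
print(simulate_exponential(full_then_empty, TARGET, update_fraction=8 * TARGET))  # 1.0
```

Because the multiplicative rule drifts down under symmetric fluctuations, blocks must be slightly more than half full on average to keep the EIP-1559 base fee steady; the exponential rule has no such bias.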

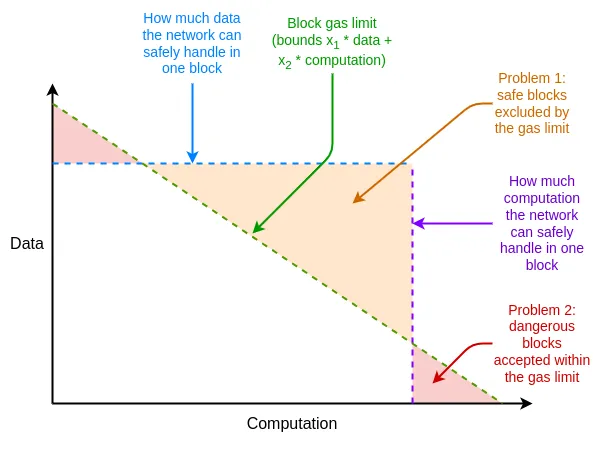

In addition, Ethereum's resource pricing has other weaknesses that are distinct from EIP-1559 but can be addressed by tweaking it. A major one is the gap between average-case and worst-case usage: Ethereum's resource prices must be set to handle the worst case (i.e., a block whose entire gas is spent on a single resource), but average usage is far below this, which leads to inefficiencies.

What is it and how does it work?

The solution to these inefficiencies is multi-dimensional gas: set independent prices and limits for different resources. Technically, this concept is independent of EIP-1559, but EIP-1559 makes it easier to implement: without EIP-1559, optimally packing blocks under multiple resource constraints is a complex multidimensional knapsack problem. With EIP-1559, most blocks are not at full capacity on any resource, so a simple "accept any transaction that pays enough" algorithm will suffice.

We now have multi-dimensional gas for execution and blobs; in principle, we could add more dimensions: calldata, state reads and writes, and state size expansion.
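The simple "accept any transaction that pays enough" rule from the previous paragraph looks like this with several gas dimensions, each with its own base fee and limit. The dimension names follow the text, but the prices, limits, and the `Tx` and `build_block` names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Tx:
    usage: Dict[str, int]    # units of gas consumed in each dimension
    max_fee: Dict[str, int]  # highest price per unit the sender accepts, per dimension

def build_block(txs: List[Tx], base_fee: Dict[str, int], limit: Dict[str, int]) -> List[Tx]:
    """Greedily include every transaction that pays enough and still fits in every dimension."""
    used = {dim: 0 for dim in limit}
    block = []
    for tx in txs:
        pays_enough = all(tx.max_fee.get(dim, 0) >= base_fee[dim] for dim in tx.usage)
        fits = all(used[dim] + amount <= limit[dim] for dim, amount in tx.usage.items())
        if pays_enough and fits:
            block.append(tx)
            for dim, amount in tx.usage.items():
                used[dim] += amount
    return block

# Hypothetical prices and limits for three dimensions
base_fee = {"execution": 10, "blob": 1, "calldata": 5}
limit = {"execution": 30_000_000, "blob": 786_432, "calldata": 1_000_000}

a = Tx({"execution": 100_000, "calldata": 2_000}, {"execution": 12, "calldata": 6})
b = Tx({"execution": 50_000}, {"execution": 9})  # underpays for execution
c = Tx({"execution": 21_000, "blob": 786_432}, {"execution": 20, "blob": 2})
d = Tx({"execution": 21_000, "blob": 131_072}, {"execution": 20, "blob": 2})  # no blob space left

block = build_block([a, b, c, d], base_fee, limit)  # includes a and c
```

This greedy rule is only adequate because, under EIP-1559 pricing, most blocks are not full in any dimension. Once limits bind, which transactions to include becomes the multidimensional knapsack problem mentioned above.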

EIP-7706 introduces a new gas dimension for calldata. At the same time, it streamlines the multi-dimensional gas mechanism by putting all three types of gas under one (EIP-4844-style) framework, which also fixes the mathematical flaw in EIP-1559.

EIP-7623 is a more targeted solution to the average-case versus worst-case resource problem: it more strictly bounds the maximum calldata without introducing a whole new dimension.
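The idea behind a calldata floor can be sketched in a few lines: a transaction pays the larger of its normal cost and a higher per-byte floor, so data-heavy transactions that do little execution pay more, while transactions doing substantial execution are unaffected. The constants below (1 token per zero byte, 4 per nonzero byte, 4 gas per token normally, and a floor of 10 gas per token) are taken from the EIP-7623 draft as understood at the time of writing; treat them as assumptions and check the EIP. Contract-creation costs are omitted.

```python
STANDARD_TOKEN_COST = 4           # assumed value; check against EIP-7623
TOTAL_COST_FLOOR_PER_TOKEN = 10   # assumed value; check against EIP-7623
TX_BASE_COST = 21_000

def calldata_tokens(calldata: bytes) -> int:
    """Weight calldata so that nonzero bytes count four times as much as zero bytes."""
    zero = calldata.count(0)
    return zero + 4 * (len(calldata) - zero)

def gas_used_with_floor(calldata: bytes, execution_gas_used: int) -> int:
    """Gas charged under a calldata floor (contract-creation costs omitted)."""
    tokens = calldata_tokens(calldata)
    standard = STANDARD_TOKEN_COST * tokens + execution_gas_used
    floor = TOTAL_COST_FLOOR_PER_TOKEN * tokens
    return TX_BASE_COST + max(standard, floor)

data = bytes([1]) * 100  # 100 nonzero bytes = 400 tokens
print(gas_used_with_floor(data, 0))       # 25000: the floor applies
print(gas_used_with_floor(data, 10_000))  # 32600: normal pricing applies
```

Because the floor only binds for transactions that are mostly data, it caps how much calldata a block can carry in the worst case without changing costs for typical execution-heavy transactions.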

Further down the road, it would be good to address the rate-of-adjustment problem with a faster base fee algorithm that preserves the key invariant introduced by the EIP-4844 mechanism (namely, that in the long run, average usage converges exactly to the target).

What existing research materials are there?

- EIP-1559 FAQ: https://notes.ethereum.org/@vbuterin/eip-1559-faq

- EIP-1559 Empirical Analysis: https://dl.acm.org/doi/10.1145/3548606.3559341

- Improvement proposal for rapid adjustments: https://kclpure.kcl.ac.uk/ws/portalfiles/portal/180741021/Transaction_Fees_on_a_Honeymoon_Ethereums_EIP_1559_One_Month_Later.pdf

- EIP-4844 FAQ, base fee mechanism section: https://notes.ethereum.org/@vbuterin/proto_danksharding_faq#How-does-the-exponential-EIP-1559-blob-fee-adjustment-mechanism-work

- EIP-7706: https://eips.ethereum.org/EIPS/eip-7706

- EIP-7623: https://eips.ethereum.org/EIPS/eip-7623

- Multi-dimensional Gas: https://vitalik.eth.limo/general/2024/05/09/multidim.html

What else needs to be done, and what are the trade-offs?

Multi-dimensional gas has two main trade-offs:

- Increased complexity of the protocol

- Increased complexity of the optimal algorithm required to fill block capacity

Protocol complexity is a relatively minor issue for calldata, but it is more of an issue for gas dimensions that are internal to the EVM, such as storage reads and writes. The problem is that it's not just users who set gas limits: when contracts call other contracts, they also set limits. And currently, the way they set limits is still single-dimensional.

A simple way to solve this problem is to make multi-dimensional gas available only inside EOF, because EOF does not allow contracts to set gas limits when calling other contracts. Non-EOF contracts would have to pay a fee in every gas dimension when performing a storage operation (for example, if an SLOAD consumes 0.03% of a block's storage-access gas limit, the non-EOF user would also be charged 0.03% of the execution gas limit).
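The proportional charging rule for non-EOF contracts is plain arithmetic: an operation's share of its own dimension's block limit is charged against every other dimension's limit too. The block limits and the 3,000-unit SLOAD cost below are hypothetical numbers chosen so the share is 0.03%, matching the example in the text; `non_eof_charge` is an illustrative name.

```python
from fractions import Fraction
from typing import Dict

def non_eof_charge(cost: int, dimension: str, limits: Dict[str, int]) -> Dict[str, Fraction]:
    """Charge the same fraction of every dimension's block limit that the op uses in its own."""
    share = Fraction(cost, limits[dimension])
    return {dim: share * dim_limit for dim, dim_limit in limits.items()}

# Hypothetical block limits; an SLOAD costing 3,000 storage-access gas uses 0.03% of that limit
limits = {"storage_access": 10_000_000, "execution": 30_000_000}
charges = non_eof_charge(3_000, "storage_access", limits)
# charges == {"storage_access": 3000, "execution": 9000}
```

Charging in every dimension is conservative, but it means a single-dimensional gas limit set by a non-EOF caller still bounds the callee's usage in each dimension.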

More research on multi-dimensional gas would greatly help in understanding these trade-offs and finding the ideal balance.

How does it interact with the rest of the roadmap?

A successful implementation of multi-dimensional gas can greatly reduce certain "worst-case" resource usage, and thus reduce the pressure to optimize performance in order to support, for example, STARKed hash-based binary trees. Having a hard target for state size growth would make it much easier for client developers to plan and estimate their future requirements.

As described above, EOF's gas non-observability makes more advanced versions of multi-dimensional gas much easier to implement.

Verifiable Delay Functions (VDFs)

What problem does it solve?

Currently, Ethereum uses RANDAO-based randomness to select proposers. RANDAO works by requiring each proposer to reveal a secret they committed to in advance, and mixing each revealed secret into the randomness. Each proposer therefore has "1 bit of manipulation power": they can change the random value (at a cost) by not producing a block. This is acceptable for selecting proposers, because it is very rare that a proposer can gain two new proposal opportunities by giving up one. But it is not acceptable for on-chain applications that need randomness. Ideally, we would find a more robust source of randomness.
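The "1 bit of manipulation power" can be seen in a toy model of the mixing step, where each revealed secret is hashed and XORed into a running value. The reveals here are hypothetical byte strings (in Ethereum each reveal is the proposer's BLS signature over the epoch), and SHA-256 stands in for the protocol's hash.

```python
import hashlib

def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def mix_in(mix: bytes, reveal: bytes) -> bytes:
    """XOR the hash of a revealed secret into the running 32-byte mix."""
    return bytes(a ^ b for a, b in zip(mix, sha256(reveal)))

# Hypothetical reveals from earlier proposers
mix = sha256(b"initial mix")
for reveal in (b"reveal-1", b"reveal-2"):
    mix = mix_in(mix, reveal)

# The last proposer before the value is used can choose between two outcomes
if_proposes = mix_in(mix, b"reveal-3")
if_skips = mix  # not producing a block leaves the mix unchanged
```

The last proposer can compute both candidate values before deciding whether to publish, so they can pick whichever suits them, at the cost of a missed block. That binary choice is the manipulation power an on-chain lottery cannot tolerate.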

What is it and how does it work?

A Verifiable Delay Function (VDF) is a function that can only be computed sequentially and cannot be accelerated by parallelization. A simple example is a repeated hashing computation: execute for i in range(10**9): x = hash(x). The output, together with a SNARK proving its correctness, can be used as a random value. The idea is that the input is chosen based on information available at time T, while the output is not known at time T: it is only available some time later, after someone has fully run the computation. Since anyone can run this computation, the result cannot be withheld and therefore cannot be manipulated.
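The repeated-hashing example from the previous paragraph translates directly into code. SHA-256 is an assumed choice of hash, the iteration count is a toy value, and this sketch verifies by recomputation rather than with a SNARK, which is exactly the cost the SNARK is meant to avoid.

```python
import hashlib

def iterated_hash(x: bytes, iterations: int) -> bytes:
    """Apply SHA-256 `iterations` times; each step needs the previous output, so steps cannot run in parallel."""
    for _ in range(iterations):
        x = hashlib.sha256(x).digest()
    return x

def naive_verify(x: bytes, iterations: int, claimed: bytes) -> bool:
    """Verification by recomputation costs as much as evaluation; a SNARK would make it cheap."""
    return iterated_hash(x, iterations) == claimed

seed = b"information available at time T"
output = iterated_hash(seed, 100_000)  # toy iteration count; the text's example uses 10**9
```

The sequential structure is what the delay rests on: having many machines does not help, because step i + 1 cannot start until step i has finished. Only a faster single hashing pipeline (the hardware acceleration risk discussed next) shortens the delay.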

The main risk of a verifiable delay function is accidental optimization: someone finds a way to run the function much faster than expected, allowing them to manipulate the information they reveal at time T based on the future output. Accidental optimization can happen in two ways:

- Hardware acceleration: someone builds an ASIC that runs the computation loop much faster than existing hardware.

- Accidental parallelization: someone finds a way to run the function faster by parallelizing it, even if doing so requires 100x more resources.

The challenge in creating a successful VDF is to avoid both of these problems while remaining efficient enough to be practical (for example, one problem with the hash-based approach is that SNARKing hashes in real time has heavy hardware requirements). Hardware acceleration is typically addressed by having a public-good actor create and distribute reasonably-close-to-optimal ASICs for the VDF itself.

What existing research materials are there?

- VDF research website: https://vdfresearch.org/

- Thoughts on attacks against VDFs used in Ethereum, 2018: https://ethresear.ch/t/verifiable-delay-functions-and-attacks/2365

- Research on attacks against MinRoot (a proposed VDF): https://inria.hal.science/hal-04320126/file/minrootanalysis2023.pdf

What else needs to be done, and what are the trade-offs?

Currently, there is no VDF construction that fully satisfies Ethereum researchers in every way. Finding such a function will require more work. If we have such a function, the main trade-off is whether to include it in the protocol: it is simply a trade-off between functionality and protocol complexity and security risk. If we believe a VDF is secure, but it turns out to be insecure, then depending on how it is implemented, the security will be downgraded to the RANDAO assumption (1 bit of manipulation power per attacker) or slightly worse. Therefore, even if a VDF is flawed, it will not break the protocol itself, although it will break applications that rely heavily on it or any new protocol features.

How does it interact with the rest of the roadmap?

VDF is a relatively independent component of the Ethereum protocol. In addition to improving the security of proposer selection, it can also be used for: (i) on-chain applications that rely on random numbers, and potentially (ii) encrypted memory pools. However, the implementation of VDF-based encrypted memory pools still relies on other cryptographic breakthroughs that have not yet been realized.

One thing worth keeping in mind is that, given hardware uncertainty, there will be some "slack" between when a VDF output is produced and when it is needed. This means the information will be available a few blocks ahead of time. This may be an acceptable cost, but it should be taken into account when designing mechanisms such as finality or committee selection.

Obfuscation and One-Shot Signatures: The Future of Cryptography

What problem does it solve?

One of Nick Szabo’s most famous articles is a 1997 article on “The God Protocol.” In this article, he noted that multi-party applications often rely on a “trusted third party” to manage interactions. In his view, the role of cryptography is to create a simulated trusted third party to do the same job without actually trusting any specific participants.

So far, we have only partially approached this ideal. If all we need is a transparent virtual computer where data and computations cannot be shut down, censored, or tampered with, and privacy is not the goal, then blockchains can achieve this, albeit with limited scalability. If privacy is indeed the goal, then until recently we could only create a few specialized protocols for specific applications: digital signatures for basic authentication, ring signatures and linkable ring signatures for primitive forms of anonymity, identity-based cryptography for more convenient cryptography under certain trusted issuer assumptions, blind signatures for Chaumian electronic cash, etc. This approach requires a lot of work for each new application.

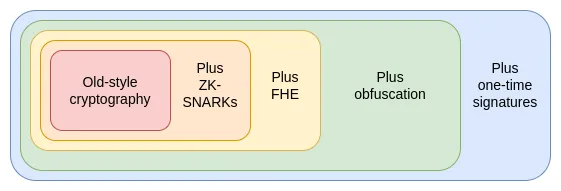

In the 2010s, we saw the first signs of a new and more powerful approach based on programmable cryptography. Instead of developing specialized protocols for each new application, we could use powerful new protocols (specifically ZK-SNARKs) to add cryptographic guarantees to arbitrary programs. ZK-SNARKs allow users to prove arbitrary statements about data they hold in a way that is (i) easy to verify and (ii) reveals nothing beyond the statement itself. This was a breakthrough for both privacy and scalability that I liken to the impact of the Transformer in AI: thousands of person-years of application-specific work were suddenly replaced by a general-purpose solution that can simply be plugged in to solve a wide range of problems, including unanticipated ones.

But ZK-SNARKs are just one of three extremely powerful general-purpose foundational protocols. These protocols are so powerful that they remind me of a set of cards from Yu-Gi-Oh, a card game and TV show I used to play and watch as a child: the Egyptian God Cards. The Egyptian God Cards are a trio of extremely powerful cards that, according to legend, can be deadly to create, and that are so powerful they are not allowed in duels. Similarly, cryptography has its own trio of Egyptian god protocols:

What is it and how does it work?

ZK-SNARKs are the most mature of the three protocols. Over the past five years, dramatic improvements in proving speed and developer-friendliness have made ZK-SNARKs a cornerstone of Ethereum's scalability and privacy strategy. However, ZK-SNARKs have a key limitation: you must know the data in order to prove things about it. Each piece of state in a ZK-SNARK application must have a single "owner" who must be present to authorize any reads or writes to it.

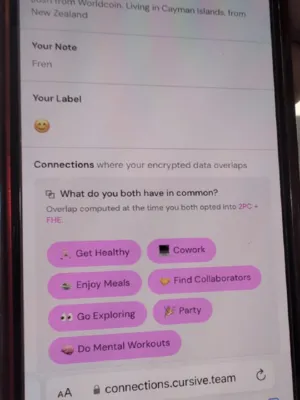

The second protocol, which does not have this limitation, is fully homomorphic encryption (FHE). FHE can perform arbitrary computations on encrypted data without seeing the underlying data. This makes it possible to compute on a user's data for the user's benefit while keeping both the data and the algorithm private. It can also extend voting systems such as MACI to provide near-perfect security and privacy guarantees. FHE was long considered too inefficient for practical use, but it is now becoming efficient enough that applications are starting to appear.
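Real FHE schemes are far too involved for a short example, so as a named stand-in, here is Paillier encryption, which is only additively homomorphic. It still shows the property that matters for voting: anyone can combine encrypted votes into an encrypted tally without decrypting any of them. The primes are toy-sized and insecure, and the helper names are illustrative; Python 3.8+ is needed for `pow(LAM, -1, N)`.

```python
import math
import secrets

# Toy primes (insecure); real deployments use primes of 1024 bits or more
P, Q = 293, 433
N = P * Q
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(p - 1, q - 1)
MU = pow(LAM, -1, N)  # valid because the generator is g = N + 1

def encrypt(m: int) -> int:
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    # With g = N + 1, g^m mod N^2 simplifies to 1 + m*N
    return (1 + m * N) * pow(r, N, N2) % N2

def decrypt(c: int) -> int:
    u = pow(c, LAM, N2)
    return (u - 1) // N * MU % N

def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod N)."""
    return c1 * c2 % N2

votes = [1, 0, 1, 1, 0]
tally = encrypt(0)
for v in votes:
    tally = add_encrypted(tally, encrypt(v))  # combined without decrypting any vote
print(decrypt(tally))  # 3
```

Note that whoever holds `LAM` and `MU` can decrypt any individual vote, which is the key-holder limitation discussed next.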

But FHE also has a limitation: any FHE-based system still requires someone to hold the decryption key. You can use a distributed M-of-N setup, and even add trusted execution environments (TEEs) as a second layer of defense, but this remains an inherent limitation.
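The M-of-N idea can be illustrated with Shamir secret sharing: the key is split into N shares such that any M of them recover it, while fewer reveal nothing. This sketch only shows the threshold property; real threshold-decryption schemes let shareholders decrypt jointly without ever reconstructing the key in one place, which this code does not do. The field modulus 2^127 - 1 (a Mersenne prime) and the helper names are illustrative choices.

```python
import secrets
from typing import List, Tuple

PRIME = 2**127 - 1  # Mersenne prime used as the field modulus (illustrative choice)

def split(secret: int, m: int, n: int) -> List[Tuple[int, int]]:
    """Split a secret into n shares so that any m of them recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be a field element")
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(m - 1)]
    shares = []
    for x in range(1, n + 1):
        y = 0
        for c in reversed(coeffs):  # evaluate the polynomial at x with Horner's rule
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares

def recover(shares: List[Tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0 recovers the polynomial's constant term."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret

key = 123456789
shares = split(key, m=3, n=5)  # a 3-of-5 setup
```

Any three of the five shares recover the key; with only two, every possible key is equally consistent with what the holders know.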

This leads to the third protocol, which is more powerful than the other two combined: indistinguishability obfuscation. Although it is still far from practical, as of 2020 there are protocols that are theoretically valid under standard security assumptions, and implementations have recently started to appear. Indistinguishability obfuscation lets you create an "encrypted program" that performs arbitrary computation while completely hiding its internal details. As a simple example, you can embed a private key in an obfuscated program that only allows the key to be used to sign prime numbers, and then distribute the program to others. Anyone can use the program to sign any prime number, but nobody can extract the private key. And this is only the tip of the iceberg: combined with hash functions, it can be used to implement any other cryptographic primitive, and more.

The only thing indistinguishability obfuscation cannot do is prevent the program itself from being copied. For that, an even more powerful technology is on the horizon, with the caveat that it requires everyone to have a quantum computer: quantum one-shot signatures.

With obfuscation and one-shot signatures together, we can build almost-perfect trustless third parties. The only thing that cryptography alone cannot provide, and that still requires a blockchain, is censorship resistance. These technologies could not only make Ethereum itself more secure, but also enable much more powerful applications to be built on top of it.

To understand how each of these foundational protocols adds capabilities, consider a typical example: voting. Voting is a hard problem because it must satisfy many complex security properties, especially verifiability and privacy. Although voting protocols with strong security properties have existed for decades, let's set a higher goal: a design that supports arbitrary voting protocols, including quadratic voting, pairwise-bounded quadratic funding, cluster-matching quadratic funding, and so on. In other words, we want the vote-counting step to be an arbitrary program.
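As an example of the kind of counting logic such a system would have to run, here is plain quadratic funding, where a project's total funding is the square of the sum of the square roots of its contributions. This is the textbook formula, not any of the named variants (pairwise-bounded and cluster-matching funding change it), and it omits the scaling real rounds apply to fit a fixed matching pool. The function name and sample data are illustrative.

```python
import math
from typing import Dict, List

def quadratic_funding(contributions: Dict[str, List[float]]) -> Dict[str, float]:
    """Plain quadratic funding: total = (sum of sqrt(contribution))^2; returns the matching subsidy."""
    subsidies = {}
    for project, amounts in contributions.items():
        total = sum(math.sqrt(a) for a in amounts) ** 2
        subsidies[project] = total - sum(amounts)  # subsidy on top of direct contributions
    return subsidies

print(quadratic_funding({"many_small": [1, 1, 1, 1], "one_large": [4]}))
# {'many_small': 12.0, 'one_large': 0.0}
```

Both projects raise 4 directly, but broad support earns a large subsidy while a single large donor earns none. Variants adjust exactly this logic, which is why a system that can run arbitrary counting programs is so valuable.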

- First, suppose we put the votes publicly on a blockchain. This gives us public verifiability (anyone can check that the final outcome is correct, including the tallying rules and the eligibility rules) and censorship resistance (no one can be stopped from voting), but no privacy.

- Next, we add ZK-SNARKs. Now we have privacy: each vote is anonymous, while it is still guaranteed that only authorized voters can vote and that each voter can vote only once.

- Then we add MACI. Votes are encrypted to a central server's decryption key. The server is required to run the tallying process, including throwing out duplicate votes, and to publish a ZK-SNARK proving that the result is correct. This keeps the previous guarantees (even if the server cheats!), and, if the server is honest, adds coercion resistance: a user cannot prove how they voted, even if they want to. While a user can prove that they cast a particular vote, they have no way to prove that they did not cast another vote that cancels it out. This prevents vote buying and other attacks.

- We run the tallying inside fully homomorphic encryption (FHE), and then decrypt the result with an N/2-of-N threshold decryption. This strengthens coercion resistance from a 1-of-1 trust assumption (the single server) to N/2-of-N.

- We obfuscate the tallying program and design it so that it can only produce an output when given permission, which can be a proof of blockchain consensus, some amount of proof of work, or both. This makes coercion resistance almost perfect: in the blockchain-consensus case, breaking it would require 51% of validators to collude, and in the proof-of-work case, even if everyone colludes, re-running the tally with different subsets of voters to extract a single voter's behavior would be extremely expensive. We can even have the program add a small random adjustment to the final tally, making it still harder to extract any individual voter's behavior.

- We add one-shot signatures, a primitive that depends on quantum computing and allows a signature to be used to sign a message of a given type only once. This makes coercion resistance truly perfect.
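The coercion-resistance argument for MACI can be sketched with a toy tally in which every voter has a current key, a message counts only if it is signed with that key, and a later valid vote replaces an earlier one. This is a simplified model under stated assumptions, not MACI's actual message format or processing order: there is no encryption, no real signature, and no ZK-SNARK, and the names `Message` and `tally` are hypothetical. It shows why presenting a coercer with a well-formed vote proves nothing: the voter may have privately rotated their key beforehand.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Message:
    voter: str
    signed_with: str               # key the message claims to be signed with (no real crypto)
    vote: Optional[str] = None     # option chosen, if this message casts a vote
    new_key: Optional[str] = None  # replacement key, if this message rotates keys


def tally(initial_keys: Dict[str, str], messages: List[Message]) -> Dict[str, int]:
    """Toy MACI-style tally: process messages in order, ignore any message
    not signed with the voter's current key, and let later valid votes win."""
    current_key = dict(initial_keys)
    ballot: Dict[str, str] = {}
    for m in messages:
        if current_key.get(m.voter) != m.signed_with:
            continue  # stale or wrong key: the message is simply ignored
        if m.new_key is not None:
            current_key[m.voter] = m.new_key
        if m.vote is not None:
            ballot[m.voter] = m.vote
    counts: Dict[str, int] = {}
    for choice in ballot.values():
        counts[choice] = counts.get(choice, 0) + 1
    return counts


keys = {"alice": "k1", "bob": "kb"}
messages = [
    Message("alice", "k1", new_key="k2"),  # Alice privately rotates her key first
    Message("alice", "k1", vote="A"),      # vote shown to a coercer; old key, so ignored
    Message("alice", "k2", vote="B"),      # Alice's real vote
    Message("bob", "kb", vote="A"),
]
result = tally(keys, messages)
print(result)  # {'B': 1, 'A': 1}
```

In the real system the messages are encrypted to the server, so the coercer cannot see whether a key-rotation message exists; in this plaintext model the point is only that a valid-looking vote can be silently void.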

Indistinguishability obfuscation also enables other powerful applications. For example:

- DAOs, on-chain auctions, and other applications with arbitrary internal secret state.

- A truly universal trusted setup: someone can create an obfuscated program that contains a key and can run any program and output the result, feeding hash(key, program) into the program as an input. Given such a program, anyone can also feed the program into itself to combine its existing key with their own, thereby extending the setup. This can be used to generate a 1-of-N trusted setup for any protocol.

- ZK-SNARKs whose verification is just a signature check. Implementing this is simple: run a trusted setup, and have it create an obfuscated program that signs a message with its key only if the message is a valid ZK-SNARK.

- Encrypted mempools. It becomes very easy to encrypt transactions so that they are decrypted only when some specific on-chain event happens in the future. This could even include the successful execution of a VDF (verifiable delay function).
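The trusted-setup item above can be modeled functionally: a program holds a secret key, runs caller-supplied programs with hash(key, program) as an input, and can be extended by folding in a new contributor's key. This is only a sketch of the interface under stated assumptions: the key is an ordinary Python attribute rather than being hidden by obfuscation, a program is identified by a source string (`program_source`) because Python callables have no canonical hash, and SHA-256 is an assumed choice of hash function. The class `SetupProgram` is hypothetical.

```python
import hashlib
import secrets
from typing import Callable


class SetupProgram:
    """Hypothetical functional model of an obfuscated universal-setup program."""

    def __init__(self, key: bytes):
        # In the real construction this key would be hidden by iO;
        # here it is only private by naming convention.
        self._key = key

    def run(self, program_source: str, program: Callable[[bytes], bytes]) -> bytes:
        # Feed hash(key, program) into the caller's program, so each program
        # gets its own derived secret without being handed the raw key.
        derived = hashlib.sha256(self._key + program_source.encode()).digest()
        return program(derived)

    def extend(self, contributor_key: bytes) -> "SetupProgram":
        # Fold a new contributor's key into the existing one. In the real
        # scheme, where keys stay hidden inside the obfuscated program, the
        # combined key is unknown to everyone as long as at least one
        # contributor keeps their key secret (1-of-N trust). This model
        # exposes the key, so it only illustrates the data flow.
        return SetupProgram(hashlib.sha256(self._key + contributor_key).digest())


setup = SetupProgram(secrets.token_bytes(32))
for _ in range(3):  # three more contributors extend the setup
    setup = setup.extend(secrets.token_bytes(32))

# Example "protocol": take the first 16 bytes of the derived secret as a seed.
seed = setup.run("example-protocol-v1", lambda derived: derived[:16])
print(len(seed))  # 16
```

Because each program receives a secret derived from its own identifier, one setup can serve many protocols without their secrets colliding.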

With one-shot signatures, we can make blockchains immune to 51% attacks that revert finality, although censorship attacks would still be possible. Primitives similar to one-shot signatures enable quantum money, which solves the double-spending problem without a blockchain, though many more complex applications would still need a chain. If these primitives can be made efficient enough, most applications in the world could be decentralized. The main remaining bottleneck would be verifying that implementations are correct.

What existing research materials are there?

- Indistinguishability obfuscation protocol from 2021: https://eprint.iacr.org/2021/1334.pdf

- How obfuscation can help Ethereum: https://ethresear.ch/t/how-obfuscation-can-help-ethereum/7380

- A construction of one-shot signatures: https://eprint.iacr.org/2020/107.pdf

- An attempt at implementing obfuscation (1): https://mediatum.ub.tum.de/doc/1246288/1246288.pdf

- An attempt at implementing obfuscation (2): https://github.com/SoraSuegami/iOMaker/tree/main

What else needs to be done, and what are the trade-offs?

There is still a great deal left to do. Indistinguishability obfuscation is very immature, and candidate constructions are millions of times (if not more) too slow to be usable in applications. It is famous for having runtimes that are "theoretically" polynomial-time but would take longer than the lifetime of the universe in practice. More recent protocols have made runtimes less extreme, but the overhead is still far too high for everyday use: one implementer expects a single run to take a year.

Quantum computers do not really exist yet: every construction you might read about online today is either a prototype that cannot perform any computation larger than 4 bits, or not a real quantum computer, in the sense that while it may contain quantum components, it cannot run meaningful computations such as Shor's algorithm or Grover's algorithm. Recently there have been signs that "real" quantum computers are no longer that far away. However, even if "real" quantum computers arrive soon, the day when ordinary people have quantum computers on their laptops or phones may well come decades after the day when powerful institutions get one capable of breaking elliptic curve cryptography.

For indistinguishability obfuscation, one key trade-off lies in the security assumptions. More aggressive designs rely on exotic assumptions; these often have more realistic runtimes, but exotic assumptions sometimes end up being broken. Over time, we may come to understand lattices well enough to make assumptions that do not get broken, but this path is riskier. The more conservative path is to insist on protocols whose security provably reduces to "standard" assumptions, but this may mean it takes much longer to get protocols that run fast enough.

How does it interact with the rest of the roadmap?

Strong cryptography could be a game changer. For example:

- If ZK-SNARKs become as easy to verify as a signature, we may not need any aggregation protocols at all; we could simply verify on-chain directly.

- One-shot signatures could enable much more secure proof-of-stake protocols.

- Many complicated privacy protocols could be replaced by "just" a privacy-preserving EVM.

- Encrypted mempools become much easier to implement.

At first, the benefits would appear at the application layer, because Ethereum L1 inherently needs to be conservative about its security assumptions. However, application-layer use alone could be game-changing, potentially as much as the advent of ZK-SNARKs has been.

Disclaimer: As a blockchain information platform, the articles published on this site only represent the personal opinions of the author and the guest, and have nothing to do with the position of Web3Caff. The information in the article is for reference only and does not constitute any investment advice or offer. Please comply with the relevant laws and regulations of your country or region.
