Author: Xin

As one of the fastest-growing products ever, Cursor reached $100 million in ARR just 20 months after its launch. Within two years, it had exceeded $300 million in ARR, and it continues to lead a transformation in how engineers and product teams build software. As of early 2025, Cursor has more than 360,000 paying users.

Michael Truell is the co-founder and CEO of Anysphere, the parent company of Cursor. He founded Anysphere with three MIT classmates, and they launched Cursor within three months. Truell rarely gives podcast interviews; his only previous appearance was on the Lex Fridman Podcast. In this episode, he shares his predictions for the "post-code era", the counterintuitive lessons from building Cursor, and his views on where engineering is headed.

This content comes from Lenny's Podcast. The following is a translation of the full text.

- Cursor's goal is to create a new way of programming: in the future, people will work with pseudocode that reads more like English sentences. They will keep strong control over the details of their software and will be able to modify and iterate on it very quickly.

- “Taste” will become more and more valuable: the core of “taste” is a clear understanding of “what should be built”.

- The users who are best at using AI are conservative in how they use it: they are very good at keeping the tasks they hand to the AI small and specific.

- The core of Cursor's interview process is a two-day assessment. The projects are simulated, but candidates produce real work in those two days. The assessment tests whether both sides want to work together, and it also matters a great deal for attracting candidates. Often the only thing that draws people to an early-stage company is a team they feel is worth working with.

The main problem with chatbot-style programming is the lack of precision

Lenny: We talked about what will happen in the post-code era. How do you see Cursor's future development direction? How will technology move from traditional code to other forms?

Michael Truell: The goal of Cursor is to create a completely new way of programming, a different way of building software. You describe your intent to the computer in the simplest possible way, defining how the software should work and how it should look.

As today's technology continues to mature, we believe we can create a whole new way to build software that will be more advanced, more efficient, and easier to use than today. This process will be very different from the way software is written today.

I would like to contrast this with several mainstream views on the future of software, some of which are popular right now and which we don't quite agree with.

One view is that building software in the future will look very much like it does today, relying mainly on editing text in formal programming languages such as TypeScript, Go, C, and Rust. Another view is that you will simply type instructions into a chatbot, let it build the software for you, and then ask it to modify the software whenever you like. This style of chatbot is like talking to your engineering department.

We think both of these visions are problematic.

The main problem with chatbot-style programming is the lack of precision. If you want people to have full control over how their software looks and works, you need to give them more precise ways to specify the changes they want. Typing "change this part of my app" to a bot in a chat box and then watching the whole thing get deleted is not enough.

On the other hand, the view that nothing will change is also wrong, because the technology is only going to get more powerful. In the "post-code" world we envision, software logic will be expressed in a form closer to English.

You can imagine it as something more formal than plain English, moving toward pseudocode. You will be able to write the logic of the software, edit it at a high level, and navigate through it easily. It won't be millions of lines of incomprehensible code. Instead, it will be clearer and easier to understand and navigate. We are working on turning dense symbols and code structures into a form that is easier for humans to read and edit.

In the post-code era, taste will become more and more valuable

Lenny: That's very profound, and I want to make sure that people understand your point. The shift you're envisioning is that people won't see code anymore, and won't need to think in terms of JavaScript or Python anymore. Instead, there will be a more abstract form of expression, a pseudocode that's closer to English sentences.

Michael Truell: We think it will eventually get there. We believe getting there requires existing professional engineers to take part and drive it forward. People will still be in the driver's seat.

People will have strong control over the details of software and will not give up this control easily. People will also have the ability to modify and iterate very quickly. The future will not rely on the kind of slow engineering that happens in the background and takes weeks to complete.

Lenny: This brings up a question. For current engineers, or those who are considering becoming engineers, designers, or product managers, what skills do you think will become increasingly valuable in the "post-code era"?

Michael Truell: I think "taste" will become more and more valuable. When people talk about taste in the software field, they tend to think of visual effects, smooth animations, color matching, UI, UX, and so on.

Visuals are very important for a product, but as I mentioned before, I think the other half is the logic and how the product works.

We have many tools for designing visuals, but code is still the best way to express how software behaves. You can show the visuals in Figma or sketch them roughly in your notes, but the logic only becomes clear once you have a working prototype.

In the future, engineers will become more and more like "logic designers". They will need to express their intent precisely. Their focus will shift from the behind-the-scenes "how to implement it" to the high-level "what to build" and "what it is", which means "taste" will matter more in software development.

We are not there yet in software engineering. Plenty of funny and thought-provoking stories are circulating online about people relying too heavily on AI to build software. The results have obvious bugs and broken features.

But I believe that software engineers in the future won't need to control every detail as closely as they do today. We will gradually shift from rigor and meticulousness toward "taste".

Lenny: This reminds me of vibe coding. Is it similar to what you described as a way of programming where you don't have to think about the details too much and you can just let it flow?

Michael Truell: I think they are related. The current discussion of vibe coding describes a controversial way of creating: generating a lot of code without really understanding the details. This causes a lot of problems. If you don't understand the low-level details, you will quickly find that what you've built becomes too large and too hard to modify.

What we are actually interested in is how people can precisely control every detail without fully understanding the underlying code. That goal is closely related to vibe coding.

We still lack the ability to let "taste" truly guide how software is built. One problem with vibe coding and similar approaches is that you can create something, but many of its decisions are clumsy ones made by the AI, and you can't fully control them.

Lenny: You mentioned "taste". What exactly do you mean?

Michael Truell: It means having a clear idea of what you should build, an idea that will become easier and easier to translate directly into software: this is the software I want, it looks like this, and it works like this.

Today, when you and your team have a product idea, you still need a translation layer. It takes a lot of effort and labor to convert the idea into a form that computers can understand and execute.

"Taste" has little to do with UI. Maybe "taste" isn't quite the right word, but at its core it means having a correct understanding of "what should be built".

The birth of Cursor originated from the exploration of a problem

Lenny: I want to go back to the origins of Cursor, which many listeners may not know. You are building one of the fastest-growing products in history. Cursor is profoundly changing the way people build products and even the entire industry. How did it all start? What are some memorable moments from the early days?

Michael Truell: The birth of Cursor stems from our exploration of a problem, and also largely from our thinking about how AI will get better in the next decade. There are two key moments.

The first was when we first used the beta version of Copilot. It was our first exposure to a practical AI product that actually helped us, rather than a flashy demo. Copilot was one of the most valuable development tools we had ever used, and we were very excited.

The second was a series of papers on model scaling published by OpenAI and others. These studies showed that even without breakthrough new ideas, AI would keep getting more capable simply by increasing model size and the amount of training data. By the end of 2021 and the beginning of 2022, we were confident about the prospects of AI products and felt this technology was destined to mature.

When we looked around, we found that many people were talking about how to build models. Few were going deep into a specific area of knowledge work to think about how that area would change as AI advanced.

This makes us wonder: As this technology matures, how will these specific areas change in the future? What will the final form of work be like? How will the tools we will use to complete our work evolve? What level of model needs to be achieved to support these changes in work forms? Once model scaling and pre-training cannot be further improved, how can we continue to push the boundaries of technical capabilities?

Our mistake at the beginning was choosing a boring field with little competition, on the theory that no one would pay attention to such a boring field.

At that time, everyone thought programming was hot and interesting, but we felt that many people were already doing it.

For the first four months, we actually worked on a completely different project: automating and augmenting mechanical engineering by building tools for mechanical engineers.

But we ran into problems right from the start. My co-founder and I were not mechanical engineers. We had friends in the field, but we were extremely unfamiliar with it, like the blind men trying to describe an elephant. For example, how could we actually apply existing models to mechanical engineering? At the time, we concluded that we had to build our own models from scratch. That was very tricky. There was almost no public data online on 3D models of tools and parts or the steps to build them. Getting that data from the sources that did have it was just as hard.

But eventually we came to our senses and realized that mechanical engineering wasn’t something we were very interested in and it wasn’t something we wanted to dedicate our lives to.

When we looked back at programming, it seemed that even though quite some time had passed, not much had changed. The people working in this space seemed out of step with how we saw things. They lacked ambition and vision about where programming was heading and how AI would reshape everything. That realization led us down the path of building Cursor.

Lenny: I like the advice to go after seemingly boring industries where there is less competition, and sometimes that works. But I also like the opposite idea of boldly going after the hottest, most popular fields, such as AI programming and application development, and it turns out that can work too.

You feel that existing tools lack enough ambition or potential, and that there is more that can be done. I think that's a really valuable takeaway. Even if it seems like it's too late in an area, and products like GitHub Copilot already exist, if you find that existing solutions aren't ambitious enough, don't meet your standards, or are flawed in their approach, there's still a huge opportunity there. Is that right?

Michael Truell: I totally agree. If we want to achieve breakthrough progress, we need to find something specific to do. The fascinating thing about AI is that there is still a huge unknown space in many areas, including AI programming.

The ceiling is very high in many areas. Even with the best tools in any area, there is still a lot of work to be done in the next few years. Having such a wide space and such a high ceiling is quite unique in software development, at least in AI.

Cursor emphasizes dogfooding from the beginning

Lenny: Let's go back to the IDE (integrated development environment) question. There are several different routes, and other companies are trying them too.

One is to build an IDE for engineers and integrate AI capabilities into it. Another is a fully autonomous AI agent, as in products like Devin. A third is to focus on building a model that is very good at coding, aiming to create the best coding model.

What made you decide that IDE was the best route?

Michael Truell: People who are focused on developing models from the beginning, or trying to automate programming end to end, are trying to build something very different than we are.

We are more focused on ensuring that people have control over all decisions in the tools they build. In contrast, they are more envisioning a future where AI does the entire process, or even takes charge of all decisions.

So on the one hand, our choices were driven by interest. On the other hand, we always try to look at the current state of the art very realistically. We are extremely excited about the potential of AI over the next few decades. But people sometimes anthropomorphize these models. They see AI perform well in one area and conclude that it is smarter than humans there. Then they assume it must be good in other areas too.

But these models still have major problems. From the beginning, our product development has emphasized "dogfooding". We use Cursor heavily every day and never want to ship a feature that isn't useful to us.

We are end users of the product ourselves, which gives us a realistic view of the current state of the art. We believe that it is critical to keep people in the “driver’s seat” and that AI cannot do everything.

There were personal reasons too. We wanted to give users this control. That meant not being just a model company and not taking an end-to-end approach that takes control away from people.

As for why we chose to build an IDE instead of developing a plugin for an existing programming environment, it is because we firmly believe that programming will be done through these models and the way of programming will change dramatically in the next few years. The extensibility of existing programming environments is so limited that if you believe that the UI and programming models will change disruptively, then you must have full control over the entire application.

Lenny: I know you are currently working on IDEs, and maybe this is your bias, and this is the direction you think the future is going. But I'm curious, do you think a large part of the work in the future will be done by AI engineers in tools like Slack? Will this approach be incorporated into Cursor one day?

Michael Truell: I think the ideal is that you can switch between these things easily. Sometimes you might want to let the AI run on its own for a while; sometimes you want to pull the AI's work out and work with it efficiently. Sometimes you might let it run autonomously again.

I think you need a unified environment for these background and foreground modes to work well together. Background work suits programming tasks where very little explanation is needed to specify the requirements precisely and to define what counts as correct. Bug fixing is a good example, but that is definitely not all of programming.

The nature of IDEs will change radically over time. We chose to build our own editor because it will evolve. This evolution will include the ability to take over tasks from different interfaces, like Slack and issue tracking systems; the glass screen you stare at will also change dramatically. We currently think of IDEs as places where you build software.

The most successful users of AI are conservative in their use of the technology

Lenny: I think one thing people don't fully realize when they talk about agents and AI engineers is that we'll all become "engineering managers" of a lot of reports who aren't that smart yet. You'll have to spend a lot of time reviewing, approving, and specifying requirements. What are your thoughts on this? Is there any way to streamline the process?

Because it sounds like this is really not easy. Anyone who has managed a large team will understand that: "These subordinates always come to me repeatedly with work of varying quality. It's so torturous."

Michael Truell: Yeah, maybe eventually we'll have to have one-on-one conversations with all of these Agents.

We've observed that the users who are most successful with AI are the ones who are more conservative in how they use it. Right now, the most successful users rely heavily on features like our Next Edit Prediction. As they program, the AI predicts what they will do next. They are also very good at keeping the tasks they give the AI smaller and more clearly scoped.

Given the time you spend on code review, there are two main ways to work with an agent. One is to spend a lot of time up front explaining in detail, let the AI work independently, and then review its results. Once you've finished reviewing, the whole task is done.

The other is to break the task into smaller pieces. You specify a small piece at a time, let the AI complete it, and review it. Then you give further instructions, let the AI continue, and review again. It's a bit like having autocomplete running throughout the whole process.

In practice, we often observe that the users who get the most out of these tools still prefer to break tasks down and keep them manageable.

Lenny: That's a rare thing. I want to go back to when you first built Cursor. When did you realize it was ready? When did you feel like it was time to just release it and see what happens?

Michael Truell: When we first started working on Cursor, we were worried that it would take too long to develop and that we wouldn't be able to release it to the outside world. The initial version of Cursor was completely "hand-built" from scratch. Now we use VS Code as the foundation, just like many browsers use Chromium as the core.

But it wasn't like that at the beginning. We built the Cursor prototype from scratch, which was a lot of work. We had to build many features that modern code editors need ourselves. These included support for multiple programming languages, code navigation, and error tracking. We also needed a built-in terminal and the ability to connect to remote servers to view and run code.

We developed at lightning speed, building our own editor from scratch while developing the AI components at the same time. After about five weeks, we had switched over to our own editor full-time and dropped our previous editor entirely. When we felt it had become useful enough, we gave it to others to try and ran a very short beta test.

It took only about three months from the first line of Cursor's code to its public release. Our goal was to get the product into users' hands as soon as possible and iterate quickly based on public feedback.

We expected the tool to attract only a few hundred users for a long time. To our surprise, a lot of users poured in from the start with feedback. That early feedback was extremely valuable. It was what led us to abandon our from-scratch version and build on VS Code instead. Since then, we have kept improving the product in public.

Launched the product in three months and achieved $100 million in ARR in one year

Lenny: I appreciate your modesty about your achievements. As far as I know, you guys went from 0 to $100 million in ARR in about a year to a year and a half, which is absolutely historic.

What do you think are the key factors to your success? You mentioned using your own product as one of them. But it’s incredible that you guys were able to launch the product in three months. What was the secret behind that?

Michael Truell: The first version, which was completed in three months, was not perfect. So we always had a constant sense of urgency and always felt that there were many things we could do better.

Our ultimate goal is to truly create a new programming paradigm that can automate a lot of the coding work we know today. No matter how much progress Cursor has made so far, we feel that we are still far away from that ultimate goal and there is always a lot to do.

Many times, we don’t focus too much on the initial release results, but instead focus on the continued evolution of the product and are committed to continuously improving and perfecting this tool.

Lenny: Was there a turning point after those three months where everything started to take off?

Michael Truell: To be honest, the growth felt pretty slow at first, maybe because we were a little impatient, but in terms of the overall growth rate, it continues to surprise us.

I think the most surprising thing is that this growth has actually remained a steady exponential trend, with continued growth every month, although new version releases or other factors sometimes accelerate this process.

Of course, this exponential growth feels pretty slow in the beginning and the base is really low, so it doesn’t really take off in the beginning.

Lenny: This sounds like a case of "build it and they will come." You just built a tool you liked, and once you released it, people liked it, and word of mouth spread.

Michael Truell: Yes, that's pretty much it. Our team put most of its energy into the product itself and didn't get distracted by other things. Of course, we also spent time on other important work, such as building the team and taking turns on user support.

However, we deliberately neglected some of the routine work that many startups pour energy into early on, especially sales and marketing.

We focus all our energy on polishing the product, first creating a product that our team likes, and then iterating based on feedback from some core users. This may sound simple, but it is actually not easy to do well.

There are so many directions to explore, so many different product paths. One of the hard parts is staying focused, strategically choosing the key features to build, and prioritizing them.

Another challenge is that our space represents a completely new model for product development: we sit somewhere between a traditional software company and a foundation model company.

We are building products for millions of users, which requires excellence at the product level. But another important dimension of product quality is continually deepening our research and model development and optimizing the models themselves for key scenarios. Balancing the two is always challenging.

The most counterintuitive thing: we didn't expect to develop our own models

Lenny: What’s the most counterintuitive thing you’ve found so far in building Cursor and building AI products?

Michael Truell: The most counterintuitive thing for me is that we didn't expect to develop our own models at the beginning. When we entered this field, some companies were already focused on model training from day one. We also calculated the cost and resources required to train GPT-4, and it was clear that this was beyond us.

There were already many excellent models on the market, so why bother copying what others had already done? This was especially true of pre-training, which means taking a neural network that knows nothing and teaching it the entire Internet. So at first we had no intention of going down this path. From the beginning, we knew that existing models could do many things that weren't yet being realized, because the right tools hadn't been built around them. Even so, we ended up investing a lot of energy in model development.

Every "magic moment" you experience when using Cursor comes, to some degree, from our custom models. It happened gradually. We first tried training our own model for one use case that no mainstream foundation model was suited to, and it worked. Then we extended the idea to another use case, which also worked well, and we kept going from there.

When developing this kind of model, the key is to choose your target carefully and not reinvent the wheel. We don't touch areas where the top foundation models already do very well. Instead, we focus on their shortcomings and how to make up for them.

Lenny: A lot of people are surprised to hear that you have your own model. Because when people talk about Cursor and other products in this space, they often call them "GPT shells" and think they are just tools built on models like ChatGPT or Sonnet. But you mentioned that you actually have your own model. Can you talk about the technology stack behind this?

Michael Truell: We do use the leading foundation models in a variety of scenarios.

We rely on our own models for critical parts of the Cursor experience. These are especially use cases that foundation models can't handle because of cost or speed. One example is autocompletion.

For people who don't write code, this may be hard to picture. Writing code is unusual work. Sometimes what you are doing right now predicts what you will do over the next 5, 10, or 20 minutes, or even the next half hour.

With prose, many people are familiar with Gmail's autocomplete and the suggestions that pop up when writing text messages or emails. But these features are of limited use, because it is often hard to infer what you will write next from what you have already written.

But when writing code, when you modify a part of the code base, you often need to modify other parts of the code base at the same time, and the content that needs to be modified is very obvious.

One of the core features of Cursor is this enhanced auto-completion, which can predict the series of operations you want to perform next across multiple files and different locations within files.

For the model to perform well in this scenario, it must be fast enough to ensure that the completion result is given within 300 milliseconds. Cost is also an important factor. Every keystroke triggers thousands of inferences and continuously updates the prediction of your next operation.
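To make the latency constraint concrete, here is a minimal sketch of one plausible client-side policy. It rests on assumptions that are ours and not described in the interview: each keystroke issues a fresh prediction request, and a result is shown only if it belongs to the most recent request and arrived within the roughly 300 ms budget. `LatestOnlyCompleter` and its methods are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import Optional

LATENCY_BUDGET_MS = 300  # the ~300 ms target mentioned in the interview


@dataclass
class Completion:
    request_id: int
    text: str
    sent_at_ms: int
    received_at_ms: int


class LatestOnlyCompleter:
    """Hypothetical policy: one request per keystroke; show only fresh, in-budget results."""

    def __init__(self, budget_ms: int = LATENCY_BUDGET_MS) -> None:
        self.budget_ms = budget_ms
        self.latest_id = 0

    def on_keystroke(self) -> int:
        # Each keystroke supersedes every earlier request and issues a new one.
        self.latest_id += 1
        return self.latest_id

    def on_result(self, c: Completion) -> Optional[str]:
        if c.request_id != self.latest_id:
            return None  # stale: the user has typed since this request was sent
        if c.received_at_ms - c.sent_at_ms > self.budget_ms:
            return None  # too slow: arriving this late would disrupt typing
        return c.text


if __name__ == "__main__":
    completer = LatestOnlyCompleter()
    first = completer.on_keystroke()
    second = completer.on_keystroke()
    print(completer.on_result(Completion(first, "foo()", 0, 120)))    # None (stale)
    print(completer.on_result(Completion(second, "bar()", 50, 200)))  # bar()
    print(completer.on_result(Completion(second, "baz()", 50, 420)))  # None (over budget)
```

Under this policy, most requests are thrown away as the user keeps typing. That is one way to see why per-request cost matters as much as speed.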

This also involves a very special technical challenge: we need the model to not only be able to complete the next token like processing a normal text sequence, but also to be good at completing a series of diffs (code changes), that is, based on the modifications that have occurred in the code base, predict the additions, deletions, and changes that may occur next.
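The difference between predicting the next token and predicting the next diff can be illustrated with a deliberately simple, rule-based stand-in. Cursor's actual model is learned, and its design is not described here. This sketch assumes only that the input is a recent edit and the output is a list of proposed edits elsewhere in the codebase. The hypothetical helpers `infer_rename` and `predict_next_edits` detect a single identifier rename. They then propose the same change wherever the old name still appears, which is the kind of "obvious" follow-up change described above.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

IDENT = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


@dataclass(frozen=True)
class Edit:
    path: str
    line_no: int  # 1-based
    before: str
    after: str


def infer_rename(edit: Edit) -> tuple[str, str] | None:
    """Return (old, new) if the edit swapped exactly one identifier, else None."""
    old_toks = IDENT.findall(edit.before)
    new_toks = IDENT.findall(edit.after)
    if len(old_toks) != len(new_toks):
        return None
    changed = {(o, n) for o, n in zip(old_toks, new_toks) if o != n}
    return changed.pop() if len(changed) == 1 else None


def predict_next_edits(last_edit: Edit, files: dict[str, list[str]]) -> list[Edit]:
    """Propose the same rename wherever the old identifier still appears."""
    rename = infer_rename(last_edit)
    if rename is None:
        return []
    old, new = rename
    pattern = re.compile(rf"\b{re.escape(old)}\b")  # whole-identifier matches only
    predictions = []
    for path, lines in files.items():
        for i, line in enumerate(lines, start=1):
            if pattern.search(line):
                predictions.append(Edit(path, i, line, pattern.sub(new, line)))
    return predictions


if __name__ == "__main__":
    # The user just renamed `total` to `total_price` on line 1 of cart.py.
    last = Edit("cart.py", 1, "def total(items):", "def total_price(items):")
    files = {
        "cart.py": ["def total_price(items):", "    return sum(i.price for i in items)"],
        "checkout.py": ["from cart import total", "amount = total(basket)"],
    }
    for p in predict_next_edits(last, files):
        print(f"{p.path}:{p.line_no}: {p.before!r} -> {p.after!r}")
    # checkout.py:1: 'from cart import total' -> 'from cart import total_price'
    # checkout.py:2: 'amount = total(basket)' -> 'amount = total_price(basket)'
```

The point of the sketch is the interface. It takes edits in and produces diffs out, across files, rather than a continuation of the text at the cursor. A learned model generalizes this far beyond a single hard-coded rule.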

We trained a model specifically for this task, and it worked very well. This part was developed entirely in-house and does not rely on any of the mainstream foundation models. We never labeled or branded this technology, but it is at the core of Cursor.

Another place we use our own models is to improve the performance of large models like Sonnet, Gemini, or GPT, on both the input and output side.

During the input phase, our model searches the entire codebase and identifies the relevant parts that need to be shown to these large models. You can think of it as a "mini Google search" specifically for finding relevant content in the codebase.
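A "mini Google search" over a codebase can be sketched with classic lexical ranking. The interview says Cursor uses its own trained models for this step. The BM25 scoring below is a swapped-in, well-known technique used only to show the shape of the task. A query goes in, ranked code chunks come out, and the top few would be placed into the large model's prompt. The chunk IDs, the identifier-splitting tokenizer, and the `BM25Index` class are illustrative assumptions.

```python
from __future__ import annotations

import math
import re
from collections import Counter

# Split camelCase, PascalCase, snake_case and ACRONYMS into lowercase words.
WORD = re.compile(r"[A-Z]?[a-z]+|[A-Z]+(?![a-z])|\d+")


def tokenize(text: str) -> list[str]:
    return [w.lower() for w in WORD.findall(text)]


class BM25Index:
    """Lexical BM25 ranking over code chunks (a stand-in, not Cursor's retriever)."""

    def __init__(self, chunks: dict[str, str], k1: float = 1.5, b: float = 0.75) -> None:
        self.k1, self.b = k1, b
        self.tf = {cid: Counter(tokenize(text)) for cid, text in chunks.items()}
        self.length = {cid: sum(c.values()) for cid, c in self.tf.items()}
        self.n = len(self.tf)
        self.avg_len = sum(self.length.values()) / max(self.n, 1)
        # Document frequency: number of chunks containing each term.
        self.df = Counter(term for counts in self.tf.values() for term in counts)

    def idf(self, term: str) -> float:
        df = self.df.get(term, 0)
        return math.log(1 + (self.n - df + 0.5) / (df + 0.5))

    def search(self, query: str, top_k: int = 3) -> list[str]:
        scores: dict[str, float] = {}
        for cid, counts in self.tf.items():
            score = 0.0
            for term in tokenize(query):
                f = counts.get(term, 0)
                if f == 0:
                    continue
                # Term-frequency saturation (k1) and length normalization (b).
                denom = f + self.k1 * (1 - self.b + self.b * self.length[cid] / self.avg_len)
                score += self.idf(term) * f * (self.k1 + 1) / denom
            if score > 0:
                scores[cid] = score
        ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
        return [cid for cid, _ in ranked[:top_k]]


if __name__ == "__main__":
    chunks = {
        "auth.py:login": "def login(user, password):\n    token = issue_token(user)\n    return token",
        "auth.py:refreshToken": "def refreshToken(old):\n    return rotate(old)",
        "db.py:connect": "def connect(url):\n    return Engine(url)",
    }
    index = BM25Index(chunks)
    print(index.search("where do we issue a token", top_k=2))
    # ['auth.py:login', 'auth.py:refreshToken']
```

Splitting camelCase and snake_case identifiers lets a natural-language query like "issue a token" match `issue_token`. Real retrieval systems often add learned embeddings on top of, or instead of, purely lexical scoring like this.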

In the output phase, we take the change suggestions produced by these large models and use our specially trained models to fill in the details. The more advanced models handle the high-level logic, spending some tokens to sketch the overall direction. Smaller, specialized, extremely fast models then take over. Combined with some inference optimization techniques, they turn those high-level suggestions into complete, applicable code changes.

This approach has greatly improved quality on specialized tasks and greatly sped up responses. For us, speed is also a key metric for our products.

Lenny: That’s interesting. I recently interviewed Kevin Weil, CPO of OpenAI, on a podcast, and he called this an ensemble of models.

They also take advantage of what each model does best. Using cheaper models can be very cost-effective. Are the models you train yourself based on open source models such as LLaMA?

Michael Truell: We are very pragmatic about this and don't want to reinvent the wheel. We start from the best pre-trained models available, usually open-source ones. Sometimes we work with providers of large models whose weights aren't public. We care less about being able to read the weights themselves than about being able to train and post-train the model.

The ceiling of AI products is like that of personal computers and search engines in the last century

Lenny: Many AI entrepreneurs and investors are thinking about the same question: where are the moats and defensibility in AI? Custom models seem to be one way to build a moat. How do you build long-term defensibility when competitors are constantly launching new products and trying to take your business?

Michael Truell: I think there are some traditional ways to build user inertia and moats. But at the end of the day, we have to keep working hard to build the best products. I firmly believe that the ceiling of AI is very high, and no matter what barriers you build, they may be surpassed at any time.

This market is somewhat different from the traditional software market or enterprise market in the past. An example similar to this market is the search engine in the late 1990s and early 2000s. Another is the development of personal computers and minicomputers in the 1970s, 1980s and 1990s.

The ceiling for AI products is very high, and products iterate quickly. Each additional hour from a smart person and each additional dollar of R&D can keep producing large returns, and this can last a long time. You will never run out of new features to build.

In search especially, greater distribution also improves the product, because you can keep iterating on algorithms and models based on user data and feedback. I believe the same dynamics exist in our industry.

This may be a somewhat daunting reality for us, but it is an exciting one for the world. Many leading products will emerge, and there are a great many meaningful features waiting to be built.

We are still a long way from our vision of five to ten years from now, and what we need to do is keep the innovation engine running at high speed.

Lenny: It sounds more like building a "consumer-style" moat: you keep offering the best product so that users want to keep using it, rather than signing company-wide contracts, as Salesforce does, that oblige employees to use it.

Michael Truell: Yes. I think the key point is this: if you are in a field where the valuable work will soon run out, the outlook is not good. But if you are in a field where heavy investment and the efforts of outstanding people keep producing value, you can benefit from economies of scale in R&D, push the technology further in the right direction, and build barriers.

This does have a somewhat consumer-like flavor. But at the core of all of it is building the best product.

Lenny: Do you think this will be a "winner takes all" market in the future, or will many differentiated products emerge?

Michael Truell: I think this market is huge. You mentioned IDEs earlier. Some people looking at this space look back at the IDE market of the past decade and ask, "Who makes money building editors?" In the past, everyone had their own custom setup. Only one company built a real business out of making a great editor, and it was very small.

Some people have concluded that the future will look the same, but I think this overlooks the fact that in the 2010s, the potential for building editors for programmers was limited.

The company that did make money from its editor focused mainly on making codebases easier to navigate, checking for errors, and providing good debugging tools. While these features are valuable, I think there is a huge opportunity to build tools for programmers and, more broadly, for knowledge workers in many fields.

The real challenge is how to automate a large amount of tedious, routine knowledge work, and in doing so deliver more reliable and substantial productivity gains across knowledge-work fields.

I think we're in a market that's much bigger than people used to think the developer tools market was. There will be a variety of solutions, and there will probably be one leader. It could be us, but we'll see. That company will build a general tool that helps create most of the world's software, and it will be a large, era-defining company. But there will also be products that focus on specific market segments or specific parts of the software development life cycle.

Eventually, programming itself may move from traditional formal programming languages to something higher-level, and these higher-level tools will become what users mainly buy and use. I believe AI programming will produce a clear leader, and it will grow into an extremely large company.

Lenny: It's interesting that Microsoft was at the center of this revolution, with great products and strong distribution channels. You said Copilot made you realize that this field has great potential, but it doesn't seem to have completely won the market. It's even a little behind. What do you think is the reason?

Michael Truell: I think there are specific historical and structural reasons why Copilot has not fully lived up to expectations.

From a historical perspective, we were genuinely inspired by Microsoft's Copilot project. They have done a lot of great things, and many of us are Microsoft users ourselves.

But I think this market is not especially friendly to established companies. The markets that favor them are usually ones with little room for innovation, where products commoditize quickly and incumbents can profit by bundling.

In those markets, the ROI difference between products is small, so buying a standalone innovative solution makes little sense and bundled products are more attractive.

Another type of market that is particularly favorable to established businesses is one where users are highly dependent on your tool from the outset and the switching costs are very high.

But in our space, users can easily try different tools and use their own judgment to choose which product is more suitable for them. This situation is less favorable to large companies and more friendly to those with the most innovative products.

As for the specific historical reasons, as far as I know, most of the people who built the first version of Copilot later moved on to other companies and other work. It is also genuinely hard to get all the relevant departments and people to coordinate on a single product.

Senior engineers have too low expectations and entry-level engineers have too high expectations

Lenny: If you could sit next to every new user who uses Cursor for the first time and whisper a few words of advice in their ear to help them use Cursor better, what would you say?

Michael Truell: I think there is a problem we need to solve at the product level right now.

Many of the users who succeed with Cursor today have developed a certain "taste" for the model's capabilities. They understand what size of task Cursor can handle and what level of instruction it needs. They understand the model's strengths and limitations: what it can do and what it cannot.

In our existing products, we have not done a good job of educating users in this area, and we have not even provided clear usage guides.

To cultivate this "taste", I have two suggestions.

First, don't give the model the entire task at once. If you do, you'll either be disappointed with the output or end up accepting it as is. Instead, break the task into smaller chunks. You'll get to the end result in about the same amount of time, just in smaller pieces. Trying to write one long, drawn-out instruction is a recipe for disaster; instead, specify a small task at a time, get a small part of the result, and repeat.

Second, it is best to try it on a side project first rather than going straight to important work. I would encourage developers who are used to their existing workflow to allow themselves to fail more and to try to push the model to its limits.

They can use AI as much as possible in a relatively safe environment, such as a side project. We often find that people have not given AI a fair chance and underestimate its capabilities.

By breaking tasks down and actively exploring the model's boundaries in a safe environment, you may be surprised to find that in some scenarios, AI does not make the mistakes you expected.

Lenny: So my understanding is that you need to develop an intuition for the boundaries of the model's capabilities and how far it can take an idea, rather than having it simply follow your instructions. And every time a new model comes out, such as when GPT-4 came out, do you need to rebuild this intuition?

Michael Truell: Yes, although the shift may not feel as dramatic as it did a few years ago, when people first encountered large models. Still, each model has slightly different quirks and personalities. It is definitely a pain point that we hope to solve better for users in the future.

Lenny: People have been discussing whether tools like Cursor are more helpful for entry-level engineers or for senior engineers? Do they make senior engineers 10 times more efficient, or do they make junior engineers more like senior engineers? Which group of people do you think will benefit the most from using Cursor right now?

Michael Truell: I think both types of engineers can benefit greatly, and it's hard to say which type will benefit more.

They fall into different anti-patterns. Junior engineers sometimes rely too heavily on AI and let it do everything. But we are not yet at the point where AI can work end to end in a long-maintained professional codebase that dozens or even hundreds of people work on together.

As for senior engineers, while this is not true of all of them, adoption of these tools inside companies is often held back by some of the most senior people, such as members of developer experience teams, who are usually the ones responsible for building tools that make other engineers in the organization more productive.

That said, we also see some senior engineers at the cutting edge, embracing the technology as fully as possible. But on average, senior engineers tend to underestimate how much AI can help them and tend to stick to their existing workflows.

It’s hard to say which group of people will benefit more. I think both groups of engineers will run into their own “anti-patterns”, but both groups will benefit significantly from using these tools.

Lenny: That makes total sense. It's like two ends of a spectrum, one with expectations that are too high and one with expectations that are too low. It's like the story of Goldilocks and the Three Bears.

The core of Cursor recruitment is a two-day assessment

Lenny: What do you wish you knew before you founded Cursor? If you could go back to when Cursor first started and give Michael some advice, what would you tell him?

Michael Truell: The difficulty is that many valuable experiences are implicit and difficult to describe in words. Unfortunately, in some areas of human life, you really need to fail in order to learn lessons, or you need to learn from the best in the field.

We have experienced this in recruiting. We are in fact extremely patient about hiring. Both for our personal ambitions and for company strategy, it is crucial to have a world-class team of engineers and researchers working together to build Cursor.

Because we need to build a lot of new things, we hope to find people who are curious and experimental. We also look for people who are pragmatic, appropriately cautious, and outspoken. As the company and business continue to expand, there will be more and more noise, and it is particularly important to keep a clear head.

Besides the product, finding the right people to join the company is probably our biggest concern, and because of this, we didn’t expand the team for a long time. A lot of people say that hiring too fast is a problem, but I think we started out too slow and we could have done better.

The recruiting approach that ended up working very well for us was to focus on finding people we considered world-class, sometimes spending years recruiting them.

We learned a lot about how to identify ideal candidates, who would really fit in with a team, what the criteria for excellence are, and how to communicate and inspire interest in people who aren’t actively looking for a job. It took a lot of time to learn how to do this well.

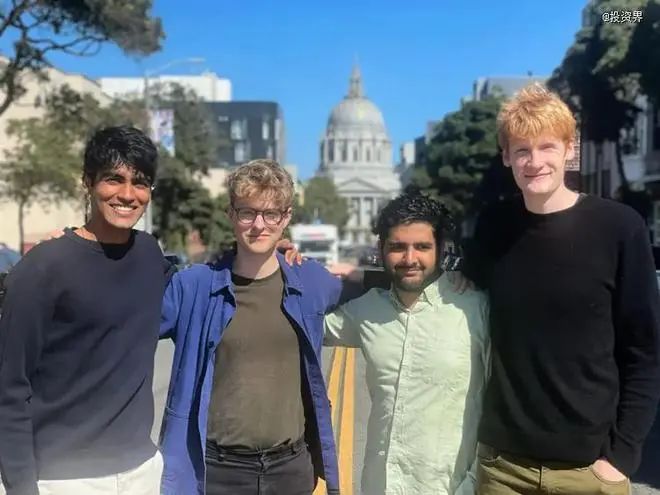

Anysphere was co-founded by four people born in the 2000s: Aman Sanger, Arvid Lunnemark, Sualeh Asif and Michael Truell

Lenny: What lessons can you share for companies that are hiring right now? What did you miss and what did you learn?

Michael Truell: In the beginning, we leaned too heavily toward people with prestigious academic backgrounds, especially young people who had excelled in well-known academic settings.

We were lucky to find some really great people early on who were already well along in their careers and still wanted to build this with us.

When we first started recruiting, we overemphasized interest and credentials. Although we hired many excellent, talented young people, they were not the same as the senior people who came to us straight from the center of the field.

We have also upgraded the interview process. We have a set of customized interview questions. The core part is to have the candidate come to the company for two days and do a two-day assessment project with us. This method is very effective and we are constantly optimizing it.

We are also getting better at understanding what candidates care about, putting together attractive offers, and starting conversations and introducing opportunities to people before they are even thinking about looking for a job.

Lenny: Do you have any favorite interview questions?

Michael Truell: I thought this two-day work assessment wouldn't work for many people, but it has had surprising staying power. Candidates take part from start to finish, just as they would on a real project.

These projects are standardized, but they let you see real work output in two days. And they don't take up much of the team's time: you can spread the half-day or full day you would spend on an onsite interview across those two days, giving candidates enough time to finish the project. This makes the process easier to scale.

A two-day project is more of a test of whether you want to work with this person. After all, you will be there for two days and have several meals together.

Initially, we did not expect this assessment to last, but it is now a very valuable part of our recruiting process. It is also very important for attracting candidates, especially early in a company's life, when the product is not yet widely used and still rough. Often the only thing that can attract people to join is a team they feel is special and worth working with.

The two days give candidates a chance to get to know us, and often convince them that they want to join. The assessment has worked better than we expected. It is less a strict interview question and more a forward-looking way of interviewing.

Lenny: So the interview is essentially: you give them a task, like building a feature in your codebase, and they work with the team to code it and ship it. Is that right?

Michael Truell: Almost. We don't use their work as IP, and we don't ship the results into the product; it is a simulated project. Usually it is a realistic two-day mini-project in our codebase that they complete end to end largely on their own, though there are also collaborative parts.

We are a company that places great emphasis on in-person collaboration, and in almost all cases, candidates sit in the office and work alongside us on the project.

Lenny: You mentioned that this interview method has been continuing. How big is your team?

Michael Truell: There are about 60 of us.

Lenny: Given your impact, that is really small. I thought it would be much larger. I assume engineers make up the majority.

Michael Truell: One of our most important tasks next is to build a larger and better team to continuously optimize our products and improve the quality of our customer service. So we do not plan to stay so small for a long time.

Part of the reason our team is small is that a very high proportion of it is engineers, researchers, and designers. Many software companies have over 100 people by the time they have about 40 engineers, because they carry a lot of operations work and are usually very sales-heavy from the start, which requires a lot of headcount.

We started with a lean, product-focused model. Today, we serve customers in many markets and continue to expand on that foundation. However, we still have a lot of work to do.

Lenny: The field of AI is changing enormously. There is something new every day, and plenty of newsletters tell you what is happening in AI each day. How do you stay focused while running a company at the center of all this? How do you help your team stay focused on the product instead of being distracted by the endless stream of new things?

Michael Truell: I think recruiting is key. It's about whether you can hire people with the right attitude. I believe we do a good job of that, and we could probably do better.

This is something we talk about a lot internally, too. It's important to hire people with the right temperament: people who aren't overly concerned with external recognition, who focus on building great products and delivering high-quality work, and who generally keep a cool head without big emotional swings.

Hiring can help us handle many challenges, and that is a consensus across the company. Every organization needs processes, hierarchy, and systems, but much of what any such organizational tool is meant to achieve can instead be achieved by hiring people with the corresponding traits.

One example is that we don't have a lot of process in engineering and we're doing pretty well. I think we need to add some process, but because we're a small company, as long as we hire really good people, we don't need to have a lot of process.

The first is to hire calm people. The second is to communicate well. The third is to set a good example.

We have been working on AI since 2021 and 2022, and we have witnessed major shifts in technologies and ideas. If you go back to late 2021 or early 2022, there was GPT-3, but InstructGPT did not exist yet, and DALL-E and Stable Diffusion had not yet appeared.

Then all of these image models came out, InstructGPT came out, GPT-4 came out: all these new models, techniques, modalities, and video technologies. Only a very small number of them have affected our business. We have built up a kind of immunity and learned which advances really matter to us.

Even though there is a lot of discussion, only a few things really matter. The same has been true of AI over the past decade: a huge number of papers have been published on deep learning and AI, yet remarkably, many of the real advances come from a few very simple, elegant, and enduring ideas. The vast majority of proposed ideas have neither stood the test of time nor had significant impact. The current dynamic resembles how deep learning developed as a field.

The demand for engineers will only grow

Lenny: What do people still misunderstand, or not fully understand, about where AI is going and how it will change the world?

Michael Truell: People still have some overly extreme views, either that everything is going to happen very quickly or that it's all hype and exaggeration.

We are in the midst of a technological shift that will be more significant than the Internet, and more significant than anything we’ve seen since the advent of computers. But this shift will take time. It will be a multi-decade process, and many different groups will play important roles in driving it forward.

To reach a future in which computers do more and more of our work, we need to solve many independent technical challenges and keep overcoming them.

Some of these are scientific challenges, such as making models understand different types of data, become faster, cheaper, smarter, more adaptable to the modalities we care about, and take action in the real world.

There are also some issues related to human-computer collaboration, thinking about how people should see, control, and interact with these technologies on computers. I think this will take decades and there will be a lot of exciting work to be done.

I think there is a group of teams that are going to be particularly important. This is not to brag, but these companies are going to be focused on automating a specific area of knowledge work, building the underlying technology for that area and integrating the best third-party technology. Sometimes they also have to do their own research and development and build the corresponding product experience.

The importance of these teams is not only reflected in their value to users, but also in their key role in promoting technological progress as they scale. The most successful teams will be able to build large companies, and I look forward to seeing more similar companies emerge in other fields.

Lenny: I know you are hiring, and some listeners may be thinking, "I want to work there and build this kind of product." What kind of people are you looking for right now? Which roles are you hiring for, and which are you most eager to fill soon? What should someone know if they are interested?

Michael Truell: There is a huge amount to do on our team, and much of it hasn't been done yet. If you don't see a specific opening that fits you, reach out anyway. You may bring us new ideas and make us aware of gaps we haven't noticed.

I think the two most important things this year are building the best product in this space and growing. We are in a land-grab phase. Almost everyone in the world is either not using tools like ours yet or using other products that are evolving more slowly. Driving Cursor's growth is an important goal.

We are always hiring great engineers, designers and researchers, and we are also looking for other talents.

Lenny: There is a view that AI will replace engineers and write all the code. But that is in stark contrast to reality: everyone is still hiring engineers like crazy, including the companies building foundation models. Do you think there will be a turning point where demand for engineers starts to slow?

I know this is a big question, do you think that all companies will have a growing need for engineers, or do you think at some point there will be a lot of Cursors running around to get the development done?

Michael Truell: We always believe that this is a long and complicated process. It will not be achieved in one step, and we will not directly achieve the state where you only need to give instructions and AI can completely replace the engineering department.

We want programming to evolve smoothly, always keeping humans in the driver's seat. Even in the end state, it will still be very important to give people control over everything, and professionals will still be needed to decide what the software should look like. So I think engineers are indispensable, and they will be able to do much more.

Demand for software is enduring, and that is nothing new. But think about how much money and how many people it takes to build something that seems simple and easy to specify. To a layperson it should be easy, but it is hard to do well. If you can cut the cost and effort of development by an order of magnitude, you open up new possibilities on computers and can build countless new tools.

I know this all too well. I once worked at a biotech company, developing internal tools for them. The tools available on the market at the time were extremely poor and did not meet the company's needs at all. The demand for the internal tools I could build was so great that it far exceeded my personal development capabilities.

Computers are already physically powerful enough to let us build almost anything we want, but in practice there are too many obstacles. Demand for software far exceeds current development capacity, because building even simple productivity software can cost as much as making a blockbuster movie. So in the long run, demand for engineers will only increase.

Lenny: Is there anything else you’d like to add that we didn’t mention? Any wisdom you’d like to leave for the audience?

Michael Truell: We're always thinking about how to build a team that can both create new things and continually improve the product. If we want to succeed, the IDE has to change a lot. Its future shape has to be very different.

Among the companies we look up to, you will find some that led through multiple technological leaps and kept pushing the industry's frontier, but such companies are very rare.

On one hand, we need to think through these questions in our daily work, reasoning from first principles. On the other hand, it is important to study past successes in depth, which is also something we think about often.

Lenny: I noticed there are many books behind you, including one on the history of an old computer company that was very influential in many ways but that I had never heard of. That struck me, because a lot of innovative thinking comes from revisiting history and studying past successes and failures.

If someone wants to contact you or apply for a position, how can they find information online? You mentioned there may be positions that they are not aware of, where can they go to learn about them? How can listeners help you?

Michael Truell: If anyone is interested in these positions, our products and contact information can be found at cursor.com.

Lenny: Great. Michael, thank you so much for coming today.