This is disappointing. Purposefully underselling what models can do is a really bad idea. It is possible to point out that AI is flawed without saying it can't do math or count. That claim just isn't true.

People need to be realistic about the capabilities of models to make good decisions.

Daniel Litt

@littmath

09-04

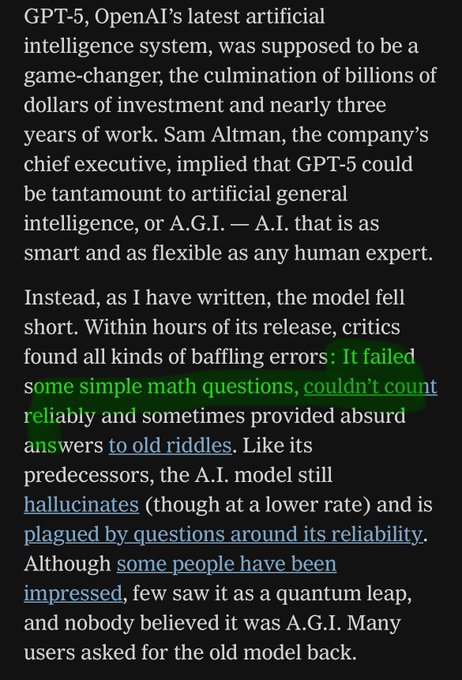

While it’s true one can elicit poor performance on basic math questions from frontier models like GPT-5, IMO this kind of thing (in NYTimes) is likely to mislead readers about their math capabilities.

I think the urge to criticize companies for hype blends into a desire to deeply undersell what models are capable of.

Cherry-picking errors is a good way of showing odd limitations to an overenthusiastic X crowd, but not a good way of making people aware that AI is a real factor.

One problem is that discussions about AI on X are often really discussions about the timeline & approaches to AGI, something that is implicitly understood here.

The broader public doesn't get that context, and instead assumes the discussion is about whether AI is going to matter or not.

From Twitter

