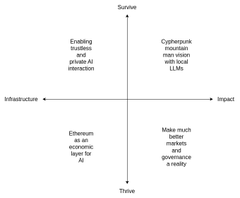

Two years later, Vitalik is back on Twitter with this topic, so I'll revisit what I said in my research report from two years ago. Even the date is exactly the same: February 10th.

Two years ago, Vitalik had already subtly expressed skepticism about the then-popular "Crypto Helps AI" initiatives. At the time, the industry's three main drivers were the assetization of computing power, data, and models. My report from back then mainly discussed the phenomena I observed in the primary market around these three drivers, and my skepticism about them. Vitalik, for his part, still favored "AI Helps Crypto". The examples he gave at the time were:

- AI as a participant in the game
- AI as an interface to the game
- AI as the rules of the game

Over the past two years, we've made numerous attempts at "AI as the objective of the game", which falls under Crypto Helps AI, but with limited success. Many tracks and projects simply issued a token and called it a day, without genuine product-market fit (PMF). I call this the "tokenization illusion":

1. Computing power assetization: Most networks cannot provide commercial-grade SLAs. They are unstable and disconnect frequently, can only handle simple or small-to-medium-sized model inference, mostly serve peripheral markets, and their revenue is not linked to the token...
2. Data assetization: On the supply side (individual users), there is significant friction, low willingness to contribute, and high uncertainty. On the demand side (enterprises), what is needed is structured, context-dependent data from professional providers that are trustworthy, legally accountable entities. DAO-based Web3 projects are unlikely to provide this.
3. Model assetization: Models are inherently non-scarce, replicable, fine-tunable, and rapidly depreciating process assets, not final-state assets. Hugging Face is a collaboration and dissemination platform, more like GitHub for ML than an App Store for models.
That is why attempts to tokenize models through a so-called "decentralized Hugging Face" have almost always failed.

In the past two years, we've also tried various forms of "verifiable inference", a classic case of a hammer looking for a nail. From ZKML to OPML to game-theoretic approaches, even EigenLayer has shifted its restaking narrative toward verifiable AI. But it runs into the same problem as the restaking sector itself: few AVSs are willing to keep paying for the additional security. Likewise, verifiable inference mostly verifies things nobody really needs verified, and the demand-side threat model is extremely vague: who exactly is being defended against? AI output errors (a model capability problem) far outweigh malicious tampering with AI output (an adversarial problem). Look at the recent security incidents on OpenClaw and Moltbook: the real problems came from flawed policy design, excessive permissions, unclear tool combinations, and unexpected interactions... The hypothetical scenarios of "model tampering" or "maliciously rewriting the inference process" are virtually nonexistent.

I posted this diagram last year; I wonder if any of you remember it.

The ideas Vitalik presented this time are clearly more mature than two years ago, thanks to our progress in privacy, x402, ERC-8004, prediction markets, and other areas. His four quadrants this time split evenly: one half belongs to "AI Helps Crypto" and the other half to "Crypto Helps AI", rather than clearly favoring one side as before.

Top left and bottom left: using Ethereum's decentralization and transparency to solve the trust and economic collaboration problems in AI.
1. Enabling trustless and private AI interaction (infrastructure + survival): Using technologies such as ZK and FHE to ensure the privacy and verifiability of AI interactions (I'm not sure whether the verifiable inference I mentioned earlier counts).
2. Ethereum as an economic layer for AI (infrastructure + prosperity): Through Ethereum, AI agents can make payments, hire other bots, post deposits, and build reputation systems, enabling a decentralized AI architecture instead of dependence on a single giant platform.

Top right and bottom right: using AI's intelligence to improve user experience, efficiency, and governance within the crypto ecosystem.

3. Cypherpunk mountain man vision with local LLMs (impact + survival): AI as a "shield" and interface for users. For example, local LLMs (large language models) can automatically audit smart contracts and verify transactions, reducing reliance on centralized front ends and safeguarding individual digital sovereignty.
4. Make much better markets and governance a reality (impact + prosperity): AI becomes deeply involved in prediction markets and DAO governance. As a highly efficient participant, AI can amplify human judgment by processing massive amounts of information, addressing market and governance problems such as limited human attention, high decision-making costs, information overload, and voter apathy.

Previously, we were desperately hoping for Crypto Helps AI, while Vitalik stood on the other side. Now we've finally met in the middle, though it doesn't seem to have much to do with tokenization or AI Layer 1s. Hopefully, looking back at this post two years from now will bring some new directions and surprises.

This article is machine translated

vitalik.eth

@VitalikButerin

Two years ago, I wrote this post on the possible areas that I see for ethereum + AI intersections: https://vitalik.eth.limo/general/2024/01/30/cryptoai.html…

This is a topic that many people are excited about, but where I always worry that we think about the two from completely separate philosophical

From Twitter

Disclaimer: The content above is only the author's opinion which does not represent any position of Followin, and is not intended as, and shall not be understood or construed as, investment advice from Followin.
