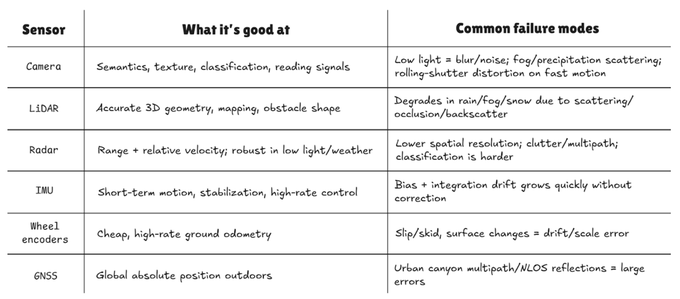

Robotics sensors deep dive: camera vs lidar vs radar vs IMU

Robots don’t “see the world.” They collect imperfect measurements and then try to stay stable and safe anyway. The key idea is that each sensor fails in predictable ways, and real deployments are mostly about designing the system so that one sensor failing doesn’t mean the robot fails.

➤ Cameras are great at texture and semantics (lanes, signs, object classes), and they’re cheap. The tradeoff is sensitivity to lighting and optics. Low light forces longer exposures, which cause motion blur, and fast motion can geometrically distort images from rolling-shutter cameras. Optical sensing also degrades in fog and precipitation because of scattering.

➤ LiDAR (Light Detection and Ranging) adds accurate 3D geometry and scale, which cameras struggle with. But LiDAR is still optical: rain, fog, and snow can introduce backscatter and occlusion, reducing perception quality.

➤ Radar is the “weather and velocity” sensor. It measures range and relative velocity well and is typically more robust than optical sensors in low light and adverse weather. However, its lower spatial resolution makes fine-grained classification harder.

➤ IMUs (accelerometers and gyroscopes) are great for short-term motion estimation and control stability. Pure integration drifts quickly, though, because of noise and slowly changing bias, so IMU estimates must be corrected by other sensors.

➤ Wheel encoders/odometry are simple and high-rate for ground robots, but slip, skid, and surface changes create accumulating error.

➤ GNSS gives global position outdoors, but in urban canyons, multipath and NLOS (non-line-of-sight) reflections can create large errors.
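To put rough numbers on the camera failure modes, you can use two first-order approximations. Motion blur spans about (image-plane speed × exposure time) pixels. Rolling-shutter skew spans about (image-plane speed × rows × per-row readout time), because each row is captured slightly later than the one before it. This is a minimal sketch that assumes constant horizontal image motion and a fixed per-row readout time. The function names and example numbers (2000 px/s, 30 µs per row, 1080 rows) are hypothetical and do not come from the post.

```python
def motion_blur_px(image_speed_px_s: float, exposure_s: float) -> float:
    """Approximate blur streak length: how far a point moves during one exposure."""
    return image_speed_px_s * exposure_s


def rolling_shutter_skew_px(image_speed_px_s: float, rows: int, line_time_s: float) -> float:
    """Approximate horizontal offset between the first and last row of an object
    spanning `rows` rows, since each row is read `line_time_s` after the previous one."""
    return image_speed_px_s * rows * line_time_s


if __name__ == "__main__":
    speed = 2000.0  # hypothetical apparent motion across the image, px/s
    # Bright scene (short exposure) vs low light (longer exposure).
    print(f"blur @ 1 ms exposure:  {motion_blur_px(speed, 0.001):.1f} px")
    print(f"blur @ 20 ms exposure: {motion_blur_px(speed, 0.020):.1f} px")
    # Hypothetical sensor: 1080 rows read out at 30 microseconds per row.
    print(f"rolling-shutter skew:  {rolling_shutter_skew_px(speed, 1080, 30e-6):.1f} px")
```

With these assumed numbers, blur grows from about 2 px to 40 px as the exposure lengthens. The skew term disappears on a global-shutter sensor, which exposes all rows at once.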
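Radar’s range and velocity measurements rest on two textbook relations for a monostatic radar. Range comes from round-trip delay, R = c·τ/2. Radial velocity comes from the Doppler shift, v = f_d·λ/2 with λ = c/f_c. This sketch is idealized: real automotive radars (often FMCW) recover these quantities through chirp processing, which is not modeled here. The 77 GHz carrier and the example delay and shift are illustrative assumptions.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_delay(round_trip_delay_s: float) -> float:
    """Target range from round-trip echo delay (divide by 2: out and back)."""
    return SPEED_OF_LIGHT_M_S * round_trip_delay_s / 2.0


def radial_velocity_from_doppler(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Radial (relative) velocity from Doppler shift for a monostatic radar.
    In this sign convention a positive shift means the target is closing."""
    wavelength_m = SPEED_OF_LIGHT_M_S / carrier_hz
    return doppler_shift_hz * wavelength_m / 2.0


if __name__ == "__main__":
    carrier = 77e9  # assumed automotive-band carrier frequency, Hz
    print(f"wavelength: {SPEED_OF_LIGHT_M_S / carrier * 1000:.2f} mm")
    print(f"range for a 1 us delay: {range_from_delay(1e-6):.1f} m")
    # A ~5.14 kHz shift at 77 GHz corresponds to roughly 10 m/s closing speed.
    print(f"radial velocity: {radial_velocity_from_doppler(5137.0, carrier):.2f} m/s")
```

Velocity is a direct, per-detection measurement here, not something differentiated from successive positions. That is one reason radar complements cameras and LiDAR so well.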
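The IMU point (integration drifts, so something else must correct it) can be shown with a stationary robot and constant biases. Noise is omitted for clarity. Double-integrating an accelerometer bias b gives a position error of roughly ½·b·t². Integrating a gyro bias gives an angle error that grows linearly. The correction step uses a complementary filter, which is one simple, common way to blend a gyro with a drift-free absolute reference such as accelerometer tilt. It is not necessarily what the author has in mind, and Kalman-filter variants are a widely used alternative. The bias values, 100 Hz rate, and `alpha` are hypothetical.

```python
def integrate_position(accel_samples, dt):
    """Dead-reckon 1D position by double-integrating acceleration (Euler steps).
    Assumes gravity has already been removed from the samples."""
    velocity = 0.0
    position = 0.0
    for a in accel_samples:
        velocity += a * dt
        position += velocity * dt
    return position


def gyro_only_angle(gyro_rates, dt, angle0=0.0):
    """Integrate angular rate alone: any bias accumulates without bound."""
    angle = angle0
    for w in gyro_rates:
        angle += w * dt
    return angle


def complementary_filter(gyro_rates, ref_angles, dt, alpha=0.98, angle0=0.0):
    """Blend short-term gyro integration (weight alpha) with a drift-free but
    noisy absolute angle, e.g. tilt from the accelerometer's gravity direction
    (only trustworthy when the robot is not accelerating much)."""
    angle = angle0
    for w, ref in zip(gyro_rates, ref_angles):
        angle = alpha * (angle + w * dt) + (1.0 - alpha) * ref
    return angle


if __name__ == "__main__":
    dt = 0.01          # assumed 100 Hz IMU
    n = 1000           # 10 s of data
    accel_bias = 0.05  # m/s^2, hypothetical constant accelerometer bias
    gyro_bias = 0.01   # rad/s, hypothetical constant gyro bias

    # The robot is actually stationary (true acceleration and rotation are zero),
    # so every nonzero output below is pure drift caused by bias.
    print(f"position drift after 10 s: {integrate_position([accel_bias] * n, dt):.2f} m")
    print(f"gyro-only tilt error:      {gyro_only_angle([gyro_bias] * n, dt):.4f} rad")
    # True tilt is 0, so the accelerometer reference reads 0 (noise omitted).
    filtered = complementary_filter([gyro_bias] * n, [0.0] * n, dt)
    print(f"complementary filter:      {filtered:.4f} rad")
```

The gyro-only error keeps growing (0.1 rad after 10 s here). The filtered estimate instead settles at a small, bounded offset of alpha·b·dt/(1 − alpha), which is 0.0049 rad with these numbers. The accelerometer’s position drift of about 2.5 m after 10 s shows why position usually needs an external correction, such as GNSS or odometry.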
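Wheel-odometry error compounds in a similar way. In this sketch the encoders report equal travel on both wheels, so the robot believes it is driving straight, but the left wheel actually slips and covers 2% less ground. The resulting heading error grows with distance, and the position error grows faster still. The sketch uses a standard differential-drive dead-reckoning update. The slip ratio, 0.5 m track width, and step size are hypothetical.

```python
import math


def diff_drive_update(x, y, theta, d_left, d_right, track_width):
    """One dead-reckoning step for a differential-drive robot from left/right
    wheel travel distances (m), using the midpoint heading for the step."""
    d_center = (d_left + d_right) / 2.0
    d_theta = (d_right - d_left) / track_width
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)
    return x, y, theta + d_theta


def simulate(steps, step_m=0.01, left_slip=0.02, track_width=0.5):
    """Return (estimated_pose, actual_pose). The estimate trusts the encoders;
    the actual left wheel covers only (1 - left_slip) of its reported travel."""
    est = (0.0, 0.0, 0.0)
    actual = (0.0, 0.0, 0.0)
    for _ in range(steps):
        est = diff_drive_update(*est, step_m, step_m, track_width)
        actual = diff_drive_update(*actual, step_m * (1.0 - left_slip), step_m, track_width)
    return est, actual


if __name__ == "__main__":
    for steps in (250, 500, 1000):
        est, actual = simulate(steps)
        err = math.hypot(est[0] - actual[0], est[1] - actual[1])
        print(f"{steps * 0.01:5.1f} m driven: position error {err:.3f} m")
```

In this setup, doubling the distance roughly quadruples the position error, because the heading error grows linearly and the lateral offset accumulates that heading error. This is why odometry is usually fused with an absolute reference instead of being trusted on its own.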

From Twitter

Disclaimer: The content above is only the author's opinion which does not represent any position of Followin, and is not intended as, and shall not be understood or construed as, investment advice from Followin.