In 1849, after news spread that gold had been discovered in California, USA, the gold rush began. Countless people flocked to this new land, some from the East Coast, some from the European continent, and the first-generation Chinese immigrants who came to the United States. They first called this place "Golden Mountain" and later called it "San Francisco".

But no matter what, the gold diggers who came to this new land needed food, clothing, shelter, and transportation. Of course, the most important thing was the equipment for gold mining—shovels. As the saying goes, "If a worker wants to do a good job, he must first sharpen his tools." In order to pan for gold more efficiently, people began to flock to shovel sellers frantically, bringing wealth with them.

More than a hundred years later, not far south of San Francisco, two Silicon Valley companies set off a new gold rush: OpenAI was the first to discover the "gold mine" of the AI era, and Nvidia became the first batch of "shovel sellers." As in the past, countless people and companies began to pour into this new hot land, picked up the "shovel" of the new era and started panning for gold.

The difference is that there were almost no technical barriers to the shovel in the past, but today Nvidia's GPU is everyone's choice. Since the beginning of this year, ByteDance alone has ordered more than $1 billion in GPUs from Nvidia, including 100,000 A100 and H800 accelerator cards. Baidu, Google, Tesla , Amazon, Microsoft... These big companies have ordered at least tens of thousands of GPUs from Nvidia this year.

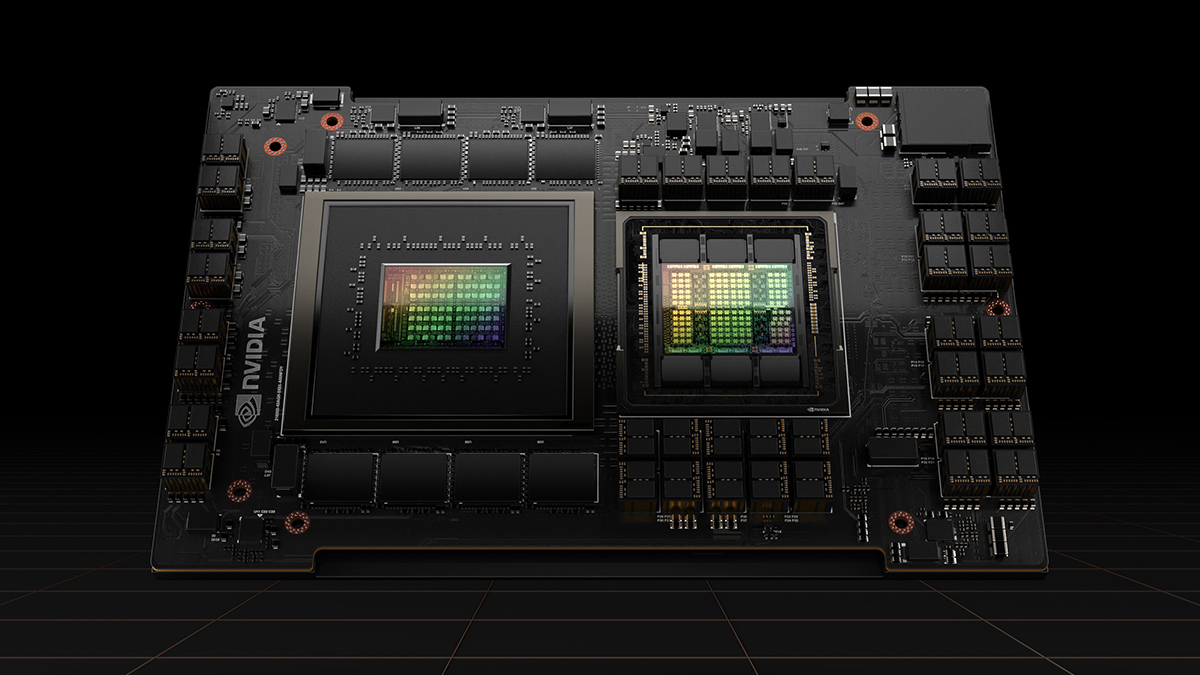

H100 GPU, picture/Nvidia

But this is still not enough. Megvii CEO Yin Qi said in an interview with Caixin at the end of March that there are only about 40,000 A100s in China that can be used for large-scale model training. As the AI boom continues, the castrated version of Nvidia's previous generation of high-end GPU A100 - A800 once rose to 100,000 yuan in China.

At a private meeting in June, OpenAI CEO Sam Altman said again that the severe shortage of GPUs has caused many efforts to optimize ChatGPT to be delayed. According to the calculation of TrendForce, a technical consulting agency, OpenAI needs about 30,000 A100s to support the continuous optimization and commercialization of ChatGPT.

Even from the new outbreak of ChatGPT in January this year, the shortage of AI computing power has lasted for nearly half a year. Why do these big companies still lack GPU and computing power?

ChatGPT lacks a graphics card? Nvidia is missing

To borrow a slogan: not all GPUs are Nvidia. The shortage of GPUs is essentially a shortage of Nvidia's high-end GPUs. For AI large model training, you can either choose Nvidia A100, H100 GPU, or the reduced version A800, H800 specially launched by Nvidia after the ban last year.

The use of AI includes two links, training and reasoning. The former can be understood as creating models, and the latter can be understood as using models. The pre-training and fine-tuning of the AI large model, especially the pre-training process, consumes a lot of computing power, especially the performance provided by a single GPU and the data transmission capability between multiple cards. But today, AI chips that can provide large-scale model pre-training computing efficiency (generalized AI chips only refer to chips for AI):

Can't say not much, can only say very little.

An important feature of a large model is at least 100 billion-level parameters, which require a huge amount of computing power for training. Data transmission and synchronization between multiple GPUs will cause some GPU computing power to be idle, so the higher the performance of a single GPU , the lower the number, the more efficiently the GPU is utilized and the corresponding lower the cost.

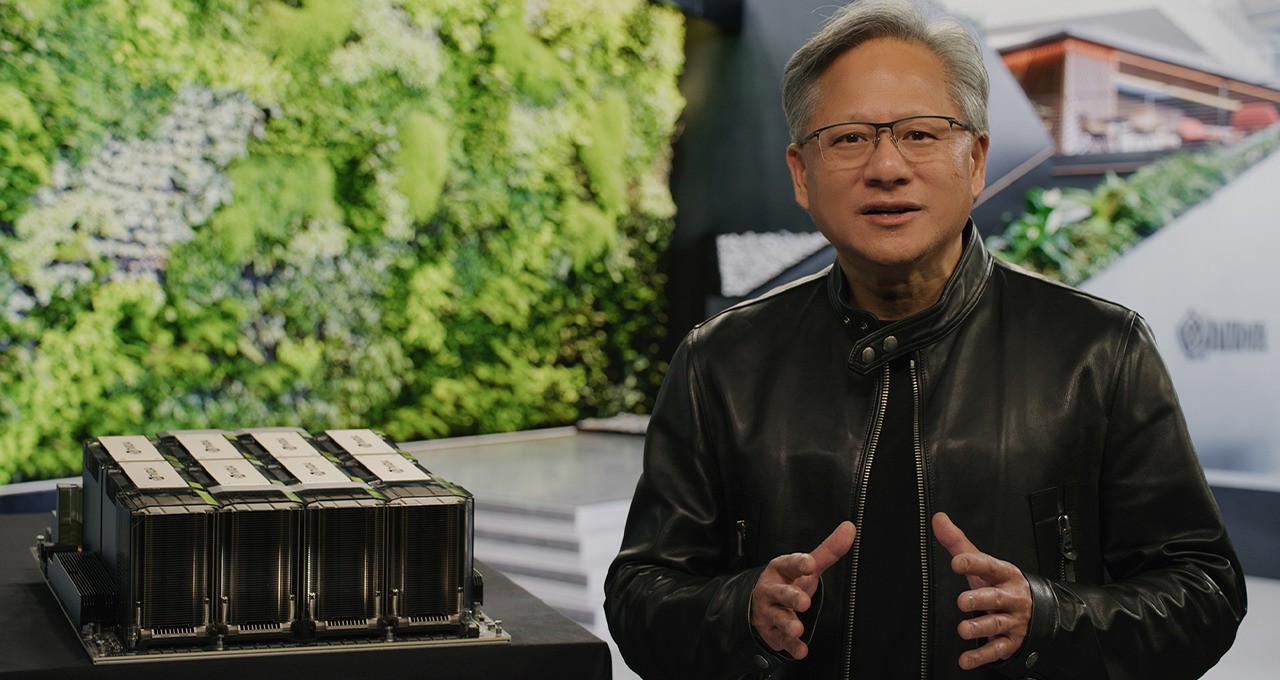

NVIDIA DGX H100 AI supercomputer, picture/Nvidia

The A100 and H100 released by Nvidia since 2020, on the one hand, have the high computing power of a single card, and on the other hand, they have the advantage of high bandwidth. The FP32 computing power of the A100 reaches 19.5 TFLOPS (trillion floating-point operations per second), and the H100 is as high as 134 TFLOPS.

At the same time, investment in communication protocol technologies such as NVLink and NVSwitch has also helped Nvidia build a deeper moat. Up to H100, the fourth-generation NVLink can support up to 18 NVLink links, with a total bandwidth of 900GB/s, which is 7 times the bandwidth of PCIe 5.0.

The A800 and H800 customized for the Chinese market have almost the same computing power, mainly to avoid regulatory standards, and the bandwidth has been cut by about a quarter and half respectively. According to Bloomberg, the H800 takes 10% -30% longer than the H100 for the same AI task.

But even so, the computing efficiency of A800 and H800 still exceeds other GPU and AI chips. This is why there will be a "hundred flowers blooming" imagination in the AI reasoning market, including the self-developed AI chips of major cloud computing companies and other GPU companies can occupy a certain share, but in the AI training market that requires higher performance, only Nvidia "One family dominates".

H800 "knife" bandwidth, picture / Nvidia

Of course, behind the "dominance of one family", the software ecology is also the core technology moat of Nvidia. There are many articles in this regard, but in short, the most important thing is that the CUDA unified computing platform that Nvidia launched and insisted on in 2007 has become the infrastructure of the AI world today. Most AI developers All are developed based on CUDA, just like Android and iOS are to mobile application developers.

However, logically speaking, Nvidia also understands that its high-end GPUs are very popular. After the Spring Festival, there have been many reports that Nvidia is adding wafer foundry orders to meet the strong demand of the global market. In the past few months, it should be able to greatly increase the foundry. Production capacity, after all, is not TSMC's most advanced 3nm process.

However, the problem lies precisely in the foundry link.

Nvidia's high-end GPU is inseparable from TSMC

As we all know, the low tide of consumer electronics and the continued destocking have led to a general decline in the capacity utilization rate of major wafer foundries, but TSMC's advanced manufacturing process is an exception.

Due to the AI boom triggered by ChatGPT, A100 and 4nm H100 based on TSMC's 7nm process are urgently adding orders, and TSMC's 5/4nm production line is close to full capacity. People in the supply chain also predict that Nvidia's large number of SHR (most urgent processing level) orders to TSMC will last for one year.

In other words, TSMC's production capacity is not enough to meet Nvidia's short-term strong demand. No wonder some analysts believe that since the A100 and H100 GPUs are always in short supply, no matter from the perspective of risk control or cost reduction, it is necessary to find Samsung or even Intel for foundry outside TSMC.

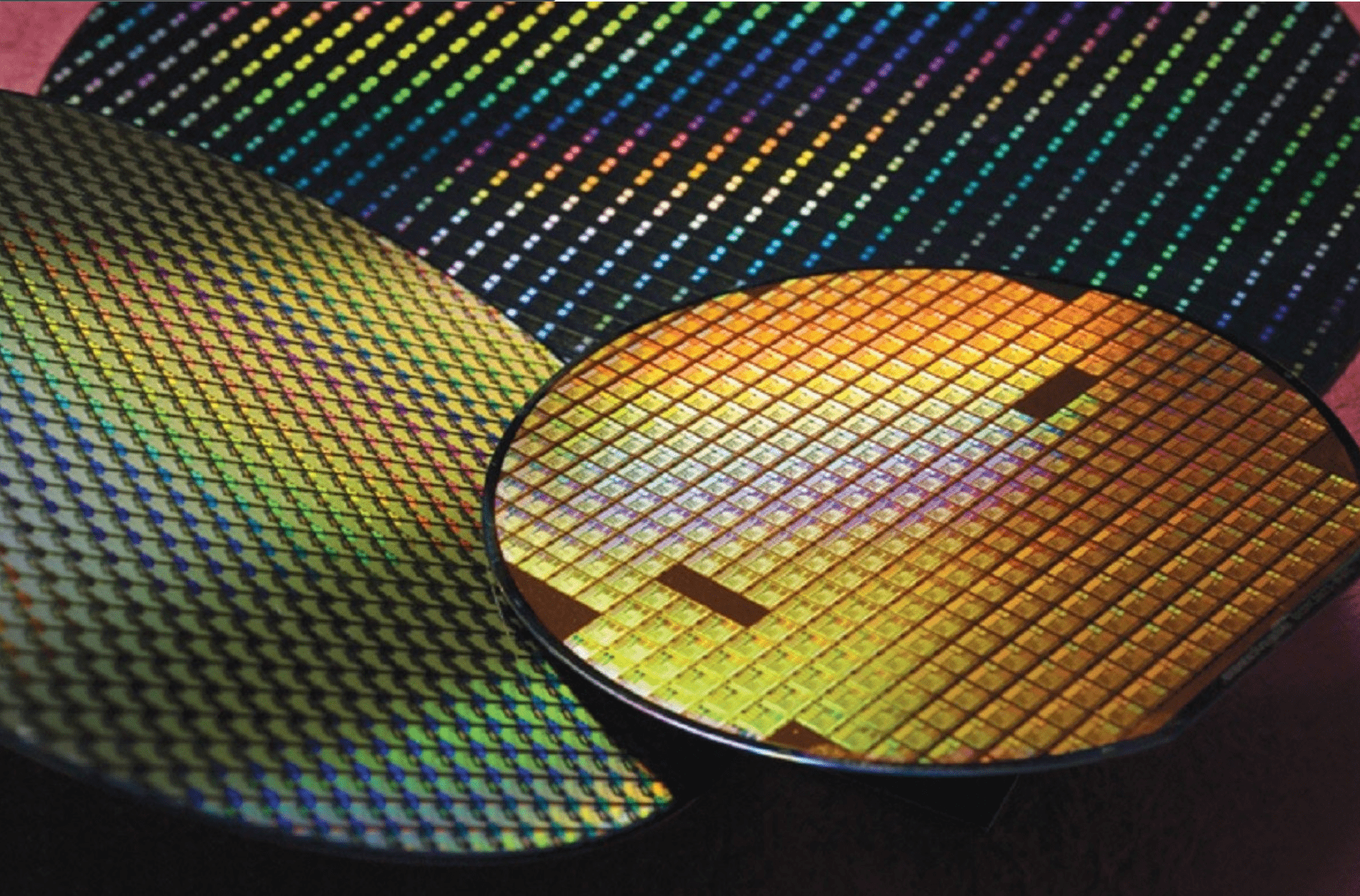

Manufacturing chips on semiconductor silicon wafers, picture/TSMC

But it turns out that Nvidia has no such idea, at least in the short term, and there is no way to leave TSMC. Just before Sam Altman complained that Nvidia's GPU was not enough, Nvidia founder and CEO Huang Renxun said at COMPUTEX that Nvidia's next-generation chips will still be handed over to TSMC.

The core technical reason is that, from V100, A100 to H100, Nvidia's high-end accelerator cards all use TSMC's CoWoS advanced packaging technology to solve the integration of chip storage and computing in the context of high computing power AI. And CoWoS advanced packaging core technology: no TSMC.

In 2012, TSMC launched the exclusive CoWoS advanced packaging technology, which realized a one-stop service from wafer foundry to terminal packaging. Customers including Nvidia, Apple and many other chip manufacturers have adopted it in all high-end products. In order to meet Nvidia's urgent needs, TSMC even adopted a partial subcontracting method, but it did not include the CoWoS process, and TSMC still focused on the most valuable advanced packaging part.

According to the estimates of Nomura Securities, TSMC’s CoWoS annual production capacity will be about 70,000 to 80,000 wafers by the end of 2022, and it is expected to increase to 140,000 to 150,000 wafers by the end of 2023. By the end of 2024, it is expected to challenge the production capacity of 200,000 wafers.

However, far away can’t solve the near fire. The production capacity of TSMC’s advanced CoWoS packaging is seriously in short supply. Since last year, TSMC’s CoWoS orders have doubled. This year, the demand from Google and AMD is also strong. Even Nvidia will further strive for higher priorities through the personal relationship between Huang Renxun and TSMC founder Zhang Zhongmou.

TSMC, Figure /

write at the end

Due to the epidemic and geopolitical changes in the past few years, everyone has realized that a cutting-edge technology built on sand-chips are so important. After ChatGPT, AI has attracted worldwide attention again, and with the desire for artificial intelligence and accelerated computing power, countless chip orders have also poured in.

The design and manufacture of high-end GPUs require a long period of R&D investment and accumulation, and they need to face insurmountable hardware and software barriers. This has also led to the fact that in this "feast of computing power", Nvidia and TSMC can get most of them. The cake and the right to speak.

Whether it is today's concern about generative AI or the last wave of deep learning that focused on image recognition, the speed at which Chinese companies are catching up in AI software capabilities is obvious to all. However, when Chinese companies spend huge sums of money and turn their bows to AI, they seldom focus on the lower-level hardware.

But behind the AI acceleration, two of the four most important GPUs are already restricted in China, and the other two castrated A800 and H800 not only slow down the catching up speed of Chinese companies, but also cannot rule out the risk of restriction. Compared with the competition on the big model, perhaps, we need to see the competition of Chinese companies at a lower level.

This article comes from " Ray Technology ", 36 Krypton is authorized to publish.