Key Insights

- DeCC introduces the ability to preserve data privacy on an inherently transparent public blockchain, enabling private computation and state without sacrificing decentralization.

- DeCC ensures the security of data during its use, solving a key vulnerability in traditional and blockchain systems by implementing encrypted calculations without exposing plaintext.

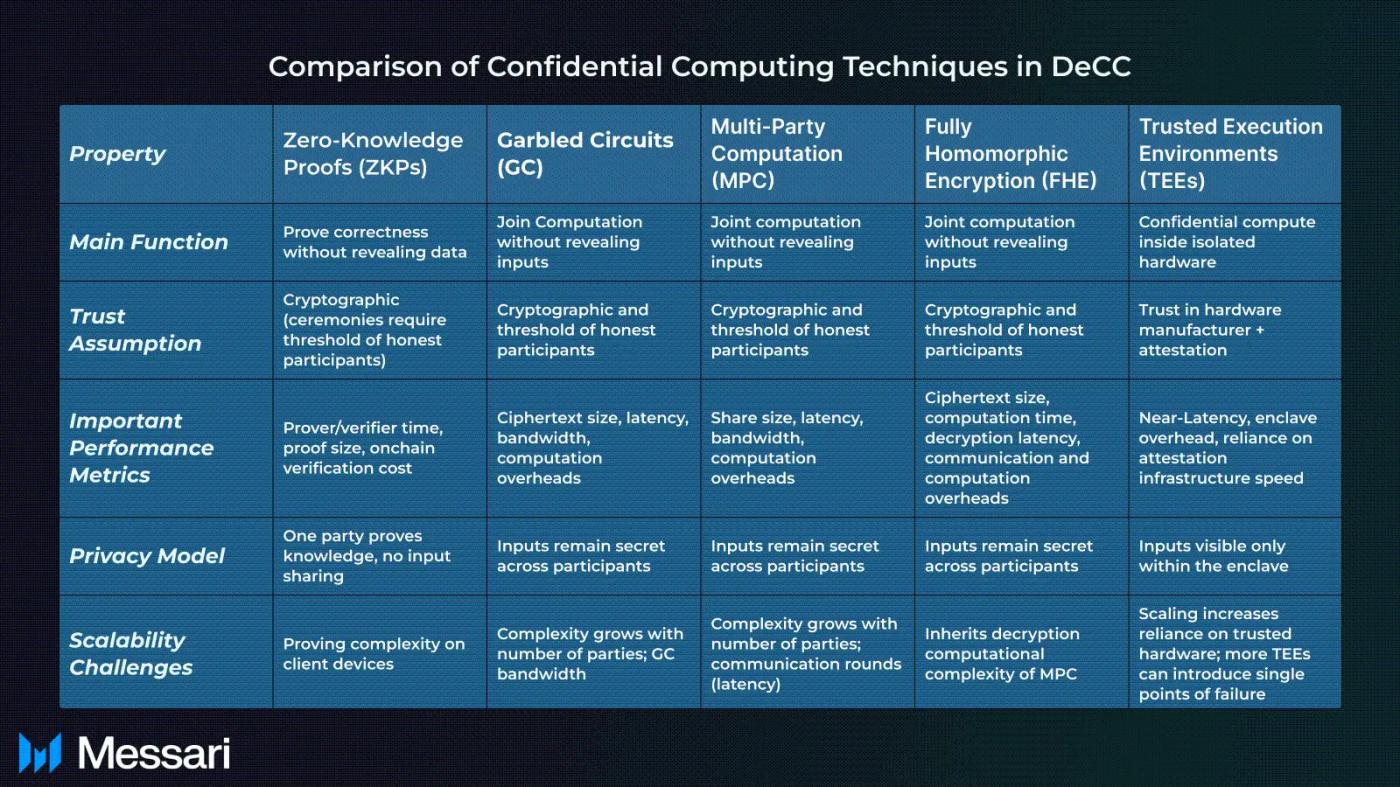

- Trustless confidentiality is achieved by combining cryptographic tools (such as ZKP, MPC, GC, FHE) and TEE with proofs. Each technology provides different trade-offs in performance and trust and can be combined to obtain stronger guarantees.

- Over $1 billion has been invested in DeCC projects, reflecting the growing momentum in the field, with teams focusing on practical integrations and developer-facing infrastructure.

Introduction: The Evolution of Data Computing and Security

Blockchain technology introduced a new paradigm of decentralization and transparency, but it also brought trade-offs. In the first wave of crypto privacy, often referred to as "Privacy 1.0", tools like mixers, tumblers, and private transactions (e.g., Zcash, Monero, and Beam.mw) provided users with a degree of anonymity for financial transfers. These solutions were specialized, largely limited to hiding sender and receiver identities, and disconnected from the broader application infrastructure.

The second wave is taking shape. Privacy is no longer limited to hiding transactions, but extends to full computations. This shift marks the emergence of decentralized confidential computing (DeCC), also known as Privacy 2.0. DeCC introduces private computing as a core feature of decentralized systems, allowing data to be processed securely without revealing the underlying inputs to other users or the network.

Unlike typical smart contract environments where all state changes and inputs are publicly visible, DeCC keeps data encrypted throughout the computation process and only reveals what is necessary for correctness and verification. This enables applications to maintain private state on top of a public blockchain infrastructure. For example, by using multi-party computation (MPC), a group of hospitals can analyze their combined data sets without any institution seeing the raw patient data of the others. Where transparency once limited what blockchain could support, privacy unlocks entirely new classes of use cases that require confidentiality.

DeCC is implemented by a set of technologies designed for secure data processing. These technologies include zero-knowledge proofs (ZKP), multi-party computation (MPC), garbled circuits (GC), and fully homomorphic encryption (FHE), all of which rely on cryptography to enforce privacy and correctness. Trusted execution environments (TEEs) complement these tools by providing hardware-based isolation to enable secure off-chain execution. Together, these technologies form the foundation of the DeCC technology stack.

The potential applications are vast: decentralized financial systems where trading strategies remain confidential, public health platforms that extract insights from private data, or artificial intelligence models trained on distributed datasets without exposing the underlying inputs. All of these require building privacy-preserving computation into the infrastructure layer of blockchain systems.

This report explores the current state of DeCC and its broader significance. We begin by contrasting how data is handled in traditional systems versus the DeCC framework, and why transparency alone is insufficient for many decentralized applications. We then examine the core technologies underpinning DeCC, how they differ, and how they can be combined to balance tradeoffs in performance, trust, and flexibility. Finally, we paint a picture of the ecosystem, highlighting the capital flowing into the space, the teams building in production, and what this momentum means for the future of decentralized computing.

Traditional Data Processing and Decentralized Confidential Computing (DeCC)

To understand the need for DeCC, it helps to understand how data is handled in traditional computing environments and where the weak links are. In classic computing architectures, data typically exists in three states: at rest (stored on disk/database), in transit (moving across the network), and in use (processed in memory or CPU). Thanks to decades of security advances, the confidential computing industry has reliable solutions for two of these states.

- Data at rest: Encrypted using disk-level encryption or database-level encryption (e.g. AES). Commonly found in enterprise systems, mobile devices, and cloud storage.

- Data in transit: Protected by secure transmission protocols such as TLS/SSL, which ensure data is encrypted when moving between systems or across networks.

- Data in Use : Traditionally, encrypted data received from storage or the network is decrypted before processing. This means workloads run on plaintext, leaving data in use unprotected and exposed to potential threats. DeCC aims to address this vulnerability by enabling computation without revealing the underlying data.

While the first two states are well protected, securing data in use remains a challenge. Whether it’s a bank server calculating interest payments or a cloud platform running a machine learning model, data often has to be decrypted in memory. At that moment, it’s vulnerable: a malicious system administrator, a malware infection, or a compromised operating system could snoop or even change sensitive data. Traditional systems mitigate this with access controls and isolated infrastructure, but fundamentally, there is a period when the “crown jewels” exist in plaintext inside a machine.

Now consider blockchain-based projects. They take transparency to a higher level: data is not only potentially decrypted on one server, but is often replicated in plaintext on thousands of nodes around the world. Public blockchains like Ethereum and Bitcoin intentionally broadcast all transaction data for consensus. This is fine if your data is only financial information that is intended to be public (or pseudonymous). But it completely breaks down if you want to use blockchain for any use case involving sensitive or personal information. For example, in Bitcoin, every transaction amount and address is visible to everyone - which is great for auditability, but terrible for privacy. With smart contract platforms, any data you put into a contract (your age, your DNA sequence, your business's supply chain information) becomes public to every network participant. No bank wants all of its transactions to be public, no hospital wants to put patient records on a public ledger, and no gaming company wants to reveal the secret states of its players to everyone.

Data life cycle and its vulnerabilities

In the traditional data processing lifecycle, users typically send data to a server, which decrypts and processes it, then stores the results (possibly encrypting them on disk) and sends back a response (encrypted via TLS). The vulnerability is obvious: the server holds the raw data while it's in use. If you trust the server and its security, that's fine—but history shows that servers can be hacked or insiders can abuse access. Enterprises deal with this problem with rigorous security practices, but they're still wary of putting extremely sensitive data in the hands of others.

In contrast, in the DeCC approach, the goal is that sensitive data is never visible in plaintext to any single entity at any time, even while it is being processed. Data may be split across multiple nodes, processed within an encrypted envelope, or cryptographically proven without being revealed. Confidentiality can thus be maintained throughout the entire lifecycle, from input to output. For example, instead of sending raw data to a server, a user can send an encrypted version or a share of their secret to a network of nodes. These nodes run the computation in a way that none of them can learn the underlying data, and users get an encrypted result that only they (or authorized parties) can decrypt.

Why transparency isn't enough in crypto

While public blockchains solve the trust problem (we no longer need to trust a central operator; the rules are transparent and enforced by consensus), they do so by sacrificing privacy. The mantra is: “Don’t put anything on-chain that you don’t want to be public.” For simple cryptocurrency transfers, this may be fine in some cases; for complex applications, it quickly becomes a serious limitation. As the Penumbra team (building a private DeFi chain) puts it, in DeFi today, “information leakage becomes value leakage when users interact on-chain,” leading to front-running and other vulnerabilities. If we want decentralized exchanges, lending markets, or auctions to operate fairly, participants’ data (bids, positions, strategies) often needs to be hidden; otherwise, outsiders can exploit this knowledge in real time. Transparency makes every user’s actions public, which is different from how traditional markets work, and for good reasons.

Additionally, many valuable blockchain use cases outside of finance involve personal or regulated data that cannot be made public by law. Consider decentralized identity or credit scoring — a user may want to prove attributes about themselves (“I am over 18” or “My credit score is 700”) without revealing their entire identity or financial history. Under a fully transparent model, this is impossible; any proof you put on-chain will leak data. DeCC technologies like zero-knowledge proofs are designed to address this problem, allowing for selective disclosure (prove X without revealing Y). As another example, a company may want to use blockchain for supply chain tracking, but does not want competitors to see its raw inventory logs or sales data. DeCC can submit encrypted data on-chain and only share decrypted information with authorized partners, or use ZK proofs to prove compliance with certain criteria without revealing trade secrets.

How DeCC achieves trustless confidential computing

Addressing the limitations of transparency in decentralized systems requires infrastructure that can maintain confidentiality during active computations. Decentralized confidential computing provides such infrastructure by introducing a set of technologies that apply cryptography and hardware-based methods to protect data throughout its lifecycle. These technologies are designed to ensure that sensitive inputs cannot be compromised even during processing, eliminating the need to trust any single operator or intermediary.

The DeCC technology stack includes zero-knowledge proofs (ZKP), which allow one party to prove that a computation has been performed correctly without revealing the input; multi-party computation (MPC), which allows multiple parties to jointly compute a function without exposing their respective data; garbled circuits (GC) and fully homomorphic encryption (FHE), which allow computations to be performed directly on encrypted data; and trusted execution environments (TEEs), which provide hardware-based isolation for secure execution. Each of these technologies has unique operating characteristics, trust models, and performance profiles. In practice, they are often integrated to address different security, scalability, and deployment constraints in applications. The following sections outline the technical foundations of each technology and how they enable trustless, privacy-preserving computation in decentralized networks.

1. Zero-Knowledge Proof (ZKP)

Zero-knowledge proofs are one of the most influential cryptographic innovations applied to blockchain systems. ZKPs allow one party (the prover) to prove to another party (the verifier) that a given statement is true, without revealing any information other than the validity of the statement itself. In other words, it enables a person to prove that they know something, such as a password, a private key, or the solution to a problem, without revealing the knowledge itself.

Take the “Where’s Wally” puzzle, for example. Let’s say someone claims they found Wally in a crowded picture, but doesn’t want to reveal his exact location. Instead of sharing the full picture, they take a close-up photo of Wally’s face, with a timestamp, that’s zoomed in so that the rest of the picture doesn’t show up. A verifier can confirm that Wally has been found without knowing where he is in the picture. This proves the claim is correct without revealing any additional information.

More formally, a zero-knowledge proof allows a prover to prove that a particular statement is true (e.g., “I know a key that hashes to this public value” or “this transaction is valid according to the protocol rules”) without revealing the inputs or internal logic behind the computation. The verifier is convinced by the proof but is not given any other information. One of the earliest and most widely used examples in blockchain is zk-SNARKs (Zero-Knowledge Succinct Non-Interactive Argument of Knowledge). Zcash uses zk-SNARKs to allow users to prove that they have private keys and are sending valid transactions without revealing the sender address, recipient, or amount. The network only sees a short cryptographic proof that the transaction is legitimate.
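The prover/verifier interaction described above can be sketched with a Schnorr-style proof of knowledge of a private key, made non-interactive with a hash-based challenge. This is a minimal illustration, not the zk-SNARK construction Zcash uses; the group parameters `P`, `Q`, `G` and the function names are assumptions chosen for readability, and the numbers are far too small for real security.

```python
import hashlib
import secrets

# Toy group parameters (assumption: illustrative only, far too small for real use).
P = 467   # prime modulus, P = 2*Q + 1
Q = 233   # prime order of the subgroup generated by G
G = 4     # generator of the order-Q subgroup

def challenge(*values):
    """Derive the challenge by hashing the public values (Fiat-Shamir style)."""
    data = b"|".join(str(v).encode() for v in values)
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q

def prove(secret_x):
    """Prover: show knowledge of secret_x behind y = G^secret_x without revealing it."""
    y = pow(G, secret_x, P)        # public key
    r = secrets.randbelow(Q)       # one-time random nonce
    t = pow(G, r, P)               # commitment to the nonce
    c = challenge(G, y, t)
    s = (r + c * secret_x) % Q     # response; r masks secret_x
    return y, t, s

def verify(y, t, s):
    """Verifier: check G^s == t * y^c using only public values."""
    c = challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P

public_key, commitment, response = prove(secret_x=57)
print("proof accepted:", verify(public_key, commitment, response))
```

The verifier only ever sees `public_key`, `commitment`, and `response`; the check passes because G^s = G^(r + c·x) = t · y^c, so a valid response can only be produced by someone who knows the secret exponent.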

How ZKPs enable confidential computing: In the context of DeCC, ZKPs shine when you want to prove that a computation was done correctly on hidden data. Instead of having everyone re-perform the computation (as in traditional blockchain verification), the prover can do the computation privately and then publish a proof. Others can use this tiny proof to verify that the computation result is correct without seeing the underlying input. This preserves privacy and significantly improves scalability (because verifying a concise proof is much faster than re-running the entire computation). Projects like Aleo have built an entire platform around this idea: users run a program offline on their private data and generate a proof; the network verifies the proof and accepts the transaction. The network doesn't know the data or what specifically happened, but it knows that whatever it was, it followed the rules of the smart contract. This effectively creates private smart contracts, which is impossible on Ethereum's public virtual machine without ZKP. Another emerging application is zk-rollups for privacy: not only do they batch transactions for scalability, but they also use ZK to hide the details of each transaction (unlike regular rollups, where the data is usually still public).

ZK proofs are powerful because their security rests on mathematics rather than on trust in the prover. Some proof systems additionally require a setup phase, a "ceremony" (a multi-party cryptographic protocol that generates secret/random information), and rely on the honesty of its participants. If the cryptographic assumptions hold (e.g., certain problems remain hard to solve), a proof cannot be forged to assert a false statement, and by design it does not leak any additional information. This means that you don't have to trust the prover at all; either the proof passes, or it doesn't.

Limitations: The tradeoff historically has been performance and complexity. Generating ZK proofs can be computationally expensive (several orders of magnitude more than doing the computation normally). In early constructions, even proving simple statements could take minutes or longer, and the cryptography was complex and required a special setup (a trusted setup ceremony), though newer proof systems like STARKs avoid some of these issues. There are also functional limitations: most ZK schemes involve a single prover proving something to many verifiers. They don’t address private shared state (private data that “belongs to” or is composed of inputs from multiple users, as is the case in auctions and AMMs). In other words, ZK can prove that I correctly computed Y from my secret X, but it doesn’t by itself allow two people to jointly compute a function of their two secrets. To address the private shared state problem, ZK-based solutions typically use other techniques like MPC, GC, and FHE. Furthermore, pure ZKPs typically assume that the prover actually knows or possesses the data being proven.

There is also the issue of size: early zk-SNARKs produced very short proofs (only a few hundred bytes), but some newer zero-knowledge proofs (especially those without a trusted setup, like bulletproofs or STARKs) can be larger (tens of KB) and slower to verify. However, continued innovation (Halo, Plonk, etc.) is rapidly improving efficiency. Ethereum and others are investing heavily in ZK as a scaling and privacy solution.

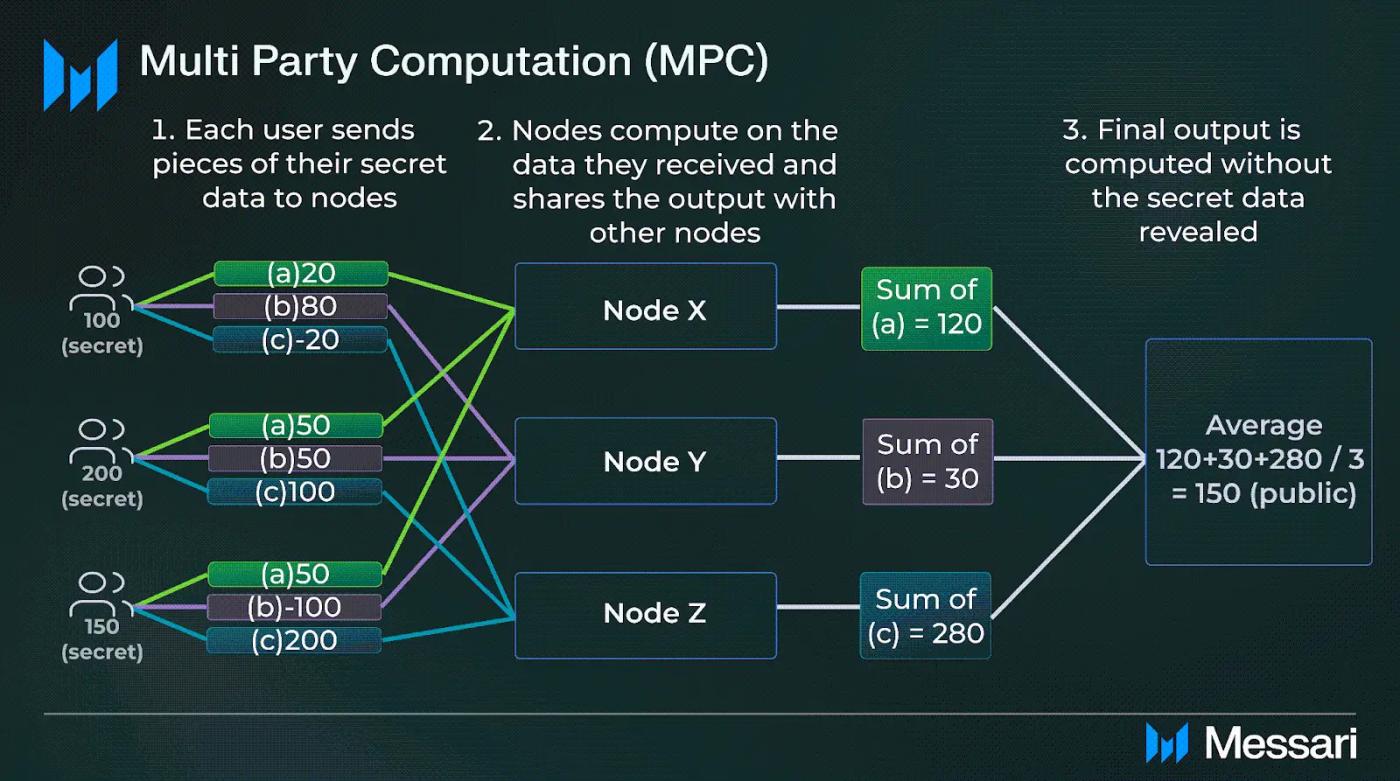

2. Multi-party computation (MPC)

While ZK proofs allow a party to prove something about its own private data, secure multi-party computation (here primarily referring to techniques based on secret sharing (SS)) addresses a related but different challenge: how to actually compute something collaboratively without revealing the inputs. In an MPC protocol, multiple independent parties (or nodes) jointly compute a function over all of their inputs, such that each learns only the result and nothing about the inputs of the others. The foundations of secret sharing-based MPC were laid in a paper co-authored by Ivan Damgård of the Partisia Blockchain Foundation in the late 1980s. Since then, various techniques have been created.

A simple example is a group of companies that want to calculate an industry-wide average salary for a certain position, but none of them want to reveal their internal data. Using MPC, each company inputs its data into a joint calculation. The protocol ensures that no company can see any other participant's raw data, but all participants receive the final average. The calculation is performed across the group via a cryptographic protocol, eliminating the need for a central authority. In this setup, the process itself acts as a trusted intermediary.

How does MPC work? Each participant's input is mathematically split into a number of pieces (shares) and distributed to all participants. For example, if my secret is 42, I might generate some random numbers that sum to 42 and give each party one of them as its share. No single share reveals any information, but together the shares determine the secret. The participants then perform calculations on these shares, passing messages back and forth, so that at the end they hold shares of the output that can be combined to reveal the result. Throughout this process, no one can see the original input; they can only see the encoded or obfuscated data.
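The share-splitting idea, applied to the average-salary example, can be sketched with additive secret sharing. This is a minimal sketch assuming honest participants and an illustrative public modulus `MODULUS`; the salary figures and function names are hypothetical, and real protocols add authentication and secure channels.

```python
import secrets

MODULUS = 2**61 - 1  # assumed public modulus; all share arithmetic is done mod this prime

def share(secret, n_parties):
    """Split a secret into n random-looking shares that sum to it mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % MODULUS)
    return shares

def reconstruct(shares):
    """Combine shares; any proper subset alone is uniformly random."""
    return sum(shares) % MODULUS

# Hypothetical inputs: each company holds one private salary figure.
salaries = [42, 58, 50]
n = len(salaries)

# all_shares[i][j] is the share of company i's salary sent to party j.
all_shares = [share(salary, n) for salary in salaries]

# Each party j locally adds the shares it received; it never sees a raw salary.
partial_sums = [sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)]

# Only the combined partial sums reveal the total, and hence the average.
total = reconstruct(partial_sums)
average = total / n
print("average salary:", average)
```

Addition works directly on shares because the sharing is linear; multiplication needs extra machinery, which is where most of the communication cost of MPC comes from.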

Why is MPC important? Because it is inherently decentralized, it does not rely on a single secure enclave (as with a TEE) or a single prover (as with ZK). It eliminates the need to trust any single party. A common definition describes it this way: when computation is distributed among participants, there is no need to rely on any single party to protect privacy or ensure correctness. This makes it a cornerstone of privacy-preserving technology. If you have 10 nodes performing MPC computations, typically a large portion of them would need to collude or be compromised to leak secrets. This fits well with the distributed trust model of blockchain.

Challenges of MPC: Privacy does not come without a cost. MPC protocols often incur overhead, primarily in terms of communication. To jointly compute, parties must exchange multiple rounds of encrypted messages. The number of communication rounds (sequential back-and-forth messages) and their bandwidth requirements grow with the complexity of the function and the number of parties involved. Ensuring that computations remain efficient is tricky with more parties involved. There is also the issue of honest versus malicious actors. Basic MPC protocols assume that participants follow the protocol (may be curious but do not deviate). Stronger protocols can handle malicious actors (who may send false information in an attempt to break privacy or correctness), but this adds more overhead to detect and mitigate cheating. Interestingly, blockchains can help by providing a framework for punishing misbehavior. For example, staking and penalty mechanisms can be used if a node deviates from the protocol, making MPC and blockchain a complementary pair.

In terms of performance, significant progress has been made. Preprocessing techniques allow heavy cryptographic computations to be done before the actual inputs are known. For example, correlated random data (called Beaver triples) can be generated in advance and used later to speed up multiplication operations. This way, when the computations on real inputs actually need to be done (the online phase), they can be done much faster. Some modern MPC frameworks can compute fairly complex functions between a small number of parties in a few seconds or less. There is also research on scaling MPC to many parties by organizing it into networks or committees.
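The offline/online split can be illustrated with a Beaver-triple multiplication over additive shares. This is a sketch under simplifying assumptions: the triple is produced by a trusted dealer inside the function (real systems generate triples with a dedicated protocol), participants are honest, and `P` and the function names are illustrative.

```python
import secrets

P = 2**61 - 1  # assumed public prime modulus

def share(value, n=3):
    """Additive sharing of value among n parties."""
    parts = [secrets.randbelow(P) for _ in range(n - 1)]
    parts.append((value - sum(parts)) % P)
    return parts

def reconstruct(parts):
    return sum(parts) % P

def beaver_multiply(x_shares, y_shares):
    """Return shares of x*y without any party learning x or y."""
    n = len(x_shares)

    # Offline phase (assumption: a trusted dealer; independent of the real inputs).
    a, b = secrets.randbelow(P), secrets.randbelow(P)
    a_sh, b_sh, c_sh = share(a, n), share(b, n), share(a * b % P, n)

    # Online phase: parties open d = x - a and e = y - b.
    # These values are safe to reveal because a and b are uniformly random masks.
    d = reconstruct([(x_i - a_i) % P for x_i, a_i in zip(x_shares, a_sh)])
    e = reconstruct([(y_i - b_i) % P for y_i, b_i in zip(y_shares, b_sh)])

    # Local step: x*y = c + d*b + e*a + d*e, computed share by share.
    z_sh = [(c_i + d * b_i + e * a_i) % P for a_i, b_i, c_i in zip(a_sh, b_sh, c_sh)]
    z_sh[0] = (z_sh[0] + d * e) % P  # the public term d*e is added by one party only
    return z_sh

product_shares = beaver_multiply(share(6), share(7))
print("reconstructed product:", reconstruct(product_shares))
```

The expensive part (creating the triple) happens before inputs exist; the online phase needs only two openings and a few local multiplications.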

MPC is particularly important for applications such as private multi-user dApps (e.g., auctions where bids are kept confidential, executed via MPC), privacy-preserving machine learning (multiple entities jointly training models without sharing data, an active area known as federated learning with MPC), and distributed secret management (for example, threshold key management). A specific crypto example is the Partisia Blockchain, which integrates MPC into its core to enable enterprise-grade privacy on public blockchains. Partisia uses a network of MPC nodes to process private smart contract logic and then publish commitments or encrypted results on-chain.

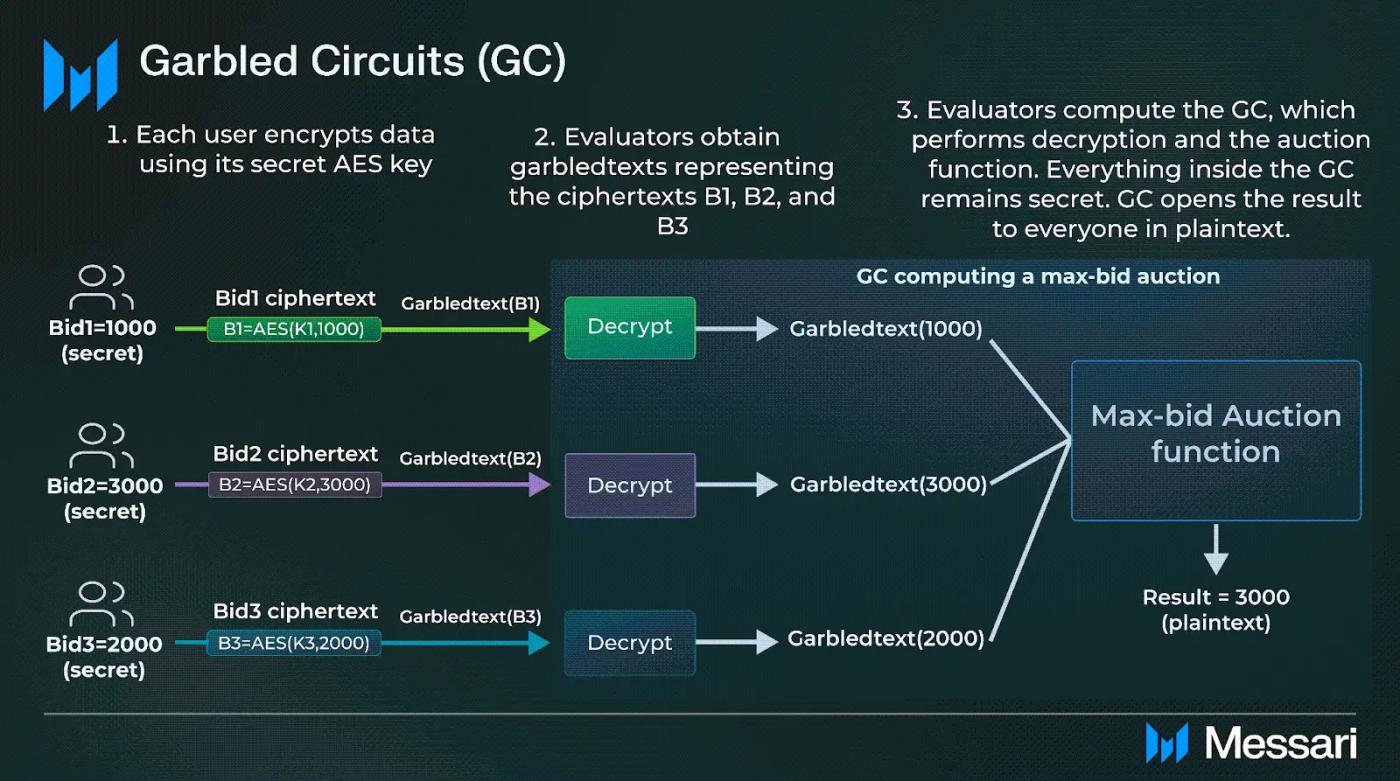

3. Garbled Circuit (GC)

Garbled circuits are a fundamental concept in modern cryptography and the earliest proposed solution for computing on encrypted data. In addition to supporting encrypted computing, GC methods are also used in various privacy-preserving protocols, including zero-knowledge proofs and anonymous/unlinkable tokens.

What is a circuit? A circuit is a general computational model that can represent any function, from simple arithmetic to complex neural networks. Although the term is usually associated with hardware, circuits are widely used in various DeCC technologies, including ZK, MPC, GC, and FHE. A circuit consists of input wires, intermediate gates, and output wires. When values (Boolean or arithmetic) are provided to the input wires, the gates process the values and produce the corresponding output. The layout of the gates defines the function being computed. Functions or programs are converted to circuit representations using hardware description languages such as VHDL or domain-specific cryptographic compilers.
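To make the wires-and-gates model concrete, here is a small sketch of a plaintext Boolean circuit: a one-bit full adder written as a list of gates and evaluated wire by wire. The representation (tuples of gate type, two input wires, one output wire) and all names are assumptions for illustration, not the format of any particular compiler.

```python
# Supported gate types and their Boolean functions.
GATES = {
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "XOR": lambda a, b: a ^ b,
}

# Each gate: (gate type, first input wire, second input wire, output wire).
# The layout of the gates defines the function: here, a one-bit full adder.
FULL_ADDER = [
    ("XOR", "a", "b", "t1"),
    ("XOR", "t1", "cin", "sum"),
    ("AND", "a", "b", "t2"),
    ("AND", "t1", "cin", "t3"),
    ("OR", "t2", "t3", "cout"),
]

def evaluate(circuit, inputs):
    """Evaluate gates in order; every wire value is visible in plaintext."""
    wires = dict(inputs)
    for gate, in1, in2, out in circuit:
        wires[out] = GATES[gate](wires[in1], wires[in2])
    return wires

result = evaluate(FULL_ADDER, {"a": 1, "b": 1, "cin": 0})
print("sum bit:", result["sum"], "carry bit:", result["cout"])
```

Note that `wires` exposes every intermediate value; hiding exactly these values is what the encrypted variants of circuit evaluation are designed to do.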

What are garbled circuits? Standard circuits leak all data during execution: the values on the input and output wires and the outputs of intermediate gates are all in plaintext. In contrast, garbled circuits encrypt all of these components. Inputs, outputs, and intermediate values are converted to encrypted values (garbled values), and the gates are called garbled gates. Garbled circuit algorithms are designed so that evaluating the circuit does not leak any information about the original plaintext values. Converting plaintext to garbled values and back again is called encoding and decoding.
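A single garbled (obfuscated) AND gate can be sketched as follows. This is a textbook-style toy, not the protocol used by any project mentioned here: it uses SHA-256 as a one-time pad generator, a fixed zero tag to recognise the correct table row, and it omits the oblivious transfer step by which an evaluator would normally obtain input labels. All names are illustrative assumptions.

```python
import hashlib
import secrets

LABEL_BYTES = 16
TAG = b"\x00" * 16  # redundancy that lets the evaluator recognise the right row

def pad(label_a, label_b):
    """Derive a 32-byte one-time pad from the two input labels."""
    return hashlib.sha256(label_a + label_b).digest()

def xor(left, right):
    return bytes(x ^ y for x, y in zip(left, right))

def garble_and_gate():
    """Garbler: pick random labels for each wire value and encrypt the truth table."""
    labels = {
        wire: (secrets.token_bytes(LABEL_BYTES), secrets.token_bytes(LABEL_BYTES))
        for wire in ("a", "b", "out")
    }
    table = []
    for bit_a in (0, 1):
        for bit_b in (0, 1):
            out_label = labels["out"][bit_a & bit_b]
            table.append(xor(pad(labels["a"][bit_a], labels["b"][bit_b]), out_label + TAG))
    secrets.SystemRandom().shuffle(table)  # hide which row belongs to which inputs
    return labels, table

def evaluate_gate(table, label_a, label_b):
    """Evaluator: holds one label per input wire and learns one output label only."""
    key = pad(label_a, label_b)
    for row in table:
        plain = xor(key, row)
        if plain[LABEL_BYTES:] == TAG:
            return plain[:LABEL_BYTES]
    raise ValueError("no row decrypted with these labels")

labels, table = garble_and_gate()
out = evaluate_gate(table, labels["a"][1], labels["b"][1])
print("output encodes bit:", labels["out"].index(out))
```

The evaluator works only with random-looking labels; mapping the final label back to a bit (decoding) requires information that the garbler chooses to release.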

How do GCs solve the problem of computing on encrypted data? Garbled circuits were proposed by Andrew Yao in the 1980s as the first general solution for computing on encrypted data. His original example from 1982, known as the millionaires' problem, involves a group of people wanting to know who is the richest without revealing their actual wealth to each other. Using garbled circuits, each participant encrypts their input (their wealth) and shares the encrypted version with everyone else. The group then uses garbled gates to step through a circuit designed to compute the maximum value. The final output (e.g. the identity of the richest person) is decrypted, but no one learns the exact input of any other participant. While this example uses a simple maximum function, the same approach can be applied to more complex tasks, including statistical analysis and neural network inference.

Breakthroughs that make GC suitable for DeCC. Recent research led by Soda Labs has applied garbled circuit technology to a decentralized setting. These advances focus on three key areas: decentralization, composability, and public auditability. In a decentralized setting, computation is separated between two independent groups: garblers (responsible for generating and distributing garbled circuits) and evaluators (responsible for executing garbled circuits). Garblers provide circuits to a network of evaluators, which run these circuits on demand as directed by the smart contract logic.

This separation enables composability, the ability to build complex computations from smaller atomic operations. Soda Labs achieves this by generating a continuous stream of garbled circuits corresponding to low-level virtual machine instructions (e.g., for the EVM). These building blocks can be dynamically assembled at runtime to perform more complex tasks.

For public auditability, Soda Labs proposes a mechanism that allows external parties (regardless of whether they participated in the computation) to verify that the result has been computed correctly. This verification can be done without exposing the underlying data, adding an additional layer of trust and transparency.

Importance of GC to DeCC: Garbled circuits provide low-latency, high-throughput computation on encrypted inputs. As demonstrated on the COTI Network mainnet, the initial implementation supports approximately 50 to 80 confidential ERC20 transactions per second (ctps), with future versions expected to achieve higher throughput. The GC protocol relies on widely adopted cryptographic standards such as AES and libraries like OpenSSL, which are widely used in fields such as healthcare, finance, and government. AES with larger key sizes (such as AES-256) is also generally regarded as resistant to known quantum attacks, which supports future compatibility with post-quantum security requirements.

GC-based systems are compatible with client environments and do not require specialized hardware or GPUs, unlike some TEE or FHE deployments. This reduces infrastructure costs and enables deployment on a wider range of devices, including lower-capacity machines.

Challenges of GC: The main limitation of garbled circuits is the communication overhead. Current implementations require sending approximately 1MB of data to the evaluator for each confidential ERC20 transaction. However, this data can be preloaded long before execution, so no latency is introduced during real-time use. Continued improvements in bandwidth availability, including the trend described by Nielsen's Law (which predicts that bandwidth doubles every 21 months), and active research into garbled circuit compression can help reduce this overhead.
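As a rough back-of-the-envelope check, the figures quoted in this section can be combined in a few lines. The sketch assumes, purely for illustration, that a single evaluator receives the full ~1MB per transaction at the stated 50 to 80 ctps; actual deployments may distribute or preload this data differently.

```python
DOUBLING_MONTHS = 21   # doubling period quoted for Nielsen's Law above
MB_PER_TX = 1.0        # approximate data per confidential ERC20 transaction

def required_mb_per_second(tx_per_second):
    """Sustained download rate if every transaction needs MB_PER_TX of circuit data."""
    return tx_per_second * MB_PER_TX

def bandwidth_growth_factor(months):
    """Multiplicative bandwidth growth after the given number of months."""
    return 2 ** (months / DOUBLING_MONTHS)

for ctps in (50, 80):
    rate = required_mb_per_second(ctps)
    print(f"{ctps} ctps -> about {rate:.0f} MB/s ({rate * 8:.0f} Mbit/s)")

print(f"growth over 5 years: about {bandwidth_growth_factor(60):.1f}x")
```

Under these assumptions the data volume is substantial today, which is why preloading and circuit compression matter alongside the general bandwidth trend.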

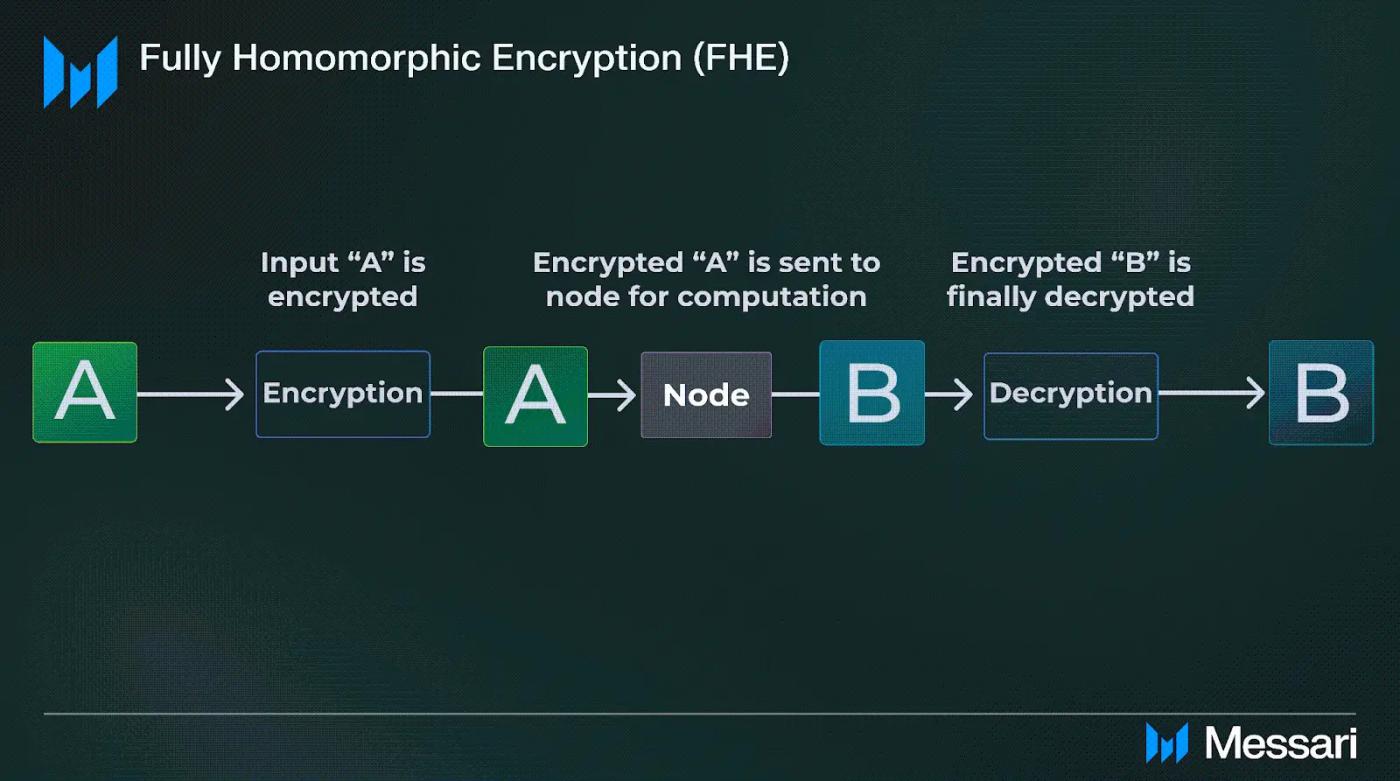

4. Fully Homomorphic Encryption (FHE)

Fully homomorphic encryption is often seen as a cryptographic magic trick. It allows one to perform arbitrary computations on data while it remains encrypted, and then decrypt the result to get the correct answer as if it had been computed on the plaintext. In other words, with FHE, you can outsource computations on private data to an untrusted server that operates only on the ciphertext and still produces a ciphertext that you can decrypt to get the correct answer, all without the server ever seeing your data or the plaintext result.
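The "compute on ciphertext, decrypt the result" workflow can be illustrated with a toy Paillier cryptosystem. Note the important caveat: Paillier is only additively homomorphic, not fully homomorphic, so it supports addition of encrypted values but not arbitrary computation. It is used here only because it fits in a few lines. The tiny primes and all function names are assumptions for readability and offer no real security.

```python
import math
import secrets

def keygen(p=1789, q=1861):
    """Toy key generation with tiny primes (assumption: illustration only)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # modular inverse; valid because the generator is fixed to n + 1
    return n, (lam, mu)

def encrypt(n, message):
    """Encrypt an integer 0 <= message < n under public key n."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, message, n2) * pow(r, n, n2)) % n2

def decrypt(n, private_key, ciphertext):
    lam, mu = private_key
    u = pow(ciphertext, lam, n * n)
    return ((u - 1) // n * mu) % n

def add_encrypted(n, c1, c2):
    """Untrusted server: multiplying ciphertexts adds the hidden plaintexts."""
    return (c1 * c2) % (n * n)

n, private_key = keygen()
c_total = add_encrypted(n, encrypt(n, 20), encrypt(n, 22))  # server sees only ciphertexts
print("decrypted sum:", decrypt(n, private_key, c_total))
```

A fully homomorphic scheme extends this idea to both addition and multiplication on ciphertexts, which is what makes arbitrary computation possible and also what makes it so much more expensive.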

For a long time, FHE was purely theoretical. The concept has been known since the 1970s, but a working construction wasn't found until 2009. Since then, there has been steady progress in speeding FHE up. Even so, it's still computationally intensive. Operations on encrypted data can be thousands or millions of times slower than operations on plaintext data. But what was once astronomically slow is now just slow, and optimizations and specialized FHE accelerators are rapidly improving the situation.

Why is FHE revolutionary for privacy? With FHE, you can have a single server or blockchain node do the computation for you, and as long as the encryption remains strong, the node can learn nothing. This is a very pure form of confidential computing, where the data is always encrypted everywhere. For decentralization, you can also have multiple nodes each performing FHE computations for redundancy or consensus, but none of them have any secret information. They are all just operating on ciphertext.

In the context of blockchain, FHE opens up the possibility of fully encrypted transactions and smart contracts. Imagine a network like Ethereum where you send encrypted transactions to validators, they execute smart contract logic on the encrypted data, and include an encrypted result in the chain. You or an authorized party can decrypt the result later. To others, it's a bunch of incomprehensible gibberish, but they may have a proof that the computation was valid. This is where FHE combined with ZK may come into play, proving that the encrypted transaction followed the rules. This is basically what the Fhenix project is pursuing: an EVM-compatible Layer-2 with all computations natively supporting FHE.

Real-world use cases enabled by FHE: In addition to blockchain, FHE is already attractive for cloud computing. For example, it allows you to send encrypted database queries to the cloud and get encrypted answers back, which only you can decrypt. In the context of blockchain, one attractive scenario is privacy-preserving machine learning. FHE can allow decentralized networks to run AI model inference on encrypted data provided by users, so that the network does not learn your inputs or results, which only you know when you decrypt them. Another use case is in the public sector or health data collaboration. Different hospitals can encrypt their patient data using a common key or a federated key setup, and a network of nodes can calculate aggregate statistics on all hospitals' encrypted data and deliver the results to researchers for decryption. This is similar to what MPC can do, but FHE may be implemented with a simpler architecture, requiring only an untrusted cloud or network of miners to crunch the numbers, at the cost of more computation per operation.

Challenges of FHE: The biggest challenge is performance. Although progress has been made, FHE is still typically a thousand to a million times slower than plaintext operations, depending on the computation and the scheme. This means that it is currently only suitable for limited tasks, such as simple functions or batching many operations at once in certain schemes, but it is not yet a technology that you can use to run complex virtual machines that perform millions of steps, at least without powerful hardware support. There is also the issue of ciphertext size: fully homomorphic operations tend to bloat data. Techniques such as bootstrapping, which refreshes ciphertexts that accumulate noise as operations are executed, are necessary for computations of arbitrary length and add further overhead. However, many applications do not need completely arbitrary depth. They can use leveled HE, which supports a fixed number of multiplications before decryption and can avoid bootstrapping.

Integrating FHE is complex for blockchains. If every node had to perform FHE operations for every transaction, this could be prohibitively slow with current technology. This is why projects like Fhenix start with L2s or sidechains, where perhaps a powerful coordinator or subset of nodes does the heavy FHE computations, while the L2 batches the results. Over time, as FHE becomes more efficient, or as specialized FHE accelerators (ASICs or GPUs) become available, it may become more widely adopted. Notably, several companies and academic groups are actively researching hardware to accelerate FHE, recognizing its importance to the future of data privacy in Web2 and Web3 use cases.

Combining FHE with other techniques: Often, FHE might be combined with MPC or ZK to address its weaknesses. For example, multiple parties could hold shares of an FHE key so that no single party could decrypt alone, essentially creating a threshold FHE scheme. This combines MPC with FHE to avoid single-point decryption failure. Alternatively, a zero-knowledge proof could be used to prove that an FHE encrypted transaction is formatted correctly without decrypting it, so that blockchain nodes can be sure it is valid before processing it. This is what some call a hybrid model of ZK-FHE. In fact, a composable DeCC approach might be to use FHE to do the heavy lifting of data processing, since it is one of the only methods that can perform computations while always encrypted, and use ZK proofs to ensure that the computation did not do anything invalid, or to allow others to verify the results without seeing them.
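The key-sharing idea behind threshold FHE can be sketched with Shamir secret sharing. This is a minimal illustration under stated assumptions: the "key" is just a random integer rather than a real FHE secret key, the 3-of-5 parameters are hypothetical, and the function names are invented for this example. In an actual threshold FHE scheme the parties would not reconstruct the key in one place. Each would produce a partial decryption from its share. The sketch only shows why fewer than the threshold number of shares is not enough.

```python
import secrets

# Field modulus: the Mersenne prime 2^127 - 1.
PRIME = 2**127 - 1


def split_secret(secret, threshold, num_shares):
    """Split `secret` so that any `threshold` shares recover it."""
    # Random polynomial of degree threshold - 1 with constant term = secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def poly(x):
        acc = 0
        for c in reversed(coeffs):  # Horner evaluation
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, poly(x)) for x in range(1, num_shares + 1)]


def recover_secret(shares):
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Hypothetical decryption key, split 3-of-5 across key holders.
key = secrets.randbelow(PRIME)
shares = split_secret(key, threshold=3, num_shares=5)

print(recover_secret(shares[:3]) == key)   # any three shares suffice
print(recover_secret(shares[2:]) == key)   # a different three also work
```

With only two shares, interpolation yields an unrelated value, which is the property that removes the single point of decryption failure.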

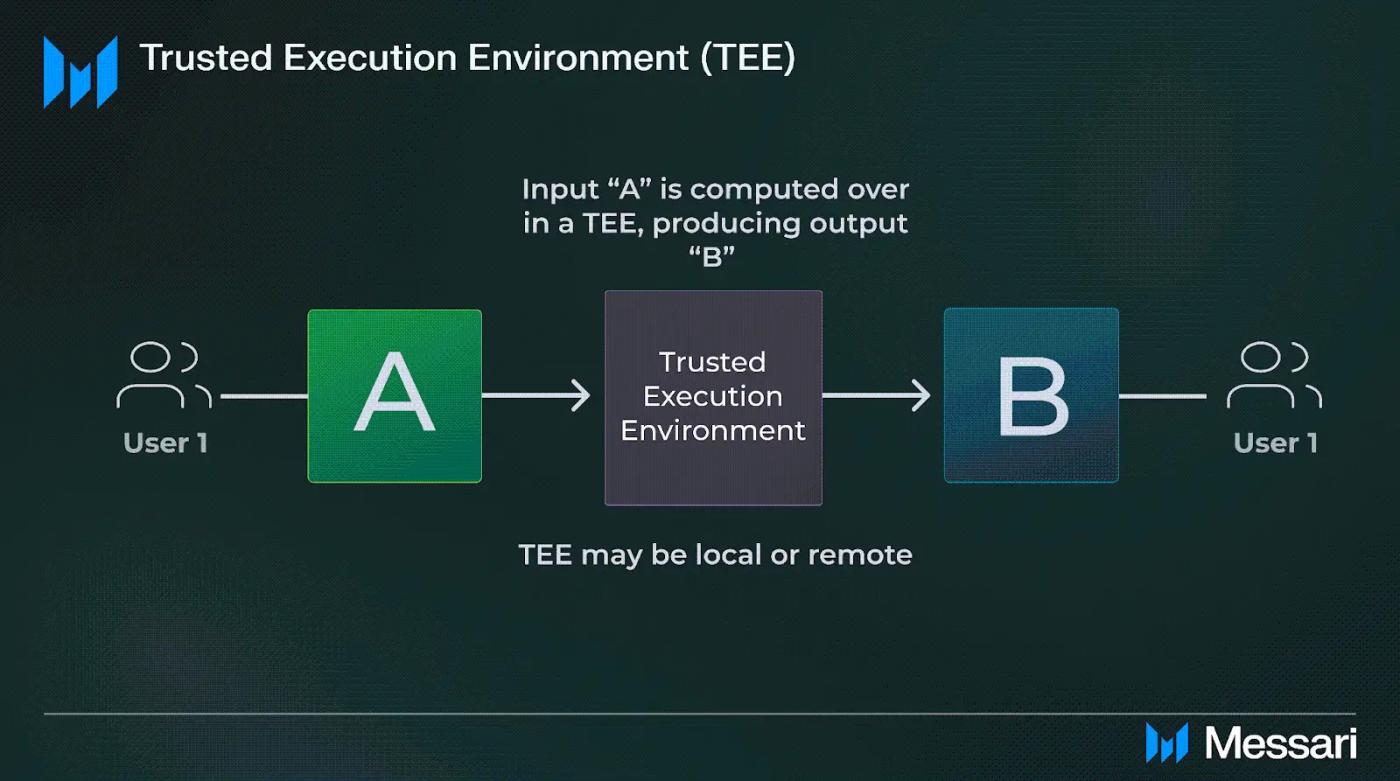

5. Trusted Execution Environment (TEE)

The Trusted Execution Environment (TEE) is a fundamental component of decentralized confidential computing. A TEE is a secure area within the processor that isolates code and data from the rest of the system, ensuring that its contents are protected even if the operating system is compromised. A TEE provides confidentiality and integrity during computation with minimal performance overhead. This makes it one of the most practical technologies available for secure general-purpose computing.

Think of it this way: a TEE is like reading a confidential document in a locked room that no one but you can enter or peek into. You are free to review and work on the document, but when you leave the room, you take only the results with you and lock everything else behind. Someone outside never sees the document directly, only the end result that you choose to reveal.

Modern TEEs have made significant progress. Intel's TDX and AMD SEV support secure execution of entire virtual machines, and NVIDIA's high-performance GPUs (including the H100 and H200) now have TEE capabilities. These upgrades make it possible to run arbitrary applications in confidential environments, including machine learning models, backend services, and user-facing software. For example, Intel TDX combined with NVIDIA H100 can run inference on models with more than 70 billion parameters with little performance loss. Unlike cryptographic methods that require custom tools or restricted environments, modern TEEs can run containerized applications without modification. This allows developers to write applications in Python, Node.js, or other standard languages while maintaining data confidentiality.

A typical example is Secret Network, the first blockchain to implement general-purpose smart contracts with private state by leveraging TEEs (specifically Intel SGX). Each Secret node runs a smart contract execution runtime inside an enclave. Transactions sent to smart contracts are encrypted, so only the enclave can decrypt them, execute the smart contract, and produce encrypted output. The network uses remote attestation to ensure that the nodes are running genuine SGX and approved enclave code. This way, smart contracts on Secret Network can process private data, such as encrypted inputs, and even the node operator cannot read it. Only the enclave can, and it releases only what it should release, usually just a hash or an encrypted result.

Phala Network and Marlin use a related but distinct model. Phala's architecture is built around TEE-driven worker nodes that perform secure off-chain computations and return verified results to the blockchain. This setup enables Phala to protect data confidentiality and execution integrity without leaking the original data to any external party. The network is designed to be scalable and interoperable, supporting privacy-preserving workloads across decentralized applications, cross-chain systems, and AI-related services. Like Secret Network, Phala demonstrates how TEEs can be used to scale confidential computing to decentralized environments by isolating sensitive logic in verifiable hardware enclaves.
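The attestation check described above for Secret Network can be sketched in a few lines. This is a simplified model, not how SGX or any real network implements it: real attestation uses asymmetric signatures chained to the hardware vendor, whereas this sketch substitutes an HMAC with a shared key so it stays self-contained. The `Quote` type, `HARDWARE_KEY`, and all function names are hypothetical.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Stand-in for a vendor-rooted attestation key (assumption for this sketch).
HARDWARE_KEY = b"stand-in for vendor-rooted attestation key"


@dataclass(frozen=True)
class Quote:
    measurement: str    # hash of the code loaded into the enclave
    report_data: bytes  # e.g. the enclave's public key for encrypting inputs
    signature: bytes    # produced by the (simulated) hardware


def measure(code: bytes) -> str:
    """Code measurement: a hash that identifies exactly what will run."""
    return hashlib.sha256(code).hexdigest()


def make_quote(code: bytes, report_data: bytes, key: bytes = HARDWARE_KEY) -> Quote:
    """What the simulated hardware does when asked to attest."""
    m = measure(code)
    sig = hmac.new(key, m.encode() + report_data, hashlib.sha256).digest()
    return Quote(m, report_data, sig)


def verify_quote(quote: Quote, approved: set, key: bytes = HARDWARE_KEY) -> bool:
    """Accept only quotes that are authentic AND run approved code."""
    expected = hmac.new(
        key, quote.measurement.encode() + quote.report_data, hashlib.sha256
    ).digest()
    if not hmac.compare_digest(expected, quote.signature):
        return False  # not produced by genuine hardware
    return quote.measurement in approved  # code hash must be on the allow list


# The network publishes the hash of the open source runtime it trusts.
approved = {measure(b"approved enclave runtime v1")}

honest = make_quote(b"approved enclave runtime v1", b"enclave-pubkey")
modified = make_quote(b"runtime with a backdoor", b"enclave-pubkey")

print(verify_quote(honest, approved))    # True
print(verify_quote(modified, approved))  # False
```

The two checks are separate on purpose: the signature establishes that genuine hardware produced the report, and the allow-list lookup establishes that the code inside is the version everyone agreed on.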

Modern deployment of TEEs in DeCC includes several best practices:

- Remote attestation and open source runtime : Projects publish the code that will run inside the enclave (usually a modified WASM interpreter or a specialized runtime) and provide a procedure for attesting to it. For example, each Secret Network node generates an attestation report that proves it is running the Secret enclave code on genuine SGX hardware. Other nodes and users can verify this attestation before trusting the node to handle encrypted queries. Because the runtime code is open source, the community can audit what the enclave is supposed to do, although they still have to trust the hardware to do only those things.

- Redundancy and consensus : Instead of a single enclave performing the task, some systems have multiple nodes or enclaves perform the same task and then compare the results. This is similar to the MPC approach, but at a higher level. If an enclave deviates or is compromised and produces a different result, it can be detected by majority voting, assuming that not all enclaves are compromised. This was the approach of the early Enigma project (which evolved into Secret). They planned to have multiple SGX enclaves perform computations and cross-check. In practice, some networks currently trust a single enclave per contract for performance, but the design can be extended to multi-enclave consensus for higher security.

- Temporary keys and frequent resets : To reduce the risk of key leakage, a TEE can generate new encryption keys for each session or task and avoid storing long-term secrets. For example, if a DeCC service is doing confidential data processing, it may use temporary session keys that are discarded frequently. This means that even if a leak occurs later, past data may not be exposed. Key rotation and forward secrecy are recommended so that even if the enclave is compromised at time T, the data from before time T remains safe.

- Used for privacy, not consensus integrity : As mentioned earlier, TEEs are best used for privacy protection, not core consensus integrity. Thus, a blockchain might use TEEs to keep data confidential, but not for validating blocks or protecting state transitions on the ledger, the latter of which is best left to the consensus protocol. In this setup, a compromised enclave might leak some private information, but cannot, for example, forge token transfers on the ledger. This design relies on cryptographic consensus for integrity and the enclave for confidentiality. This is a separation of concerns that limits the impact of a TEE failure.

- Confidential VM deployments : Some networks have begun deploying complete confidential virtual machines (CVMs) using modern TEE infrastructure. Examples include Phala’s cloud platform, Marlin Oyster Cloud, and SecretVM. These CVMs can run containerized workloads in a secure environment, enabling general privacy-preserving applications in decentralized systems.
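The "temporary keys and frequent resets" practice in the list above can be sketched as a one-way key ratchet. This is an illustrative construction, not the mechanism of any specific network: the class name and labels are hypothetical, and a production design would typically use a vetted key derivation scheme. The point it shows is that each session key is derived from a chain key that is then overwritten, so a later compromise does not reveal earlier keys.

```python
import hashlib
import hmac
import secrets


class SessionKeyRatchet:
    """Derives a fresh key per session and forgets the previous chain state."""

    def __init__(self, seed: bytes):
        self._chain_key = seed

    def next_session_key(self) -> bytes:
        # Derive this session's key from the current chain key.
        session_key = hmac.new(self._chain_key, b"session", hashlib.sha256).digest()
        # Advance the chain with a one-way step and drop the old value.
        # Recovering the old chain key from the new one would require
        # inverting HMAC-SHA256.
        self._chain_key = hmac.new(self._chain_key, b"chain", hashlib.sha256).digest()
        return session_key


# Hypothetical enclave generating keys for three consecutive tasks.
ratchet = SessionKeyRatchet(secrets.token_bytes(32))
keys = [ratchet.next_session_key() for _ in range(3)]
print(len(set(keys)))  # 3 distinct keys
```

If the enclave state leaks after the third task, the attacker learns only the current chain key. The keys used for tasks one through three were derived from chain values that no longer exist in memory.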

TEEs can also be combined with other techniques. One promising idea is to run MPC inside TEEs. For example, multiple enclaves on different nodes, each holding a portion of the secret data, can jointly compute via MPC while each enclave keeps its share secure. This hybrid provides defense in depth: an attacker would need to compromise enclaves on enough different nodes at the same time to obtain all the secret shares. Another combination is to use ZK proofs to prove what the enclave did. For example, an enclave could output a short zk-SNARK proving that it correctly followed the protocol on some encrypted input. This can reduce the level of trust placed in the enclave: even a malicious enclave that deviates from the prescribed computation cannot produce a valid proof unless it also breaks the ZK cryptography. These ideas are still in the research stage, but are being actively explored.

In current practice, projects like TEN (Tencrypt Network, an Ethereum Layer-2 solution) use secure enclaves to implement confidential rollups. TEN's approach uses enclaves to encrypt transaction data and execute smart contracts privately, while still producing an optimistically verified rollup block. They emphasize that secure enclaves provide a high degree of confidence in the code being run, meaning that users can be sure how their data is processed because the code is known and proven, even if they can't see the data itself. This highlights a key advantage of TEEs: deterministic, verifiable execution. Everyone can agree on the code hash that should be run, and the enclave ensures that only that code is executed while keeping the inputs hidden.

Composable DeCC Technology Stack (Hybrid Approach)

One exciting aspect of Privacy 2.0 is that these technologies are not isolated: although each can be, and is, used independently, they can also be combined. Just as traditional cloud security uses multiple layers of defense such as firewalls, encryption, and access controls, DeCC can layer technologies to leverage their respective strengths.

Several combinations are already being explored: MPC with TEE, ZK with TEE, GC with ZK, FHE with ZK, etc. The rationale is clear: no single technology is perfect, and combining these approaches can make up for their individual limitations.

Here are some of the modes that are being developed:

- MPC with TEE (MPC inside enclave) : In this approach, an MPC network runs where computations for each node occur inside a TEE. For example, consider a network of ten nodes jointly analyzing encrypted data using MPC. If an attacker compromises a node, they only have access to the enclave that holds a single share of the secret, which itself does not leak any information. Even if the SGX on that node is compromised, only a small portion of the data is exposed. To compromise the entire computation, a certain number of enclaves need to be compromised. This greatly improves security, provided that the integrity of the enclave remains intact. Tradeoffs include higher overhead from MPC and reliance on TEE, but for high-assurance scenarios, this mix is reasonable. This model effectively layers cryptography and hardware trust guarantees.

- ZK Proofs with MPC or FHE : ZK proofs can act as an audit layer. For example, an MPC network can compute a result and then collectively generate a zk-SNARK proving that the computation followed the defined protocol without exposing the inputs. This gives external consumers (e.g., the blockchain receiving the result) added confidence in the result. Similarly, in an FHE environment, since the data remains encrypted, ZK proofs can be used to prove that the computation was performed correctly on the ciphertext inputs. Projects like Aleo use this strategy: the computation is done privately, but verifiable proofs attest to its correctness. The complexity should not be underestimated, but the potential for composability is huge.

- ZK Proofs and GC : Zero-knowledge proofs are often used with garbled circuits to protect against potentially malicious garblers. In more complex GC-based systems involving multiple garblers and evaluators, ZK proofs also help verify that individual garbled circuits have been correctly combined into larger computational tasks.

- TEE with ZK (shielded execution with proofs) : TEEs can produce proofs of correct execution. For example, in a sealed bid auction, the enclave can compute the winner and output a ZK proof confirming that the computation was performed correctly on the encrypted bid without revealing any bid details. This approach allows anyone to verify the results with limited trust in the enclave. Although still largely experimental, early research prototypes are investigating these confidential proofs of knowledge to combine the performance of TEEs with the verifiability of ZK.

- FHE with MPC (Threshold FHE) : A known challenge of FHE is that the decryption step reveals the result to the entity holding the key. To decentralize this, the FHE private key can be split among multiple parties using MPC or secret sharing. After the computation is complete, the decryption protocol is executed collectively, ensuring that no single party can independently decrypt the result. This structure eliminates centralized key escrow, making FHE suitable for threshold use cases such as private voting, encrypted mempools, or collaborative analysis. Threshold FHE is an active research area with close ties to blockchain.

- Secure hardware and cryptography for performance isolation : Future architectures may assign different workloads to different privacy-preserving technologies. For example, computationally intensive AI tasks can run in a secure enclave, while more security-focused logic (such as key management) is handled by cryptographic protocols such as MPC, GC, or FHE. Conversely, enclaves can be used for lightweight tasks that are performance-critical but have limited consequences for leakage. By decomposing the privacy needs of an application, developers can assign each component to the most appropriate trust layer, similar to how encryption, access control, and HSM are layered in traditional systems.
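The claim in the "MPC with TEE" item above, that a single node's share leaks nothing on its own, can be made concrete with additive secret sharing across ten nodes. This is a minimal sketch under stated assumptions: the inputs are hypothetical, the function names are invented for the example, and the scheme shown requires all ten partial results to reconstruct (an n-of-n setting), whereas the text also contemplates threshold variants in which a subset suffices. In the hybrid design, each node's local step would run inside its own enclave.

```python
import secrets

MODULUS = 2**64  # all arithmetic is done modulo this value
NUM_NODES = 10


def share(value, num_nodes=NUM_NODES):
    """Split `value` into random-looking shares that sum to it mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(num_nodes - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def reconstruct(shares):
    return sum(shares) % MODULUS


# Hypothetical private inputs from three data owners.
inputs = [42, 17, 99]
per_input_shares = [share(v) for v in inputs]

# Node i receives the i-th share of every input and adds them locally.
# Each individual share is uniformly random, so a single node (or a single
# compromised enclave) learns nothing about any input.
node_partials = [
    sum(shares[i] for shares in per_input_shares) % MODULUS
    for i in range(NUM_NODES)
]

# Only the combination of all partial sums reveals the aggregate.
total = reconstruct(node_partials)
print(total)  # 158
```

An attacker who extracts the contents of one enclave obtains one uniformly random number per input, which is the defense-in-depth property the hybrid design relies on.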

The composable DeCC technology stack model emphasizes that applications do not need to choose a single privacy approach. They can integrate multiple DeCC technologies and customize them according to the needs of specific components. For example, many emerging privacy networks are being built in a modular way, supporting ZK and MPC, or providing configurable confidentiality layers depending on the use case.

Granted, combining techniques increases engineering and computational complexity, and the performance cost may be prohibitive in some cases. However, for high-value workflows, especially in finance, AI, or governance, this layered security model makes sense. Early examples are already operational. Oasis Labs has prototyped a TEE MPC hybrid scheme for private data markets. Academic projects have demonstrated MPC and GC computations verified by zk-SNARKs, highlighting the growing interest in cross-model verification.

Future dApps may run encrypted storage via AES or FHE, use a mix of MPC, GC, and TEE for computation, and publish verifiable ZK proofs on-chain. Although invisible to users, this privacy stack will enforce strong protections against data breaches and unauthorized inferences. The ultimate goal is to make this level of privacy infrastructure the default and transparent, providing applications that feel familiar but operate with fundamentally different trust assumptions.

Venture Capital and Developer Momentum

Privacy-preserving computing has become a notable area of capital allocation in the crypto space, with investment activity continuing to increase. Investors and builders are increasingly confident that decentralized confidential computing (DeCC) will unlock new market opportunities by enabling private applications that would otherwise not be feasible on public blockchain infrastructure.

Cumulative venture capital funding for leading DeCC projects runs into the hundreds of millions of dollars. Notable examples include:

- Aleo , a Layer-1 network for building private applications using zero-knowledge proofs, has raised approximately $228 million. This includes a $28 million Series A led by a16z in 2021 and a $200 million Series B at a $1.45 billion valuation in 2022. Aleo is investing in developer tools and its broader ecosystem of privacy-preserving applications.

- Partisia Blockchain , which combines secure multi-party computation (MPC) with blockchain infrastructure, raised $50 million in 2021 to expand its privacy-preserving Layer-1 and Layer-2 platforms. Its funding came from strategic and institutional backers focused on confidential data use cases.

- Fhenix , an Ethereum Layer-2 that implements fully homomorphic encryption (FHE), raised $15 million in a Series A round in June 2024, bringing total funding to $22 million. Early investors included a16z and Hack VC, reflecting confidence in the feasibility of encrypted smart contract execution.

- Mind Network , which focuses on building a privacy layer for data processing based on FHE, raised $10 million in a Pre-A round of funding in September 2024, bringing its total funding to $12.5 million. The project targets applications such as secure voting, private data sharing, and confidential AI execution.

- Arcium , a confidential computing network on Solana, raised $5.5 million from Greenfield Capital in early 2025, bringing its total funding to date to $9 million. Arcium positions itself as an encrypted computing layer for high-throughput chains.

- COTI has partnered with Soda Labs to commit $25 million from its Ecosystem Fund to develop an MPC-based privacy Layer-2. The collaboration focuses on advancing garbled circuit technology to enable privacy-preserving payments.

- TEN , an Ethereum-based Layer-2 that uses a trusted execution environment (TEE), raised $9 million in a round led by the R3 consortium in late 2023, bringing total funding to $16 million. The team includes engineers with experience building permissioned blockchain infrastructure.

- Penumbra , a Cosmos-based privacy zone for DeFi, raised $4.75 million in a seed round led by Dragonfly Capital in 2021. The project aims to support private swaps and MEV-resistant transactions.

- Aleph Zero , a privacy-enabled layer-1 using DAG consensus and zero-knowledge technology, has raised about $21 million through public and private token sales. It positions itself as a layer with built-in confidentiality capabilities.

- Traditional projects also continue to contribute to the momentum. Secret Network , which evolved from the Enigma project, raised $45 million in a token sale in 2017 to launch the first TEE-based smart contract platform, bringing total investment to $400 million. iExec , a decentralized cloud platform that supports TEEs, raised about $12 million and has since received funding to advance confidential data tools.

If token distributions, ecosystem funds, and public offering proceeds are included, total investment in the DeCC space could be close to $1 billion. This is comparable to funding levels in areas such as Layer-2 scaling or modular infrastructure.

The DeCC ecosystem is also expanding through partnerships and open source collaboration. Organizations such as the Confidential Computing Consortium have included blockchain-based members such as iExec and Secret Network to explore standards across private computing. Academic initiatives, developer hackathons, and privacy-focused conferences are fostering technical talent and community engagement.

Projects are also improving accessibility through SDKs, languages, and APIs that abstract the complexity of cryptography. For example, tool frameworks like Circom, ZoKrates, and Noir simplify zero-knowledge development, while platforms like Arcis from Arcium lower the barrier to entry for building with MPC. Developers can now integrate privacy into decentralized applications without requiring advanced cryptography expertise.

Collaborations with businesses and government agencies further validate the field. Partisia has collaborated with the Okinawa Institute of Science and Technology Graduate University (OIST) on a joint cryptography research project, while Secret Network has partnered with Eliza Labs to develop confidential AI solutions.

As funding and ecosystem activity continue to grow, DeCC is becoming one of the most well-capitalized and fastest-growing areas of crypto infrastructure, with high interest from builders and institutional stakeholders alike. That being said, as with any emerging technology cycle, many projects in the space may fail to realize their vision or gain meaningful adoption. However, a small number may persist, setting the technical and economic standards for privacy-preserving computing across decentralized systems.

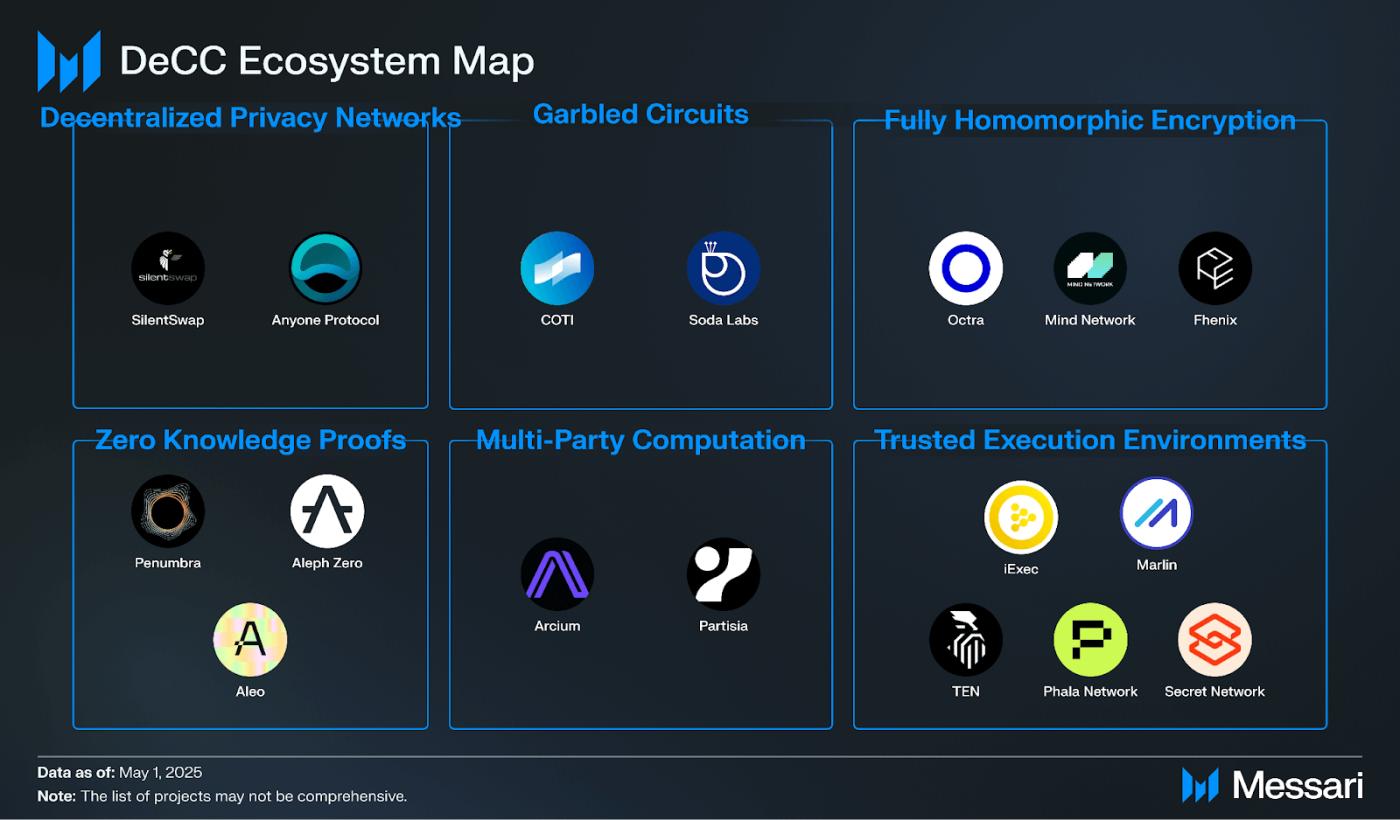

DeCC Ecosystem

The Decentralized Confidential Computing (DeCC) ecosystem consists of technologies and frameworks that support secure computation across distributed systems. These tools enable sensitive data to be processed, stored, and transmitted without being exposed to any single party. By combining cryptographic techniques, hardware-enforced isolation, and decentralized network infrastructure, DeCC addresses key privacy limitations in public blockchain environments. This includes challenges related to transparent smart contract execution, unprotected off-chain data usage, and the difficulty of protecting confidentiality in systems designed for openness.

DeCC infrastructure can be roughly divided into six technical pillars:

- Zero-knowledge proofs (ZKPs) for verifiably private computation.

- Multi-party computation (MPC) for collaborative computation without data sharing.

- Garbled circuits (GC) for performing computations on standard encrypted data.

- Fully homomorphic encryption (FHE) for performing computations directly on encrypted input.

- Trusted execution environments (TEEs) for hardware-based confidential processing.

- Decentralized privacy networks for routing and infrastructure-level metadata protection.

These components are not mutually exclusive and are often combined to meet specific performance, security, and trust requirements. The following sections highlight projects that implement these technologies and how they fit into the broader DeCC landscape.

Projects based on Fully Homomorphic Encryption (FHE)

Many DeCC projects use fully homomorphic encryption (FHE) as their primary mechanism for implementing encrypted computations. These teams focus on applying FHE to areas such as private smart contracts, secure data processing, and confidential infrastructure. While FHE is more computationally intensive than other methods, its ability to perform computations on encrypted data without decryption provides strong security guarantees. Major projects in this category include Octra, Mind Network, and Fhenix, each of which is experimenting with different architectures and use cases to bring FHE closer to real-world deployment.

Fhenix

Fhenix is an FHE R&D company dedicated to building scalable, real-world fully homomorphic encryption applications. Fhenix's FHE coprocessor (CoFHE) is an off-chain computing layer designed to securely process encrypted data. It offloads heavy cryptographic operations from the main blockchain (such as Ethereum or L2 solutions) to improve efficiency, scalability, and privacy without compromising decentralization, while remaining easy to integrate. This architecture allows computation on encrypted data without decryption, ensuring end-to-end privacy for decentralized applications. Fhenix is fully EVM-compatible, allowing developers to easily and quickly build FHE-based applications using Solidity and existing Ethereum tools such as Hardhat and Remix. Its modular design includes components such as FheOS and Fhenix's Threshold Network, which manage FHE operations and FHE decryption requests respectively, providing a flexible and adaptable platform for privacy-preserving applications.

Key innovations and features

- Seamless integration with EVM chains : Fhenix enables any EVM chain to access encryption functionality with minimal modification. Developers can integrate FHE-based encryption into their smart contracts using a single line of Solidity code, simplifying the process of adopting confidential computing across various blockchain networks.

- Fhenix Coprocessor : Fhenix provides a coprocessor solution that can be natively connected to any EVM chain to provide FHE-based cryptographic services. This approach allows existing blockchain platforms to enhance their privacy features without completely overhauling their infrastructure.

- Institutional Adoption and Collaboration : FHE-based confidentiality is critical to institutional adoption of Web3 technologies. A proof-of-concept project with JPMorgan Chase demonstrated the platform’s potential to meet stringent privacy requirements in the financial services sector.

- Enhanced decryption solution : The Fhenix team has developed a high-performance threshold network, combining MPC and FHE, for decrypting the results of FHE operations. Advances in Fhenix's TSN reduce decryption latency and computational overhead, enhancing the user experience of privacy-preserving applications.

- Various use cases under development : Fhenix focuses on developers who are bringing encrypted computations to existing ecosystems such as EVM chains. Current developments include applications such as confidential lending platforms, dark pools for private transactions, and confidential stablecoins, all of which benefit from FHE's ability to maintain data privacy during computation.

Mind Network

Mind Network is a decentralized platform that pioneered the integration of FHE to build a fully encrypted Web3 ecosystem. Mind Network acts as an infrastructure layer that enhances security across data, consensus mechanisms, and transactions by performing computations on encrypted data without decryption.

Key innovations and features

- First project to implement FHE on mainnet : Mind Network has achieved a major milestone by bringing its FHE to mainnet, enabling quantum-resistant, fully encrypted data computation. This advancement ensures that data remains secure throughout its entire lifecycle - during storage, transmission, and processing - addressing vulnerabilities inherent in traditional encryption methods.

- Introduction of HTTPZ : Building on the standard HTTPS protocol, Mind Network is committed to realizing the HTTPZ vision proposed by Zama, a next-generation framework that maintains continuous data encryption to achieve a fully encrypted network. This innovation ensures that data remains encrypted during storage, transmission, and computation, eliminating dependence on centralized entities and enhancing security across a variety of applications, including AI, DeFi, DePIN, RWA, and games.

- Agentic World : Mind Network’s FHE computing network is used for AI agents in the Agentic World, which is built on three pillars:

  - Consensus safety : AI agents in distributed systems must reach reliable decisions without manipulation or conflict.

  - Data privacy : AI agents can process encrypted data without exposing the data.

  - Value alignment : Embed ethical constraints into AI agents to ensure that their decisions are aligned with human values.

- FHE Bridge for Cross-Chain Interoperability : Mind Network provides an FHE bridge to facilitate a seamless decentralized ecosystem. The bridge facilitates secure interoperability between different blockchain networks. It enables encrypted data to be processed and transmitted across chains without exposing sensitive information, supporting the development of complex multi-chain applications. Chainlink is currently integrating it with CCIP.

- DeepSeek Integration : Mind Network became the first FHE project to be integrated by DeepSeek, a platform known for its advanced AI models. The integration leverages Mind Network’s FHE Rust SDK to secure encrypted AI consensus.

Octra

Octra is a general-purpose, chain-agnostic network founded by former VK (Telegram) and NSO team members with a decade of experience in the crypto space. Since 2021, Octra has been developing a proprietary hypergraph-based fully homomorphic encryption (FHE) scheme (HFHE) that allows near-instant computation on encrypted data. Unlike other FHE projects, Octra is completely independent and does not rely on third-party technology or licenses.

Key innovations and features

- Proprietary HFHE scheme : Octra's unique HFHE uses hypergraphs to achieve efficient binary operations and supports parallel computing, where different nodes and hyperedges are processed independently.

- Isolated Execution Environment : The network supports isolated execution environments, which enhances the security and privacy of decentralized applications.

- Diverse codebase : Developed primarily in OCaml and C++, with support for Rust for contracts and interoperability solutions, Octra offers flexibility and robustness in its infrastructure.

Current Development

- HFHE Sandbox : Octra's HFHE demonstration is available in its sandbox environment, showing its cryptographic technology in action.

- Testnet Progress : The first batch of validators have been connected to the testnet, marking an important step forward in network stability and reliability.

- Academic Contributions : Octra's HFHE progress will be detailed in a paper to be presented at the International Association for Cryptologic Research (IACR), reflecting their commitment to contributing to the broader cryptography community.

- Mainnet and Rollup Builder : Octra plans to launch its mainnet for key management and storage in 2025, and expects to launch the EVM/SVM rollup builder in 2026, aiming to enhance scalability and interoperability across blockchain platforms.

Fundraising and community engagement

In September 2024, Octra received $4 million in pre-seed funding led by Finality Capital Partners, with participation from investors including Big Brain Holdings, Karatage, Presto Labs, and Builder Capital. In January 2025, Octra raised a further $1.25 million through Cobie's angel syndicate investment platform Echo, which sold out in less than a minute. These investments highlight the confidence in Octra's potential to revolutionize data privacy and security. The project actively engages with the developer community through platforms such as GitHub and recently released a public WASM sandbox with an experimental version of its HFHE library for testing and feedback.

By combining proprietary HFHE technology with a powerful and flexible infrastructure, Octra aims to set new standards for secure, efficient and decentralized data processing across a wide range of applications.

Projects based on GC

A garbled circuit (GC) is a specialized form of encrypted data computation that allows two or more parties to jointly evaluate a function without revealing their respective inputs. While GC is primarily used to solve encrypted data computation problems, it can also address a variety of privacy and security issues, such as zero-knowledge proofs and anonymous token-based Internet authentication.

Projects such