This article is machine translated

Here is a key agent development technique that I assumed most people knew, until I found out while lecturing at my company that almost nobody did: tool calls, whether made through function calling or MCP, come in two types, explicit and implicit.

1️⃣ Implicit Calling: Modern Automated Tool Execution

Recent LLMs have built-in automatic tool calling capabilities. For example, you can control this feature through the automatic_function_calling flag where the SDK provides one. Because official documentation and examples focus mainly on implicit calls, if you got into MCP development only in the last two months, you have most likely been using implicit mode.

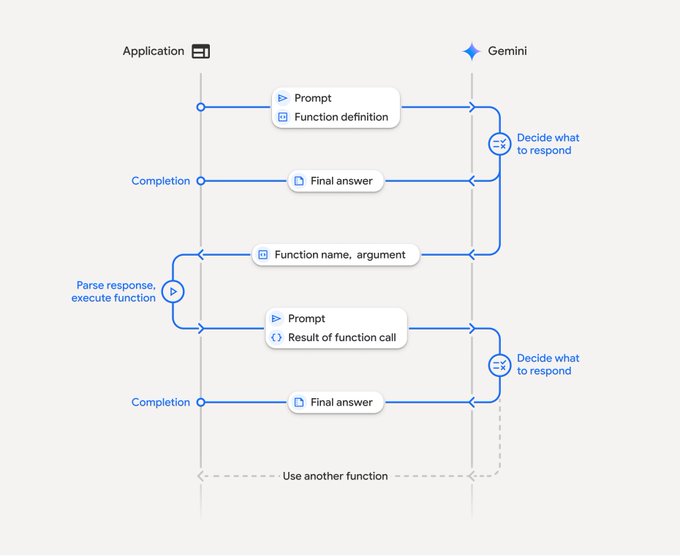

In the implicit mode, the entire tool calling process is a black box for developers. The LLM automatically determines whether tools need to be called, automatically selects appropriate tools and parameters, and automatically executes tools and integrates results. Developers only need to provide a list of tools and then directly obtain the final natural language answer.
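To make the black box concrete, here is a minimal sketch of what implicit mode looks like from the developer's side. `FakeImplicitClient` and `get_weather` are hypothetical stand-ins, not a real SDK or tool, and a keyword check takes the place of the model's decision. The point is only that the developer passes in tools and gets text back.

```python
from typing import Callable, Dict


def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would call an API or an MCP server."""
    return f"Sunny in {city}"


class FakeImplicitClient:
    """Stand-in for an SDK with automatic tool calling (not a real library)."""

    def __init__(self, tools: Dict[str, Callable[..., str]]):
        self.tools = tools

    def generate(self, query: str) -> str:
        # Everything in this method is hidden from the developer in implicit mode.
        # 1. Decide whether a tool is needed (a keyword check stands in for the model).
        if "weather" in query.lower() and "get_weather" in self.tools:
            # 2. Choose the tool and its arguments (here: the last word of the query).
            city = query.rstrip("?").split()[-1]
            # 3. Execute the tool and fold the result into the final answer.
            result = self.tools["get_weather"](city=city)
            return f"Here is what I found: {result}."
        return "I can answer that without tools."


# Developer-facing code: provide the tool list, receive natural language.
client = FakeImplicitClient(tools={"get_weather": get_weather})
answer = client.generate("What is the weather in Tokyo?")
print(answer)
```

Note that the caller never sees which tool ran or what raw data it returned, which is exactly what makes this mode convenient and opaque at the same time.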

Implicit calling is the newer technology and the direction things are heading. As large models become more capable, it should become mainstream.

Sharp colleagues have probably guessed it: after the praise comes the "but". Yes, implicit calls have drawbacks that are, for now, hard to avoid.

马东锡 NLP

@dongxi_nlp

07-15

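A highlight of Kimi K2 is that it successfully carries the token-based approach used in text tasks over to the tool-call level in agentic scenarios: in agentic tasks, a tool call is the equivalent of an "action token".

What does that mean? The explanation is as follows:

In text tasks:

CoT is a sequence of tokens

In agentic scenarios:

CoT is a sequence of tool calls, i.e. planning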

3️⃣ Explicit Calling: Manual Parsing Mode with Precise Control

In contrast, explicit mode requires developers to handle each step of the tool call by hand. You tell the LLM in the system prompt how to format a tool call request, then parse the tool call instruction out of the LLM's answer yourself, execute the corresponding tool, and finally integrate the result into the final answer.

This is the "ancient" way of calling MCP (although it only became "ancient" about half a year ago); implicit mode simply hands this part over to the LLM.

In practice, however, the explicit calls used in real development are not entirely the ancient kind.

4️⃣ Semi-automatic Backfill Explicit Calling

I made the name up myself. The method itself is very simple. For the user's query, have the MCP Client give the LLM the tool list and ask it not to call any tool itself, but to return the name of the tool that should be called.

Next, have the Client call the MCP Server itself. Since the data that comes back is most likely formatted JSON of the kind an ordinary API returns, you can package it together with the LLM's response and return both.
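A rough sketch of this flow, under several assumptions: `fake_llm_select` is a stub for the model, `call_mcp_server` is a stub for the Client's real MCP request, and the tool names, canned data, and the extra `arguments` and `message` fields are all invented for illustration (the description above only requires the tool name).

```python
import json
from typing import Any, Dict, List

# Hypothetical tool list the Client hands to the LLM.
TOOL_LIST = [
    {"name": "get_price", "description": "Latest price for a symbol"},
    {"name": "get_news", "description": "Recent headlines for a symbol"},
]


def fake_llm_select(query: str, tools: List[Dict[str, str]]) -> str:
    """Stub model: names the tool to call but never executes it."""
    name = "get_price" if "price" in query.lower() else "get_news"
    return json.dumps({
        "tool": name,
        "arguments": {"symbol": "BTC"},  # assumed extra field
        "message": "Fetching data for BTC.",  # assumed extra field
    })


def call_mcp_server(tool: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Stub for the Client-side MCP call; returns canned JSON-like data."""
    canned = {
        "get_price": {"symbol": arguments["symbol"], "price": 100.0},
        "get_news": {"symbol": arguments["symbol"], "headlines": []},
    }
    return canned[tool]


def handle_query(query: str) -> Dict[str, Any]:
    # The LLM only selects the tool.
    selection = json.loads(fake_llm_select(query, TOOL_LIST))
    # The Client makes the call, so the raw data stays in our hands.
    raw = call_mcp_server(selection["tool"], selection["arguments"])
    # Package the raw data together with the LLM's response.
    return {
        "llm_message": selection["message"],
        "tool": selection["tool"],
        "data": raw,
    }


response = handle_query("What is the price of BTC?")
print(json.dumps(response, indent=2))
```

The returned `data` field is untouched structured data, so existing processing and UI code can consume it directly instead of parsing a natural language answer.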

This method is not as widely applicable, because it takes multiple rounds of LLM dialogue, which is still much more troublesome than a single round. Also, your MCP Host had better not be a fully general-purpose chat client that manages everything; the method suits fixed-purpose services better.

The advantage is that you get the raw data, so you can process it however you like, and the data processing and UI display code can be reused directly from your existing service. The method also works with LLMs that lack automatic tool calling. The new service I lead at my company uses this approach, and the response has been good.

Are there any other methods? I would like to hear your experience:

@dotey @chaowxyz @hylarucoder @yihong0618 @pangyusio

Substack version: open.substack.com/pub/web3rove...…

Quaily:

quaily.com/cryptonerdcn/p/disc...…

@lyricwai @QuailyQuaily

Some responses to various misunderstandings:

CryptoNerdCn

@cryptonerdcn

07-16

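In fact, it is not only large models that need enough information in the context of their input; humans need it too. Yesterday, because I forgot to include in this post the first half of what I shared in my company lecture, several people challenged me. Some thought I was saying "LLMs have execution capability", and others said "I thought this was some industry breakthrough, but it turned out to be a trivial detail" 😂 x.com/cryptonerdcn/s…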

From Twitter
