Written by: Sreeram Kannan, EigenLayer; IOSG Ventures

IOSG Ventures’ 11th Friends event, the “Restaking Summit,” recently concluded in Denver. The event covered DA, Staking/Restaking, AVS, Bitcoin Rollups, Coprocessors, and other hot topics. It featured 4 talks, 5 panels, and a fireside chat, drew 1,862 registrations, and had more than 2,000 live participants in total. We will publish recaps of the individual talks and panel discussions one after another, so stay tuned.

The following is the keynote “Converting Cloud to Crypto,” delivered by EigenLayer founder and CEO Sreeram Kannan. Enjoy! 👇

MC: Please join me in warmly welcoming our distinguished guest, Sreeram Kannan, CEO & Founder of EigenLayer. He will present “Converting Cloud to Crypto.”

Sreeram Kannan:

Thank you very much to the IOSG Ventures team for organizing this summit. I am very happy to be able to speak here. Since we have already had a good introduction to EigenLayer, I'll talk about EigenDA and the topics we're exploring: why we are building EigenDA and how it brings the cloud to crypto.

When you think of Rollups, you probably think of two different goals. One is offloading traffic from Ethereum L1 to L2s. That is not what we're going to talk about today; our argument is about how to bring cloud-scale computing to crypto.

First, why do cloud applications need crypto?

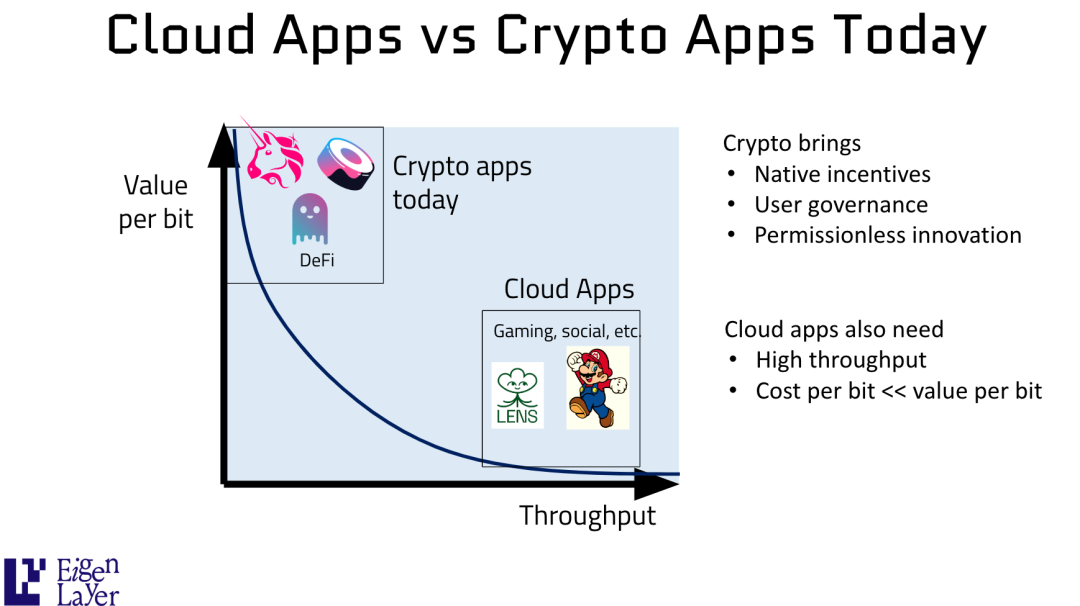

Think about two types of applications along two fundamentally different axes. "Value per bit" is the value carried by each bit of an application's transactions, and "throughput" is the rate at which the application communicates. Today's crypto applications run at high value per bit and low throughput, while cloud applications rely on exactly the opposite: very high throughput at low value per bit.

How much is a single tweet worth? Very little, but many tweets added together bring a lot of value to a social network. So why do cloud applications need crypto? Because crypto brings native incentives, user governance, and permissionless innovation. The last is one of our favorite topics: how do we ensure that anyone with a good idea can build on top of all existing applications? If you're building on Twitter's or Facebook's API, you're always worried about when that API will be shut down or absorbed into the core platform.

In crypto, by contrast, you have solid, immutable, and proven APIs that you can build on. But cloud applications also require very high throughput, and today's throughput cannot satisfy them. They also need a very low cost per bit. Throughput and cost per bit are the two dimensions that EigenDA really focuses on.

Before we discuss that, let's first examine: why use Rollups at all?

When you think about cloud and consumer applications, there are several dimensions along which they don't fit the application deployment model we have in crypto today.

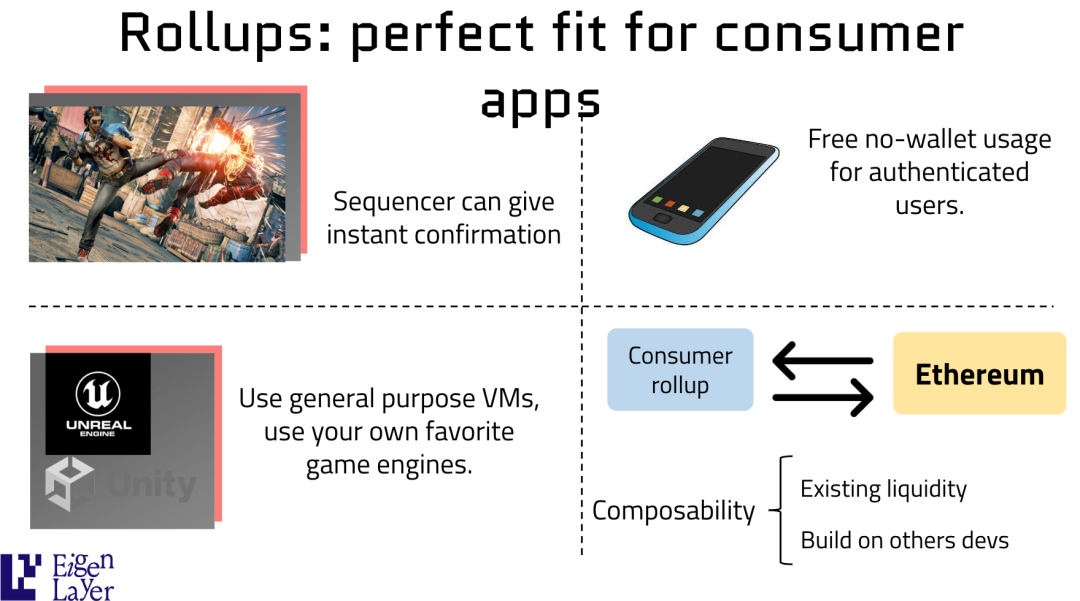

The first is user experience. You want an instant user experience and ultra-fast confirmation, and what delivers that better than a single centralized Sequencer?

This Sequencer is kept in check by several mechanisms: the correctness of its state transition function is checked through validity proofs or optimistic (fraud) proofs, and inclusion is guaranteed because transactions are posted back to Ethereum L1 or directly to a data availability layer. Instant confirmation completely changes the user experience.

The second is that when you bring in cloud-native applications, you can't ask everyone to write their programs for the EVM. We need new virtual machines, new programming languages, game engines, and AI inference engines, all natively integrated into our blockchain infrastructure. That's what Rollups really help us do.

Another thing that I think very few people understand is that with a single centralized Sequencer, you can do something you can't do on a decentralized blockchain: subjective Admission Control. What is subjective admission control? Say we want authenticated users to be able to use something for free. You can't do that on a blockchain, because the blockchain has to prevent spam, and the only way it can do so is by charging a fee. Price, however, is a very blunt mechanism: it can hardly differentiate between MEV bots and real users.
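To make subjective admission control concrete, here is a minimal Python sketch of a toy centralized sequencer. Everything in it is hypothetical and not from the talk: the `Sequencer` and `Tx` classes, the per-user free quota, and the flat `min_fee` are illustrative assumptions. Verified users (for example, those who logged in with an existing social ID) get a few free transactions; everyone else must pay a fee, which is the only spam filter a permissionless chain has.

```python
from dataclasses import dataclass, field


@dataclass
class Tx:
    sender: str
    fee_paid: int  # fee offered with the transaction, in hypothetical units


@dataclass
class Sequencer:
    """Toy centralized sequencer that applies subjective admission control."""

    verified_users: set[str]  # e.g. users who logged in with an existing social ID
    free_quota_per_user: int = 10  # hypothetical number of free transactions
    min_fee: int = 1  # fee required from everyone else (the usual spam filter)
    used_quota: dict[str, int] = field(default_factory=dict)

    def admit(self, tx: Tx) -> bool:
        # Verified users transact for free until their quota runs out.
        if tx.sender in self.verified_users:
            used = self.used_quota.get(tx.sender, 0)
            if used < self.free_quota_per_user:
                self.used_quota[tx.sender] = used + 1
                return True
        # Unknown senders (or verified users past their quota) must pay a fee.
        return tx.fee_paid >= self.min_fee


seq = Sequencer(verified_users={"alice"}, free_quota_per_user=2)
print([seq.admit(Tx("alice", 0)) for _ in range(3)])  # free, free, then rejected
print(seq.admit(Tx("mev-bot", 0)), seq.admit(Tx("mev-bot", 1)))
```

The decision rests on an off-chain identity that the sequencer chooses to trust, which is exactly the kind of subjective judgment a permissionless L1 cannot make.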

This is what we can do with Rollups. Why? Because a single centralized Sequencer can impose subjective Admission Control. For example, if you already have a Facebook or Twitter ID, you should be able to log in and use the app without paying anything, without even needing a wallet, which greatly simplifies onboarding. So Rollups have some superpowers that few people understand. Finally, we want to get all of this without losing the benefits we're used to.

Chief among those benefits is composability with other applications in the blockchain space. We want access to existing liquidity, and we want to build on the work of other developers. One of the things we're trying to do at EigenLayer is make sure people can focus on building truly resilient systems without duplicating work. From a "civilizational" perspective, the trend is for each of us to do more and more specialized things while consuming more general things, and that is exactly where Rollups and EigenLayer come into play.

Okay, Rollups are great, but they also have a lot of problems. Here are the issues we have observed.

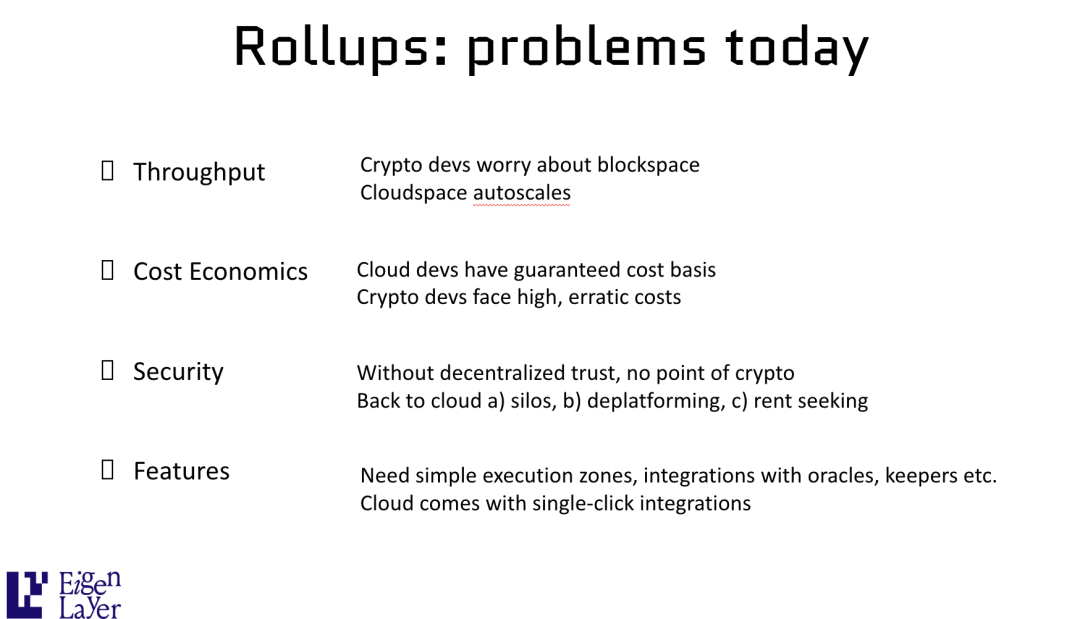

The first issue is throughput.

Talk to any crypto developer and they are probably worried about block space: will my demand for block space be met, or will someone else flood it? Say Yuga Labs launches its next Bored Ape drop: it suddenly drowns you in traffic, and you can't get in.

That is not how the cloud works. Cloud capacity expands automatically: if demand increases, the cloud provides more space. This is how crypto should work, but it is not the case today.

Then there are cost economics. Cloud developers are used to guaranteed performance and a predictable cost base. Crypto developers face high and unstable costs, and even when costs are low, you never know when the blockchain's block space will fill up; when it does, you face congestion pricing.

We also need security. There are ways to solve the first two problems, achieving high throughput and low cost, but giving up security to do so is not an option. Finally, we need new functionality: we need to be able to build new virtual machines, and we need deeper integration.

How do we solve all these problems with EigenDA? The high-level idea is that EigenDA is an order of magnitude larger than anything out there. EIP-4844, part of the upcoming Dencun upgrade, provides tens of kilobytes per second; EigenDA will offer 10 MB per second at launch, which I think already exceeds what today's applications need. But the next generation of developers will learn how to take advantage of this scale of throughput. EigenDA is an order-of-magnitude improvement over today's crypto, and we think that's still not enough: our goal is to convert the cloud to crypto, which means we need even more scale, so we are working on that.
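For a rough sense of scale, the sketch below computes raw blob throughput from EIP-4844 parameters. These parameters come from the EIP as activated at Dencun, not from the talk: 4096 field elements of 32 bytes per blob, a target of 3 and a maximum of 6 blobs per block, and 12-second slots. The 10 MB/s EigenDA figure is the one quoted above, read here as decimal megabytes (an assumption). The calculation uses raw blob size; usable payload per blob is somewhat lower depending on how data is encoded into field elements.

```python
FIELD_ELEMENTS_PER_BLOB = 4096
BYTES_PER_FIELD_ELEMENT = 32
BLOB_SIZE_BYTES = FIELD_ELEMENTS_PER_BLOB * BYTES_PER_FIELD_ELEMENT  # 131,072 bytes
SECONDS_PER_SLOT = 12
TARGET_BLOBS_PER_BLOCK = 3
MAX_BLOBS_PER_BLOCK = 6

EIGENDA_BYTES_PER_S = 10 * 1_000_000  # 10 MB/s at launch, decimal MB assumed


def blob_throughput(blobs_per_block: int) -> float:
    """Raw blob bytes per second if every slot carries `blobs_per_block` blobs."""
    return blobs_per_block * BLOB_SIZE_BYTES / SECONDS_PER_SLOT


if __name__ == "__main__":
    for label, blobs in (("target", TARGET_BLOBS_PER_BLOCK), ("max", MAX_BLOBS_PER_BLOCK)):
        bps = blob_throughput(blobs)
        ratio = EIGENDA_BYTES_PER_S / bps
        print(f"4844 {label}: {bps / 1024:.0f} KiB/s, EigenDA is ~{ratio:.0f}x larger")
```

With these numbers, 4844 carries about 32 KiB/s at target and 64 KiB/s at the maximum, consistent with the "tens of kilobytes per second" cited in the talk.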

One thing to note is that in crypto, decentralization is usually at odds with scaling: if you want more nodes to participate, you need to lower the node requirements, and so on. So decentralization and scaling are treated as antithetical. EigenDA scales horizontally, which means its decentralization is itself a source of scale: the more nodes you have, the more throughput the network can carry, because no single node needs to download all the data. This is the architecture of EigenDA.
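Horizontal scaling can be illustrated with a toy model. The talk does not describe EigenDA's encoding here, so the erasure-coding scheme, the 1/8 coding rate, and the even split across nodes below are assumptions for illustration only; the sketch also ignores stake weighting, commitments, and proofs. Each blob is expanded by 1/rate and divided among n nodes, so per-node load falls as n grows while aggregate throughput rises linearly.

```python
import math


def per_node_bytes(blob_bytes: int, num_nodes: int, coding_rate: float) -> int:
    """Bytes each node stores when a blob is erasure-coded and split evenly.

    coding_rate is data size / encoded size, e.g. 1/8 means 8x expansion.
    """
    encoded_bytes = blob_bytes / coding_rate
    return math.ceil(encoded_bytes / num_nodes)


def network_throughput(num_nodes: int, node_bandwidth: float, coding_rate: float) -> float:
    """Aggregate original-data throughput (bytes/s) if every node fills its link."""
    return num_nodes * node_bandwidth * coding_rate


for n in (100, 200, 400):
    print(n, per_node_bytes(1_000_000, n, 1 / 8), network_throughput(n, 1_000_000, 1 / 8))
```

Doubling the node count halves each node's share of a blob and doubles aggregate capacity, which is the sense in which decentralization and scale stop being at odds.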

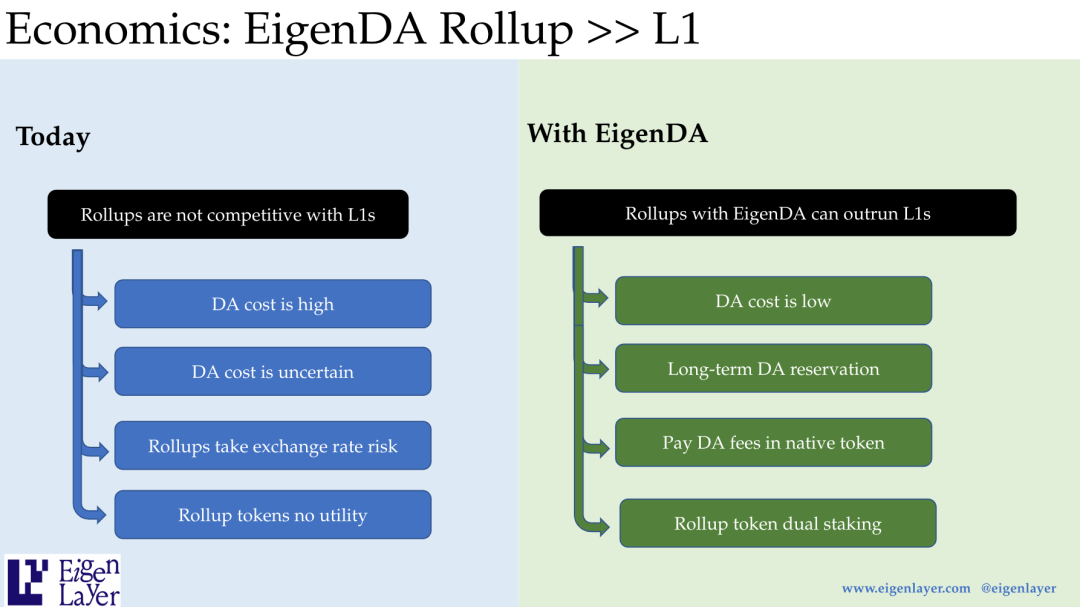

Okay, so what about economics: random price fluctuations and all that? If you compare a Rollup to a Layer 1, you'll find that Rollups have a hard time competing with Layer 1s on some dimensions.

The first is the cost of data availability: writing data to a common data repository is quite expensive. The second is that the cost of data availability is uncertain, even if it is low today. When 4844 launches, you might say, "It's cheaper over there, so let's go there," and build all your applications on it, and then someone comes up with some new Inscription that floods all the bandwidth.

My gut feeling is that even though 4844 brings more bandwidth, it won't significantly reduce gas costs because of this uncertainty, which is a big problem. If you are an L1, you don't have this problem, because you know exactly what your cost basis is and that no one else is interfering with you.

Finally, Rollups take on exchange rate risk: if a Rollup has a native token and pays its fees in ETH, it doesn't know how much those fees will cost in its own token, because the Rollup token's price may fluctuate against ETH. So there is swap risk.
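The swap risk is simple arithmetic. In this hypothetical example, a rollup owes a fixed data availability bill priced in ETH but earns revenue in its own token; if the token's ETH price halves, the number of tokens it must sell to cover the same bill doubles. The bill and the prices are made-up numbers chosen for illustration.

```python
def tokens_needed(da_cost_eth: float, token_price_eth: float) -> float:
    """Native tokens a rollup must sell to cover a DA bill denominated in ETH."""
    if token_price_eth <= 0:
        raise ValueError("token price must be positive")
    return da_cost_eth / token_price_eth


monthly_bill_eth = 10.0  # hypothetical fixed DA cost, priced in ETH
for price in (0.5, 0.25):  # hypothetical token price in ETH, before and after a 50% drop
    print(f"token at {price} ETH -> sell {tokens_needed(monthly_bill_eth, price):.0f} tokens")
```

The ETH-denominated cost never changed; only the exchange rate did, yet the rollup's real expense doubled.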

A Layer 1 does not face this risk, because it can simply pay out a portion of its own token inflation. How do we do the same in EigenDA? How do we make Rollups better than Layer 1s?

The first answer is the low cost of data availability: we built data availability as a very large-scale system, so the cost is very low. The second is that EigenDA supports long-term reservations. Just as you can go to AWS and reserve an instance that only you use and no one else touches, in EigenDA you can reserve a data availability channel for yourself.
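A reservation can be pictured as a ledger of dedicated bandwidth. This is a hypothetical sketch, not EigenDA's actual interface: the `BandwidthReservations` class, bytes-per-second units, and the absence of reservation periods and pricing are all simplifying assumptions. What it shows is the property described above: once a rollup holds a reservation, its ability to post data does not depend on anyone else's demand.

```python
class BandwidthReservations:
    """Toy ledger of dedicated DA bandwidth, analogous to reserved cloud instances."""

    def __init__(self, capacity_bps: int):
        self.capacity_bps = capacity_bps  # total bandwidth the network can sell
        self.reserved: dict[str, int] = {}  # rollup name -> reserved bytes/s

    def available(self) -> int:
        return self.capacity_bps - sum(self.reserved.values())

    def reserve(self, rollup: str, bps: int) -> bool:
        """Grant a dedicated channel if unreserved capacity remains."""
        if bps <= 0 or bps > self.available():
            return False
        self.reserved[rollup] = self.reserved.get(rollup, 0) + bps
        return True

    def can_post(self, rollup: str, bps: int) -> bool:
        """A rollup may always post up to its own reservation, whatever others do."""
        return bps <= self.reserved.get(rollup, 0)


ledger = BandwidthReservations(capacity_bps=10_000_000)
ledger.reserve("game-rollup", 2_000_000)
ledger.reserve("social-rollup", 7_000_000)
print(ledger.reserve("nft-rollup", 2_000_000), ledger.available())  # False 1000000
print(ledger.can_post("game-rollup", 2_000_000))  # True
```

A late arrival can be turned away, but it cannot eat into channels already reserved, which is what gives the reserving rollup a predictable cost basis.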

Next, when you make this kind of reservation, even though it is nominally priced in ETH, you can also pay with your own native token. This means you commit a fixed portion of your native token's inflation to running data availability. Finally, Rollup tokens can be used for Dual Staking in EigenDA.

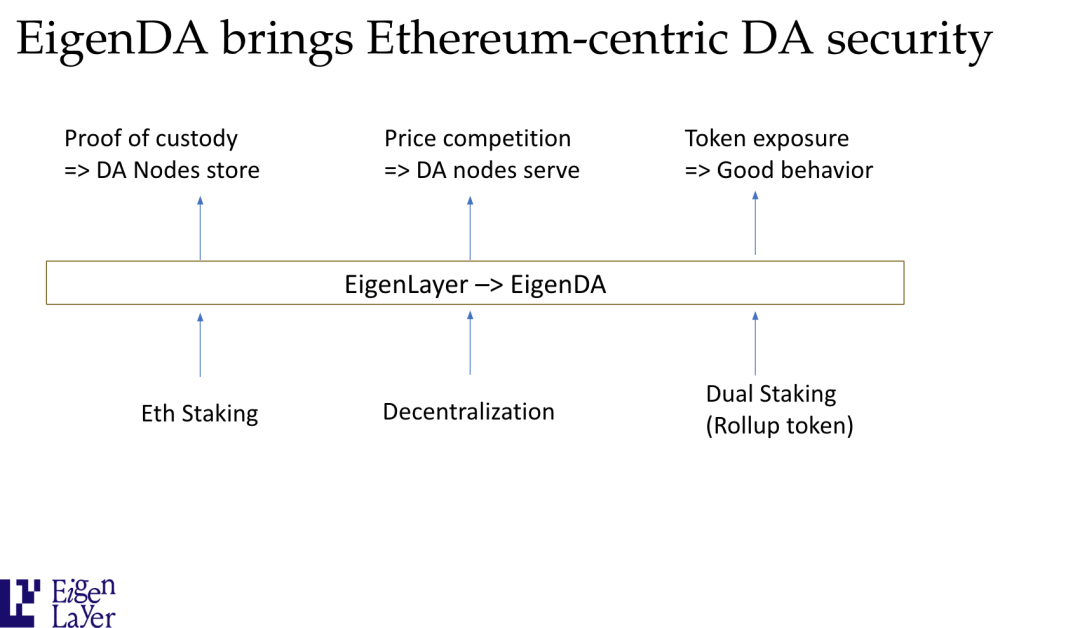

This means that the data availability system is secured not only by ETH Stakers but also by a committee holding your own tokens, and all of this work is outsourced to EigenLayer and EigenDA to manage. That is what EigenDA does for Rollups. Architecturally, EigenDA brings Ethereum-centric security: ETH Stakers participate in EigenLayer and can Restake to EigenDA. We also inherit decentralization from Ethereum's node Operators. Finally, we allow Rollup tokens to be used for Dual Staking.
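Dual staking can be read as requiring two independent quorums to sign off on the same data. The sketch below is an illustration under assumptions the talk does not state: stake-weighted signatures, a 2/3 threshold applied separately to an ETH-restaker quorum and a rollup-token quorum, and hypothetical operator names.

```python
def quorum_met(stakes: dict[str, float], signers: set[str], threshold: float) -> bool:
    """True if operators in `signers` hold at least `threshold` of this quorum's stake."""
    total = sum(stakes.values())
    signed = sum(stake for op, stake in stakes.items() if op in signers)
    return total > 0 and signed / total >= threshold


def dual_staking_accepts(
    eth_quorum: dict[str, float],
    rollup_quorum: dict[str, float],
    signers: set[str],
    threshold: float = 2 / 3,
) -> bool:
    """Accept a blob only if BOTH the ETH-restaker and rollup-token quorums attest."""
    return quorum_met(eth_quorum, signers, threshold) and quorum_met(rollup_quorum, signers, threshold)


eth = {"op-a": 40.0, "op-b": 30.0, "op-c": 30.0}  # hypothetical restaked ETH
tok = {"tok-x": 50.0, "tok-y": 50.0}  # hypothetical staked rollup tokens
print(dual_staking_accepts(eth, tok, {"op-a", "op-b", "tok-x", "tok-y"}))  # True
print(dual_staking_accepts(eth, tok, {"op-a", "op-b", "tok-x"}))  # False: token quorum at 50%
```

Under this model, an attacker would have to control enough stake in both quorums, so the rollup's own token holders add a second layer of security on top of restaked ETH.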

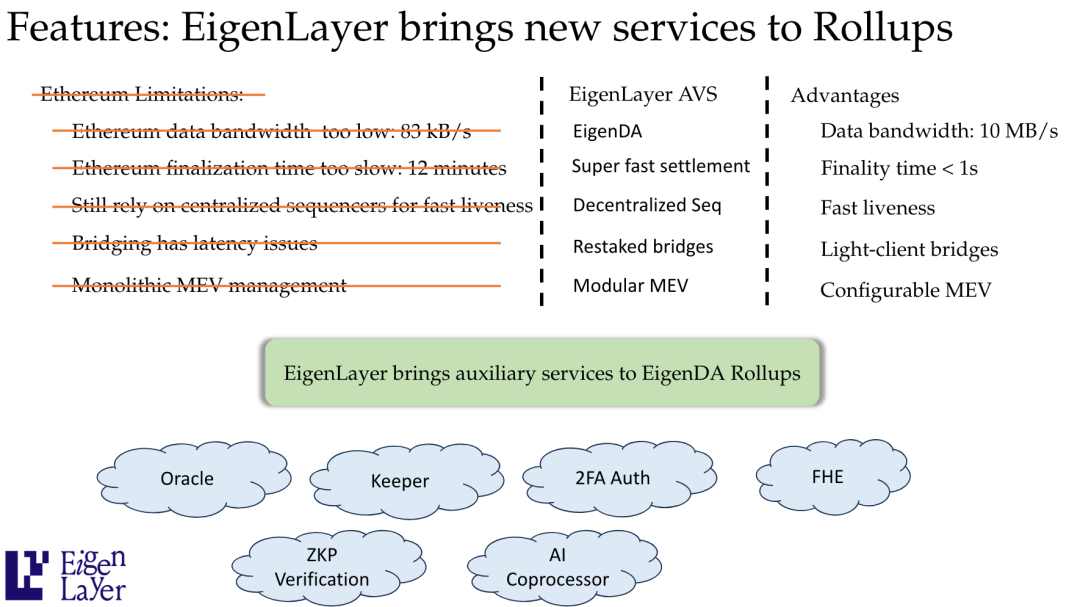

Together, these ensure that when you build on EigenDA, you get strong data availability security. Finally, there are limits beyond data bandwidth.

Take other limitations you see in Ethereum today. For example, time to Finality is slow: it takes about 12 minutes to finalize a block, while new blockchains are coming out and promising confirmation in a second or less, which is competitive.
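The 12-minute figure follows from Ethereum's consensus parameters, which are facts about the protocol rather than details from the talk: 12-second slots, 32 slots per epoch, and finality normally reached about two epochs later. The sketch compares that with a hypothetical one-second finality layer.

```python
SECONDS_PER_SLOT = 12
SLOTS_PER_EPOCH = 32
EPOCHS_TO_FINALITY = 2  # typical case under normal network conditions

ethereum_finality_s = EPOCHS_TO_FINALITY * SLOTS_PER_EPOCH * SECONDS_PER_SLOT
fast_layer_finality_s = 1.0  # hypothetical "a second or less" finality layer

print(f"Ethereum: {ethereum_finality_s} s (~{ethereum_finality_s / 60:.1f} min)")
print(f"Speedup of a 1 s finality layer: ~{ethereum_finality_s / fast_layer_finality_s:.0f}x")
```

Two epochs come to 768 seconds, about 12.8 minutes, which is the gap a fast finality service built on EigenLayer would aim to close.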

So what can we do? We can build new services on top of EigenLayer to solve these problems. You can have a super-fast Finality Layer like the one NEAR is building, or you can use a decentralized Sequencer such as Espresso. You can also use distributed sequencers instead of bridges, because bridges are a flimsy, low-trust model.

When someone wants to move data from one L2 to another, can we have a really solid bridge? If enough Stake backs the bridge, the other end can accept the receipt immediately and move the value, so a Restaked bridge gives you almost instant confirmation. Finally, if you're building a Rollup on EigenDA, you can hook into more powerful MEV management tools.
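The restaked bridge idea can be sketched as a collateral check on the destination side. In this hypothetical model (not a design described in the talk), the destination credits a transfer immediately only if the slashable stake backing the bridge covers all value credited but not yet final on the source chain, multiplied by a safety factor; otherwise the transfer waits for normal finality. `RestakedBridge`, `safety_factor`, and the settlement step are assumptions.

```python
class RestakedBridge:
    """Toy destination-side bridge that credits transfers instantly when enough
    slashable restaked stake backs them (hypothetical model)."""

    def __init__(self, backing_stake: float, safety_factor: float = 1.0):
        self.backing_stake = backing_stake  # slashable stake attesting to receipts
        self.safety_factor = safety_factor  # require stake >= factor * value at risk
        self.unsettled = 0.0  # value credited but not yet final on the source chain

    def accept(self, value: float) -> bool:
        """Credit `value` immediately if the stake still covers everything at risk."""
        at_risk = self.unsettled + value
        if self.backing_stake >= self.safety_factor * at_risk:
            self.unsettled = at_risk
            return True
        return False  # caller falls back to waiting for source-chain finality

    def settle(self, value: float) -> None:
        """Source chain finalized `value`; it no longer needs stake backing."""
        self.unsettled = max(0.0, self.unsettled - value)


bridge = RestakedBridge(backing_stake=100.0, safety_factor=2.0)
print(bridge.accept(30.0), bridge.accept(30.0))  # True False
bridge.settle(30.0)
print(bridge.accept(30.0))  # True
```

Because a misbehaving attester would lose more stake than the value it could steal, the destination can treat the receipt as final before the source chain does.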

So EigenDA brings many auxiliary services to Rollups built on it.

I've mentioned some of Ethereum's limitations, but there are also additive services: new Oracles, new Watchers that perform event-driven operations, fully homomorphic encryption, ZK proof verification, AI coprocessors, and many more new categories that Rollups will be able to adopt easily over time through the EigenLayer ecosystem.

Many thanks to IOSG Ventures for organizing this summit for us.