The original text comes from Vitalik's "Ethereum has blobs. Where do we go from here?", compiled by Odaily jk.

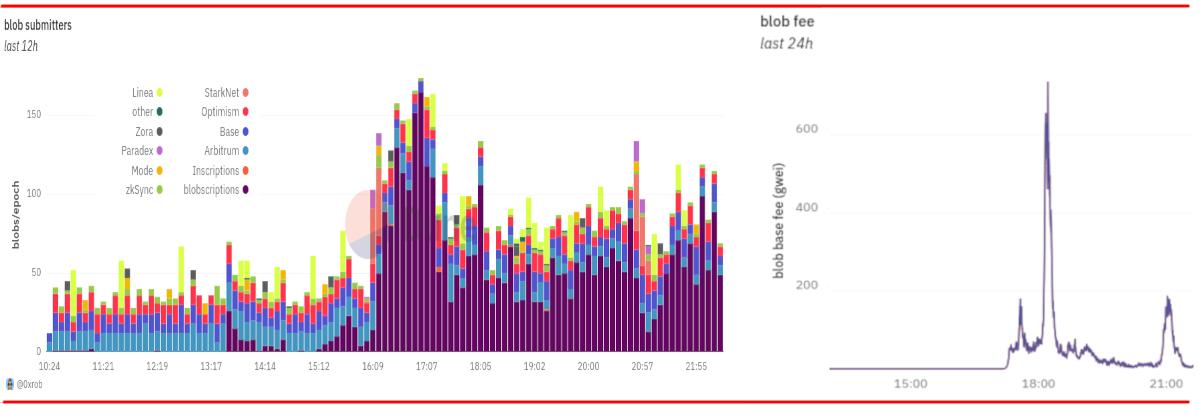

On March 13, the Dencun hard fork activated, enabling one of Ethereum's long-awaited features: proto-danksharding (aka EIP-4844, aka blobs). Initially, the fork reduced rollup transaction fees by more than 100x, as blobs were nearly free. In the last day, we have finally seen blob usage spike and the fee market activate as the blobscriptions protocol started using them. Blobs are no longer free, but they remain much cheaper than calldata.

Left: blob usage finally ramping up to the target of 3 per block, thanks to Blobscriptions. Right: blob fees "entering price discovery mode" as a result. Source: https://dune.com/0xRob/blobs
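Why blobs were "virtually free" until usage hit the target, and why fees then entered "price discovery", follows from how EIP-4844 prices blob gas. The sketch below transcribes the blob base fee rule from the EIP-4844 specification as activated in Dencun (constants may change in later forks): while blocks stay at or below the 3-blob target, the running "excess blob gas" stays at zero and the fee sits at the 1 wei floor; once blocks consistently exceed the target, excess accumulates and the fee grows exponentially. The simulation loop at the bottom is purely illustrative.

```python
# Constants transcribed from the EIP-4844 spec as activated in Dencun; later forks may change them.
GAS_PER_BLOB = 2**17                          # 131072 units of blob gas per blob
TARGET_BLOB_GAS_PER_BLOCK = 3 * GAS_PER_BLOB  # target: 3 blobs per block
MAX_BLOB_GAS_PER_BLOCK = 6 * GAS_PER_BLOB     # cap: 6 blobs per block
MIN_BASE_FEE_PER_BLOB_GAS = 1                 # 1 wei floor
BLOB_BASE_FEE_UPDATE_FRACTION = 3338477


def fake_exponential(factor: int, numerator: int, denominator: int) -> int:
    """Integer approximation of factor * e**(numerator / denominator) using a Taylor series."""
    i = 1
    output = 0
    numerator_accum = factor * denominator
    while numerator_accum > 0:
        output += numerator_accum
        numerator_accum = (numerator_accum * numerator) // (denominator * i)
        i += 1
    return output // denominator


def next_excess_blob_gas(parent_excess: int, parent_blob_gas_used: int) -> int:
    """Excess grows when a block uses more than the target and drains (never below 0) when it uses less."""
    if parent_excess + parent_blob_gas_used < TARGET_BLOB_GAS_PER_BLOCK:
        return 0
    return parent_excess + parent_blob_gas_used - TARGET_BLOB_GAS_PER_BLOCK


def blob_base_fee(excess_blob_gas: int) -> int:
    """Blob base fee in wei per unit of blob gas."""
    return fake_exponential(MIN_BASE_FEE_PER_BLOB_GAS, excess_blob_gas, BLOB_BASE_FEE_UPDATE_FRACTION)


if __name__ == "__main__":
    excess = 0
    # Illustrative: 100 consecutive full (6-blob) blocks, each adding 3 blobs' worth of excess.
    for _ in range(100):
        excess = next_excess_blob_gas(excess, MAX_BLOB_GAS_PER_BLOCK)
    print(excess, blob_base_fee(excess))
```

Each full block multiplies the fee by roughly e^(393216/3338477), i.e. about 12.5%, so sustained demand above the target is what pushes blobs out of the "nearly free" regime.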

This milestone represents a key shift in Ethereum's long-term roadmap: with blobs, Ethereum scaling is no longer a "zero-to-one" problem but a "one-to-N" problem. From here, important scaling work, whether increasing the number of blobs or improving rollups' ability to make the most of each blob, will continue, but it will be more incremental. Scaling changes that fundamentally alter how Ethereum operates as an ecosystem are increasingly behind us. In addition, focus is already slowly shifting, and will continue to shift, away from L1 problems such as PoS and scaling toward problems closer to the application layer. The key question this post will cover is: where does Ethereum go from here?

The future of Ethereum scaling

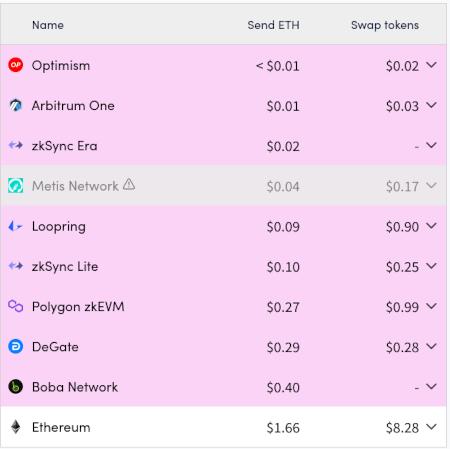

Over the past few years, we have watched Ethereum gradually transform into an L2-centric ecosystem. Major applications have started moving from L1 to L2, payments are becoming L2-based by default, and wallets are building their user experience around the new multi-L2 environment.

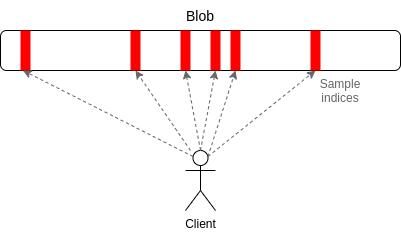

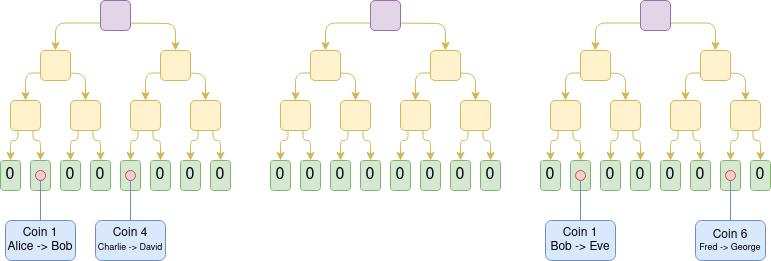

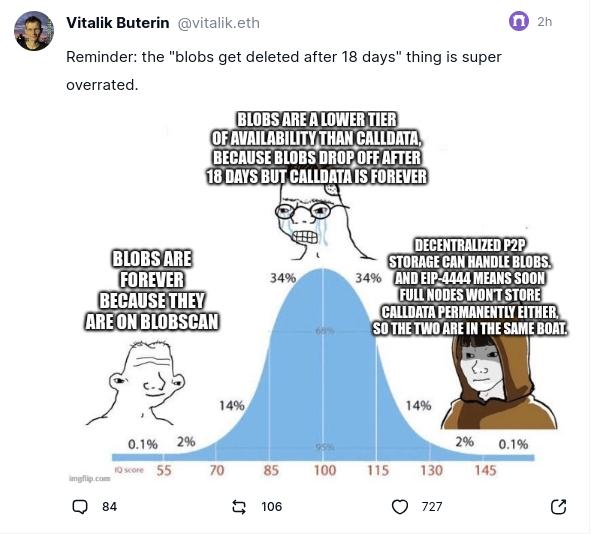

A key part of the rollup-centric roadmap from the start has been the idea of separate data availability space: a special section of space in a block that the EVM cannot access, which can hold data for layer-2 projects such as rollups. Because this data space is not EVM-accessible, it can be broadcast separately from a block and verified separately from a block. Eventually, it can be verified with a technique called data availability sampling, which lets each node verify that the data was correctly published by randomly checking only a few small samples. Once this is implemented, blob space can be greatly expanded; the eventual goal is 16 MB per slot (~1.33 MB per second).

Data availability sampling: each node only needs to download a small portion of the data to verify the availability of the data as a whole.
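The reason a few random samples suffice can be sketched in a simplified one-dimensional model, assuming the data is extended 2x with an erasure code so that any 50% of the extended chunks is enough to rebuild it. An attacker who wants the data to be unrecoverable must then withhold more than half of the chunks, so each uniformly random sample succeeds with probability below 1/2, and k independent samples all succeed with probability below 2^-k. The chunk count and sample counts here are hypothetical; Ethereum's actual designs (PeerDAS, 2D DAS) organize samples differently.

```python
import random


def sample_confidence(num_samples: int, available_fraction: float = 0.5) -> float:
    """Upper bound on the chance that all samples hit available chunks when at most
    `available_fraction` of the extended chunks are available (i.e. data is unrecoverable)."""
    return available_fraction ** num_samples


def node_accepts(chunks_available: list[bool], num_samples: int, rng: random.Random) -> bool:
    """A sampling node picks random chunk indices and accepts only if every one is served."""
    return all(chunks_available[rng.randrange(len(chunks_available))] for _ in range(num_samples))


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical attack: withhold just over half of 512 extended chunks, so the data cannot be rebuilt.
    chunks = [i >= 257 for i in range(512)]  # only 255 chunks available
    fooled = sum(node_accepts(chunks, 20, rng) for _ in range(10_000))
    print(f"{fooled} of 10000 nodes fooled; per-node bound: {sample_confidence(20):.2e}")
```

With 30 samples the per-node bound is already below one in a billion, which is why each node can verify availability while downloading only a tiny fraction of the data.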

EIP-4844 (i.e., "blobs") does not give us data availability sampling. But it does set up the basic scaffolding in such a way that, from here on, data availability sampling can be introduced and the number of blobs increased behind the scenes, all without any involvement from users or applications. In fact, the only "hard fork" required is a simple parameter change.

From here, the two directions in which development will need to continue are:

Progressively increasing blob capacity, eventually reaching the full vision of data availability sampling with 16 MB of data space per slot;

Improving L2s to make better use of the data space we have.

Bringing DAS to life

The next stage is likely to be a simplified version of DAS called PeerDAS. In PeerDAS, each node stores a significant fraction (e.g., 1/8) of all blob data, and nodes maintain connections to many peers in the p2p network. When a node needs to sample a particular piece of data, it asks one of the peers known to be responsible for storing that piece.
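The "ask a peer known to be responsible" step can be sketched as follows, under loudly hypothetical assumptions: the extended data is split into 128 columns, each node deterministically derives the 1/8 of columns it custodies by hashing its node ID, and a sampling node looks up a connected peer whose custody set contains the column it wants. The assignment rule, `NUM_COLUMNS`, and the helper names are illustrative only and are not taken from the PeerDAS specification.

```python
import hashlib
import random
from typing import Optional

NUM_COLUMNS = 128                    # hypothetical number of columns in the extended data
CUSTODY_COLUMNS = NUM_COLUMNS // 8   # each node stores 1/8 of the data, as in the text


def custody_set(node_id: bytes) -> set[int]:
    """Hypothetical deterministic assignment: hash node ID + counter until enough distinct columns."""
    columns: set[int] = set()
    counter = 0
    while len(columns) < CUSTODY_COLUMNS:
        digest = hashlib.sha256(node_id + counter.to_bytes(8, "big")).digest()
        columns.add(int.from_bytes(digest[:8], "big") % NUM_COLUMNS)
        counter += 1
    return columns


def find_peer_for(column: int, peers: dict[bytes, set[int]]) -> Optional[bytes]:
    """Return some connected peer known to custody `column`, or None if none does."""
    for peer_id, columns in peers.items():
        if column in columns:
            return peer_id
    return None


if __name__ == "__main__":
    # Anyone can recompute a peer's custody set from its ID, so no extra lookup protocol is needed here.
    peers = {f"peer-{i}".encode(): custody_set(f"peer-{i}".encode()) for i in range(64)}
    rng = random.Random(1)
    for column in rng.sample(range(NUM_COLUMNS), 4):
        print(column, find_peer_for(column, peers))
```

Deriving custody from the node ID is one simple way to make "known to be responsible" checkable by everyone; maintaining many peer connections is what makes it likely that every column has at least one responsible peer.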

If each node needs to download and store 1/8 of all the data, PeerDAS theoretically lets us scale blobs by 8x (actually 4x, because we lose 2x to the redundancy of erasure coding). PeerDAS can be rolled out over time: we could have a phase where professional stakers continue to download full blobs while solo stakers only download 1/8 of the data.
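The 8x-versus-4x arithmetic can be checked directly. This sketch assumes the EIP-4844 blob size (4096 field elements of 32 bytes) and the Dencun-era target of 3 blobs per block; the 1/8 custody fraction and 2x erasure coding come from the text. It shows that quadrupling the blob count keeps each node's per-slot download the same as downloading today's full blobs, and that the 16 MB-per-slot goal works out to about 1.33 MB/s with 12-second slots.

```python
# Illustrative assumptions: EIP-4844 blob size and Dencun-era target; 1/8 custody and 2x coding from the text.
BLOB_SIZE_BYTES = 4096 * 32   # 4096 field elements x 32 bytes = 131072 bytes (128 KiB)
TARGET_BLOBS_TODAY = 3
SLOT_SECONDS = 12


def peerdas_scale_factor(custody_fraction: float, erasure_expansion: int = 2) -> float:
    """Factor by which blob capacity can grow while each node downloads no more than today."""
    return (1 / custody_fraction) / erasure_expansion


def per_node_bytes_per_slot(num_blobs: int, custody_fraction: float, erasure_expansion: int = 2) -> float:
    """Bytes a node downloads per slot when it custodies `custody_fraction` of the erasure-extended data."""
    return num_blobs * BLOB_SIZE_BYTES * erasure_expansion * custody_fraction


if __name__ == "__main__":
    factor = peerdas_scale_factor(1 / 8)  # 8x from partial custody, halved by 2x erasure coding
    scaled_blobs = int(TARGET_BLOBS_TODAY * factor)
    print(factor, scaled_blobs)
    print(per_node_bytes_per_slot(scaled_blobs, 1 / 8), TARGET_BLOBS_TODAY * BLOB_SIZE_BYTES)
    print(f"full DAS goal: {16 / SLOT_SECONDS:.2f} MB/s")  # 16 MB per 12-second slot
```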

In addition, EIP-7623 (or alternatives such as 2D pricing) could be used to set tighter bounds on the maximum size of an execution block (i.e., the "regular transactions" in a block), which would make it safer to increase both the blob target and the L1 gas limit. In the longer term, more advanced 2D DAS protocols will let us go all the way and increase blob space further.
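How a calldata floor tightens the worst-case execution block size can be sketched as below. The parameters are assumptions: a 30M gas limit (mainnet's limit around the time of writing) and the calldata pricing of EIP-7623 as later finalized for the Pectra fork (a nonzero byte counts as 4 "tokens", normal cost 4 gas per token, floor 10 gas per token). The draft under discussion when this post was written may have used different numbers. The key property is that transactions doing substantial execution pay exactly what they did before, while data-heavy transactions pay the floor.

```python
# Assumed parameters (see lead-in): 30M gas limit, EIP-7623 pricing as finalized for Pectra.
GAS_LIMIT = 30_000_000
TX_BASE_GAS = 21_000
STANDARD_TOKEN_COST = 4          # 4 gas per zero byte, 16 per nonzero byte (pre-EIP-7623 pricing)
TOTAL_COST_FLOOR_PER_TOKEN = 10  # floor: 10 gas per zero byte, 40 per nonzero byte


def calldata_tokens(data: bytes) -> int:
    """Zero bytes count as 1 token, nonzero bytes as 4."""
    zero = data.count(0)
    return zero + 4 * (len(data) - zero)


def gas_before(data: bytes, execution_gas: int) -> int:
    return TX_BASE_GAS + STANDARD_TOKEN_COST * calldata_tokens(data) + execution_gas


def gas_after(data: bytes, execution_gas: int) -> int:
    """Pay the larger of the normal cost and the calldata floor."""
    tokens = calldata_tokens(data)
    return TX_BASE_GAS + max(STANDARD_TOKEN_COST * tokens + execution_gas,
                             TOTAL_COST_FLOOR_PER_TOKEN * tokens)


def max_calldata_bytes(gas_per_nonzero_byte: int) -> int:
    """Largest all-nonzero calldata payload a single transaction can fit into one block."""
    return (GAS_LIMIT - TX_BASE_GAS) // gas_per_nonzero_byte


if __name__ == "__main__":
    print(max_calldata_bytes(16), max_calldata_bytes(40))  # worst case shrinks by 2.5x
```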

Improve L2 performance

Today, Layer 2 (L2) protocols can be improved in four key ways.

1. Use bytes more efficiently through data compression

My overview diagram of data compression techniques can still be viewed here.

Naively, a transaction takes up about 180 bytes of data. However, there is a series of compression techniques that can bring this size down over several stages; with optimal compression, we could potentially get all the way down to under 25 bytes per transaction.
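Two of the simpler ideas behind such compression can be illustrated with a toy token transfer: replacing 20-byte addresses with 4-byte indices into a registry, and writing amounts in a compact "scientific notation" (3-byte mantissa plus 1-byte decimal exponent) instead of a full 32-byte integer. Everything here is hypothetical and is not any rollup's actual wire format; signatures are assumed to be handled separately (e.g., aggregated), and the 180-byte and 25-byte figures above are the author's, not outputs of this code.

```python
from dataclasses import dataclass


@dataclass
class Transfer:
    sender: bytes     # 20-byte address
    recipient: bytes  # 20-byte address
    amount: int       # amount in the token's smallest unit


class AddressRegistry:
    """Hypothetical registry that assigns each address a 4-byte index the first time it is seen."""

    def __init__(self) -> None:
        self.index_of: dict[bytes, int] = {}
        self.address_of: list[bytes] = []

    def index(self, addr: bytes) -> int:
        if addr not in self.index_of:
            self.index_of[addr] = len(self.address_of)
            self.address_of.append(addr)
        return self.index_of[addr]


def encode_amount(amount: int) -> bytes:
    """Lossless 3-byte mantissa + 1-byte decimal exponent; round amounts compress well."""
    exponent = 0
    while amount and amount % 10 == 0:
        amount //= 10
        exponent += 1
    if amount >= 2**24:
        raise ValueError("mantissa does not fit in 3 bytes; a real format would need a fallback")
    return amount.to_bytes(3, "big") + bytes([exponent])


def decode_amount(blob: bytes) -> int:
    return int.from_bytes(blob[:3], "big") * 10 ** blob[3]


def compress(tx: Transfer, registry: AddressRegistry) -> bytes:
    """12 bytes total: sender index, recipient index, compact amount."""
    return (registry.index(tx.sender).to_bytes(4, "big")
            + registry.index(tx.recipient).to_bytes(4, "big")
            + encode_amount(tx.amount))


def decompress(blob: bytes, registry: AddressRegistry) -> Transfer:
    return Transfer(registry.address_of[int.from_bytes(blob[0:4], "big")],
                    registry.address_of[int.from_bytes(blob[4:8], "big")],
                    decode_amount(blob[8:12]))


if __name__ == "__main__":
    reg = AddressRegistry()
    tx = Transfer(b"\x11" * 20, b"\x22" * 20, 1_500_000_000_000_000_000)  # 1.5 tokens at 18 decimals
    packed = compress(tx, reg)
    print(len(packed), "bytes vs", 20 + 20 + 32, "for raw addresses + 32-byte amount")
```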

2. Use optimistic data techniques that secure L2s by relying on L1 only in exceptional situations

Plasma is a class of techniques that gives some applications rollup-equivalent security while keeping data on L2 in the normal case. For EVMs, Plasma cannot protect all coins. But Plasma-inspired constructions can protect most coins, and constructions much simpler than Plasma could greatly improve on today's validiums. L2s that are unwilling to put all of their data on-chain should explore such techniques.
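The building block that lets Plasma-style designs keep data off-chain yet still let users exit is a small on-chain commitment (a Merkle root) plus per-user inclusion proofs. The sketch below shows only that commitment step: an operator would post the root to L1, and a user proves ownership of a coin with a Merkle branch. It uses SHA-256 for brevity (Ethereum contracts would typically use Keccak-256) and a simple duplicate-the-last-node padding rule; the leaf format is hypothetical, and a real Plasma also needs exit games, challenge periods, and data-withholding handling that are not shown.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root_and_proof(leaves: list[bytes], index: int) -> tuple[bytes, list[bytes]]:
    """Return (root, proof) for leaves[index]; odd levels are padded by duplicating the last node."""
    level = [h(leaf) for leaf in leaves]
    proof: list[bytes] = []
    i = index
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        proof.append(level[i ^ 1])  # sibling at this level
        level = [h(level[j] + level[j + 1]) for j in range(0, len(level), 2)]
        i //= 2
    return level[0], proof


def verify(root: bytes, leaf: bytes, index: int, proof: list[bytes]) -> bool:
    """Recompute the path from the leaf to the root using the sibling hashes in `proof`."""
    node = h(leaf)
    for sibling in proof:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root


if __name__ == "__main__":
    # Hypothetical leaves: "owner:coin" records kept off-chain by the operator.
    leaves = [b"alice:coin-1", b"bob:coin-2", b"carol:coin-3"]
    root, proof = merkle_root_and_proof(leaves, 1)  # only `root` would be posted on L1
    print(verify(root, b"bob:coin-2", 1, proof))
```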

3. Continue improving execution-related constraints

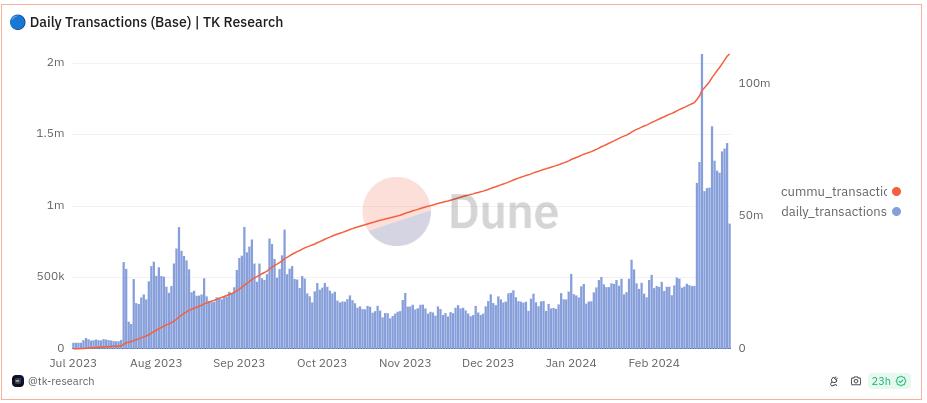

Once the Dencun hard fork activated, the cost for rollups set up to use the blobs it introduced dropped by 100x. Usage on the Base rollup spiked immediately:

This in turn caused Base to hit its own internal gas limit, leading to an unexpected spike in fees. This has led to a broader realization that Ethereum's data space is not the only thing that needs to scale: rollups need to scale internally as well.

Part of this is parallelization; rollups could implement something like EIP-648. But equally important are storage and the interplay between compute and storage. This is an important engineering challenge for rollups.
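EIP-648 proposed that transactions declare up front which parts of the state they may access, so that transactions with non-overlapping access can run in parallel. The sketch below illustrates that idea with a hypothetical scheduler over explicit read/write sets; it is not EIP-648's actual format. Each transaction goes into the earliest batch that comes after every earlier transaction it conflicts with, so transactions within a batch can execute in parallel while the result stays equivalent to executing them in their original order.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    name: str
    reads: frozenset   # hypothetical declared read set (state keys)
    writes: frozenset  # hypothetical declared write set (state keys)


def conflicts(a: Tx, b: Tx) -> bool:
    """Two txs conflict if either writes something the other reads or writes."""
    return bool(a.writes & (b.reads | b.writes) or b.writes & a.reads)


def schedule(txs: list[Tx]) -> list[list[Tx]]:
    """Greedy batching: no two txs in a batch conflict, and conflicting txs keep their original order."""
    batches: list[list[Tx]] = []
    batch_of: list[int] = []
    for i, tx in enumerate(txs):
        earliest = 0
        for j in range(i):
            if conflicts(tx, txs[j]):
                earliest = max(earliest, batch_of[j] + 1)
        if earliest == len(batches):
            batches.append([])
        batches[earliest].append(tx)
        batch_of.append(earliest)
    return batches


if __name__ == "__main__":
    a = Tx("A", frozenset(), frozenset({"x"}))
    b = Tx("B", frozenset(), frozenset({"y"}))
    c = Tx("C", frozenset({"x"}), frozenset({"z"}))  # reads x, so it must run after A
    print([[t.name for t in batch] for batch in schedule([a, b, c])])
```

Declaring access sets is what makes this scheduling possible without first executing anything, which is also why storage layout, and not just compute, matters for how much parallelism a rollup can extract.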

4. Continue to improve security

We are still far from a world where rollups are truly protected by code. In fact, according to L2BEAT, only five rollups have even reached what I have called "stage one", and of those, only Arbitrum is a full EVM.

This needs to be tackled head-on. While we cannot yet be confident enough in the complex code of an optimistic or SNARK-based EVM verifier, we are absolutely able to go halfway there: have security councils that can override the behavior of the code only with a high threshold (e.g., I proposed 6-of-8; Arbitrum uses 9-of-12).
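A high-threshold security council reduces to a k-of-n approval check. The sketch below uses hypothetical member names and ignores how approvals are actually signed and verified on-chain; it shows only the counting rule (approvals from non-members do not count) and that both thresholds mentioned above, 6-of-8 and 9-of-12, correspond to the same 75% fraction.

```python
from fractions import Fraction


def council_can_act(approvals: set[str], members: set[str], threshold: int) -> bool:
    """True only if at least `threshold` distinct council members approved; non-members are ignored."""
    return len(approvals & members) >= threshold


def threshold_fraction(threshold: int, size: int) -> Fraction:
    return Fraction(threshold, size)


if __name__ == "__main__":
    members = {f"member-{i}" for i in range(8)}  # hypothetical 8-member council
    print(council_can_act({f"member-{i}" for i in range(5)} | {"outsider"}, members, 6))  # 5 valid approvals
    print(threshold_fraction(6, 8), threshold_fraction(9, 12))
```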

The ecosystem’s standards need to become stricter: so far, we have been tolerant and accepting of any project that claims to be “on the path to decentralization.” By the end of the year, I think our standards should be raised and we should only consider as rollups those projects that have reached at least stage one.

After this, we can cautiously move toward stage two: a world where rollups are truly backed by code, and a security council can only intervene if the code "provably disagrees with itself" (e.g., it accepts two incompatible state roots, or two different implementations give different answers). One path toward doing this safely is to use multiple prover implementations.
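The stage-two rule, where the council may only step in when the code provably disagrees with itself, can be sketched with multiple independent prover implementations: if every prover arrives at the same post-state root, the result is final with no council involvement; any disagreement is exactly the situation in which escalation is allowed. The `Prover` signature and names are hypothetical, and real multi-prover designs may use other policies (for example, a majority rule with the council as tiebreaker).

```python
from enum import Enum
from typing import Callable, Sequence


class Outcome(Enum):
    FINALIZE = "finalize"
    ESCALATE_TO_COUNCIL = "escalate"


# Hypothetical prover interface: (pre_state_root, batch) -> post_state_root.
Prover = Callable[[bytes, bytes], bytes]


def settle(pre_root: bytes, batch: bytes, provers: Sequence[Prover]) -> tuple[Outcome, set[bytes]]:
    """Finalize only if all independent implementations agree; otherwise the council may intervene."""
    if not provers:
        raise ValueError("need at least one prover")
    roots = {prove(pre_root, batch) for prove in provers}
    if len(roots) == 1:
        return Outcome.FINALIZE, roots
    return Outcome.ESCALATE_TO_COUNCIL, roots


if __name__ == "__main__":
    honest = lambda pre, batch: b"root-1"
    buggy = lambda pre, batch: b"root-2"  # e.g. an implementation with a bug
    print(settle(b"pre", b"batch", [honest, honest])[0])
    print(settle(b"pre", b"batch", [honest, buggy])[0])
```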

What does this mean for the development of Ethereum?

At EthCC in the summer of 2022, I gave a talk describing the current state of Ethereum development as an S-curve: we were entering a period of very rapid transition, after which development would slow down again as the L1 solidifies and development refocuses on the user and application layers.

Today, I would say we are clearly in the decelerating, right-hand part of this S-curve. As of two weeks ago, the two largest changes to the Ethereum blockchain (the switch to proof of stake and the re-architecting to blobs) are behind us. Further changes are still significant (e.g., Verkle trees, single-slot finality, in-protocol account abstraction), but they are less drastic than proof of stake and sharding. In 2022, Ethereum was like a plane replacing its engines mid-flight; in 2023, it replaced its wings. The Verkle tree transition is the main remaining truly significant change (and we already have a testnet for it); the others are more like replacing a tail fin.

The goal of EIP-4844 was to make a single large one-time change in order to give rollups long-term stability. Now that blobs are out, a future upgrade to full danksharding with 16 MB of blob space per slot, and even switching the cryptography over to STARKs over a 64-bit Goldilocks field, can happen without requiring any further action from rollups or users. It also reinforces an important precedent: Ethereum's development process follows a long-standing, well-understood roadmap, and applications built with "the new Ethereum" in mind, including L2s, get an environment that is stable for the long term.

What does this mean for applications and users?

Ethereum's first ten years have largely been a training stage: the goal has been to get the Ethereum L1 off the ground, and applications have mostly been used by a small cohort of enthusiasts. Many have argued that the lack of large-scale applications over the past ten years proves that crypto is useless. I have always argued against this: nearly every crypto application that is not financial speculation depends on low fees, so while fees are high, we should not be surprised that we mainly see financial speculation.

Now that we have blobs, this key constraint that has been holding us back is starting to melt away. Fees are finally much lower; my statement from seven years ago that the internet of money should not cost more than five cents per transaction is finally coming true. We are not entirely out of the woods: fees may still rise if usage grows too quickly, and we need to keep working on scaling blobs (and, separately, rollups) over the next few years. But we are seeing the light at the end of the... er... dark forest.

For developers, this means one simple thing: we no longer have any excuses. Until a few years ago, we set a low bar for ourselves, building applications that were clearly unusable at scale, as long as they worked as prototypes and were reasonably decentralized. Today, we have all the tools we need, and indeed most of the tools we will ever have, to build applications that are simultaneously cypherpunk and user-friendly. So we should go out and do it.

Many people are rising to this challenge. The Daimo wallet explicitly describes itself as Venmo on Ethereum, aiming to combine Venmo's convenience with Ethereum's decentralization. In decentralized social, Farcaster does a great job of combining genuine decentralization (e.g., see this guide on how to build your own alternative client) with an excellent user experience. Unlike previous "social finance" crazes, the average Farcaster user is not there to gamble, which passes a key test for a truly sustainable crypto application.

This post was sent via the main Farcaster client Warpcast, and this screenshot is from the alternative Farcaster + Lens client Firefly.

These successes are what we need to build on and extend to other application areas, including identity, reputation and governance.

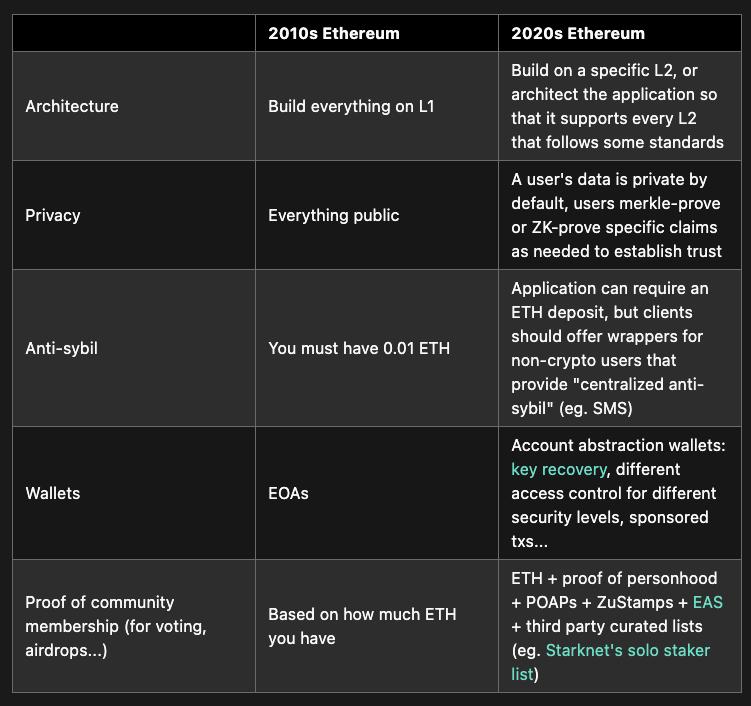

Applications built or maintained today should have the Ethereum of the 2020s as a blueprint

The Ethereum ecosystem still has many applications that operate around a fundamentally "2010s Ethereum" workflow. Most ENS activity is still on layer 1 (L1). Most token issuance also happens on L1, without serious thought given to making bridged versions of those tokens available on layer 2 (L2) (for example, see this fan of the ZELENSKYY memecoin who appreciates the coin's ongoing donations to Ukraine but complains that L1 fees make it too expensive). Beyond scalability, we are also behind on privacy: POAPs are all fully public on-chain, which may be the right choice for some use cases but is very suboptimal for others. Most DAOs, as well as Gitcoin Grants, still use fully transparent on-chain voting, which makes them highly vulnerable to bribery (including retroactive airdrops), and this has been shown to heavily distort contribution patterns. ZK-SNARKs have existed for years, yet many applications still have not started using them properly.

These are all hardworking teams with large existing user bases to take care of, so I do not fault them for not simultaneously upgrading to the latest wave of technology. But soon, this upgrade needs to happen. Here are some key differences between "a fundamentally 2010s Ethereum workflow" and "a fundamentally 2020s Ethereum workflow":

More broadly, Ethereum is no longer just a financial ecosystem. It is a full-stack replacement for large parts of "centralized tech", and even provides some things that centralized tech does not (e.g., governance-related applications). We need to build with this broader ecosystem in mind.

Conclusion

Ethereum is undergoing a decisive transition from an era of "rapid L1 progress" to an era where L1 progress will still be significant, but slightly more modest and less disruptive to applications.

We still need to finish scaling. This work will happen more behind the scenes, but it remains important.

App developers are no longer just building prototypes; we are building tools for millions of people to use. Across the entire ecosystem, we need to completely adjust our mindset accordingly.

Ethereum has evolved from being "just" a financial ecosystem into a much more thorough, independent decentralized technology stack. Here too, across the entire ecosystem, we need to fully readjust our mindset accordingly.