Author: @charlotte0211z, @BlazingKevin_, Metrics Ventures

Vitalik published "The promise and challenges of crypto + AI applications" on January 30, discussing how blockchain and artificial intelligence should be combined and the challenges that may arise in the process. In the month after publication, NMR, Near, and WLD, all mentioned in the article, posted strong gains and completed a round of value discovery. Based on the four ways of combining Crypto and AI that Vitalik proposes, this article maps out the sub-sectors of the existing AI track and briefly introduces representative projects in each.

1 Introduction: Four ways to combine Crypto with AI

Decentralization is the consensus that blockchains uphold, security is their core principle, and open source is the foundation that, from a cryptographic perspective, gives on-chain behavior these properties. Over the past few years this approach has held up through several rounds of blockchain evolution, but the situation changes once artificial intelligence is involved.

Imagine using artificial intelligence to design the architecture of a blockchain or application. The model would then need to be open source, but that exposes it to adversarial machine learning; keep it closed, and decentralization is lost. We therefore need to think about how, and how deeply, to integrate artificial intelligence into existing blockchains and applications.

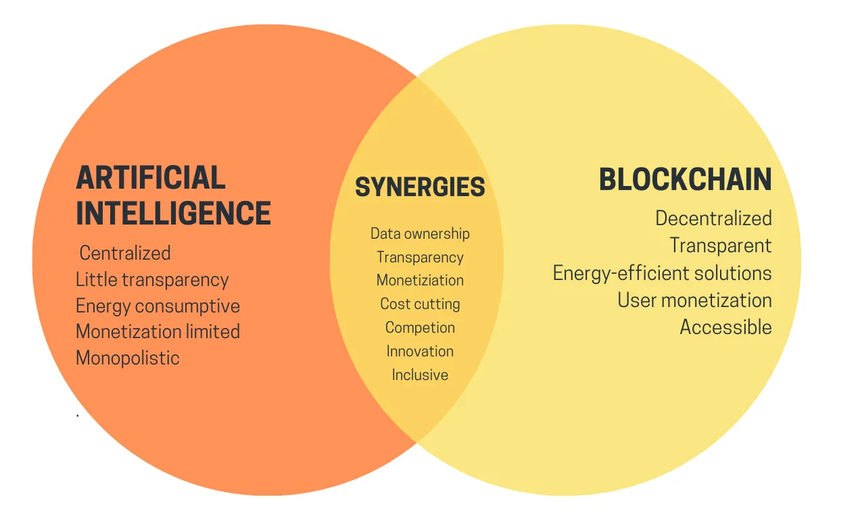

Source: DE UNIVERSITY OF ETHEREUM

In the article "When Giants Collide: Exploring the Convergence of Crypto x AI," DE UNIVERSITY OF ETHEREUM explains how the core characteristics of artificial intelligence and blockchain differ. As shown in the figure above, the characteristics of artificial intelligence are:

Centralization

Low transparency

Energy consumption

Monopoly

Weak monetization attributes

Blockchain is the exact opposite of artificial intelligence on all five points. This is also the real argument of Vitalik's article: if artificial intelligence and blockchain are combined, what trade-offs should be made in terms of data ownership, transparency, monetization, energy costs, and so on, and what infrastructure needs to be built to ensure that the two combine effectively?

Following these principles and his own thinking, Vitalik divides applications that combine artificial intelligence and blockchain into four major categories:

AI as a player in a game

AI as an interface to the game

AI as the rules of the game

AI as the objective of the game

The first three describe three ways in which AI is introduced into the Crypto world, representing three levels from shallow to deep. In the author's understanding, this division reflects how strongly AI influences human decision-making, and therefore how much systemic risk each level introduces to Crypto as a whole:

Artificial intelligence as a participant in applications: AI itself does not affect human decision-making or behavior, so it poses no risk to the real human world. This category therefore has the highest degree of implementation at present.

Artificial intelligence as an application interface: Artificial intelligence provides auxiliary information or auxiliary tools for human decision-making and behavior, which will improve user and developer experience and lower barriers to entry. However, incorrect information or operations will bring certain risks to the real world.

Artificial intelligence as the rules of applications: AI fully replaces humans in decision-making and operations, so misbehavior or failure by the AI leads directly to chaos in the real world. At present, whether in Web2 or Web3, AI cannot be trusted to replace humans in decision-making.

Finally, the fourth category of projects aims to use the characteristics of Crypto to create better artificial intelligence. As mentioned above, centralization, low transparency, energy consumption, monopoly, and weak monetization can all be naturally offset by the properties of Crypto. Although many people doubt whether Crypto can influence the development of artificial intelligence, changing the real world through the power of decentralization has always been Crypto's most fascinating narrative. With its grand vision, this track has also become the most hyped part of the AI sector.

2 AI as a participant

In mechanisms where AI participates, the ultimate source of incentives is a protocol with human inputs. Before AI becomes an interface or even a rule, we often need to evaluate the performance of different AIs, letting them participate in a mechanism and ultimately be rewarded or penalized on-chain.

As a participant, AI poses a risk to users and to the overall system that is basically negligible compared with its roles as interface and rules. This can be seen as a necessary stage before AI begins to deeply affect user decisions and behavior. The costs and trade-offs required to integrate artificial intelligence and blockchain at this level are therefore relatively small, and this is also the type of product that Vitalik considers highly implementable.

Broadly speaking, and judged by the current state of implementation, most AI applications today fall into this category, such as AI-powered trading bots and chatbots. At this stage it is still difficult for AI to serve as an interface, let alone as a rule: users are still comparing different bots and gradually refining how they use them, and crypto users have not yet formed the habit of using AI applications. Vitalik's article also places Autonomous Agents in this category.

From a narrower, longer-term perspective, however, we prefer to divide AI applications and AI Agents into finer categories. Under this category, we consider the representative sub-sectors to be:

2.1 AI games

To some extent, AI games can be placed in this category. Players interact with the AI and train their AI characters to better fit their needs, for example to match personal preferences or to be more combative and competitive within the game mechanics. Games are a transitional stage before AI enters the real world; they are also a track with low implementation risk that ordinary users find easiest to understand. Representative projects include AI Arena, Echelon Prime, and Altered State Machine.

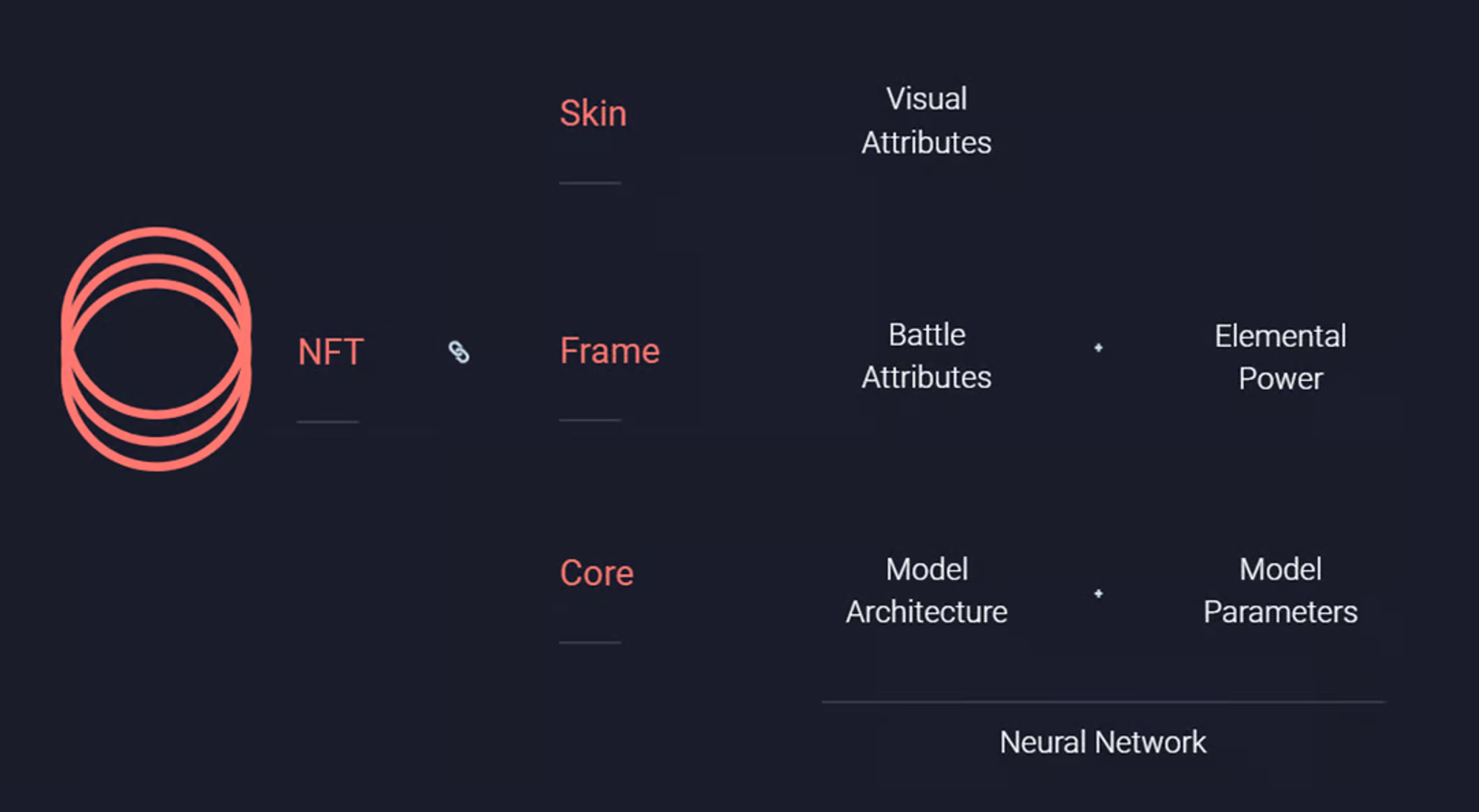

AI Arena: AI Arena is a PvP fighting game in which players use AI to train and continuously evolve their game characters. It aims to let more ordinary users encounter, understand, and experience AI through games, while allowing AI engineers to earn income by providing AI algorithms built on AI Arena. Each game character is an AI-powered NFT. Its Core contains the AI model, which consists of two parts, architecture and parameters, both stored on IPFS. The parameters of a newly minted NFT are randomly generated, so the character initially acts at random, and users improve its strategy through imitation learning (IL). Each time the user trains the character and saves progress, the parameters are updated on IPFS.

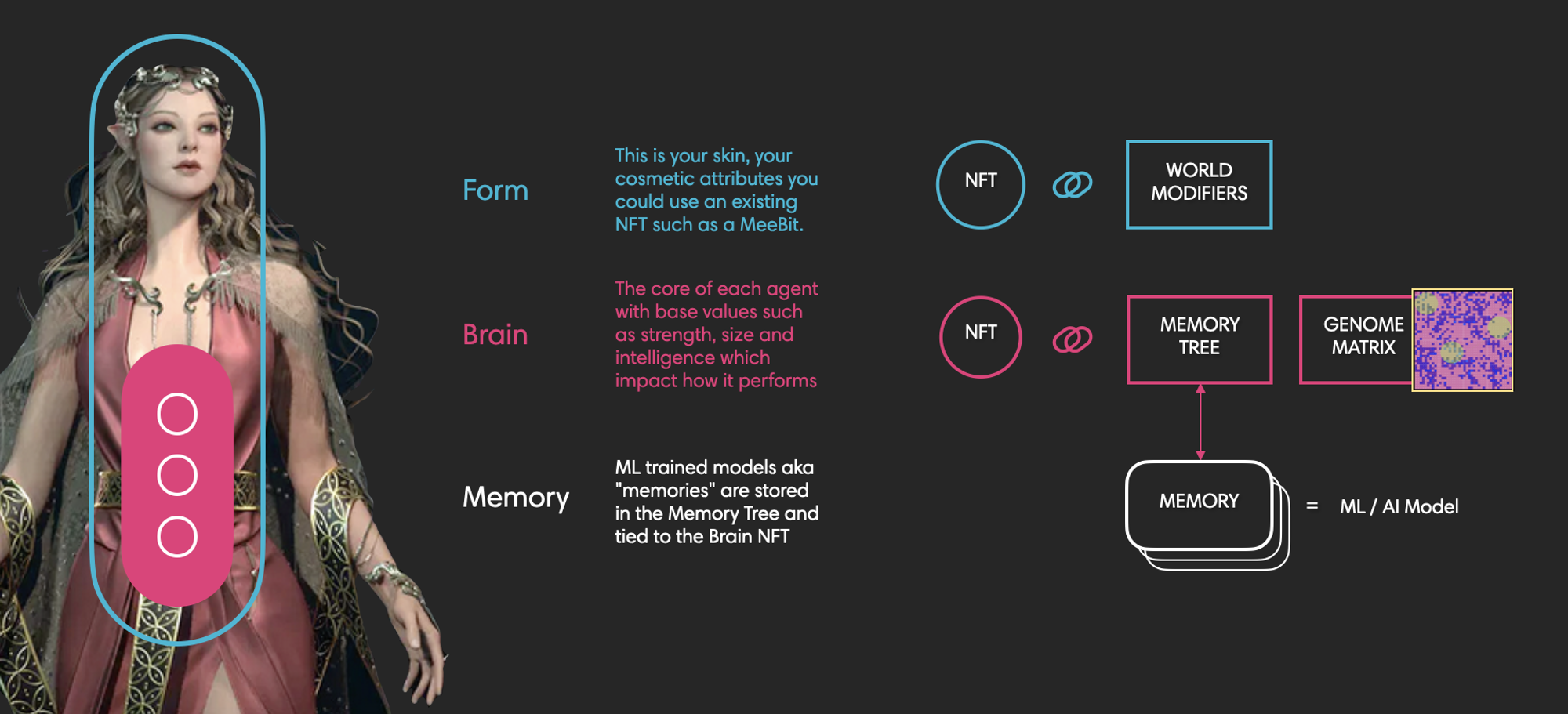

Altered State Machine: ASM is not an AI game but a protocol for establishing ownership of and trading AI Agents, positioned as the Metaverse AI protocol. It is currently integrated with multiple games, including FIFA, bringing AI Agents into games and the Metaverse. ASM uses NFTs to represent ownership of and trade AI Agents. Each Agent has three parts: Brain (the Agent's own characteristics), Memories (which store the behavioral strategies the Agent has learned and its model training, and are bound to the Brain), and Form (character appearance and so on). ASM also has a Gym module, which includes decentralized GPU cloud providers that supply computing power for Agents. Projects currently built on ASM include AIFA (AI football game), Muhammad Ali (AI boxing game), AI League (street football game in partnership with FIFA), Raicers (AI-driven racing game), and FLUF World's Thingies (generative NFTs).

Parallel Colony (PRIME): Echelon Prime is developing Parallel Colony, an LLM-based game in which players interact with and influence their AI Avatar, and the Avatar acts autonomously based on its memories and life trajectory. Colony is currently one of the most anticipated AI games. The team recently released a white paper and announced a migration to Solana, which set off a new round of gains for PRIME.

2.2 Prediction Market/Contest

Predictive capability is the basis for AI's future decisions and behavior. Before AI models are used for real predictions, prediction competitions compare model performance at a higher level and reward data scientists and AI models with tokens. This is positive for the development of Crypto×AI as a whole: it continually stimulates the development of more efficient, better-performing models and applications suited to the crypto world, so that better and safer products exist before AI has a more profound impact on decision-making and behavior. As Vitalik said, prediction markets are a powerful primitive that can be extended to other types of problems. Representative projects in this track include Numerai and Ocean Protocol.

Numerai: Numerai is a long-running data science competition. Data scientists train machine learning models to predict the stock market from historical market data provided by Numerai, then stake NMR tokens on their models to enter tournaments. Better-performing models earn NMR rewards, while the tokens staked on poorly performing models are burned. As of March 7, 2024, a total of 6,433 models had been staked, and the protocol had paid out a total of $75,760,979 in incentives to data scientists. Numerai is encouraging data scientists worldwide to collaborate in building a new type of hedge fund; funds launched so far include Numerai One and Numerai Supreme. Numerai's path: market prediction competition → crowdsourced prediction models → new hedge fund built on the crowdsourced models.
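
The stake-and-burn incentive can be illustrated with a minimal sketch. It is not Numerai's actual payout formula: the score range, `payout_factor`, and `max_rate` cap are hypothetical parameters chosen only to show how a positive score grows a stake and a negative score burns part of it.

```python
from dataclasses import dataclass


@dataclass
class StakedModel:
    name: str
    stake: float  # NMR staked on the model
    score: float  # hypothetical performance score for the round, in [-1, 1]


def settle_round(models, payout_factor=0.5, max_rate=0.05):
    """Return {name: (new_stake, delta)}; a negative delta is the burned amount.

    payout_factor and max_rate are illustrative assumptions, not protocol values.
    """
    results = {}
    for m in models:
        # Clip the per-round rate so one round can neither wipe out nor double a stake.
        rate = max(-max_rate, min(max_rate, m.score * payout_factor))
        delta = m.stake * rate
        results[m.name] = (m.stake + delta, delta)
    return results


if __name__ == "__main__":
    round_models = [
        StakedModel("good_model", 100.0, 0.04),
        StakedModel("bad_model", 100.0, -0.5),
    ]
    for name, (new_stake, delta) in settle_round(round_models).items():
        action = "rewarded" if delta >= 0 else "burned"
        print(f"{name}: {action} {abs(delta):.2f} NMR, stake now {new_stake:.2f}")
```

Capping the rate per round is one possible design choice; it limits how much a single lucky or unlucky round can move a stake.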

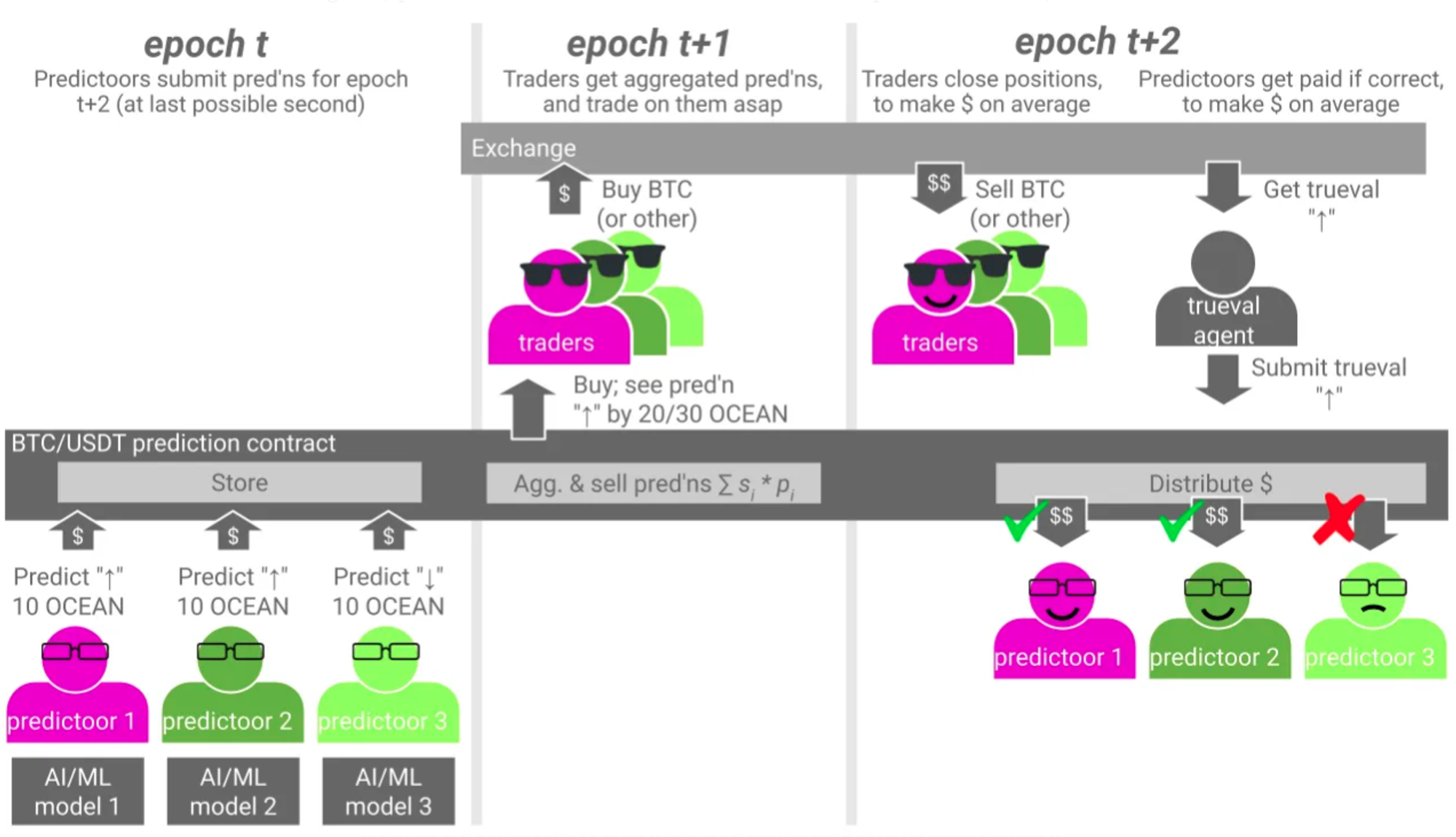

Ocean Protocol: Ocean Predictoor focuses on predictions, starting with crowdsourced predictions of cryptocurrency price movements. Participants can run either a Predictoor bot or a Trader bot. A Predictoor bot uses an AI model to predict the price of a cryptocurrency pair (such as BTC/USDT) at the next point in time (for example, five minutes later) and stakes a certain amount of $OCEAN on it. The protocol computes a global prediction by weighting individual predictions by stake. Traders buy the prediction results and can trade on them, profiting when the predictions are accurate. Predictoors who predict incorrectly are slashed, while those who predict correctly receive the slashed tokens and the Traders' purchase fees as rewards. On March 2, Ocean Predictoor announced its latest direction in the media, the World-World Model (WWM), and began exploring real-world predictions such as weather and energy.
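
The stake-weighted aggregation and settlement described above can be sketched as follows. This is a simplified illustration rather than Ocean's contract logic: it assumes each prediction is a binary up/down call, that wrong stakes are slashed in full, and that the pot is split pro rata by stake. The function names are hypothetical.

```python
def aggregate(predictions):
    """predictions: list of (predicts_up, stake) pairs.

    Returns the stake-weighted share of predictions saying the price goes up.
    """
    total = sum(stake for _, stake in predictions)
    if total == 0:
        raise ValueError("no stake submitted")
    up = sum(stake for predicts_up, stake in predictions if predicts_up)
    return up / total


def settle(predictions, went_up, trader_fees):
    """Slash wrong predictors; split slashed stake plus trader fees among correct ones.

    Assumes full slashing and a pro rata split by stake (illustrative only).
    """
    winning_stake = sum(s for up, s in predictions if up == went_up)
    slashed = sum(s for up, s in predictions if up != went_up)
    pot = slashed + trader_fees
    payouts = []
    for up, stake in predictions:
        if up == went_up:
            payouts.append(stake + pot * stake / winning_stake)
        else:
            payouts.append(0.0)
    return payouts


if __name__ == "__main__":
    preds = [(True, 30.0), (True, 10.0), (False, 60.0)]
    print("aggregate up-probability:", aggregate(preds))
    print("payouts if price went up:", settle(preds, went_up=True, trader_fees=20.0))
```

Weighting by stake means a predictor's influence on the global signal is proportional to what it stands to lose, which is what gives Traders a reason to pay for the aggregate.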

3 AI as an interface

AI can explain what is happening in simple, easy-to-understand language, act as a mentor for users in the crypto world, and warn of possible risks, lowering the barrier to using Crypto, reducing user risk, and improving user experience. Concrete products span a wide range of functions, such as risk warnings during wallet interactions, AI-driven intent-based transactions, and AI chatbots that answer ordinary users' crypto questions. The audience also broadens: almost every group, including ordinary users, developers, and analysts, becomes a potential user of AI.

To reiterate what these projects have in common: they do not yet replace humans in making decisions or taking actions, but use AI models to provide humans with information and tools that assist their decisions and actions. From this level onward, the risk of AI misbehavior begins to surface in the system, because AI can distort a person's final judgment by supplying wrong information. Vitalik's article analyzes this in detail.

Many varied projects can be classified under this category, including AI chatbots, AI smart contract audits, AI code generation, and AI trading bots. Arguably the vast majority of AI applications currently sit in this category at an elementary level. Representative projects include:

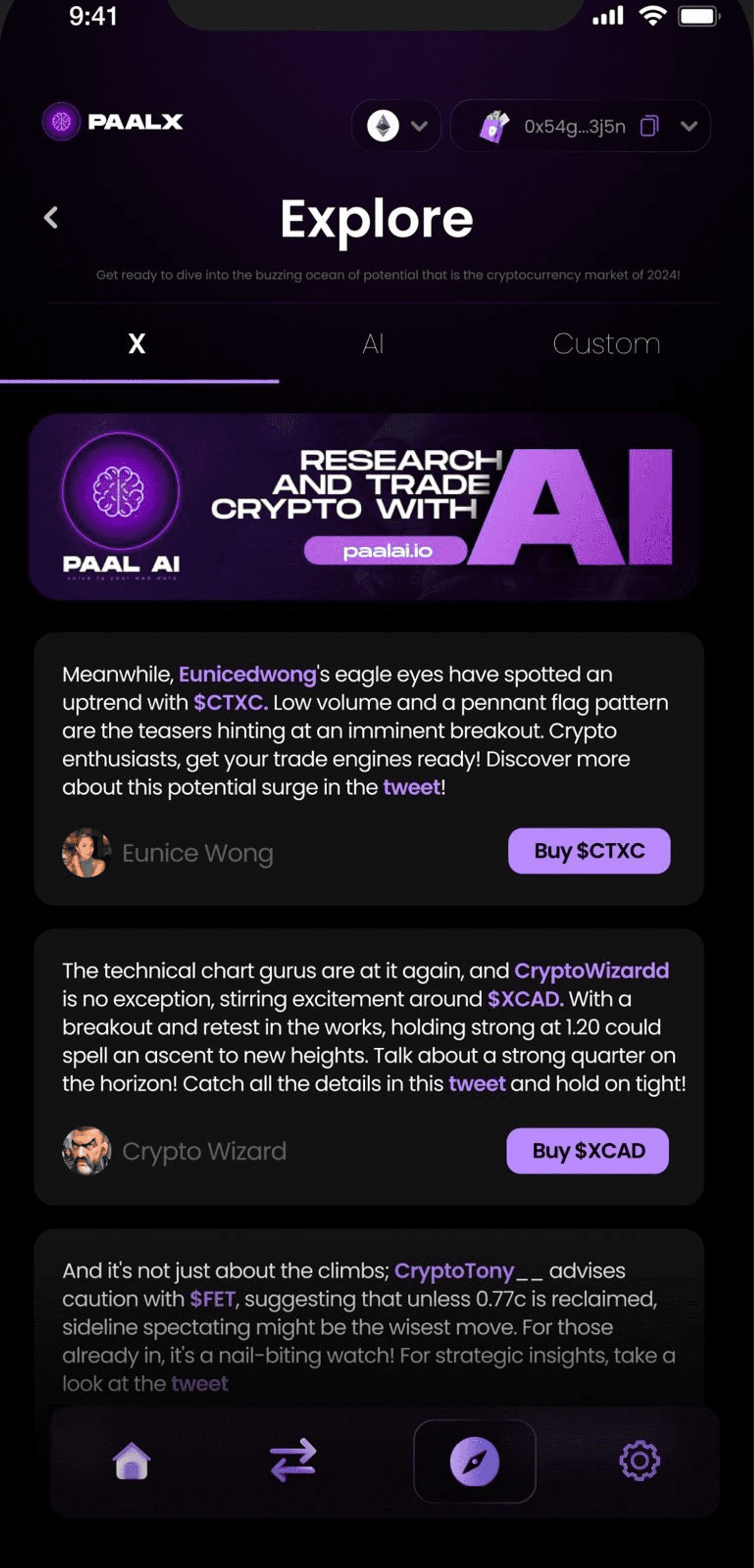

PaaL: PaaL is currently the leading AI chatbot project and can be thought of as a ChatGPT trained on crypto-related knowledge. Through integrations with TG and Discord, it offers users token data analysis, token fundamentals and tokenomics analysis, and other functions such as text and image generation. PaaL Bot can also be added to group chats to reply to messages automatically. PaaL supports custom personal bots: users can build their own AI knowledge base and custom bot by feeding in data sets. PaaL is also moving toward AI trading bots. On February 29, it announced PaalX, an AI-powered crypto research and trading terminal that, according to its introduction, offers AI smart contract auditing, Twitter-based news aggregation and trading, and crypto research and trading support, with an AI assistant that lowers the barrier to entry for users.

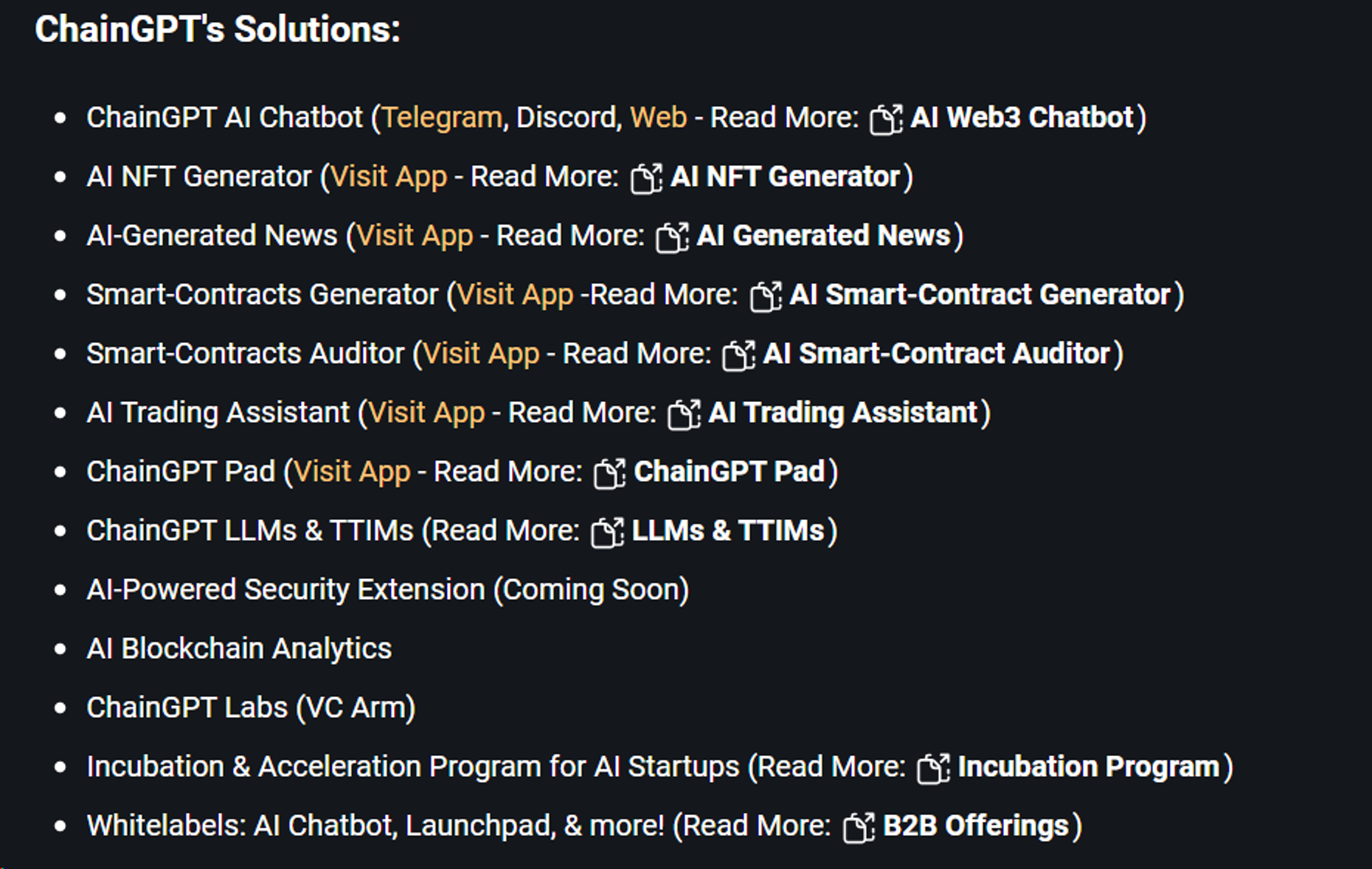

ChainGPT: ChainGPT uses artificial intelligence to build a suite of crypto tools, including a chatbot, NFT generator, news aggregation, smart contract generation and auditing, a trading assistant, a prompt marketplace, and an AI cross-chain swap. However, ChainGPT's current focus is on project incubation and its Launchpad, which has completed IDOs for 24 projects and 4 Free Giveaways.

Arkham: Ultra is Arkham's dedicated AI engine. Its use case is to increase transparency in the crypto industry by algorithmically matching addresses to real-world entities. Ultra combines on-chain and off-chain data, both supplied by users and collected by Arkham itself, into an extensible database that is ultimately presented as charts. The Arkham documentation, however, does not discuss the Ultra system in detail. Arkham's recent attention stems from a personal investment by OpenAI founder Sam Altman, and its token has risen fivefold over the past 30 days.

GraphLinq: GraphLinq is an automated process management solution that lets users deploy and manage various automations without programming, such as pushing the Bitcoin price from Coingecko to a TG Bot every 5 minutes. GraphLinq visualizes each automation as a Graph: users create automated tasks by dragging nodes and run them with the GraphLinq Engine. Although no code is required, building a Graph still poses a barrier for ordinary users, who must pick a suitable template, choose the right logic blocks from among hundreds, and connect them. GraphLinq is therefore introducing AI so that users can build and manage automated tasks through conversational AI and natural language.

0x0.ai: 0x0 has three main AI-related businesses: AI smart contract auditing, AI anti-rug detection, and an AI developer center. AI anti-rug detection flags suspicious behavior, such as excessive taxes or liquidity draining, to protect users from scams. The AI developer center uses machine learning to generate smart contracts and enable no-code contract deployment. So far, however, only AI smart contract auditing has had an initial launch; the other two functions have not yet been developed.

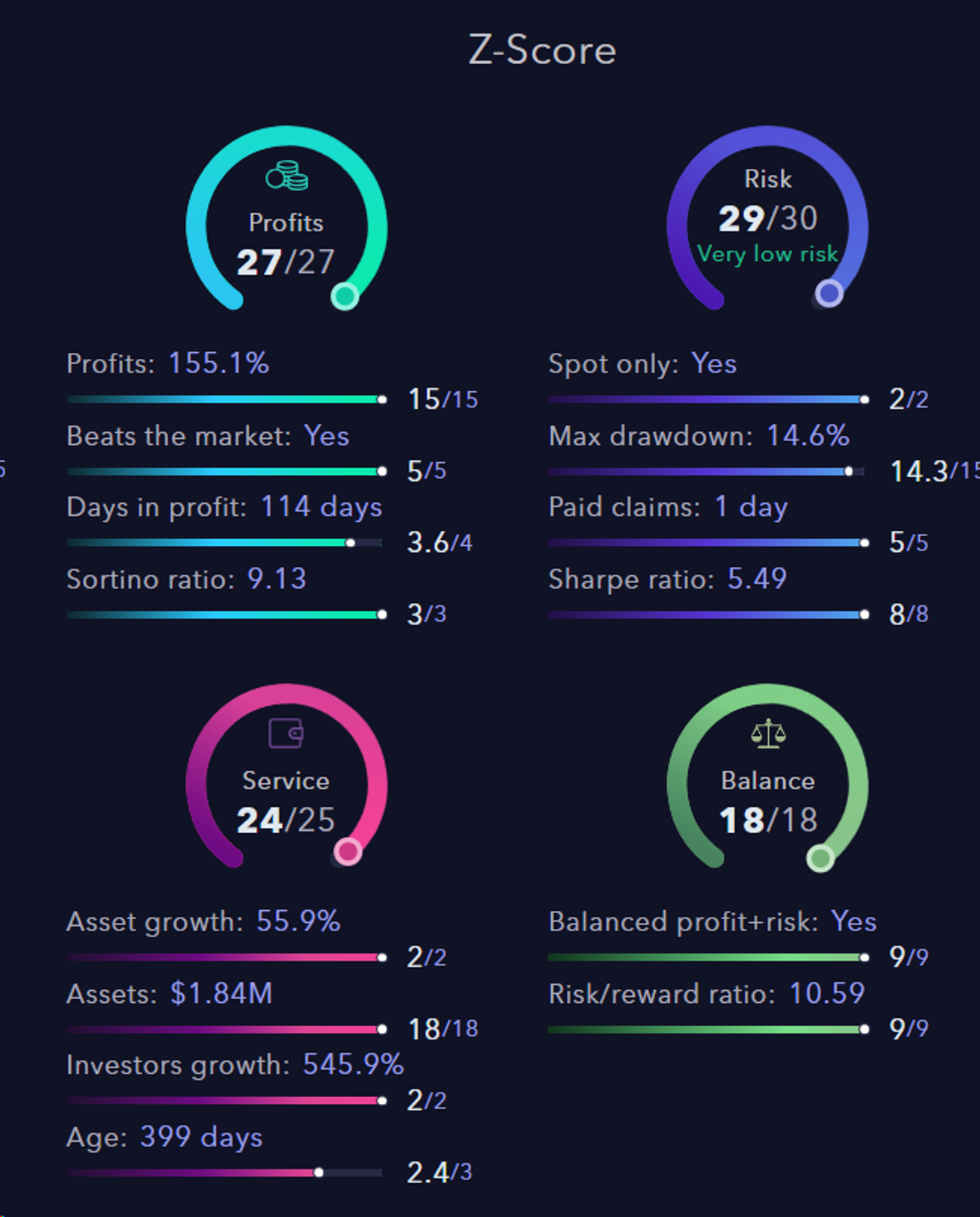

Zignaly: Founded in 2018, Zignaly lets individual investors choose fund managers to manage their crypto assets, similar in logic to copy trading. Zignaly is using machine learning and artificial intelligence to build an indicator system for systematically evaluating fund managers. The first product launched is Z-Score, which, as an AI product, is still relatively rudimentary.

4 AI as the rules of the game

This is the most exciting part: letting AI replace humans in decision-making and action. Your AI directly controls your wallet and makes trading decisions and executes trades on your behalf. The author believes this category can be divided into three levels: AI applications (especially those with a vision of autonomous decision-making, such as AI automated trading bots and AI DeFi yield bots), Autonomous Agent protocols, and zkML/opML.

AI applications are tools that make specific decisions about problems in a particular field. They accumulate knowledge and data in different niches and rely on AI models tailored to those niche problems. Note that this article places AI applications in two categories, interfaces and rules. In terms of long-term vision, AI applications should become independent decision-making agents. At present, however, neither the effectiveness of AI models nor the security of integrating AI meets this requirement, and they are barely adequate even as an interface. AI applications are at a very early stage. The specific projects were introduced above, so they are not repeated here.

Vitalik mentions Autonomous Agents in the first category (AI as a participant); taking a long-term view, this article places them in the third. An Autonomous Agent uses large amounts of data and algorithms to simulate human thinking and decision-making and to carry out various tasks and interactions. This article focuses on infrastructure such as the Agent communication layer and network layer. These protocols define Agent ownership, establish Agent identity, communication standards, and communication methods, connect multiple Agent applications, and enable collaborative decision-making and action.

zkML/opML: These use cryptographic or economic methods to guarantee that the correct model inference process was followed, so that the output can be trusted. Security is critical when introducing AI into smart contracts. Smart contracts take inputs, produce outputs, and automatically execute a series of functions; if an AI misbehaves and supplies a wrong input, it introduces significant systemic risk to the whole Crypto system. zkML/opML and a range of other potential solutions are therefore the foundation for letting AI act and decide independently.

Together, these three form the basic layers of AI as operating rules: zkML/opML is the lowest-level infrastructure that secures the protocol; Agent protocols establish the Agent ecosystem and enable collaborative decisions and actions; and AI applications, which are themselves specific AI Agents, keep improving their capabilities in a given field and actually make decisions and take actions.

4.1 Autonomous Agent

Applying AI Agents in the Crypto world is a natural step. From smart contracts to TG Bots to AI Agents, the crypto world is moving toward greater automation and lower barriers for users. Smart contracts execute functions automatically through tamper-proof code, but they still depend on external triggers and cannot run autonomously and continuously. TG Bots lower the barrier: users no longer interact directly with a crypto front end but complete on-chain interactions through natural language. Yet they handle only very simple, specific tasks and still cannot deliver user-intent-centric transactions. AI Agents have a degree of independent decision-making: they understand the user's natural language and independently find and combine other Agents and on-chain tools to accomplish the user's goals.

AI Agents aim to greatly improve the experience of using crypto products, and blockchain in turn can make the operation of AI Agents more decentralized, transparent, and secure. Specifically, blockchain can:

Incentivize more developers to provide Agents through tokens

Establish Agent ownership through NFTs, enabling Agent-based fees and trading

Provide on-chain Agent identity and registration mechanisms

Provide tamper-proof Agent activity logs, enabling timely tracing of and accountability for Agent actions

The main projects of this track are as follows:

Autonolas: Autonolas uses on-chain protocols to support ownership and composability of Agents and related components, so that code components, Agents, and services can be discovered and reused on-chain and developers are rewarded financially. After building a complete Agent or component, a developer registers the code on-chain and receives an NFT representing ownership of that code. A Service Owner combines multiple Agents into a service and registers it on-chain, then attracts Agent Operators to actually run the service, which users pay to use.
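
The register-then-compose flow can be sketched as a small in-memory registry. This is an illustration under stated assumptions, not the Autonolas contracts: the `Registry` class, its method names, and the use of a sequential integer as the NFT token ID are all hypothetical.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Registry:
    """Hypothetical in-memory stand-in for an on-chain component/service registry."""
    owners: dict = field(default_factory=dict)       # token_id -> owner address
    code_hashes: dict = field(default_factory=dict)  # token_id -> hash of registered code
    services: dict = field(default_factory=dict)     # service_id -> (owner, agent ids)
    _next_id: int = 1

    def register_code(self, owner, code: bytes) -> int:
        """Register an Agent or component; the returned ID plays the role of the NFT."""
        token_id = self._next_id
        self._next_id += 1
        self.owners[token_id] = owner
        self.code_hashes[token_id] = hashlib.sha256(code).hexdigest()
        return token_id

    def register_service(self, owner, agent_ids) -> int:
        """Compose already-registered Agents into a service."""
        missing = [a for a in agent_ids if a not in self.owners]
        if missing:
            raise KeyError(f"unregistered agents: {missing}")
        service_id = self._next_id
        self._next_id += 1
        self.services[service_id] = (owner, tuple(agent_ids))
        return service_id


if __name__ == "__main__":
    registry = Registry()
    price_agent = registry.register_code("dev_alice", b"price-feed agent code")
    trade_agent = registry.register_code("dev_bob", b"trading agent code")
    service = registry.register_service("service_owner", [price_agent, trade_agent])
    print("service", service, "uses agents", registry.services[service][1])
```

Because a service only references token IDs, the original developers remain identifiable as owners of the reused components, which is what makes paying them possible.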

Fetch.ai: Fetch.ai has a strong team background and development experience in AI and is currently focused on the AI Agent track. The protocol consists of four key layers: AI Agents, Agentverse, AI Engine, and Fetch Network. AI Agents are the core of the system; the other layers are frameworks and tools that help build Agent services. Agentverse is a software-as-a-service platform used mainly to create and register AI Agents. The AI Engine carries out tasks by reading the user's natural language input, converting it into an actionable form, and selecting the most suitable registered AI Agent in Agentverse. Fetch Network is the protocol's blockchain layer: AI Agents must be registered in the on-chain Almanac contract before they can begin collaborating with other Agents. It is worth noting that Autonolas currently focuses on building Agents for the crypto world, bringing off-chain Agent operations on-chain, whereas Fetch.ai's scope also includes the Web2 world, such as travel booking and weather prediction.

Delysium: Delysium has transformed from a game into an AI Agent protocol with two main layers, a communication layer and a blockchain layer. The communication layer is the backbone of Delysium, providing secure and scalable infrastructure for fast, efficient communication between AI Agents. The blockchain layer authenticates Agents and keeps tamper-proof records of their behavior through smart contracts. Specifically, the communication layer establishes a unified communication protocol between Agents and uses a standardized messaging system, so that Agents can communicate without barriers in a common language. It also provides a service discovery protocol and API so that users and other Agents can quickly discover and connect to available Agents. The blockchain layer consists mainly of two parts, Agent ID and the Chronicle smart contract. Agent ID ensures that only legitimate Agents can access the network. Chronicle is a log repository of all important decisions and actions taken by Agents; once written on-chain, it cannot be tampered with, which ensures trustworthy traceability of Agent behavior.

Altered State Machine: ASM uses NFTs to set standards for Agent ownership and trading; see Section 2.1 for details. Although ASM is currently integrated mainly with games, as a base-level standard it could also expand to other Agent domains.

Morpheus: Morpheus is building an AI Agent ecosystem network. The protocol is designed to connect four roles, Coder, Compute provider, Community Builder, and Capital, which respectively supply the network with AI Agents, the computing power to run Agents, front ends and development tools, and funding. MOR will be distributed through a fair launch, rewarding miners who provide computing power, stETH stakers, contributors to Agent or smart contract development, and contributors to community development.
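
The combination of an identity check and a tamper-evident log can be sketched with a hash chain. This is only an analogy for how an on-chain log resists tampering, not Delysium's contract code: the `Chronicle` class here is a hypothetical in-memory model, and the set of registered agent IDs stands in for Agent ID.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(agent_id, action, prev_hash):
    body = json.dumps({"agent": agent_id, "action": action, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


class Chronicle:
    """Hypothetical append-only log; each entry commits to the one before it."""

    def __init__(self, registered_agents):
        self.registered = set(registered_agents)  # stand-in for Agent ID checks
        self.entries = []

    def append(self, agent_id, action):
        if agent_id not in self.registered:
            raise PermissionError(f"unknown agent: {agent_id}")
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append({
            "agent": agent_id,
            "action": action,
            "prev": prev,
            "hash": _digest(agent_id, action, prev),
        })

    def verify(self):
        """Recompute every hash; any edited entry breaks the chain."""
        prev = GENESIS
        for e in self.entries:
            if e["prev"] != prev or e["hash"] != _digest(e["agent"], e["action"], e["prev"]):
                return False
            prev = e["hash"]
        return True


if __name__ == "__main__":
    log = Chronicle({"agent-1"})
    log.append("agent-1", "swap 1 ETH for USDC")
    log.append("agent-1", "deposit USDC into vault")
    print("log valid:", log.verify())
    log.entries[0]["action"] = "swap 100 ETH for USDC"  # simulate tampering
    print("log valid after tampering:", log.verify())
```

On a real chain the immutability comes from consensus rather than from the log's own `verify` method; the hash chain simply shows why a later edit to an earlier record is detectable.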

4.2 zkML/opML

Zero-knowledge proof currently has two main application directions:

Prove that the operation is running correctly on the chain at a lower cost (ZK-Rollup and ZKP cross-chain bridge are taking advantage of this feature of ZK);

Privacy protection: You don’t need to know the details of the calculation to prove that the calculation was performed correctly.

Similarly, the application of ZKP in machine learning can also be divided into two categories:

Inference verification: Through ZK-proof, it is proved on the chain at a low cost that the intensive calculation process of AI model inference is correctly executed off the chain.

Privacy protection: This falls into two categories. One is data privacy: when private data is used for inference on a public model, zkML can keep that data private. The other is model privacy: the goal is to hide model details such as weights while still computing an output from public inputs.

The author believes inference verification currently matters more to Crypto, so it is elaborated here. From AI as a participant to AI as the rules, we want AI to become part of on-chain processes. However, AI model inference is too computationally expensive to run directly on-chain. Moving it off-chain means accepting the trust problems of a black box: did the party running the model tamper with my input? Was the model I specified actually used for inference? Converting ML models into ZK circuits makes two things possible: (1) putting smaller models on-chain, by storing small zkML models in smart contracts so that they run on-chain directly and the opacity problem disappears; (2) completing inference off-chain while generating a ZK proof, then verifying that proof on-chain to establish that the inference was performed correctly. The infrastructure then consists of two contracts: the main contract (which uses the ML model to output results) and the ZK-proof verification contract.

zkML is still at a very early stage, facing technical difficulties in converting ML models to ZK circuits as well as extremely high computational and cryptographic overhead. Following the same path as Rollups, opML has emerged as an alternative that approaches the problem from an economic angle. opML uses Arbitrum's AnyTrust assumption: every claim has at least one honest node, which ensures that either the submitter or at least one verifier is honest. However, opML can only substitute for inference verification; it cannot provide privacy protection.

Current projects are building zkML infrastructure and working to explore its applications. Building applications matters just as much, because crypto users need clear proof that zkML plays an important role and that its ultimate value justifies its enormous cost. Some of these projects focus on ZK research and development specific to machine learning (such as Modulus Labs), while others build more general ZK infrastructure. Related projects include:
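
The "at least one honest verifier" assumption behind opML can be illustrated with a toy model. This sketch is heavily simplified: real optimistic systems resolve disputes through an interactive on-chain game with a challenge window, whereas here a verifier simply re-executes the whole computation. The `model` function and verifier names are hypothetical stand-ins.

```python
def model(x):
    """Stand-in for a deterministic ML inference that anyone can re-execute."""
    return 3 * x + 1


def honest_verifier(x, claimed):
    """Re-runs the model and challenges whenever the claimed output is wrong."""
    return model(x) != claimed


def lazy_verifier(x, claimed):
    """Never re-executes anything, so it never challenges."""
    return False


def resolve_claim(x, claimed, verifiers):
    """Optimistically accept the claim unless some verifier challenges it."""
    challenged = any(challenge(x, claimed) for challenge in verifiers)
    return "rejected" if challenged else "accepted"


if __name__ == "__main__":
    # A correct claim passes; a wrong one is caught as long as one verifier is honest.
    print(resolve_claim(2, 7, [lazy_verifier, honest_verifier]))
    print(resolve_claim(2, 8, [lazy_verifier, honest_verifier]))
    # With no honest verifier, the wrong claim slips through.
    print(resolve_claim(2, 8, [lazy_verifier, lazy_verifier]))
```

The last case is why the honesty assumption carries the security argument: no proof accompanies the result, so correctness depends on someone actually checking.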

Modulus is using zkML to bring artificial intelligence to on-chain inference. Modulus launched the zkML prover Remainder on February 27, which achieves a 180-fold efficiency improvement compared to traditional AI inference on equivalent hardware. Modulus also works with multiple projects to explore practical zkML use cases: with Upshot, it uses AI with ZK proofs to gather complex market data, appraise NFT prices, and deliver those prices on-chain; with AI Arena, it proves that the Avatar in a fight is the same one the player trained.

RISC Zero places the model on-chain: by running the machine learning model in RISC Zero's zkVM, it is possible to prove that the exact computations involved in the model were performed correctly.

Ingonyama is developing hardware specifically for ZK technology, which may lower the barrier to entry into the ZK technology field, and zkML may also be used in the model training process.

5 AI as a goal

If the first three categories focus on how AI empowers Crypto, then "AI as a goal" emphasizes how Crypto helps AI, that is, how Crypto can be used to create better AI models and products. "Better" may be judged on several criteria: more efficient, more accurate, more decentralized, and so on.

AI has three core elements: data, computing power, and algorithms. In each dimension, Crypto aims to provide more effective support for AI:

Data: Data is the basis for model training. Decentralized data protocols encourage individuals and enterprises to provide more private-domain data, while using cryptography to protect data privacy and avoid leaking sensitive personal data.

Computing power: Decentralized computing power is currently the hottest AI track. These protocols provide a marketplace that matches supply and demand, connecting long-tail computing power with AI companies for model training and inference.

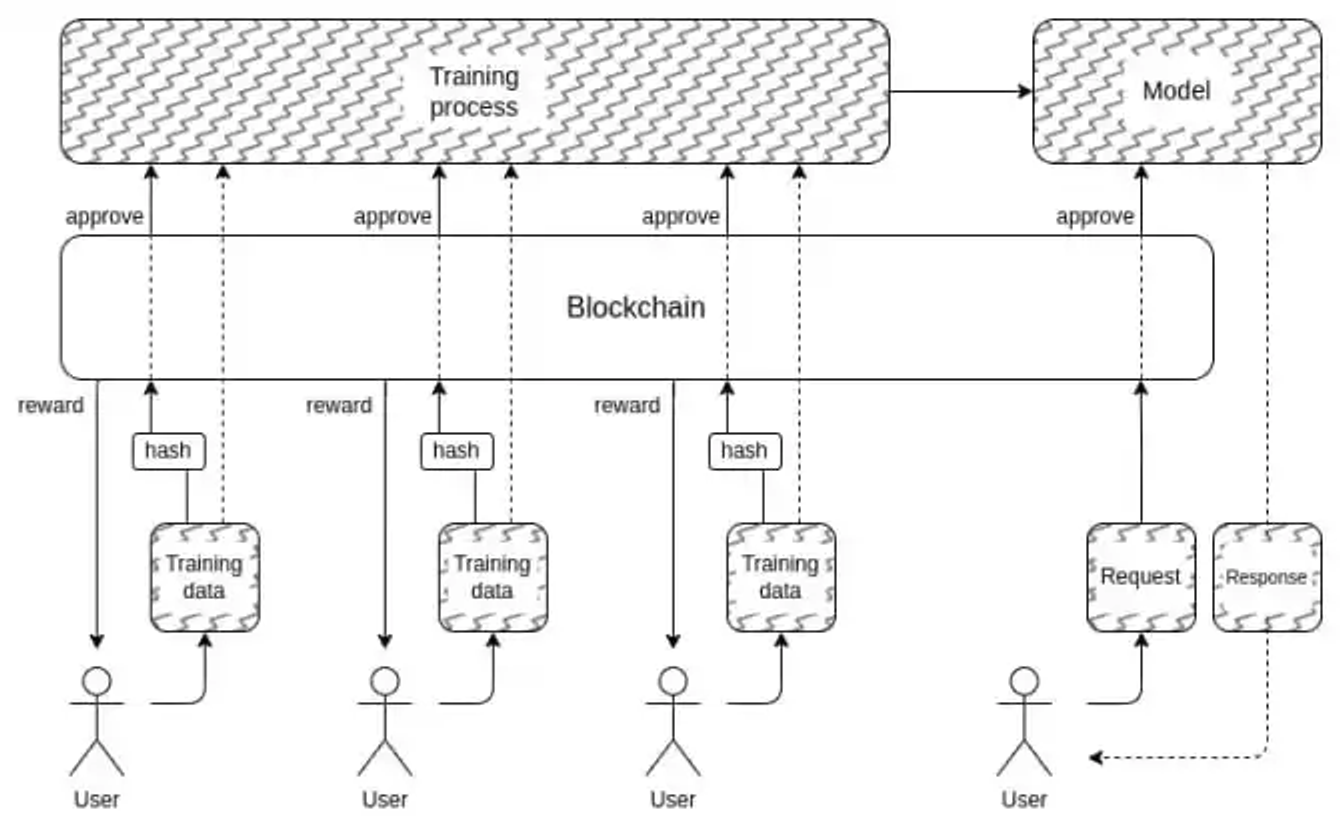

Algorithm: Crypto's empowerment of algorithms is the core link in realizing decentralized AI, and it is the main subject of the "AI as a goal" narrative in Vitalik's article. Creating a decentralized, trustworthy black-box AI would solve the adversarial machine learning problem mentioned above, but it faces a series of obstacles such as extremely high cryptographic overhead. In addition, "using cryptographic incentives to encourage making better AI" can be achieved without going fully down the rabbit hole of using cryptography to completely encrypt everything.

The monopoly that large technology companies hold over data and computing power has produced a monopoly over model training, and closed-source models have become the key to large enterprises' profits. At the infrastructure level, Crypto uses economic incentives to encourage a decentralized supply of data and computing power, uses cryptographic methods to protect data privacy along the way, and on that basis supports decentralized model training, in order to achieve more transparent and decentralized AI.

5.1 Decentralized data protocol

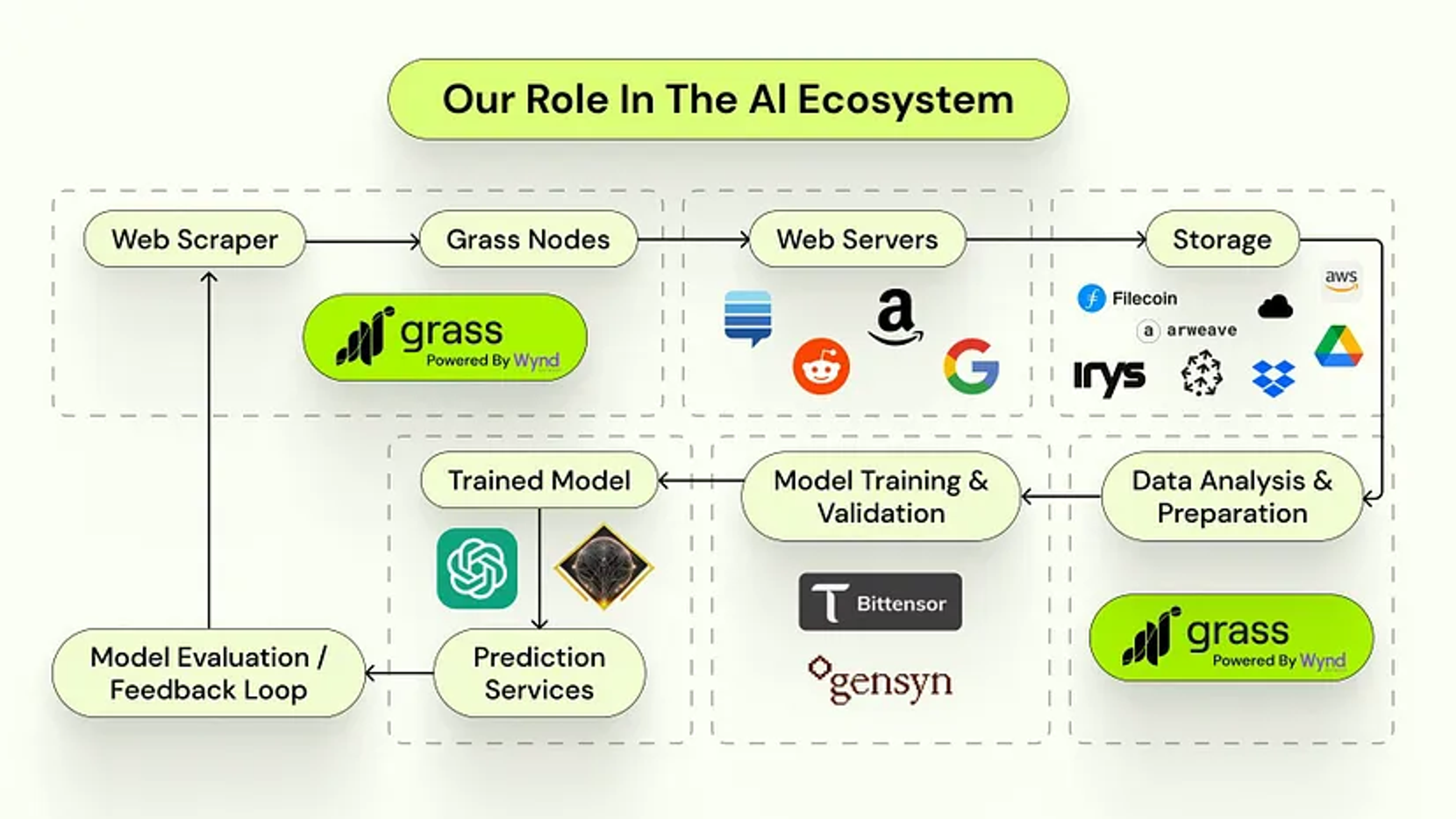

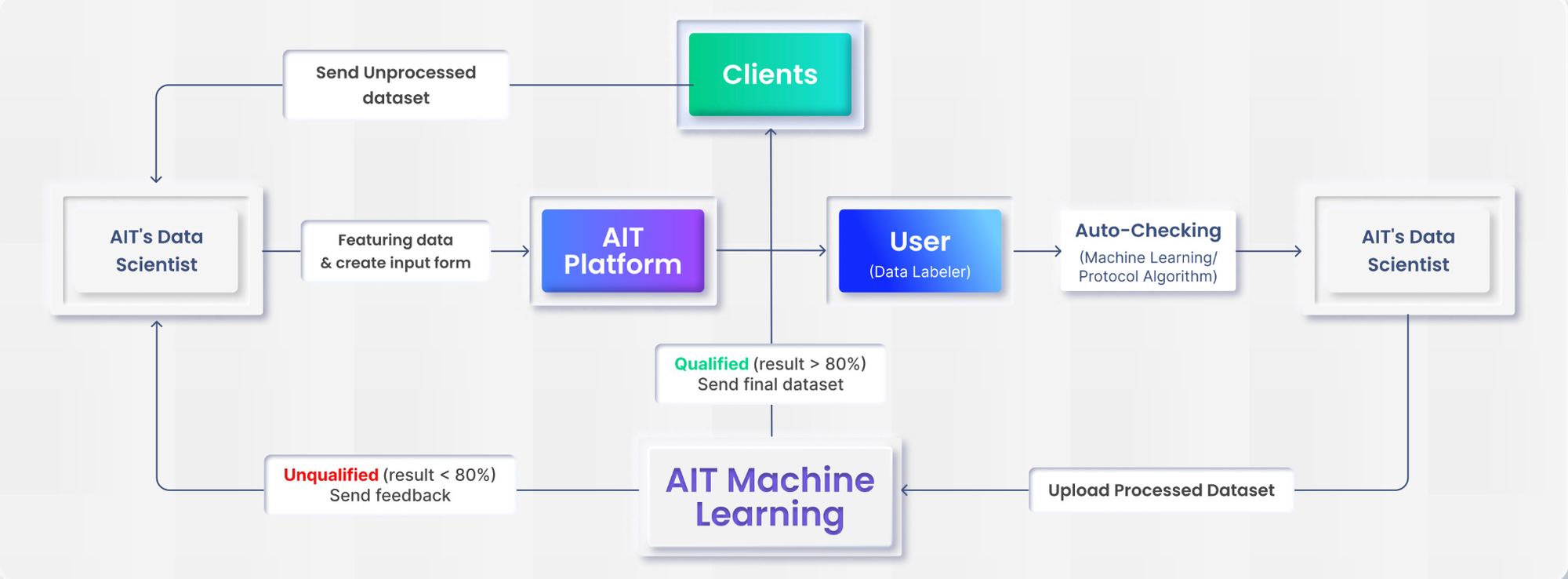

Decentralized data protocols mostly take the form of data crowdsourcing: they incentivize users to provide data sets or data services (such as data annotation) that enterprises use for model training, and they open data marketplaces to match supply and demand. Some protocols are also exploring DePIN-style incentives to obtain users' browsing data, or to use users' devices and bandwidth for web data crawling.

Ocean Protocol: Ocean Protocol establishes data ownership and tokenizes data. Users can create NFTs for data or algorithms in Ocean Protocol without writing code, and create corresponding datatokens to control access to those data NFTs. Ocean Protocol protects data privacy through Compute-to-Data (C2D): users can obtain only the output computed from the data or algorithm and cannot download it in full. Founded in 2017 as a data marketplace, Ocean Protocol is naturally well placed to ride the AI wave in this cycle.
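
The idea behind datatoken-gated Compute-to-Data can be sketched in a few lines. This is a conceptual model, not Ocean's API: the `DataAsset` class, the one-token-per-job rule, and the method names are assumptions for illustration. A real deployment would also have to vet the submitted algorithm, since nothing in this toy stops an algorithm from returning the raw rows.

```python
class DataAsset:
    """Hypothetical data asset: raw rows stay private, only computed outputs leave."""

    def __init__(self, rows):
        self._rows = list(rows)  # private data, never handed to the caller directly
        self.datatokens = {}     # holder -> datatoken balance

    def grant(self, holder, amount=1):
        """Give a holder datatokens (a stand-in for buying them on a market)."""
        self.datatokens[holder] = self.datatokens.get(holder, 0) + amount

    def compute(self, holder, algorithm):
        """Spend one datatoken to run an algorithm next to the data."""
        if self.datatokens.get(holder, 0) < 1:
            raise PermissionError(f"{holder} holds no datatoken for this asset")
        self.datatokens[holder] -= 1
        return algorithm(self._rows)


if __name__ == "__main__":
    asset = DataAsset([1, 2, 3, 4])
    asset.grant("alice")
    mean = asset.compute("alice", lambda rows: sum(rows) / len(rows))
    print("alice sees only the aggregate:", mean)
```

Moving the computation to the data, instead of the data to the buyer, is what lets the owner sell access repeatedly without giving up the data set itself.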

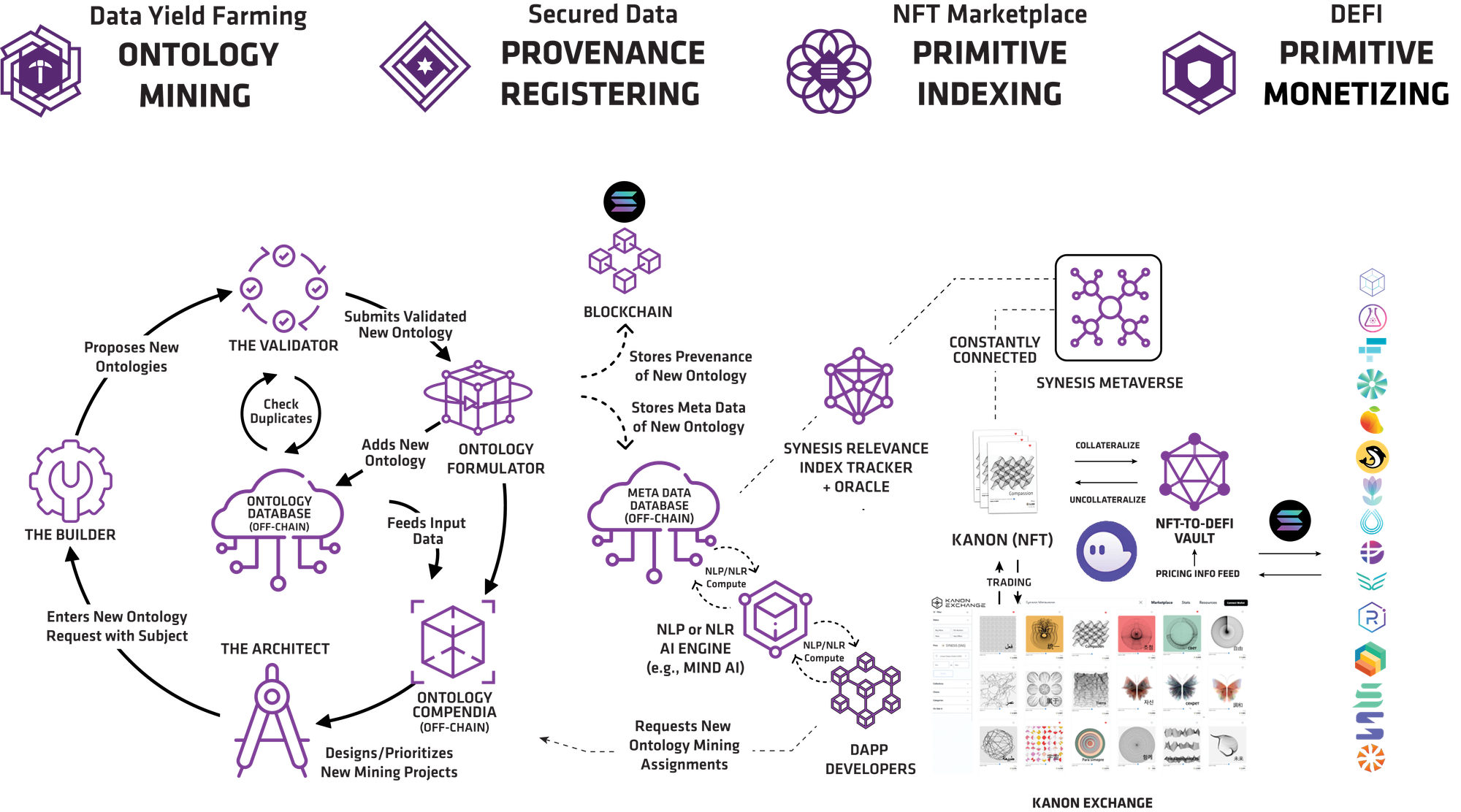

Synesis One : This project is a Train2Earn platform on Solana, where users earn $SNS rewards by providing natural language data and data annotations. Users support mining by providing data; after verification, the data is stored and recorded on-chain, and AI companies use it for training and inference. Specifically, miners are divided into three roles: Architect, Builder, and Validator. Architects create new data tasks, Builders provide corpus for those tasks, and Validators verify the data sets provided by Builders. The completed data set is stored in IPFS, the data source and IPFS address are saved on-chain, and the data is also kept in an off-chain database for use by the AI company (currently Mind AI).
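
The Architect/Builder/Validator flow can be sketched as a small data pipeline. This is an illustration only, not Synesis One's implementation: the class names, the strict-majority acceptance rule, and the use of a SHA-256 digest as a placeholder for an IPFS address are all assumptions.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Submission:
    builder: str
    text: str
    votes: Dict[str, bool] = field(default_factory=dict)  # validator -> approved?


@dataclass
class DataTask:
    architect: str  # the role that created the task
    prompt: str
    submissions: List[Submission] = field(default_factory=list)


def content_address(text: str) -> str:
    """SHA-256 hex digest, used here as a placeholder for an IPFS address."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def finalize(task: DataTask) -> List[dict]:
    """Build on-chain style records for submissions approved by a strict majority."""
    records = []
    for sub in task.submissions:
        approvals = sum(sub.votes.values())  # True counts as 1
        if sub.votes and approvals * 2 > len(sub.votes):
            records.append({"source": sub.builder, "address": content_address(sub.text)})
    return records


task = DataTask(architect="arch-1", prompt="Paraphrase: 'turn on the lights'")
good = Submission("builder-1", "switch the lights on", {"v1": True, "v2": True, "v3": False})
bad = Submission("builder-2", "asdf", {"v1": False, "v2": False, "v3": True})
task.submissions += [good, bad]
records = finalize(task)  # only builder-1's submission is recorded
```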

Grass : Known as the decentralized data layer of AI, it is essentially a decentralized web-scraping marketplace that obtains data for AI model training. Websites are an important source of AI training data, and the data of many sites, including Twitter, Google, and Reddit, is of great value, but these sites are increasingly restricting data crawling. Grass uses unused bandwidth on personal networks, scraping public websites from many different IP addresses to reduce the impact of blocking, performs preliminary data cleaning, and serves as a data source for AI model training companies and projects. Grass is currently in beta, and users can provide bandwidth to earn points toward a potential airdrop.

AIT Protocol : AIT Protocol is a decentralized data annotation protocol designed to provide developers with high-quality data sets for model training. Web3 allows a global workforce to join the network quickly and earn incentives through data annotation. AIT's data scientists pre-annotate the data, which users then process further; after inspection by the data scientists, the data that passes quality checks is provided to developers.

In addition to the data provision and data annotation protocols above, existing decentralized storage infrastructure such as Filecoin and Arweave will also help make data more decentralized.

5.2 Decentralized computing power

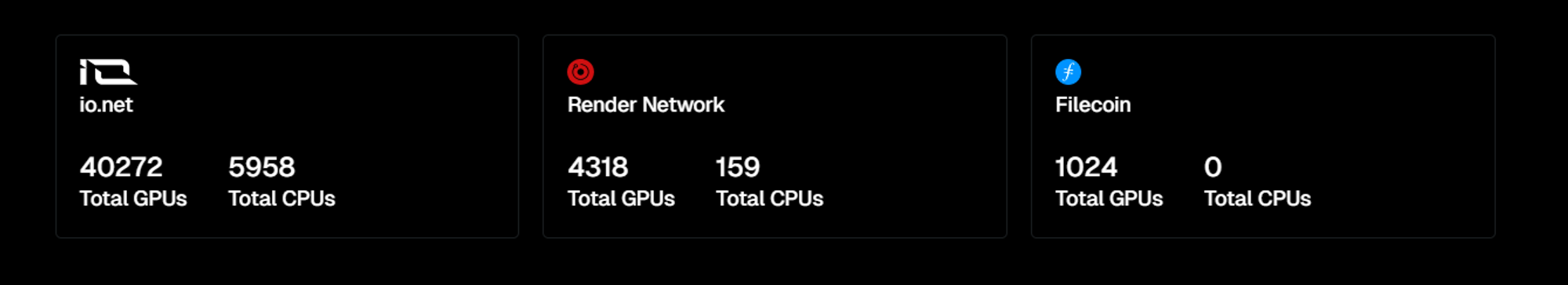

In the AI era, the importance of computing power is self-evident. Not only has Nvidia’s stock price kept climbing to new peaks, but in the Crypto world decentralized computing power is arguably the most hyped segment of the AI track: of the 11 AI projects in the top 200 by market capitalization, 5 focus on decentralized computing power (Render/Akash/AIOZ Network/Golem/Nosana), and they have achieved multiple-fold gains in the past few months. Many decentralized computing power platforms have also emerged among small-cap projects. Although they have only just started, anything related to GPUs surged quickly on the wave of the Nvidia conference.

Judging from the characteristics of the track, the basic logic of projects in this direction is highly homogeneous: token incentives encourage people or companies with idle computing resources to supply them, which significantly reduces usage costs and establishes a supply-and-demand market for computing power. Currently, the main supply of computing power comes from data centers, miners (especially after Ethereum switched to PoS), consumer devices, and cooperation with other projects. Although homogeneous, this is a track where leading projects enjoy a high moat. The main competitive advantages of a project come from its computing power resources, rental price, utilization rate, and other technical advantages. Leading projects in this track include Akash, Render, io.net and Gensyn.

According to specific business directions, projects can be roughly divided into two categories: AI model inference and AI model training. AI model training requires much more computing power and bandwidth than inference and is harder to implement in a distributed way, while the market for model inference is expanding rapidly and its foreseeable revenue will be significantly higher than that of model training. Therefore, the vast majority of projects currently focus on inference (Akash, Render, io.net), and the leader in training is Gensyn. Akash and Render were launched earlier and were not built for AI computing: Akash was originally used for general computing and Render mainly for video and image rendering, while io.net is designed specifically for AI computing. Now that AI has pushed the demand for computing power to a higher level, all of these projects have tended to develop toward AI.

The two most important competitive indicators still come from the supply side (computing power resources) and the demand side (computing power utilization rate). Akash has 282 GPUs and more than 20,000 CPUs, and has completed 160,000 leases; the utilization rate of its GPU network is 50-70%, which is a good figure in this track. io.net has 40,272 GPUs and 5,958 CPUs, plus licensed access to Render's 4,318 GPUs and 159 CPUs and Filecoin's 1,024 GPUs, including about 200 H100s and thousands of A100s. So far, 151,879 inferences have been completed. io.net is currently using very high airdrop expectations to attract computing resources, so its GPU count is growing rapidly, and its ability to attract resources needs to be re-evaluated after the token launches. Render and Gensyn have not released specific data. In addition, many projects are improving their competitiveness on the supply and demand sides through ecosystem cooperation. For example, io.net uses the computing power of Render and Filecoin to expand its resource reserves, and Render has established a compute client program (RNP-004) that allows users to indirectly access Render's computing resources through compute clients (io.net, Nosana, FedML, Beam), thereby quickly transitioning from rendering to AI computing.

In addition, verification of decentralized computing remains a problem: how can one prove that workers supplying computing resources performed their tasks correctly? Gensyn is trying to build such a verification layer, ensuring the correctness of computation through probabilistic proof-of-learning, a graph-based pinpoint protocol, and incentives, with verifiers and whistleblowers jointly checking the computation. Therefore, in addition to providing computing power for decentralized training, the verification mechanism Gensyn establishes also has unique value. Fluence, the computing protocol located on Solana, also adds verification of computing tasks: developers can verify that their applications run as expected and that computation is performed correctly by checking proofs issued by on-chain providers. However, in terms of actual demand, "feasible" still outweighs "trusted": a computing platform must first have sufficient computing power to be competitive at all. Of course, an excellent verification protocol can choose to plug into the computing power of other platforms and play a unique role as a verification layer and protocol layer.
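
To illustrate why probabilistic checking can be cheap, here is a minimal sketch of generic random spot-checking. It is not Gensyn's or Fluence's protocol; the function names and the assumption that a verifier can recompute any single step independently are hypothetical. If a worker corrupts a fraction f of the steps and a verifier recomputes k steps sampled independently at random, the chance of catching at least one bad step is 1 - (1 - f)^k.

```python
import math
import random
from typing import Callable, Sequence


def detection_probability(cheat_fraction: float, samples: int) -> float:
    """Chance that at least one of `samples` independent checks hits a bad step."""
    return 1.0 - (1.0 - cheat_fraction) ** samples


def samples_needed(cheat_fraction: float, target: float) -> int:
    """Smallest number of checks whose detection probability reaches `target`."""
    if not (0.0 < cheat_fraction < 1.0 and 0.0 < target < 1.0):
        raise ValueError("cheat_fraction and target must be strictly between 0 and 1")
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - cheat_fraction))


def spot_check(
    inputs: Sequence[int],
    claimed: Sequence[int],
    recompute: Callable[[int], int],
    samples: int,
    rng: random.Random,
) -> bool:
    """Recompute randomly chosen steps (with replacement); True if all match."""
    indices = [rng.randrange(len(inputs)) for _ in range(samples)]
    return all(recompute(inputs[i]) == claimed[i] for i in indices)


def square(x: int) -> int:
    return x * x


inputs = list(range(100))
honest = [square(x) for x in inputs]
lazy = [-1] * len(inputs)  # a worker that skipped the work entirely

rng = random.Random(0)
honest_ok = spot_check(inputs, honest, square, 10, rng)  # True
lazy_ok = spot_check(inputs, lazy, square, 10, rng)      # False
checks_for_99 = samples_needed(0.1, 0.99)                # 44
```

With 10% of steps corrupted, 44 checks out of an arbitrarily long job already give a 99% detection rate, which is why incentive schemes can pair sampling with penalties rather than recomputing everything.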

5.3 Decentralized model

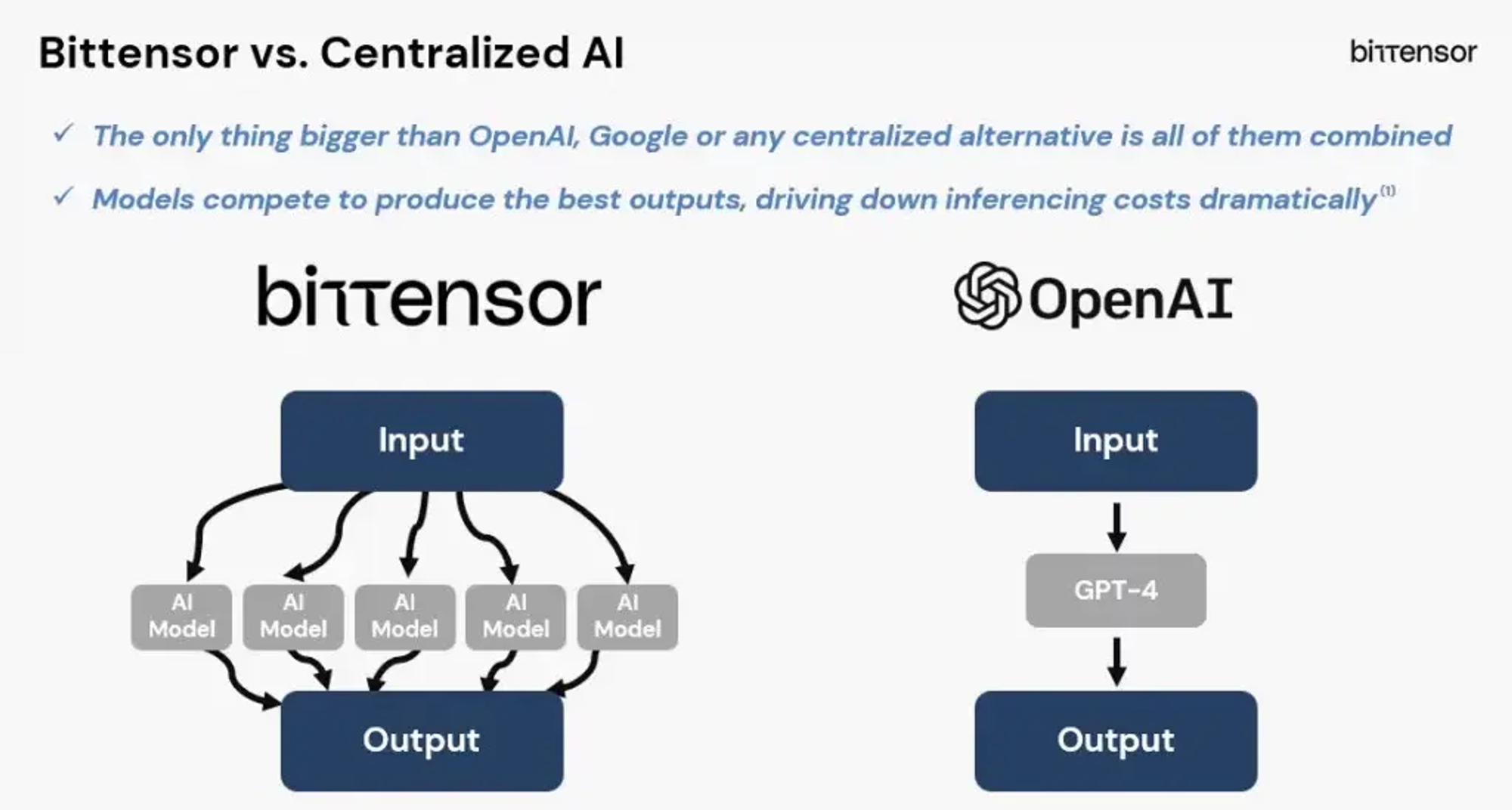

We are still very far from the ultimate scenario described by Vitalik (shown in the figure below). We are currently unable to create a trustworthy black-box AI through blockchain and cryptography to solve the problem of adversarial machine learning, and encrypting the entire AI pipeline, from training data to query output, is extremely expensive. However, some projects are already trying to create better AI models through incentive mechanisms. They first break down the isolation between different models and create a pattern of mutual learning, collaboration, and healthy competition among models. Bittensor is the most representative of these projects.

Bittensor : Bittensor promotes the combination of different AI models, but it is worth noting that Bittensor itself does not train models and mainly provides AI inference services. Bittensor's 32 subnets focus on different service directions, such as data scraping, text generation, and Text2Image, and AI models from different directions can collaborate with each other when completing a task. The incentive mechanism promotes competition both between subnets and within subnets. Currently, rewards are issued at a rate of 1 TAO per block, for a total of approximately 7,200 TAO per day. The 64 validators in SN0 (the root network) determine how these rewards are distributed among subnets according to subnet performance, and each subnet's validators determine the distribution among miners by evaluating the miners' work. Better-performing services and models thus receive more incentives, which improves the overall inference quality of the system.
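
The emission figures above can be checked with a little arithmetic, and the two-level distribution can be sketched as a proportional split. This is a simplified illustration, not Bittensor's actual consensus: the subnet names, weights, and miner scores are invented, and the sketch ignores any share that goes to validators or subnet owners.

```python
from typing import Dict

SECONDS_PER_DAY = 24 * 60 * 60
TAO_PER_BLOCK = 1.0      # from the text
DAILY_EMISSION = 7200.0  # from the text

# 7,200 blocks per day implies one block roughly every 12 seconds.
implied_block_time = SECONDS_PER_DAY * TAO_PER_BLOCK / DAILY_EMISSION  # 12.0


def split(total: float, weights: Dict[str, float]) -> Dict[str, float]:
    """Divide `total` among keys in proportion to their non-negative weights."""
    weight_sum = sum(weights.values())
    if weight_sum <= 0:
        raise ValueError("weights must sum to a positive value")
    return {name: total * w / weight_sum for name, w in weights.items()}


# Level 1: root-network validators weight the subnets (hypothetical weights).
subnet_weights = {"text-generation": 5.0, "data-scraping": 3.0, "text2image": 2.0}
subnet_emission = split(DAILY_EMISSION, subnet_weights)

# Level 2: a subnet's validators score its miners (hypothetical scores).
miner_scores = {"miner-a": 3.0, "miner-b": 1.0}
miner_emission = split(subnet_emission["text2image"], miner_scores)
# text2image receives 1440.0 TAO/day: miner-a 1080.0, miner-b 360.0
```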

6 Conclusion: MEME hype or technological revolution?

From the skyrocketing prices of ARKM and WLD driven by news around Sam Altman, to the NVIDIA conference lifting a series of participating projects, many people are adjusting their investment philosophy on the AI track. Is the AI track MEME hype or a technological revolution?

Except for a few celebrity topics (such as ARKM and WLD), the AI track as a whole is more like "MEME dominated by technical narratives."

On the one hand, the overall hype of the Crypto AI track is closely linked to the progress of Web2 AI, and external hype led by OpenAI will be the trigger for the Crypto AI track. On the other hand, the story of the AI track is still dominated by technical narratives. Of course, what we emphasize here is "technical narrative" rather than "technology", which means that choosing the right subdivision of the AI track and paying attention to project fundamentals remain important. We need to find narrative directions with hype value, and we also need to find projects with mid- to long-term competitiveness and moats.

From the four possible combinations proposed by Vitalik, we can see the trade-off between narrative appeal and feasibility of implementation. In the first and second categories, represented by AI applications, we have seen many GPT wrappers: products launch quickly, but business homogeneity is also high, so first-mover advantage, ecosystem, number of users, and product revenue become the stories to tell in homogeneous competition. The third and fourth categories represent the grand narratives of combining AI and Crypto, such as on-chain Agent collaboration networks, zkML, and the decentralized reshaping of AI. They are all in their early stages, and projects with technological innovation will quickly attract funds, even if they are only very early implementations.