Artificial intelligence (AI) may possess one of the most uniquely human abilities, Theory of Mind (ToM), a new study finds.

Specifically, on tasks that track human mental states, such as detecting false beliefs, understanding indirect requests, and recognizing faux pas, GPT (GPT-4 and GPT-3.5) and Llama 2 performed close to, or even better than, humans in specific situations.

These findings not only demonstrate that large language models (LLMs) exhibit behavior consistent with the output of human psychological reasoning, but also highlight the importance of systematic testing to ensure non-superficial comparisons between human and artificial intelligence.

The research paper, titled "Testing theory of mind in large language models and humans", has been published in Nature Human Behaviour, a Nature Portfolio journal.

GPT is better at "misdirection", Llama 2 is better at "politeness"

Theory of mind is a psychological term for the ability to understand one's own mental states and those of other people, including emotions, beliefs, intentions, desires, and pretense. Autism has often been linked to difficulties with this ability.

Theory of mind was once considered unique to humans. Many primates, such as chimpanzees, as well as elephants, dolphins, horses, cats, and dogs, are now thought by some researchers to have simple theory of mind abilities, although this remains controversial.

The recent rapid development of large language models (LLMs) such as ChatGPT has sparked a heated debate over whether these models behave in a manner consistent with human behavior in theory of mind tasks.

In this work, a research team from the University Medical Center Hamburg-Eppendorf in Germany and their collaborators repeatedly tested two families of LLMs (GPT and Llama 2) on different theory of mind abilities and compared their performance with that of 1,907 human participants.

They found that the GPT models' performance in identifying indirect requests, false beliefs, and misdirection reached or even exceeded the human average, while Llama 2 performed worse than humans on these tasks.

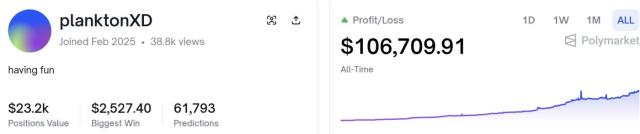

Figure | Performance of humans (purple), GPT-4 (dark blue), GPT-3.5 (light blue) and LLaMA2-70B (green) on theory of mind tests.

Llama 2 was better than humans at identifying faux pas, but GPT performed poorly.

The research team suggests that Llama 2 performed well because its answers were less biased, rather than because it was truly sensitive to faux pas, and that GPT performed poorly because it was hyperconservative about committing to conclusions, rather than because of reasoning errors.

Has AI's theory of mind reached human levels?

In the discussion section of the paper, the research team analyzes the GPT models' performance on the faux pas task in depth. The experimental results support the hypothesis that the GPT models are overly conservative in identifying faux pas, rather than poor at reasoning. When the question is framed in terms of likelihood, the GPT models are able to correctly identify and select the most likely explanation.

Follow-up experiments also showed that the apparent superiority of LLaMA2-70B may stem from a bias toward attributing ignorance rather than from true reasoning ability.

The team also points to directions for future research, including studying how GPT models perform in real-time human-computer interaction and how these models' decision-making behavior affects human social cognition.

They caution that although LLMs perform comparably to humans on theory of mind tasks, this does not mean that they have human-like abilities or that they have mastered theory of mind.

Nonetheless, they say these results are an important foundation for future research, and they suggest further investigation into how LLM performance on mental inferences might affect individuals’ cognition in human-machine interactions.

Paper link

https://www.nature.com/articles/s41562-024-01882-z

This article comes from the WeChat public account "Academic Headlines" (ID: SciTouTiao), author: Academic Headlines, published by 36Kr with authorization.