Phone calls are an API to the world — and AI takes this to the next level!

This article examines a16z's views on AI voice assistants: the opportunities they open up, their technical architecture, where they are heading, and their potential in B2B and B2C applications.

01. Opportunities of AI voice assistants

Now is the time to reinvent the phone call. Thanks to the latest AI technology, people no longer need to make every call themselves. They can choose to get on the phone only when the call is truly valuable to them.

For businesses, this means:

1. Save the time and labor costs required for manual calls;

2. Free up resources that can be reallocated to increase revenue;

3. Reduce risk by providing a more compliant and consistent customer experience.

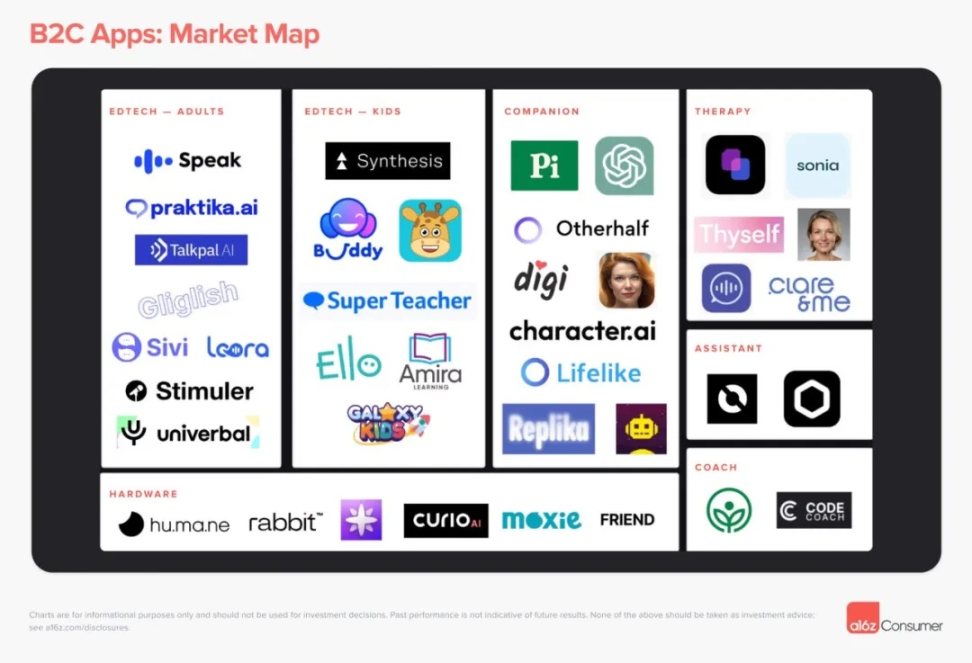

For consumers, voice assistants can provide services comparable to those of a human provider, without the need to pay for, or be matched with, an actual person. Today this includes therapists, coaches, and companions; in the future it may expand to a much wider range of voice-based experiences. As with most consumer software, the ultimate "winner" is hard to predict.

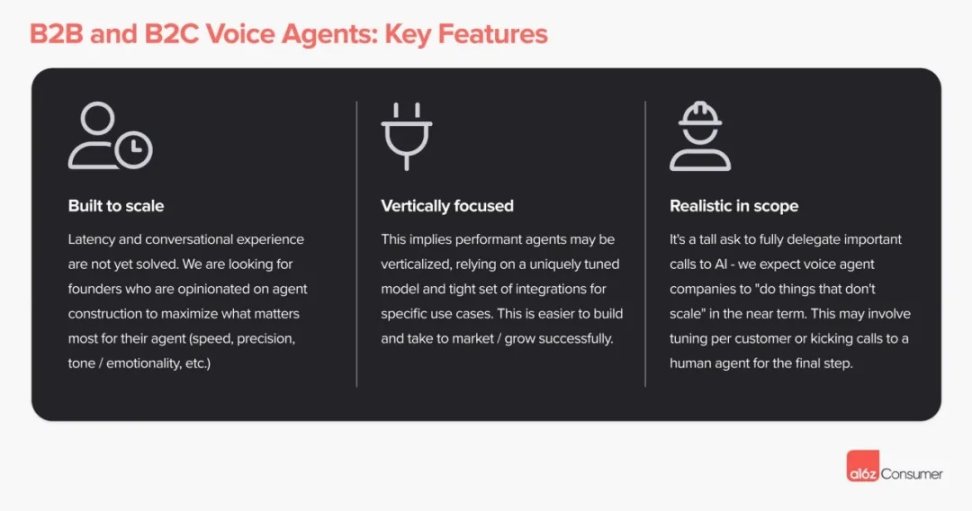

There are huge opportunities at every layer of the voice assistant stack, whether in building infrastructure, creating consumer interfaces, or providing enterprise-grade agents. Across both consumer (B2C) and enterprise (B2B) voice assistants, we look for the following characteristics:

1. Focus on building efficiency

We are looking for founders who have a clear vision for building voice assistants and who focus on optimizing the key performance indicators of a voice assistant (speed, accuracy, tone and emotion, and so on) to provide a seamless user experience.

2. Vertical specialization

We believe that voice assistants that excel are likely to focus on specific verticals or sectors, leveraging models tailored for specific use cases and tightly integrated tool sets. This approach is easier to implement and can lead to faster market success.

3. Pragmatic scope

Relying entirely on AI to handle all important calls is challenging — we expect that in the short term, voice assistant companies may adopt some “non-scalable” strategies. This may include personalizing the tuning for each customer or transferring the call to a human agent when necessary.

02. Technical architecture

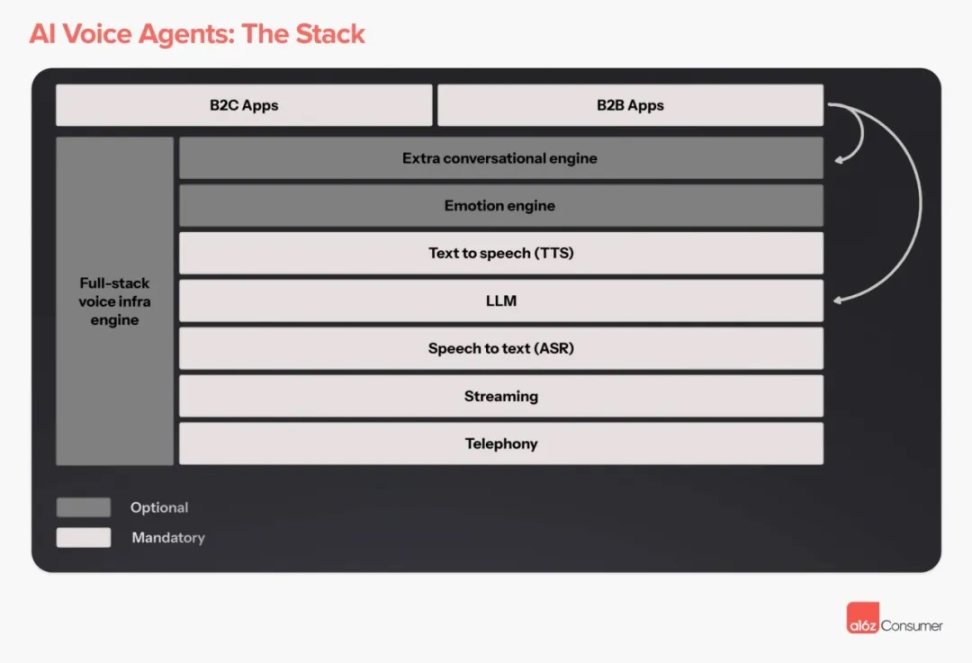

1. Architecture of a voice assistant

A new generation of multimodal models, such as GPT-4o, may change the existing architecture by handling several layers of the stack at once with a single model. This would not only reduce latency and cost but also support more natural conversational interfaces, since many agents struggle to achieve truly human-like conversational quality with a traditional stacked architecture.

The effective operation of a voice assistant requires several key steps: first receiving human speech (automatic speech recognition, ASR), then using a large language model (LLM) to process the input and generate output, and finally responding to humans in the form of speech (text to speech, TTS).
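
The three-step flow described above (ASR, then LLM, then TTS) can be sketched as a simple pipeline. This is only an illustration under stated assumptions: `VoiceAgent`, `handle_turn`, and the three `fake_*` stages are hypothetical names invented here, and the placeholder stages stand in for real speech and language services, which the article does not specify.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoiceAgent:
    """One conversational turn: speech in -> text -> reply text -> speech out."""
    asr: Callable[[bytes], str]   # automatic speech recognition
    llm: Callable[[str], str]     # language model that produces the reply text
    tts: Callable[[str], bytes]   # text to speech

    def handle_turn(self, caller_audio: bytes) -> bytes:
        transcript = self.asr(caller_audio)   # step 1: receive human speech
        reply_text = self.llm(transcript)     # step 2: process input, generate output
        return self.tts(reply_text)           # step 3: respond as speech


# Placeholder stages (assumptions) so the sketch runs without external services.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(transcript: str) -> str:
    return f"You said: {transcript}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


agent = VoiceAgent(asr=fake_asr, llm=fake_llm, tts=fake_tts)
reply_audio = agent.handle_turn(b"I'd like to book an appointment")
print(reply_audio.decode("utf-8"))
```

Keeping each stage behind a plain callable makes it possible to swap one layer without touching the others. A single multimodal model would, in this picture, replace all three callables at once.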

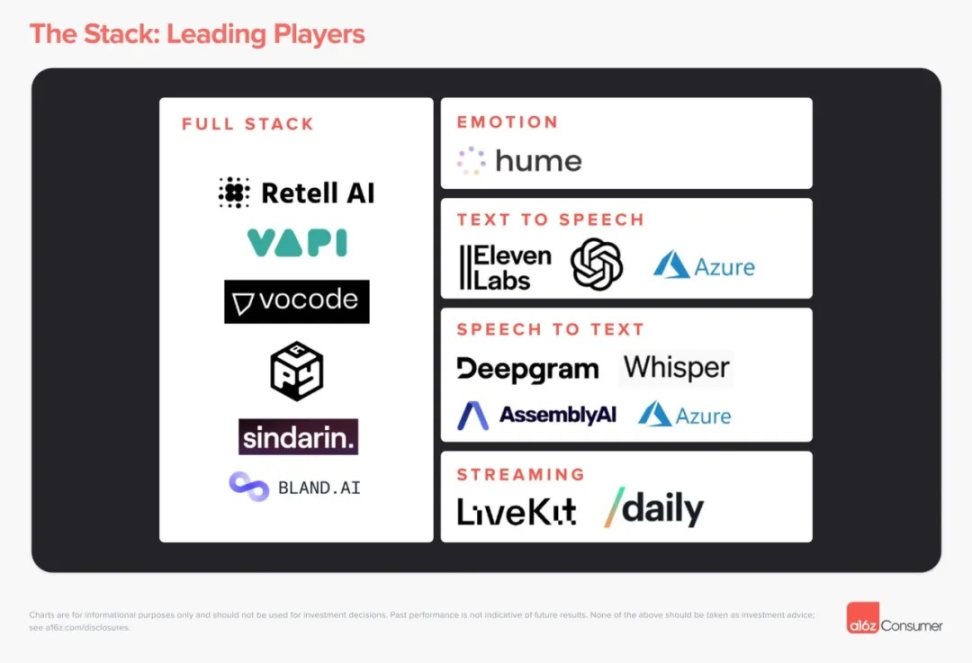

In some companies or approaches, a single LLM or a series of LLMs handles both conversation flow and sentiment analysis. In other cases, there are specialized engines for adding emotional expression, managing interruptions, and so on. Voice vendors that offer "full stack" services provide all of the above capabilities in one place.

Consumer-facing (B2C) and enterprise-facing (B2B) applications sit on top of this architecture. Even when they use third-party service providers, these applications often integrate custom LLMs, which frequently serve as the conversation engine as well.

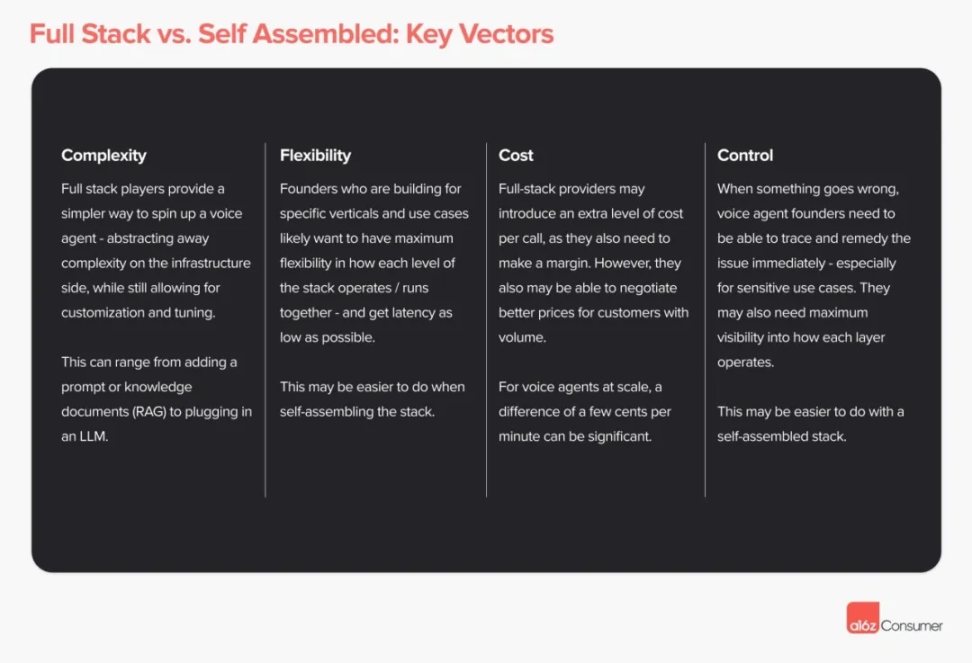

2. Full-stack solutions and custom assembly

When creating a voice assistant, developers can choose to launch their agent on a full-stack platform (such as Retell, Vapi, Bland, etc.) or assemble the required technology stack themselves. There are several key factors to consider when making this decision:

1. Complexity

Full-stack solutions offer a simplified approach to launching a voice assistant: they hide the complexity of the infrastructure while retaining room for customization and tuning. This covers everything from adding prompts or knowledge documents (for example, through retrieval-augmented generation, RAG) to integrating large language models (LLMs).

2. Flexibility

Founders building products for specific industries or use cases may want maximum flexibility at every level of the stack with as little latency as possible. This flexibility may be easier to achieve when they assemble the technology stack themselves.

3. Cost

Full-stack service providers may add cost to each call because they need to make a profit. However, they may also be able to offer more favorable pricing to high-volume customers. For voice agents deployed at scale, a per-minute cost difference of even a few cents can become an important consideration.

4. Control

When problems arise, voice agent founders need to be able to locate and resolve them quickly—especially for use cases where accuracy is paramount. They may also want deep knowledge and control over the technology at every layer. Using a self-assembled technology stack may make these needs easier to meet.
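
To make the cost factor above concrete, here is a small arithmetic sketch of how a per-minute price gap adds up at scale. The function name `monthly_cost_difference` and all of the numbers are hypothetical, chosen only for illustration; they are not pricing from any real provider.

```python
def monthly_cost_difference(calls_per_month: int,
                            avg_minutes_per_call: float,
                            per_minute_difference: float) -> float:
    """Extra monthly spend caused by a per-minute price difference."""
    return calls_per_month * avg_minutes_per_call * per_minute_difference


# Hypothetical workload: 100,000 calls a month, 3 minutes each,
# with the two options priced 2 cents per minute apart.
extra = monthly_cost_difference(100_000, 3.0, 0.02)
print(f"${extra:,.2f} per month")
```

Under these assumed numbers, a two-cent gap comes to roughly $6,000 a month, which is why the per-minute price matters more as call volume grows.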

Below is a look at some of the current leading players at each technology level. This list is not an exhaustive market map, but rather represents some of the names most often mentioned by voice assistant founders.

We expect that with the rise of multimodal models, the entire technology stack will undergo major changes.

03. B2B Voice Assistant

1. Evolution

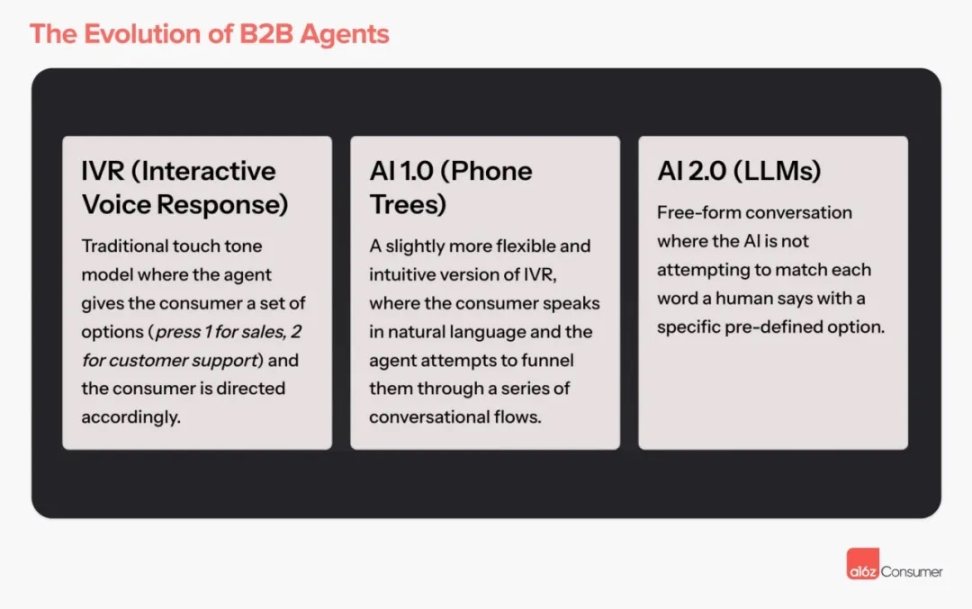

We’ve seen three major waves of technology in the B2B voice assistant space:

IVR (Interactive Voice Response)

In traditional IVR systems, consumers interact with the system by pressing buttons. For example, they may hear the prompt: "Press 1 for sales, press 2 for customer support", and then select the corresponding service according to the voice prompt.

AI 1.0 (Phone Tree)

AI 1.0 is an advance over IVR that lets consumers communicate with the system in natural language. The agent tries to understand the consumer's needs through a series of conversational flows and guides them to the correct service option.

AI 2.0 (LLM)

AI 2.0 takes this concept a step further, allowing for more free-form conversations. In this model, AI is not forced to match every consumer's words with predefined options. Instead, it aims to understand the consumer's overall intent and provide a more natural, more human interaction experience.
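
The difference between the first two waves can be sketched in a few lines. This is a simplified illustration, not how any particular product works: the menu, the keyword lists, and the function names `route_ivr` and `route_phone_tree` are all assumptions made for the example.

```python
import re
from typing import Optional

# Wave 1 (IVR): the caller presses a key and the system looks it up.
IVR_MENU = {"1": "sales", "2": "customer_support"}


def route_ivr(key_pressed: str) -> Optional[str]:
    return IVR_MENU.get(key_pressed)


# Wave 2 (AI 1.0, phone tree): the caller speaks naturally, but the
# utterance still has to match one of a fixed set of predefined options.
PHONE_TREE_KEYWORDS = {
    "sales": ("buy", "price", "quote"),
    "customer_support": ("broken", "help", "refund"),
}


def route_phone_tree(utterance: str) -> Optional[str]:
    words = re.findall(r"[a-z']+", utterance.lower())
    for destination, keywords in PHONE_TREE_KEYWORDS.items():
        if any(keyword in words for keyword in keywords):
            return destination
    return None  # nothing predefined matched, so the caller is stuck


print(route_ivr("1"))
print(route_phone_tree("I need a quote for a new car"))
print(route_phone_tree("My order never arrived"))
```

The last call returns `None` because the request does not contain any expected keyword. That gap is what the AI 2.0 approach targets: instead of matching words against options, the whole utterance is handed to an LLM that tries to work out the caller's overall intent.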

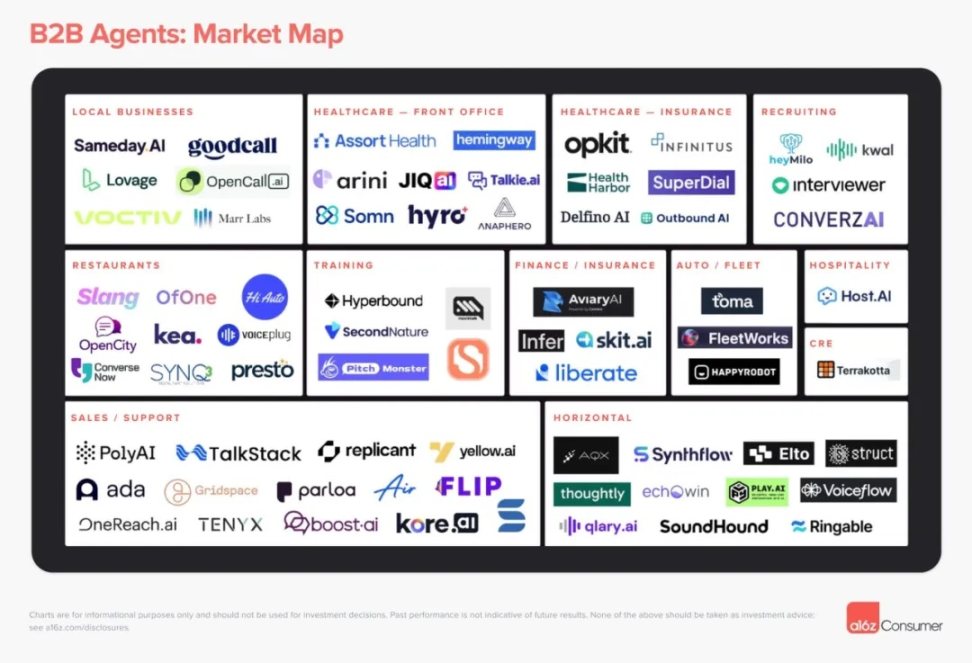

Many voice assistant companies have taken a vertical-specific approach, targeting a specific industry (e.g., car service) or a specific type of task (e.g., appointment scheduling) for the following reasons:

- Difficulty of execution

When entrusting a call to an AI, the quality bar for the conversation flow is extremely high — it can quickly become complex and specific. Companies that design for the “edge cases” of these verticals are more likely to succeed (e.g., unique terminology that a general model might not be able to understand).

- Regulations and licenses

Some voice assistant companies face unique regulatory restrictions and certification requirements. The healthcare industry (e.g. HIPAA compliance) is a prime example, though this also occurs in categories like sales, which have AI telemarketing regulations at the state level.

- Integrations

In some categories, extensive or specialized integrations may be required to provide a good user experience (whether for businesses or consumers). These integrations may not be worth building unless they are needed to meet the needs of a specific use case.

- A wedge into other software

Voice is a natural entry point into core customer actions such as bookings, renewals, and quotes. In some cases, this gives these businesses an opening to expand into a broader vertical SaaS platform, especially when the customer base still operates primarily offline.

2. Overall view

We are in the transition from AI voice 1.0 (phone trees) to AI voice 2.0 (LLM-based). The 2.0 companies have emerged over roughly the past six months. While 1.0 companies may currently have the upper hand in accuracy, we expect the 2.0 approach to win on both scalability and accuracy in the long run.

It’s unlikely that there will be one universal model or platform for enterprise voice assistants, as there are some key differences between different verticals:

- Call type, tone, and structure;

- Integration and processes;

- Go-to-market strategy (GTM) and “killer features”.

This may herald the rise of vertical voice assistants: specialized agents whose user interface (UI) is heavily tailored to their field. Building them requires a founding team with deep domain expertise or a strong interest in a specific field. Labor is a major cost center for many companies, so the total addressable market (TAM) is huge for companies that can "get it right."

Near-term opportunities are likely to emerge in industries with high labor dependency, severe labor shortages, and low call complexity. As agents become more skilled, they will be able to handle more complex calls.

3. The opportunities we see

1. LLM-based, but not necessarily fully automated from the start

The “strong form” of an AI voice assistant will be a conversation driven entirely by a large language model (LLM), rather than the traditional interactive voice response (IVR) or phone tree approach. However, given that LLMs are not always 100% reliable, “human intervention” may be temporarily required for more sensitive or high-value transactions. This highlights the importance of vertical-specific workflows, as they can maximize the likelihood of success while minimizing the occurrence of human intervention and edge cases.

2. A combination of custom-tuned models and prompted LLMs

B2B voice assistants need to handle domain-specific (or vertical-specific) conversations, and a generic LLM may not be adequate for these needs. Many companies are tuning models based on data for each customer (hundreds or thousands of data points), and potentially extrapolating that back to a company-wide base model. Custom tuning may even go further and be specific to enterprise customers. Note: Some companies may tune a “generic” model (for customers to use) for their specific use case, and then tailor prompts to each customer.

3. Technical team with domain expertise

Given the complexity of B2B voice assistants, some background in AI is helpful (though not required) for building and scaling a high-quality solution. It is equally important to understand how to package and market the product for a specific vertical, which requires domain expertise or interest. You don't need a PhD in AI to build an enterprise-grade voice assistant.

4. Deep insights into integration and ecosystem

Similar to the above, buyers in each vertical often want to see a few specific features or integrations before making a purchase. In fact, this may be the point at which a product moves from “useful” to “amazing” in their evaluation. This is why it makes sense to start building a product in a vertical.

5. Either targeting the "enterprise" market or showing a strong product-led growth (PLG) motion

In verticals where revenue is concentrated among the top companies or vendors, voice assistant companies may start in the enterprise market and eventually move down to SMBs with self-service products. SMB customers are eager for solutions and willing to try various options, but they may not provide data of sufficient scale or quality for startups to tune models to an enterprise-grade level.
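
The "human intervention" fallback described in the first opportunity above can be sketched as a simple escalation rule. Everything here is a hypothetical assumption for illustration: the `CallState` fields, the list of sensitive intents, and the thresholds would all be specific to a given vertical and customer.

```python
from dataclasses import dataclass


@dataclass
class CallState:
    intent: str               # what the agent believes the caller wants
    confidence: float         # agent's confidence in that intent, 0.0 to 1.0
    transaction_value: float  # value at stake in this call


# Hypothetical, vertical-specific settings.
SENSITIVE_INTENTS = {"cancel_policy", "dispute_charge"}
MIN_CONFIDENCE = 0.8
MAX_AUTOMATED_VALUE = 500.0


def needs_human(state: CallState) -> bool:
    """Hand off when the call is sensitive, uncertain, or high-value."""
    return (
        state.intent in SENSITIVE_INTENTS
        or state.confidence < MIN_CONFIDENCE
        or state.transaction_value > MAX_AUTOMATED_VALUE
    )


routine = CallState("book_appointment", confidence=0.95, transaction_value=80.0)
risky = CallState("dispute_charge", confidence=0.95, transaction_value=80.0)
print(needs_human(routine), needs_human(risky))
```

A rule like this also shows why vertical-specific workflows matter: the narrower the set of intents an agent must handle, the less often a call falls outside the automated path.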

04. B2C Voice Assistant

1. Evolution

So far, the AI voice assistants that dominate the consumer market come from large companies, such as OpenAI's ChatGPT Voice and Inflection's Pi app. There are several reasons why consumer voice assistants have developed more slowly:

- Large companies already have a broad consumer base and best-in-class models (in terms of accuracy, latency, etc.). Voice services are not easy to deliver at scale, especially given the recent launch of GPT-4o.

- Whereas B2B voice assistants “insert” AI into existing processes, B2C voice assistants require users to adopt new behaviors, which may be slower or require a more engaging product.

- Consumers’ perception of voice AI has been negative due to past experiences with products like Siri, so they may be reluctant to try new applications.

- Current products are already able to meet the basic use cases of voice AI - such as coaching, companionship, etc. B2C voice startups are just beginning to solve specific use cases or create experiences that ChatGPT, Pi, etc. cannot handle.

2. Overall view

In the B2B space, voice assistants primarily accomplish specific tasks by replacing existing phone calls. For consumer-facing agents, users must opt in to continue engaging, which is more challenging because voice interactions are not always convenient. This means the product needs to have a higher level of engagement.

The first and most obvious application for consumer voice assistants is to replace expensive or hard-to-get human services with AI. This includes therapy, coaching, mentoring, and more — anything that can be conversation-based and done virtually.

However, we believe the true potential of B2C voice assistants may not yet be fully realized. We are looking for products that can harness the power of voice to create new types of “conversations” that didn’t exist before. This could reinvent existing services or create entirely new ones.

For products that deliver a superior user experience, voice assistants offer an opportunity to engage with consumers at an unprecedented level, truly mimicking human connection. This could take the form of agents that are products in themselves, or of voice as one mode within a broader product.

3. The opportunities we see

1. Clearly explain why voice is necessary

We look forward to seeing products and founders who can clearly articulate how voice brings unique value to a product — not just using voice for the sake of using it. In many cases, voice interfaces are actually disadvantageous compared to text interfaces because they are less convenient to use and less efficient in getting information out.

2. Clearly explain why real-time voice is necessary

While voice has its challenges, real-time voice is even more challenging to use than asynchronous voice messaging. We hope to see founders who understand why their products need to be built around real-time conversation, for example to provide human-like companionship or a practice environment.

3. Not a skeuomorphic copy of the pre-AI "product"

We suspect that strong-form products will not simply take an existing human-to-human conversation and replicate it with an AI voice assistant in place of the human service provider. For one thing, meeting that standard is difficult; more importantly, there is an opportunity to leverage AI to deliver the same value more efficiently and more pleasantly.

4. Verticalization to the point where model quality does not determine the winner

Leading general-purpose consumer AI products (such as ChatGPT, Pi, Claude) have high-quality voice models. They are able to effectively participate in many types of conversations and interactions. And, because they have their own models and stacks, they are likely to win in terms of latency and dialogue flow in the short term.

We expect to see startups succeed by customizing or tuning for specific types of conversations, or building UIs that provide more context and value to the voice assistant experience — for example, tracking progress over time, or guiding the conversation/experience in an opinionated way.